SMP architecture

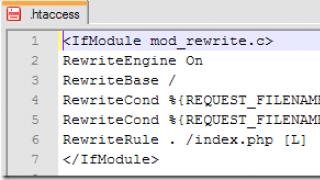

SMP (symmetric multiprocessing) is an architecture whose main feature is a common physical memory shared by all processors.

Memory serves as the medium for passing messages between processors. All computing devices have equal rights when accessing it and use the same addressing for all memory cells, which is why the SMP architecture is called symmetric.

The main advantages of SMP systems:

Simplicity and versatility for programming. The SMP architecture does not impose restrictions on the programming model used when creating an application. Usually the parallel-branches model is used, in which all processors work completely independently of each other, but models that use interprocessor communication can also be implemented. Shared memory speeds up such exchange, and the user has access to the entire memory at once. Comparatively effective automatic parallelization tools exist for SMP systems.

Ease of operation. SMP systems typically use air cooling, which simplifies maintenance.

Relatively low price.

Disadvantages:

Shared-memory systems built on a system bus scale poorly.

This important drawback of SMP systems prevents them from being considered truly promising. The poor scalability arises because the bus can process only one transaction at a time, which leads to conflicts when several processors access the same areas of shared physical memory simultaneously.

MPP architecture

MPP (massively parallel processing) is a massively parallel architecture. Its main feature is that the memory is physically distributed. The system is built from individual modules, each containing a processor, a local bank of RAM, two communication processors (routers) or a network adapter, and sometimes hard drives and/or other input/output devices.

Main advantage:

Systems with distributed memory scale well: unlike in SMP systems, each processor has access only to its local memory, so there is no need for cycle-by-cycle synchronization of processors. Almost all performance records today are set on machines of this architecture, consisting of several thousand processors (ASCI Red, ASCI Blue Pacific).

Disadvantages:

The absence of shared memory significantly reduces the speed of interprocessor exchange, since there is no common medium for storing data intended for exchange between processors. A special programming technique is required to implement interprocessor messaging.

Each processor can use only the limited capacity of its local memory bank.

Because of these architectural shortcomings, considerable effort is required to make full use of system resources. This is what determines the high price of software for massively parallel systems with distributed memory.

PVP architecture

PVP (Parallel Vector Processing) is a parallel architecture with vector processors.

The main feature of PVP systems is the presence of special vector-pipelined processors whose instruction sets include commands for uniform processing of vectors of independent data, executed efficiently on pipelined functional units. Typically several such processors (1-16) work simultaneously on shared memory (as in SMP) within a multiprocessor configuration. Several such nodes can be combined using a switch (as in MPP). Because data transfer in vector format is much faster than in scalar format (the maximum speed can reach 64 Gb/s, two orders of magnitude faster than in scalar machines), the problem of interaction between data streams during parallelization becomes insignificant, and what parallelizes poorly on scalar machines parallelizes well on vector machines. PVP systems can therefore serve as general-purpose machines. However, because vector processors are very expensive, these machines are not widely affordable.

Cluster architecture

A cluster is two or more computers (often called nodes) joined together by network technologies based on a bus architecture or a switch and presented to users as a single information and computing resource. Servers, workstations, and even ordinary personal computers can serve as cluster nodes. The availability benefits of clustering become apparent when a node fails: another node in the cluster takes over the load of the failed node, and users do not notice any interruption in access.

Amdahl's law (sometimes also called the Amdahl-Ware law) illustrates the limit on the growth of computing system performance as the number of computing units increases. Gene Amdahl formulated the law in 1967, having discovered a limitation on performance growth under parallelization that is simple in essence but insurmountable: "When a task is divided into several parts, its total execution time on a parallel system cannot be less than the execution time of the slowest fragment." According to this law, the speedup of a program obtained by parallelizing its instructions across a set of computing units is limited by the time required to execute its sequential instructions.

Suppose you need to solve some computational problem. Suppose that its algorithm is such that a fraction $\alpha$ of the total volume of calculations can be obtained only by sequential calculations, and, accordingly, the fraction $1 - \alpha$ can be perfectly parallelized (that is, its computation time is inversely proportional to the number of nodes involved). Then the speedup obtainable on a computing system of $p$ processors, compared with a uniprocessor solution, will not exceed the value

$$S_p = \frac{1}{\alpha + \frac{1 - \alpha}{p}}$$

Illustration

The table shows how many times faster a program with sequential fraction α runs when p processors are used. ... So, if half of the code is sequential, the total gain will never exceed two.
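The bound can be tabulated with a few lines of Python. This is only a sketch: it uses the standard form of Amdahl's law, speedup = 1 / (α + (1 - α) / p), where α is the sequential fraction and p the number of processors. The function name `amdahl_speedup` and the sample values of α and p are chosen here for illustration and are not taken from the original table.

```python
def amdahl_speedup(alpha: float, p: int) -> float:
    """Upper bound on speedup for sequential fraction alpha on p processors."""
    return 1.0 / (alpha + (1.0 - alpha) / p)


if __name__ == "__main__":
    # Illustrative values only; the original table is not reproduced here.
    fractions = [0.0, 0.1, 0.25, 0.5]
    processors = [2, 10, 100, 1000]
    print("alpha  " + "".join(f"p={p:<8}" for p in processors))
    for alpha in fractions:
        row = "".join(f"{amdahl_speedup(alpha, p):<10.2f}" for p in processors)
        print(f"{alpha:<7}{row}")
```

For α = 0.5 the computed values approach 2 as p grows but never reach it, which matches the remark about half-sequential code.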

Conceptual significance

Amdahl's law shows that the gain in computational efficiency depends on the algorithm of the problem and is bounded from above for any problem with a nonzero sequential fraction. Increasing the number of processors in a computing system does not make sense for every task.

Moreover, if we take into account the time required to transfer data between the nodes of a computing system, the computation time as a function of the number of nodes will have a minimum. This limits the scalability of the computing system: from a certain point on, adding new nodes to the system increases the time needed to compute the task.
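The effect of communication cost can be sketched numerically. The model below is an assumption made for illustration, not something stated in the text: run time is normalized to 1 on a single node, and each additional node adds a fixed communication cost `comm_cost`. The names `run_time` and `best_node_count` are hypothetical.

```python
def run_time(alpha: float, p: int, comm_cost: float) -> float:
    """Normalized run time on p nodes.

    alpha      -- sequential fraction of the work
    comm_cost  -- assumed extra time per additional node (linear model)
    """
    return alpha + (1.0 - alpha) / p + comm_cost * (p - 1)


def best_node_count(alpha: float, comm_cost: float, max_nodes: int) -> int:
    """Node count in 1..max_nodes that minimizes the modeled run time."""
    return min(range(1, max_nodes + 1),
               key=lambda p: run_time(alpha, p, comm_cost))
```

Under this assumed model with α = 0.1 and `comm_cost` = 0.001, the run time is smallest at 30 nodes, and using 100 nodes is slower than using 30.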

For MIMD systems there is now a generally accepted classification based on how RAM is organized. According to this classification, one first distinguishes multiprocessor computing systems (multiprocessors), that is, computing systems with shared memory (common memory systems, shared-memory systems), from multicomputer computing systems (multicomputers), that is, computing systems with distributed memory (distributed memory systems). The structure of multiprocessor and multicomputer systems is shown in Fig. 1, where P denotes a processor and M a memory module.

Fig. 1. a) the structure of a multiprocessor; b) the structure of a multicomputer.

Multiprocessors.

In multiprocessors, the address space of all processors is the same. This means that if the same variable occurs in the programs of several processors of a multiprocessor, then to read or change its value these processors access one physical cell of shared memory. This circumstance has both positive and negative consequences.

On the one hand, there is no need to physically move data between communicating programs, which eliminates the time spent on interprocessor communication.

On the other hand, since simultaneous access by several processors to common data can lead to incorrect results, mechanisms for synchronizing parallel processes and ensuring memory coherence are needed. Because processors need to access shared memory very often, the bandwidth requirements on the communication medium are extremely high.

The latter circumstance limits the number of processors in multiprocessors to a few dozen. The acuteness of the problem of access to shared memory can be partially removed by dividing memory into blocks that allow parallelizing memory accesses from different processors.
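The need for synchronization when several processors update one shared cell can be illustrated with threads, which share an address space in a similar way. This is a minimal sketch: Python threads stand in for processors, and the names `run_counter` and `worker` are hypothetical.

```python
import threading


def run_counter(num_threads: int, increments: int) -> int:
    """Have several threads increment one shared cell under a lock."""
    counter = {"value": 0}      # the single shared memory cell
    lock = threading.Lock()     # synchronization primitive guarding the cell

    def worker() -> None:
        for _ in range(increments):
            # Without the lock, the read-modify-write below could interleave
            # between threads and lose updates.
            with lock:
                counter["value"] += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter["value"]
```

Every thread refers to the same `counter` object, so no data is copied between them. The lock is what keeps the result correct.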

Note one more advantage of multiprocessors: a multiprocessor system runs under a single copy of an operating system (usually UNIX-like) and does not require individual configuration of each processor node.

Homogeneous multiprocessors with equal (symmetric) access to shared RAM are usually called SMP systems (systems with symmetric multiprocessor architecture). SMP systems appeared as an alternative to expensive multiprocessor systems based on vector-pipelined processors and vector-parallel processors (see Fig. 2).

Multicomputers.

Due to the simplicity of their architecture, multicomputers are currently the most widespread. Multicomputers have no shared memory. Therefore, interprocessor communication in such systems is usually carried out over a communication network by message passing.

Each processor in a multicomputer has an independent address space. Therefore, a variable with the same name in the programs of different processors refers to physically different cells in the processors' own memories. This requires physical movement of data between communicating programs on different processors. Most often, the bulk of each processor's accesses go to its own memory, so the requirements on the communication medium are relaxed. As a result, the number of processors in multicomputer systems can reach several thousand, tens of thousands, and even hundreds of thousands.

The peak performance of the largest shared-memory systems is lower than the peak performance of the largest distributed-memory systems, and the cost of shared-memory systems is higher than the cost of distributed-memory systems of similar performance.

Homogeneous distributed-memory multicomputers are called computing systems with massively parallel architecture (MPP systems); see Fig. 2.

NUMA systems occupy an intermediate position between SMP systems and MPP systems.
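The difference from shared memory can be sketched with a small simulation. Here each node keeps a private dictionary as its memory, and the only way to move a value is an explicit message. The `Node` class, its methods, and the use of a thread-safe queue as the "network" are illustrative assumptions, not a model of any particular machine.

```python
import queue
import threading


class Node:
    """A hypothetical multicomputer node: private memory plus a message inbox."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.memory = {}             # private address space, invisible to other nodes
        self.inbox = queue.Queue()   # stands in for the communication network

    def send(self, other: "Node", key: str, value) -> None:
        # The value is physically delivered to the other node as a message.
        other.inbox.put((key, value))

    def receive(self) -> None:
        # Block until a message arrives, then store it in local memory.
        key, value = self.inbox.get()
        self.memory[key] = value


def demo():
    a, b = Node("A"), Node("B")
    a.memory["x"] = 1
    b.memory["x"] = 2                # same name, physically different cell
    receiver = threading.Thread(target=b.receive)
    receiver.start()
    a.send(b, "x_from_A", a.memory["x"])
    receiver.join()
    return a.memory, b.memory
```

After `demo()` runs, node B holds its own `x` unchanged alongside the copy received from node A.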

Cluster systems (computing clusters).

Cluster systems (computing clusters) are a cheaper variant of MPP systems. A computing cluster consists of a collection of personal computers or workstations connected by a local network that serves as the communication medium. Computing clusters are considered in detail later.

Fig. 2. Classification of multiprocessors and multicomputers.

SMP systems

All processors in an SMP system have symmetric access to memory, i.e. SMP system memory is UMA memory. Symmetry means the following: equal rights of all processors to access memory; the same addressing for all memory elements; equal memory access time for all processors in the system (contention aside).

The general structure of an SMP system is shown in Fig. 3. The communication medium of an SMP system is built on a high-speed system bus or a high-speed switch. Besides identical processors and shared memory M, I/O devices are connected to the same bus or switch.

Behind the seeming simplicity of SMP systems there are significant problems, associated mainly with RAM. At present the speed of RAM lags far behind the speed of the processor. To bridge this gap, modern processors are equipped with high-speed buffer memory (cache memory). Access to this memory is several tens of times faster than access to main memory. However, the presence of a cache violates the principle of equal access to any memory location, since the data in the cache of one processor is not available to other processors. Therefore, after each modification of a copy of a variable located in a processor's cache, the variable itself, located in main memory, must be modified synchronously. In modern SMP systems, cache coherence is maintained by hardware or by the operating system.

Fig. 3. General structure of the SMP system
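The stale-copy problem and one way of handling it can be shown with a toy model. The sketch below assumes a write-through policy with invalidation of other caches. This is just one possible coherence scheme, chosen for illustration, and all class and function names are hypothetical.

```python
class SharedMemory:
    """Main memory plus a registry of the caches attached to it."""

    def __init__(self) -> None:
        self.cells = {}
        self.caches = []


class CachedProcessor:
    def __init__(self, memory: SharedMemory, coherent: bool) -> None:
        self.memory = memory
        self.cache = {}
        self.coherent = coherent
        memory.caches.append(self)

    def read(self, addr: int):
        # On a miss, fetch from main memory; on a hit, trust the cached copy.
        if addr not in self.cache:
            self.cache[addr] = self.memory.cells[addr]
        return self.cache[addr]

    def write(self, addr: int, value) -> None:
        self.cache[addr] = value
        if self.coherent:
            # Write through to main memory and invalidate other cached copies.
            self.memory.cells[addr] = value
            for other in self.memory.caches:
                if other is not self:
                    other.cache.pop(addr, None)


def demo(coherent: bool):
    mem = SharedMemory()
    mem.cells[0] = 1
    p0 = CachedProcessor(mem, coherent)
    p1 = CachedProcessor(mem, coherent)
    p1.read(0)            # p1 now caches the old value
    p0.write(0, 42)       # p0 modifies its copy
    return p1.read(0)     # what does p1 see?
```

Without coherence, `demo(False)` returns the stale value 1. With the assumed policy, `demo(True)` returns 42.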

The most famous SMP systems are SMP servers and workstations from IBM, HP, Compaq, Dell, Fujitsu, etc. An SMP system operates under a single operating system (most often UNIX and the like).

Due to the limited bandwidth of the communication medium, SMP systems do not scale well. Real systems currently use no more than a few dozen processors.

A known unpleasant property of SMP systems is that their cost increases faster than performance as the number of processors in the system increases.

MPP systems.

MPP systems are built from processor nodes containing a processor, a local block of RAM, a communication processor or a network adapter, and sometimes hard disks and/or other input/output devices. In fact, such modules are fully functional computers (see Fig. 4). Only the processor of a module has access to that module's block of RAM. Modules interact with each other through a communication medium. There are two ways of running the operating system on MPP systems. In the first, a complete operating system runs only on the control computer, while each individual module runs a heavily trimmed version that supports only the basic functions of the operating system kernel. In the second, a full-fledged UNIX-like operating system runs on each module. Note that the need for an operating system (in one form or another) on each processor of an MPP system limits the amount of memory available to each processor.

Compared to SMP systems, the MPP system architecture eliminates both the problem of contention when accessing memory and the problem of cache coherence.

The main advantage of MPP systems is good scalability. For example, supercomputers of the CRAY T3E series can scale up to 2048 processors. Almost all performance records to date have been set on MPP systems consisting of several thousand processors.

Fig. 4. General structure of the MPP system.

On the other hand, the absence of shared memory noticeably reduces the speed of interprocessor exchange in MPP systems. For MPP systems, this circumstance brings to the fore the problem of the efficiency of the communication environment.

In addition, MPP systems require a special programming technique to implement data exchange between processors. This explains the high cost of software for MPP systems. This also explains the fact that writing efficient parallel programs for MPP systems is a more difficult task than writing the same programs for SMP systems. For a wide range of problems for which well-proven sequential algorithms are known, it is not possible to construct efficient parallel algorithms for MPP systems.

NUMA systems.

Logically shared access to data can also be provided with physically distributed memory. In this case the distance between different processors and different memory elements is, generally speaking, different, and so is the time different processors need to access different memory elements. That is, the memory of such systems is NUMA memory.

A NUMA system is usually built from homogeneous processor nodes, each consisting of a small number of processors and a memory block. The modules are connected by a high-speed communication medium (see Fig. 5). A single address space is maintained, and access to remote memory, i.e. to the memory of other modules, is supported in hardware. At the same time, access to local memory is several times faster than access to remote memory. Essentially, a NUMA system is an MPP system in which SMP nodes are used as the individual computational elements.
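The practical consequence of non-uniform access can be expressed as a weighted average. This is a rough sketch: the function name and the latency figures used in the example are assumptions for illustration, not measurements of any real NUMA machine.

```python
def average_access_time(local_fraction: float,
                        local_ns: float,
                        remote_ns: float) -> float:
    """Mean memory access time when a given fraction of accesses is local."""
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns
```

With an assumed local latency of 100 ns and a remote latency of 300 ns, a program that keeps 90% of its accesses local sees an average of about 120 ns. This is why data placement matters on such systems.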

Among NUMA systems, the following types of systems are distinguished:

- COMA systems in which only the processor's local cache memory is used as main memory (cache-only memory architecture - COMA);

- CC-NUMA systems, in which the coherence of the local cache memory of different processors (cache-coherent NUMA - CC-NUMA) is provided in hardware;

- NCC-NUMA systems, in which the hardware does not support the coherence of the local cache memory of different processors (non-cache coherent NUMA - NCC-NUMA). The Cray T3E system, for example, belongs to this type.

Fig. 5. General structure of the NUMA system.

The logically shared memory of NUMA systems, on the one hand, allows working with a single address space and, on the other hand, makes it fairly simple to achieve high scalability. This technology currently makes it possible to create systems containing up to several hundred processors.

NUMA systems are mass-produced by many computer firms as multiprocessor servers and firmly hold the leadership in the class of small supercomputers.

02/15/1995 V. Pyatyonok


Routers have evolved through three different architectures: uniprocessor, modified uniprocessor, and symmetric multiprocessor. All three were designed to support highly critical applications. However, they do not satisfy the core requirements to the same extent, namely high, scalable performance and high availability, including full fault tolerance and replacement of failed components from a reserve ("hot spare"). This article discusses the advantages of the symmetric multiprocessor architecture.

Uniprocessor architecture

The uniprocessor architecture uses multiple network interface modules to provide additional flexibility in node configuration. The network interface modules are connected to a single central processing unit via a common system bus. This single processor takes care of all processing tasks. At the current level of corporate network development these tasks are complex and varied: filtering and forwarding packets, modifying packet headers as needed, updating routing tables and network addresses, interpreting service control packets, responding to SNMP requests, generating control packets, and providing other specific services such as spoofing, that is, setting special filters to improve security and network performance.

This traditional architectural solution is the easiest to implement. However, it is not difficult to imagine the limitations that will affect the performance and availability of such a system.

Indeed, all packets from all network interfaces must be processed by a single central processing unit. As additional network interfaces are added, performance degrades markedly. In addition, each packet must travel twice on the bus - from the source module to the processor, and then from the processor to the destination module. A packet travels this path even if it is destined for the same network interface it came from. This also leads to a significant drop in performance as the number of network interface modules increases. Thus, there is a classic "bottleneck".

Reliability is also low. If the CPU fails, the router as a whole stops working. In addition, this architecture does not allow "hot" replacement of damaged system elements from a reserve.

Modern implementations of this router architecture usually address the performance limitations by using a sufficiently powerful RISC processor and a high-speed system bus. This is a purely brute-force attempt to solve the problem: higher performance in exchange for a large initial investment. However, such implementations do not provide performance scaling, and their reliability is determined by the reliability of the processor.

Modified uniprocessor architecture

To overcome some of the disadvantages of the uniprocessor architecture described above, a modification was devised. The underlying architecture is retained: the interface modules are connected to a single processor via a common system bus. However, each network interface module includes a special peripheral processor in order to offload the CPU at least partially.

Peripheral processors are usually bit-slice or general-purpose microprocessors that filter and route packets destined for a network interface on the same module through which they entered the router. (Unfortunately, many current implementations can do this only for certain types of packets, for example Ethernet frames but not IEEE 802.3 frames.)

The central processing unit is still responsible for tasks that cannot be left to the peripheral processor (including routing between modules, system-wide operations, administration, and management). Therefore, the performance gain achieved in this way is rather limited (in fairness, it should be noted that in some cases, with proper network design, good results can be achieved). At the same time, despite a slight decrease in the number of packets transmitted over the system bus, the bus still remains a serious bottleneck.

The inclusion of peripheral processors in the architecture does not increase the availability of the router as a whole.

SMP architecture

The symmetric multiprocessor architecture is free of the disadvantages inherent in the aforementioned architectures. In this case, the processing power is fully distributed among all the network interface modules.

Each network interface module has its own dedicated processor module that handles all routing tasks. All routing tables, other necessary information, and the software implementing the protocols are replicated (i.e. copied) to each processor module. When a processor module receives routing information, it updates its own table and then propagates the updates to all other processor modules.

This architecture provides almost linear scalability (if we neglect the cost of replication and the bandwidth of the communication channel between the modules). This, in turn, opens the prospect of significant network expansion without a noticeable drop in performance. If necessary, you simply add another network interface module, since this architecture has no central processor at all.

All packets are processed by local processors. External (that is, intended for other modules) packets are transmitted over the communication channel between the processors only once. This leads to a significant reduction in traffic within the router.
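The traffic reduction can be made concrete with a simple count. The sketch relies only on two statements from the text: in the uniprocessor design every packet crosses the system bus twice, while in the SMP design only external packets cross the interprocessor channel, and only once. The function names and the packet counts in the example are hypothetical.

```python
def uniprocessor_bus_transfers(local_packets: int, external_packets: int) -> int:
    """Every packet goes module -> CPU -> module, i.e. two bus transfers."""
    return 2 * (local_packets + external_packets)


def smp_link_transfers(local_packets: int, external_packets: int) -> int:
    """Local packets stay on their module; external ones cross the link once."""
    return external_packets
```

For 600 local and 400 external packets, the uniprocessor design performs 2000 bus transfers, while the SMP design performs 400 transfers over the interprocessor channel.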

In terms of availability, the system will not fail if a single processor module breaks down. This breakdown will only affect those network segments that are connected to the damaged processor module. In addition, a damaged module can be replaced with a functional one without turning off the router and without affecting all other modules.

The benefits of the SMP architecture are recognized by computer manufacturers. Many similar platforms have appeared over the past several years, and only the limited number of standard operating systems capable of taking full advantage of the hardware has held back their proliferation. Other manufacturers, including manufacturers of active network devices, also use the SMP architecture to create specialized computing devices.

In the rest of this section, we'll take a closer look at the technical details of Wellfleet's SMP router architecture.

Wellfleet's SMP Router Architecture

Wellfleet, one of the leading manufacturers of routers and bridges, has clearly spared no expense in evaluating and testing various router architectures that support a variety of wide area and local area networks over different physical media and are designed for different traffic conditions. The results of these studies were formulated as a list of requirements considered in the design of routers intended for building corporate network environments for highly critical applications. Here are some of these requirements, namely those that, in our opinion, justify the use of a multiprocessor architecture.

1. The need for scalable performance, high availability, and configuration flexibility dictates the use of the SMP architecture.

2. The computing power required by multi-protocol routing (especially with modern routing protocols such as TCP/IP OSPF) can be provided only by modern, powerful 32-bit microprocessors. Moreover, since routing involves serving a large number of similar requests in parallel, fast switching between processes is needed, which requires extremely low context-switching latency as well as integrated cache memory.

3. A large memory capacity is required to store the protocol and control software, routing and address tables, statistics and other information.

4. To ensure maximum transfer speed between networks and the router's processing modules, high-speed network interface controllers and interprocessor communication controllers with integrated direct memory access (DMA) capabilities are required.

5. Minimizing latency requires high-bandwidth 32-bit data and address channels for all resources.

6. Requirements for increased availability include distribution of computing power, redundant power subsystems and, as an additional, but very important feature, duplicated interprocessor communication channels.

7. The need to cover a wide range of network environments, from a single remote site or workgroup network to a high-performance, high-availability corporate backbone, requires a scalable multiprocessor architecture.

Architecture overview

Figure 2 is a schematic representation of the symmetric multiprocessor architecture used in all modular routers manufactured by Wellfleet. There are three main architectural elements: communication modules, processor modules, and the interprocessor interconnect.

Communication modules provide physical network interfaces that allow connections to local and wide area networks of almost any type. Each communication module is directly connected to its dedicated processor module through an intelligent link interface (ILI - Intelligent Link Interface). Packets received by the communication module are forwarded to the attached processor module over their own direct connection. The processor determines which network interface the packets are intended for and either redirects them to another network interface of the same communication module or sends them over the high-speed interprocessor connection to another processor module, which passes them to its attached communication module.

Let's take a closer look at the structure of each of the components.

The processor module includes:

The actual central processor;

Local memory, which stores protocols and routing tables, address tables, and other information that is used locally by the CPU;

Global memory that plays the role of a buffer for "transit" data packets coming from the communication module to the processor module attached to it or from other processor modules (it is called global because it is visible and accessible to all processor modules);

DMA processor, which provides direct memory access when transferring packets between global memory buffers located in different processor modules;

Communication interface providing connection to the corresponding communication module;

Internal 32-bit data channels linking all of the above resources to provide the highest possible throughput and minimum latency; multiple channels are provided so that different computing devices (for example, the CPU and the DMA processor) can operate simultaneously and so that no bottlenecks slow down packet forwarding and processing.

Various models of Wellfleet routers use ACE (Advanced Communication Engine) processor modules based on Motorola 68020 or 68030 processors, or Fast Routing Engine (FRE) modules based on MC68040.

The communication module includes:

Connectors that provide an interface to specific networks (e.g. synchronous, Ethernet, Token Ring, FDDI);

Communication controllers that transfer packets between the physical network interface and the global memory using a DMA channel; communication controllers are also designed for a specific type of network interface and are capable of transmitting packets at wire speed;

Filters (an optional feature of communication modules for FDDI and Ethernet), which pre-filter incoming packets, saving computational resources for meaningful processing.

The standard VMEbus is often used as the interprocessor communication channel, providing an aggregate throughput of 320 Mbps.

The higher-end models use the Parallel Packet Express (PPX) interface developed by Wellfleet itself, with a bandwidth of 1 Gbps, built from four independent, redundant 256 Mbps data channels with dynamic load distribution. This provides high overall performance and ensures that the architecture has no single point of failure. Each processor module is connected to all four channels and can select any of them. A channel is chosen at random for each packet, which should distribute traffic evenly among all available channels. If one of the PPX data channels becomes unavailable, the load is automatically redistributed among the rest.
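The per-packet channel choice with failover can be sketched as follows. This is an illustrative model only, built from the description of random selection among the available channels. The `ChannelSet` class and its methods are hypothetical and do not represent Wellfleet's actual implementation.

```python
import random


class ChannelSet:
    """Four redundant data channels with random per-packet selection."""

    def __init__(self, num_channels: int = 4, seed=None) -> None:
        self.available = set(range(num_channels))
        self.rng = random.Random(seed)   # seed is only for reproducible demos

    def fail(self, channel: int) -> None:
        # Mark a channel as unavailable; later picks skip it.
        self.available.discard(channel)

    def pick(self) -> int:
        # Choose uniformly among the channels that are still working.
        if not self.available:
            raise RuntimeError("no interprocessor channel available")
        return self.rng.choice(sorted(self.available))
```

Calling `pick()` once per packet spreads traffic over all working channels. After `fail(2)`, channel 2 is never chosen, and the remaining three share the load.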

Packet processing details

Incoming packets are received by one communication controller or another, depending on the network. If an optional filter is included in the configuration of the communication module, some of the packets are dropped and the rest are accepted. The communication controller places accepted packets into the global memory buffer of the directly attached processor module. For fast packet transfer, each communication controller includes a DMA channel.

Once in global memory, packets are retrieved by the CPU for routing. The CPU determines the output network interface, modifies the packet as appropriate, and returns it to global memory. Then one of two things happens:

1. The packet is redirected to a network interface of the directly attached communication module. The communication controller of the output network interface receives instructions from the CPU to fetch the packets from global memory and send them to the network.

2. The packet is redirected to a network interface of another communication module. The DMA processor receives instructions from the CPU to send the packets to another processor module and loads them over the interprocessor connection into the global memory of the processor module attached to the output network interface. The communication controller of the output network interface fetches the packets from global memory and sends them to the network.
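The two cases can be traced with a small model. It is a sketch under assumptions: the `ProcessorModule` class, the route-table layout (destination mapped to an output module and interface), and the wording of the trace messages are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ProcessorModule:
    module_id: int
    routes: dict   # destination -> (output module id, output interface name)


def forward(dest: str, ingress: ProcessorModule) -> list:
    """Return the sequence of steps a packet takes from ingress to egress."""
    m = ingress.module_id
    trace = [f"module {m}: communication controller stores packet in global memory"]
    out_module, out_if = ingress.routes[dest]
    trace.append(f"module {m}: CPU selects interface {out_if} on module {out_module}")
    if out_module != m:
        # Case 2: the packet must cross the interprocessor connection once.
        trace.append(f"module {m}: DMA processor copies packet to module "
                     f"{out_module} over the interprocessor link")
    # Both cases end with the egress controller fetching and sending the packet.
    trace.append(f"module {out_module}: communication controller sends packet on {out_if}")
    return trace
```

A packet whose output interface is on the same module produces three steps. A packet bound for another module adds the DMA transfer as a fourth.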

Routing decisions are made by the CPU independently of other processor modules. Each processor module maintains an independent routing and address database in its local memory, which is updated when the module receives information about changes in routing tables or addresses (in this case, the changes are sent to all other processor modules).
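The replication of routing updates can be sketched in a few lines. The class name `ProcessorUnit`, the helper `build_router`, and the sample route are hypothetical, and the sketch ignores the transport over the interprocessor channel.

```python
class ProcessorUnit:
    """A processor module holding its own copy of the routing table."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.routing_table = {}
        self.peers = []

    def apply_update(self, network: str, next_hop: str) -> None:
        self.routing_table[network] = next_hop

    def learn_route(self, network: str, next_hop: str) -> None:
        # Update the local table first, then propagate to every other module.
        self.apply_update(network, next_hop)
        for peer in self.peers:
            peer.apply_update(network, next_hop)


def build_router(names):
    """Create fully interconnected processor units."""
    units = [ProcessorUnit(n) for n in names]
    for u in units:
        u.peers = [v for v in units if v is not u]
    return units
```

After one unit learns a route, every unit holds the same entry, so each CPU can make routing decisions without consulting the others.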

Simultaneous operation of the communication controller, the CPU, and the DMA processor allows for high overall performance. (We emphasize that all this happens in a device where processing is already parallelized across several processor modules.) For example, imagine a situation where the communication controller is placing packets into global memory while the CPU is updating the routing table in local memory and the DMA processor is sending a packet over the interprocessor link.

Summary

The spread of computer technologies developed for one application area into adjacent ones is nothing new in itself, yet each specific example attracts the attention of specialists. The router architecture considered in this article uses the idea of symmetric multiprocessing, designed to provide scalable performance and a high level of availability. For the same purposes it also uses duplicated data channels between processors, as well as data replication, a technique more typical of the distributed DBMS industry.

Literature

Symmetric Multiprocessor Architecture. Wellfleet Communications, 10/1993.

G.G. Baron, G.M. Ladyzhensky. "Technology for replicating data in distributed systems", "Open Systems", Spring 1994.

*) Last fall Wellfleet merged with another network technology leader, SynOptics Communications. The merger created a new networking giant, Bay Networks. - Ed.

UMA - Uniform Memory Access

SMP architecture is used in servers and PCs based on Intel, AMD, Sun, IBM, and HP processors.

(+): simplicity, well-established basic principles

(-): all exchange between the processors and memory goes over a single bus, which is the architecture's bottleneck and limits performance and scalability.

MPP architecture: massively parallel processing

A massively parallel system. It was based on the transputer, a powerful general-purpose processor whose distinguishing feature was its 4 links (communication channels). Each link consists of two parts that carry information in opposite directions and is used to connect transputers to each other and to attach external devices. Architecture: many nodes, each node consisting of main memory (OP) plus a CPU.

Classic MPP architecture: each node is connected to 4 nodes via a point-to-point link.
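One common way to give every node exactly 4 point-to-point links is a two-dimensional grid with wraparound (a torus). The text does not say which layout is meant, so the torus, the row-major node numbering, and the function name `torus_neighbors` are assumptions made for this sketch.

```python
def torus_neighbors(node, rows, cols):
    """Return the 4 nodes linked to `node` in a rows x cols torus (row-major numbering)."""
    r, c = divmod(node, cols)
    return [
        ((r - 1) % rows) * cols + c,   # north
        ((r + 1) % rows) * cols + c,   # south
        r * cols + (c - 1) % cols,     # west
        r * cols + (c + 1) % cols,     # east
    ]


print(torus_neighbors(5, rows=4, cols=4))
```

Because of the wraparound, corner and edge nodes also have 4 neighbors, so every node uses all of its links.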

Example: Intel Paragon

Cluster architecture

A cluster is a group of machines joined together so that it appears as a single whole to the OS, system software, application programs, and users.

Cluster types

- High-availability systems (HA).

- High-performance computing systems (HP, compute clusters).

- Multi-threaded systems.

- Load-balancing clusters (distribution of computational load).

Example: theHIVE cluster architecture

NUMA architecture: Non-Uniform Memory Access

Each processor has access to both its own memory and the memory of other nodes (remote memory is reached through a switching network or even through the processor of the remote node). Access to a remote node's memory can be supported in hardware by special controllers.

(-): expensive, poor scalability.

Today, access to remote memory in NUMA systems is implemented in software.

A NUMA computing system consists of a set of nodes (each node contains one or more processors and runs a single copy of the OS) interconnected by a switch or a high-speed network.

The interconnect topology is divided into several levels. Each level provides connections within groups of a small number of nodes. Such groups are treated as single nodes at the next higher level.

Main memory (OP) is physically distributed but logically shared.

Depending on the access path to the data item, the time spent on this operation can vary significantly.
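The dependence of access time on the path can be illustrated with a toy model of the multi-level topology described above. Everything numeric here is invented for illustration: the group size of 4, the 100 ns local latency, and the 150 ns cost per level are assumptions, as are the function names.

```python
def shared_level(node_a, node_b, group_size):
    """Lowest level at which two nodes fall into the same group (0 means the same node)."""
    level = 0
    while node_a != node_b:
        node_a //= group_size   # move up to the enclosing group
        node_b //= group_size
        level += 1
    return level


def access_time_ns(cpu_node, memory_node, group_size=4, local_ns=100, per_level_ns=150):
    """Illustrative access time: local latency plus a penalty per level crossed."""
    return local_ns + per_level_ns * shared_level(cpu_node, memory_node, group_size)


for target in (0, 3, 5):
    print(target, access_time_ns(0, target))
```

In this model, memory on the same node is cheapest, memory in the same group costs one level more, and memory in a different group costs two, which is the non-uniformity the name NUMA refers to.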

Examples of specific implementations: cc-NUMA, COMA, NUMA-Q

Example: HP Integrity Superdome

Simplified block diagrams of SMP (a) and MPP (b)

- Five main architectures of high-performance computing systems: brief descriptions and examples. Comparison of cluster architecture and NUMA.

In a cluster, each processor has access only to its own memory; in NUMA it can access not only its own memory but also that of other nodes (a switching network and the processor of the remote node are used to reach remote memory).

- SMP architecture. Organization principles. Advantages, disadvantages. Scalability in the "narrow" and "broad" sense. Scope, examples of SMP computing systems.

SMP (symmetric multiprocessing) is a symmetric multiprocessor architecture. The main feature of systems with the SMP architecture is the presence of a common physical memory shared by all processors.

1. An SMP system is built around a high-speed system bus, into whose slots three types of functional blocks are plugged: processors (CPU), main memory (OP), and the input/output subsystem (I/O).

2. Memory serves as the means of passing messages between processors.

3. All computing devices have equal rights when accessing memory and use the same addressing for all memory cells.

4. The latter circumstance makes data exchange with other computing devices very efficient.

5. SMP is used in servers and workstations based on Intel, AMD, Sun, IBM, and HP processors.

6. An SMP system runs under a single OS (either UNIX-like or Windows). The OS automatically distributes processes among processors at run time, but explicit binding is sometimes also possible.
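Explicit binding, as mentioned in item 6, is exposed by some operating systems as a CPU affinity mask. The sketch below uses Python's `os.sched_setaffinity` and `os.sched_getaffinity`, which exist only on platforms that provide them (Linux, for example); the wrapper name `pin_to_cpu` and the fallback behavior are assumptions of this example.

```python
import os


def pin_to_cpu(cpu_index):
    """Bind the calling process to one CPU.

    Returns the resulting affinity set, or None where the OS offers no such call.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None                       # platform without affinity support in `os`
    allowed = os.sched_getaffinity(0)     # CPUs this process may currently run on
    if cpu_index not in allowed:
        cpu_index = min(allowed)          # fall back to a CPU we are permitted to use
    os.sched_setaffinity(0, {cpu_index})  # pid 0 means the calling process
    return os.sched_getaffinity(0)
```

Without such a call the scheduler remains free to move the process between processors, which is the default behavior described above.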

Organization principles:

An SMP system consists of several homogeneous processors and an array of shared memory.

One approach often used in SMP architectures to build a scalable shared-memory system is to make memory access uniform by providing a scalable processor-to-memory channel.

Each memory access operation is interpreted as a processor-to-memory bus transaction.

In SMP, each processor has at least one cache of its own (and possibly several). An SMP system can be described as one computer with several peer processors.

Cache coherency is supported by hardware.
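What the hardware has to guarantee can be shown with a toy write-invalidate scheme. This is a deliberately simplified model, not a description of any particular machine: the class names are hypothetical, writes go straight through to memory, and real protocols track more line states than "present" or "absent".

```python
class Cache:
    def __init__(self):
        self.lines = {}    # address -> value, valid lines only


class SnoopingBus:
    """Toy write-invalidate coherence with write-through to memory (a simplification)."""

    def __init__(self, n_caches):
        self.memory = {}
        self.caches = [Cache() for _ in range(n_caches)]

    def read(self, cpu, addr):
        cache = self.caches[cpu]
        if addr not in cache.lines:                  # miss: fetch from shared memory
            cache.lines[addr] = self.memory.get(addr, 0)
        return cache.lines[addr]

    def write(self, cpu, addr, value):
        for i, other in enumerate(self.caches):
            if i != cpu:
                other.lines.pop(addr, None)          # invalidate stale copies elsewhere
        self.caches[cpu].lines[addr] = value
        self.memory[addr] = value                    # write-through


bus = SnoopingBus(2)
bus.write(0, 0x10, 5)
print(bus.read(1, 0x10))
```

The invalidation step is what keeps a processor from reading an outdated value out of its own cache after another processor has written to the same address.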

Everything else exists in a single instance: one memory, one I/O subsystem, one operating system.

The word "peer" means that each processor can do everything any other processor can: each has access to all memory, can perform any I/O operation, and can interrupt other processors.

Scalability:

In the "narrow" sense: the ability to add hardware (processors, memory, interfaces) within certain limits.

In the "broad" sense: linear growth of a performance metric as hardware is added.
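Scalability in the "broad" sense can be made concrete with the usual speedup and efficiency measures. These are standard definitions rather than something taken from the text; $T(p)$ is assumed to denote the run time of a fixed task on $p$ processors.

```latex
S(p) = \frac{T(1)}{T(p)}, \qquad E(p) = \frac{S(p)}{p}
```

Linear growth corresponds to $S(p) \approx p$, that is, $E(p) \approx 1$; a system scales poorly when $E(p)$ drops noticeably as $p$ increases.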

Advantages:

Simplicity and versatility for programming. The SMP architecture does not impose restrictions on the programming model used when creating an application: usually the parallel branches model is used, when all processors work completely independently of each other - however, it is possible to implement models that use interprocessor communication. The use of shared memory increases the speed of such an exchange; the user also has access to the entire amount of memory at once.

Ease of use. SMP systems typically use air cooling, which simplifies their maintenance.

Relatively low price.

Concurrency advantage. The implicit transfer of data between caches by SMP hardware is the fastest and cheapest communication medium in any general-purpose parallel architecture. Therefore, in the presence of a large number of short transactions (typical, for example, of banking applications), when it is necessary to frequently synchronize access to shared data, the SMP architecture is the best choice; any other architecture works worse.

In this respect, SMP is the safest architecture to choose. This does not mean that transferring data between caches is desirable: a parallel program always runs faster the less its parts interact. But if the parts do have to interact frequently, the program will run faster on SMP.
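The pattern of many short transactions that synchronize on shared data can be sketched with threads and a lock. This is a minimal illustration under assumptions: the account names, amounts, and the helper `transfer_many` are invented, and Python threads stand in for processors sharing one memory.

```python
import threading


def transfer_many(accounts, lock, src, dst, amount, times):
    """Run `times` short transactions that move `amount` from src to dst."""
    for _ in range(times):
        with lock:                    # synchronize access to the shared data
            accounts[src] -= amount
            accounts[dst] += amount


accounts = {"a": 1000, "b": 1000}     # shared memory visible to every thread
lock = threading.Lock()
workers = [
    threading.Thread(target=transfer_many, args=(accounts, lock, "a", "b", 1, 500)),
    threading.Thread(target=transfer_many, args=(accounts, lock, "b", "a", 1, 500)),
]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(accounts)
```

No data is copied between the workers; they only take turns on the same dictionary, which is the kind of interaction shared memory makes cheap.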

Flaws:

SMP systems are poorly scalable:

1. The system bus has limited (albeit high) bandwidth and a limited number of slots, which makes it the so-called "bottleneck".

2. The bus can process only one transaction at a time, which causes conflict-resolution problems when several processors simultaneously access the same areas of shared physical memory. The point at which such conflicts begin to occur depends on the communication speed and on the number of computing elements.

All of this holds back performance growth as the number of processors and the number of connected users increases. In real systems, no more than 8, 16, or 32 processors can be used effectively.
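Why a single bus caps the useful number of processors can be seen from a rough bound. Assume, purely for illustration, that every unit of work needs the bus for a fixed fraction of its time and that bus transactions cannot overlap; then the serialized bus time alone limits the speedup to the reciprocal of that fraction. The function name and the sample fraction are hypothetical, and caches and arbitration are ignored.

```python
def bus_limited_speedup(processors, bus_fraction):
    """Upper bound on speedup when all processors share one bus.

    bus_fraction is the share of single-processor run time spent on bus
    transactions, which are served strictly one at a time.
    """
    if not 0 < bus_fraction <= 1:
        raise ValueError("bus_fraction must be in (0, 1]")
    return min(processors, 1.0 / bus_fraction)


for p in (1, 2, 4, 8, 16, 32, 64):
    print(p, bus_limited_speedup(p, bus_fraction=0.0625))
```

Past the point where the bus is saturated, adding processors no longer raises the bound, which matches the observation that only a limited number of processors can be used effectively.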

Application area: banking applications.

Example: Sun Fire T2000 architecture. UltraSPARC T1 architecture.

- SMP architecture. Improvement and modification of the SMP architecture. SMP in modern multi-core processors. Cache coherency.

SMP (symmetric multiprocessing) is a symmetric multiprocessor architecture. The main feature of systems with the SMP architecture is the presence of a common physical memory shared by all processors.

Improvement and modification of SMP:

Example: QBB architecture of the DEC GS series server systems

To improve performance, an attempt was made to eliminate the bus while keeping shared memory access: the shared bus is replaced by a local switch (or a system of switches), and at any given time each processor is connected to one of 4 memory banks.

1. Each processor works with one memory bank.

2. It switches to a different memory bank.

3. It starts working with the other memory bank.
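The gain from replacing the bus with a switch can be expressed as the number of transfers that fit into one cycle. The sketch below is a simplified model with hypothetical names: each processor issues at most one request per cycle, a bus serves one request in total, and a switch serves one request per distinct memory bank.

```python
def transfers_per_cycle(requests, use_switch):
    """requests: (processor, bank) pairs issued in the same cycle.

    Returns how many of them can be served in that cycle.
    """
    if not requests:
        return 0
    if not use_switch:
        return 1                                  # shared bus: one transaction at a time
    return len({bank for _, bank in requests})    # switch: one transfer per distinct bank


spread = [(0, 0), (1, 1), (2, 2), (3, 3)]         # every processor on its own bank
clash = [(0, 0), (1, 0), (2, 1), (3, 1)]          # processors compete for two banks
print(transfers_per_cycle(spread, False), transfers_per_cycle(spread, True))
print(transfers_per_cycle(clash, True))
```

The switch helps most when processors address different banks at the same moment; requests for the same bank still have to wait for one another.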

Fig. 3.1.

Memory serves, in particular, to pass messages between processors; all computing devices have equal rights when accessing it and use the same addressing for all memory cells. That is why the SMP architecture is called symmetric. The latter circumstance makes data exchange with other computing devices very efficient. An SMP system is built around a high-speed system bus (SGI PowerPath, Sun Gigaplane, DEC TurboLaser), into whose slots functional blocks of the following types are plugged: processors (CPU), the input/output subsystem (I/O), and so on. Slower buses (PCI, VME64) are used to attach I/O modules. The best-known SMP systems are SMP servers and workstations based on Intel processors (IBM, HP, Compaq, Dell, ALR, Unisys, DG, Fujitsu, etc.). The whole system runs under a single OS (usually UNIX-like, but Windows NT is supported on Intel platforms). The OS automatically distributes processes among processors at run time, but explicit binding is sometimes also possible.

The main advantages of SMP systems:

- simplicity and versatility for programming. The SMP architecture does not impose restrictions on the programming model used when creating an application: it is usually the parallel branches model, in which all processors work independently of each other. However, it is possible to implement models that use interprocessor communication. The use of shared memory increases the speed of such an exchange; the user also has access to the entire amount of memory at once. For SMP systems there are quite effective tools for automatic parallelization;

- ease of use. SMP systems typically use air cooling, which simplifies their maintenance;

- relatively low price.

Flaws:

- shared memory systems scale poorly.

This significant disadvantage of SMP systems prevents them from being considered truly promising. The cause of the poor scalability is that the bus can process only one transaction at a time, which creates conflict-resolution problems when several processors simultaneously access the same areas of shared physical memory. Computing elements begin to interfere with each other. The point at which such conflicts begin to occur depends on the communication speed and on the number of computing elements. Currently, conflicts can occur with 8-24 processors. Moreover, the system bus has limited (albeit high) bandwidth and a limited number of slots. All of this obviously holds back performance growth as the number of processors and the number of connected users increases. In real systems, a maximum of 32 processors can be used. To build scalable systems based on SMP, cluster or NUMA architectures are used. Programming for SMP systems uses the so-called shared memory paradigm.

MPP architecture

MPP (massive parallel processing) is a massively parallel architecture. Its main feature is that memory is physically separated. The system is built from separate modules, each containing a processor, a local bank of main memory (OP), communication processors (routers) or network adapters, and sometimes hard drives and/or other input/output devices. In effect, such modules are fully functional computers (see Figure 3.2). Only the processors (CPUs) of a module have access to that module's memory bank. Modules are connected by special communication channels. The user can determine the logical number of the processor he is connected to and organize message exchange with other processors. There are two ways the operating system (OS) can run on MPP machines. In the first, a full-fledged OS runs only on the control machine (front end), while each individual module runs a heavily trimmed-down version of the OS that supports only the branch of the parallel application located on it. In the second, each module runs a full-fledged UNIX-like OS that is installed separately.

Fig. 3.2.
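Programming for separate memory means that data moves only through explicit messages between numbered processors. The following sketch imitates this inside one Python process; the `Node` class, its inbox, and the `distributed_sum` helper are hypothetical stand-ins for modules and their communication channels, not a real message-passing library.

```python
from collections import deque


class Node:
    """Hypothetical MPP module: private memory plus an inbox fed by the interconnect."""

    def __init__(self, rank, data):
        self.rank = rank                  # logical processor number
        self.local_memory = list(data)    # only this node's CPU touches it
        self.inbox = deque()

    def send(self, other, message):
        other.inbox.append((self.rank, message))   # explicit message, no shared memory

    def receive(self):
        return self.inbox.popleft()


def distributed_sum(chunks):
    """Sum data spread over nodes: each node reduces locally, node 0 combines."""
    nodes = [Node(rank, chunk) for rank, chunk in enumerate(chunks)]
    root = nodes[0]
    for node in nodes[1:]:
        node.send(root, sum(node.local_memory))    # ship only the partial result
    total = sum(root.local_memory)
    while root.inbox:
        _, partial = root.receive()
        total += partial
    return total


print(distributed_sum([[1, 2], [3, 4], [5]]))
```

Each node works only on its own data and sends a single number to node 0, so the amount of communication stays small compared with the amount of local work.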

The main advantage of systems with separate memory is good scalability: unlike in SMP systems, in machines with separate memory each processor has access only to its own local memory, so there is no need for cycle-by-cycle synchronization of processors. Almost all performance records today are set on machines of precisely this architecture, consisting of several thousand processors (