To measure the semantic content of information, i.e. its quantity at the semantic level, the most widely recognized measure is the thesaurus measure. It links the semantic properties of information to the user's ability to accept the incoming message, and for this purpose it uses the concept of the user's thesaurus.

A thesaurus is the body of information available to a user or system.

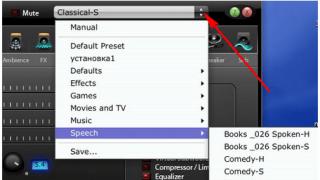

The amount of semantic information Ic that the user perceives and subsequently includes in his thesaurus depends on the relationship between the semantic content of information S and the user's thesaurus Sp. The nature of this dependence is shown in Fig. 2.2. Let us consider two limiting cases in which the amount of semantic information Ic equals 0:

at Sp = 0 the user does not perceive or understand the incoming information;

at Sp → ∞ the user already knows everything and does not need the incoming information.

Fig. 2.2. Dependence of the amount of semantic information perceived by the consumer on his thesaurus, Ic = f(Sp)

The consumer acquires the maximum amount of semantic information Ic when its semantic content S is matched to his thesaurus Sp (Sp = Sp opt), i.e. when the incoming information is understandable to the user and gives him previously unknown information (information not yet in his thesaurus).

Consequently, the amount of semantic information in a message, i.e. the amount of new knowledge the user receives, is a relative value. The same message can carry meaningful content for a competent user and be meaningless (semantic noise) for an incompetent one.

When assessing the semantic (content) aspect of information, one should strive to match the values of S and Sp.

A relative measure of the amount of semantic information is the content coefficient C, defined as the ratio of the amount of semantic information Ic to the data volume Vd:

C = Ic / Vd

Pragmatic measure of information

This measure determines the usefulness (value) of information for the user in achieving his goal. It is also a relative value, determined by how the information is used in a particular system. It is advisable to measure the value of information in the same units as the objective function, or in units close to them.

Example 2.5. In an economic system, the pragmatic properties (value) of information can be determined by the increase in the economic effect of operation achieved by using this information to manage the system:

Ib(g) = P(g/b) − P(g),

where Ib(g) is the value of information message b for control system g;

P(g) is the a priori expected economic effect of the functioning of control system g;

P(g/b) is the expected effect of the functioning of system g, provided that the information contained in message b is used for control.
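As a worked illustration of Example 2.5, the sketch below evaluates Ib(g) = P(g/b) − P(g). The function name `information_value` and the figures are hypothetical, chosen only to show the arithmetic; in practice both expectations would come from a model of the managed system and be expressed in the units of its objective function.

```python
def information_value(effect_with_message: float, effect_a_priori: float) -> float:
    """Ib(g) = P(g/b) - P(g): gain in expected effect from using message b for control."""
    return effect_with_message - effect_a_priori


# Hypothetical figures (not from the text), in the monetary units of the objective function.
p_g = 1_000_000.0          # P(g): expected effect without the message
p_g_given_b = 1_150_000.0  # P(g/b): expected effect when message b is used for control
print(information_value(p_g_given_b, p_g))  # 150000.0
```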

For comparison, we present the introduced information measures in Table 2.1.

Table 2.1. Information units and examples

QUALITY OF INFORMATION

The possibility and effectiveness of using information are determined by its basic consumer quality indicators: representativeness, content (meaningfulness), sufficiency, accessibility, relevance, timeliness, accuracy, reliability and stability.

- Representativeness of information is associated with the correctness of its selection and formation, so that it adequately reflects the properties of the object. The most important factors here are:

  - the correctness of the concept on which the original notion is based;

  - the validity of the selection of essential features and relations of the displayed phenomenon.

  Violation of the representativeness of information often leads to significant errors in it.

- Content (meaningfulness) of information reflects its semantic capacity, equal to the ratio of the amount of semantic information in a message to the volume of processed data, i.e. C = Ic/Vd.

As the content of information increases, the semantic throughput of the information system grows, since less data has to be converted to obtain the same information.

Along with the content coefficient C, which reflects the semantic aspect, one can also use the informativeness coefficient Y, the ratio of the amount of syntactic information (according to Shannon) to the data volume: Y = I/Vd.

- Sufficiency (completeness) of information means that it contains a minimal but sufficient set of indicators for making the right decision. The concept of completeness of information is associated with its semantic content (semantics) and pragmatics. Both incomplete information, i.e. information insufficient for making the right decision, and redundant information reduce the effectiveness of the user's decisions.

- Accessibility of information to the user's perception is ensured by appropriate procedures for acquiring and transforming it. For example, an information system transforms information into an accessible, user-friendly form. This is achieved, in particular, by matching its semantic form to the user's thesaurus.

- Relevance of information is determined by the degree to which its value for management is preserved at the time of use; it depends on how quickly its characteristics change and on the time elapsed since the information arose.

- Timeliness of information means that it arrives no later than a predetermined point in time consistent with the time at which the task is solved.

- Accuracy of information is determined by how close the received information is to the real state of the object, process, phenomenon, etc. For information represented by a digital code, four classification concepts of accuracy are known:

  - formal accuracy, measured by the unit value of the least significant digit of a number;

  - real accuracy, determined by the unit value of the last digit of the number whose accuracy is guaranteed;

  - maximum accuracy, the best accuracy obtainable under the specific operating conditions of the system;

  - required accuracy, determined by the functional purpose of the indicator.

- Reliability of information is determined by its ability to reflect really existing objects with the required accuracy. It is measured by the confidence probability of the required accuracy, i.e. the probability that the value of a parameter displayed by the information differs from the true value of that parameter by no more than the required accuracy.

- Stability of information reflects its ability to respond to changes in the source data without violating the required accuracy. Stability, like representativeness, is determined by the chosen method of selecting and forming the information.

In conclusion, note that quality parameters such as representativeness, content, sufficiency, accessibility and stability are entirely determined at the methodological level of information system development. Relevance, timeliness, accuracy and reliability are also largely determined at the methodological level, but their values are significantly influenced by the way the system operates, primarily by its dependability. Moreover, relevance and accuracy are closely tied to timeliness and reliability, respectively.
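The informativeness coefficient Y = I/Vd and the content coefficient C = Ic/Vd can be sketched in Python. This is a minimal sketch under stated assumptions: the syntactic amount of information I is estimated from the empirical symbol frequencies of the message itself (treating it as the output of a memoryless source), and the data volume Vd assumes a fixed 8-bit code per character. The semantic amount Ic cannot be computed from the text alone, since it depends on the recipient's thesaurus, so `content_coefficient` simply takes it as an input. All function names are illustrative.

```python
import math
from collections import Counter


def shannon_information_bits(message: str) -> float:
    """Estimate I as len(message) * H, with H taken from empirical symbol frequencies."""
    n = len(message)
    counts = Counter(message)
    h = sum(-(c / n) * math.log2(c / n) for c in counts.values())  # bits per symbol
    return n * h


def informativeness(message: str, bits_per_char: int = 8) -> float:
    """Y = I / Vd, assuming a fixed-length code of bits_per_char bits per character."""
    v_d = len(message) * bits_per_char
    return shannon_information_bits(message) / v_d


def content_coefficient(semantic_bits: float, v_d: float) -> float:
    """C = Ic / Vd; Ic is supplied by the analyst, since it depends on the thesaurus."""
    return semantic_bits / v_d


print(informativeness("abababab"))    # 0.125: 1 bit of entropy per 8-bit character
print(informativeness("aaaaaaaa"))    # 0.0: fully predictable message
print(content_coefficient(16, 64))    # 0.25 for a hypothetical Ic = 16 bits, Vd = 64 bits
```

Raising Y, as the text notes, is the goal of optimal coding: a code that spends fewer bits per character on the same message would shrink Vd and push Y toward 1.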

Syntactic measure of information

As a syntactic measure, the quantity of information is represented by the volume of data.

The data volume Vd of a message β is measured by the number of characters (digits) in it. As we mentioned, in the binary number system the unit of measurement is the bit. In practice, along with this smallest unit, a larger unit is often used: the byte, equal to 8 bits. For convenience, kilobytes (10^3), megabytes (10^6), gigabytes (10^9), terabytes (10^12), etc. are used as measures. These familiar units are used to measure the volume of short written messages, thick books, musical works, images and software products. Clearly, this measure cannot in any way characterize what these units of information carry or why. Measuring L.N. Tolstoy's novel "War and Peace" in kilobytes is useful, for example, to find out whether it will fit in the free space on a hard drive. This is as useful as measuring a book's height, thickness and width to judge whether it will fit on a bookshelf, or weighing it to see whether a briefcase can bear the combined weight.

So a syntactic measure of information alone is clearly not enough to characterize a message: in our weather example, in the last case the friend's message contained a non-zero amount of data, but not the information we needed. Conclusions about the usefulness of information follow from considering the content of the message. To measure the semantic content of information, i.e. its quantity at the semantic level, we introduce the concept of the "thesaurus of the information recipient".

A thesaurus is the collection of information, together with the connections between its items, that the recipient possesses. One can say that a thesaurus is the recipient's accumulated knowledge.

In the simplest case, when the recipient is a technical device such as a personal computer, the thesaurus is formed by the computer's "arsenal": the programs and devices built into it that allow it to receive, process and present text messages in different languages, using different alphabets and fonts, as well as audio and video information from a local or worldwide network. If your computer has no network card, you cannot expect to receive messages from other network users on it in any form. Without drivers for Russian fonts, you will not be able to work with messages in Russian, and so on.

If the recipient is a person, his thesaurus is likewise a kind of intellectual arsenal, the store of his knowledge. It also acts as a filter for incoming messages: a received message is processed using existing knowledge in order to extract information. If the thesaurus is very rich, i.e. the store of knowledge is deep and diverse, it allows information to be extracted from almost any message. A small thesaurus containing little knowledge can be a barrier to understanding messages that require better preparation.

Note, however, that understanding a message is not by itself enough to influence decision making: the message must contain the information needed for this, which is not yet in our thesaurus and which we want to include in it. In the weather example, our thesaurus lacked the latest, current weather information for the university area. If a message we receive changes our thesaurus, our choice of solution may change too. This change in the thesaurus serves as a semantic measure of the amount of information and a distinctive measure of the usefulness of the received message.

Formally, the amount of semantic information Is that is subsequently included in the thesaurus is determined by the relationship between the recipient's thesaurus Si and the content S of the information transmitted in message β. This dependence is shown graphically in Fig. 1.

Let us consider the cases in which the amount of semantic information Is is equal or close to zero:

at Si = 0 the recipient does not perceive the incoming information;

at 0 < Si < S0 the recipient perceives but does not understand the information received in the message;

at Si → ∞ the recipient has exhaustive knowledge, and the incoming information cannot add to his thesaurus.

Fig. 1. Dependence of the amount of semantic information on the recipient's thesaurus

With a thesaurus Si > S0, the amount of semantic information Is obtained from a message β carrying information S at first grows rapidly as the recipient's own thesaurus grows and then, starting from a certain value of Si, falls. The amount of information useful to the recipient drops because his knowledge base has become quite solid, and it is increasingly difficult to surprise him with something new.

This can be illustrated by students of economic informatics reading materials on corporate information systems on websites. At first, while their initial knowledge of information systems is being formed, reading gives little: there are many unclear terms and abbreviations, and not even all the headings are clear. Persistence in reading books, attending lectures and seminars, and talking with professionals helps to replenish the thesaurus. Over time, reading the site's materials becomes enjoyable and useful, and by the end of a professional career, after writing many articles and books, new useful information will be obtained from a popular site much less often.

We can speak of a recipient's thesaurus that is optimal for given information S, under which he receives the maximum information Is, as well as of information in message β that is optimal for a given thesaurus Si. In our example, where the recipient is a computer, an optimal thesaurus means that its hardware and installed software perceive and correctly interpret for the user all the symbols in message β that convey the meaning of information S. If the message contains characters that do not correspond to the contents of the thesaurus, part of the information will be lost and Is will decrease.

On the other hand, suppose we know that the recipient cannot receive texts in Russian (his computer lacks the necessary drivers), and neither he nor we have studied the foreign languages in which our message could be sent. To transmit the necessary information, we can then resort to transliteration: writing Russian texts with the letters of a foreign alphabet that the recipient's computer handles well. In this way we match our information to the computer thesaurus available to the recipient. The message will look ugly, but the recipient will be able to read all the necessary information.
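A minimal Python sketch of the transliteration idea: each Cyrillic letter is replaced by one or more Latin letters, so the message fits the thesaurus of a computer that can only display the Latin alphabet. The mapping table is a hypothetical, simplified scheme written for this illustration (it is not GOST or ISO 9), and characters outside the table pass through unchanged.

```python
# Hypothetical, simplified Cyrillic-to-Latin table (not a standard scheme).
TRANSLIT = {
    "а": "a", "б": "b", "в": "v", "г": "g", "д": "d", "е": "e", "ё": "yo",
    "ж": "zh", "з": "z", "и": "i", "й": "y", "к": "k", "л": "l", "м": "m",
    "н": "n", "о": "o", "п": "p", "р": "r", "с": "s", "т": "t", "у": "u",
    "ф": "f", "х": "kh", "ц": "ts", "ч": "ch", "ш": "sh", "щ": "shch",
    "ъ": "", "ы": "y", "ь": "", "э": "e", "ю": "yu", "я": "ya",
}


def transliterate(text: str) -> str:
    """Replace each Cyrillic letter with Latin letters; keep all other characters."""
    out = []
    for ch in text:
        latin = TRANSLIT.get(ch.lower())
        if latin is None:
            out.append(ch)                  # not in the table: pass through
        elif ch.isupper():
            out.append(latin.capitalize())  # preserve initial capital letters
        else:
            out.append(latin)
    return "".join(out)


print(transliterate("Привет, мир!"))  # Privet, mir!
```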

Thus, the recipient acquires the maximum amount of semantic information Is from message β when its semantic content S is matched to his thesaurus Si (at Si = Si opt). Information from the same message may have meaningful content for a competent user but be meaningless for an incompetent one. The amount of semantic information a user receives from a message is an individual, personalized quantity, in contrast to syntactic information. However, semantic information is measured in the same units as syntactic information: bits and bytes.

A relative measure of the amount of semantic information is the content coefficient C, defined as the ratio of the amount of semantic information to the data volume Vd contained in message β:

C = Is / Vd

Lecture 2 on the discipline “Informatics and ICT”

Syntactic measure of information.

This measure of the amount of information deals with impersonal information that does not express a semantic relationship to the object. The data volume Vd of a message is measured here by the number of characters (digits) in it. In different number systems one digit has a different weight, and the unit of data measurement changes accordingly.

For example, in the binary number system the unit of measurement is the bit (binary digit). A bit is the answer to a single binary question ("yes" or "no"; "0" or "1"), transmitted over communication channels by a signal. Thus, the amount of information in a message, measured in bits, is determined by the number of natural-language words in it, the number of characters in each word, and the number of binary signals needed to express each character.
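The counting rule just described can be sketched as follows. The sketch assumes a fixed-length binary code, so that a character from an alphabet of M symbols needs ceil(log2 M) binary signals, and it counts only the characters inside words (spaces and punctuation between words are ignored). The function names are hypothetical.

```python
import math


def bits_per_symbol(alphabet_size: int) -> int:
    """Binary signals per character with a fixed-length code: ceil(log2 M)."""
    if alphabet_size < 2:
        raise ValueError("alphabet must have at least two symbols")
    return math.ceil(math.log2(alphabet_size))


def message_volume_bits(text: str, alphabet_size: int) -> int:
    """Words -> characters per word -> binary signals per character."""
    chars = sum(len(word) for word in text.split())
    return chars * bits_per_symbol(alphabet_size)


print(bits_per_symbol(33))                  # 6: 2**5 = 32 codes are too few for 33 letters
print(message_volume_bits("bit byte", 26))  # 35: 7 characters * 5 bits each
```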

In modern computers, along with the minimum unit of data measurement, the bit, the larger unit "byte", equal to 8 bits, is widely used. In the decimal number system the unit of measurement is the "dit" (decimal digit).

The amount of information I at the syntactic level cannot be determined without considering the uncertainty of the system's state (the entropy of the system). Indeed, obtaining information about a system always changes the recipient's degree of ignorance about the state of that system, i.e. the amount of information is measured by the change (reduction) in the uncertainty of the system's state.

The coefficient (degree) of informativeness (conciseness) of a message is determined by the ratio of the amount of information to the data volume, i.e.

Y = I / Vd, with 0 < Y < 1.

As Y increases, the amount of work needed to transform information (data) in the system decreases. Therefore, one strives to increase informativeness, and special methods for the optimal coding of information are developed for this purpose.

Topic 2. Basics of representing and processing information in a computer
Measures of information (syntactic, semantic, pragmatic)

Various approaches can be used to measure information, but the most widely used are the statistical (probabilistic), semantic and pragmatic methods.

The statistical (probabilistic) method of measuring information was developed by C. Shannon in 1948, who proposed to consider the amount of information as a measure of the uncertainty of the system's state that is removed as a result of receiving information. The quantitative expression of uncertainty is called entropy. If, after receiving a message, the observer has acquired additional information about system X, the uncertainty has decreased. The additional amount of information received is defined as

$$I(X) = H_{\text{apr}}(X) - H_{\text{aps}}(X),$$

where $I(X)$ is the additional amount of information about system $X$ received in the form of a message; $H_{\text{apr}}(X)$ is the initial uncertainty (entropy) of system $X$; $H_{\text{aps}}(X)$ is the final uncertainty (entropy) of system $X$ after the message is received.

If system $X$ can be in one of $n$ discrete states, being in state $i$ with probability $p_i$, where the probabilities of all states sum to one, then the entropy is calculated by Shannon's formula:

$$H(X) = -\sum_{i=1}^{n} p_i \log_a p_i,$$

where $H(X)$ is the entropy of system $X$; $a$ is the base of the logarithm, which determines the unit of measurement of information; $n$ is the number of states (values) the system can take.

Entropy is non-negative: since probabilities do not exceed one, their logarithms are non-positive, and the minus sign in Shannon's formula makes the entropy non-negative. Thus, the same entropy taken with the opposite sign is used as a measure of the amount of information.
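The relation I(X) = H_apr(X) − H_aps(X) can be traced in a short Python sketch: Shannon's entropy H(X) = −Σ p_i log_a p_i is computed for the distribution over states before and after a message, and the information received is the difference. The distributions are made-up examples, states with zero probability contribute nothing (the usual convention 0 · log 0 = 0), and the function names are illustrative.

```python
import math


def shannon_entropy(probs, base=2.0):
    """H = -sum(p_i * log_base(p_i)); zero-probability states are skipped."""
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to one")
    return sum(-p * math.log(p, base) for p in probs if p > 0)


def information_gain(prior, posterior, base=2.0):
    """I = H_apr - H_aps: the uncertainty removed by the message."""
    return shannon_entropy(prior, base) - shannon_entropy(posterior, base)


prior = [0.25, 0.25, 0.25, 0.25]  # four equally likely states: 2 bits of uncertainty
posterior = [0.5, 0.5, 0.0, 0.0]  # the message rules out two states: 1 bit remains
print(f"{information_gain(prior, posterior):.3f} bits")  # 1.000 bits
```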
The relationship between information and entropy can be understood as follows: obtaining (increasing) information simultaneously means reducing ignorance, i.e. informational uncertainty (entropy). Thus, the statistical approach takes into account the probability of messages: a less probable, i.e. less expected, message is considered more informative. The amount of information reaches its maximum when the events are equally probable.

R. Hartley proposed the following formula for measuring information:

$$I = \log_2 n,$$

where $n$ is the number of equally probable events and $I$ is the measure of information in a message about the occurrence of one of the $n$ events.

In practice, information is measured by its volume, most often the amount of computer memory or the amount of data transmitted over communication channels. The unit is taken to be the amount of information that reduces uncertainty by half; this unit of information is called the bit.
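Hartley's measure and the effect of the logarithm base can be checked numerically. The sketch computes I = log2 n in bits and then rescales it, since changing the base of the logarithm only multiplies the result by a constant: 1 bit = ln 2 ≈ 0.693 nat ≈ 0.631 trit ≈ 0.301 dit. The function and table names are illustrative.

```python
import math

# Factor log_b(2) that converts a value in bits into a unit with logarithm base b.
BIT_TO = {
    "bit": 1.0,
    "nat": math.log(2),        # base e
    "trit": 1 / math.log2(3),  # base 3
    "dit": math.log10(2),      # base 10 (hartley)
}


def hartley_bits(n: int) -> float:
    """Hartley's measure I = log2(n) for n equally probable outcomes, in bits."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return math.log2(n)


def convert_bits(bits: float, unit: str) -> float:
    """Re-express an amount of information given in bits in another unit."""
    return bits * BIT_TO[unit]


for n in (2, 4, 32):
    print(f"{n} outcomes: {hartley_bits(n)} bits")
for unit in ("nat", "trit", "dit"):
    print(f"1 bit = {convert_bits(1.0, unit):.3f} {unit}")
```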

If the natural logarithm (ln) is used as the base of the logarithm in Hartley's formula, the unit of information is the nat (1 bit = ln 2 ≈ 0.693 nat). If the base is 3, the unit is the trit; if it is 10, the dit (hartley). In practice a larger unit is more often used: the byte, equal to eight bits. This unit was chosen because one byte can encode any of the 256 characters of a computer's character set (256 = 2^8). Besides bytes, information is measured in half-words (2 bytes), words (4 bytes) and double words (8 bytes). Even larger units are also widely used: 1 kilobyte (KB) = 1024 bytes = 2^10 bytes; 1 megabyte (MB) = 1024 KB = 2^20 bytes; 1 gigabyte (GB) = 1024 MB = 2^30 bytes; 1 terabyte (TB) = 1024 GB = 2^40 bytes; 1 petabyte (PB) = 1024 TB = 2^50 bytes. In 1980 the Russian mathematician Yu. Manin proposed the idea of building a quantum computer, and with it a new unit of information appeared: the qubit (quantum bit).

A qubit is a unit for measuring the memory of a theoretically possible kind of computer that uses quantum media, for example electron spins. A qubit can take not only the two distinct values "0" and "1" but also states corresponding to normalized combinations of the two basis spin states, which gives a larger number of possible combinations. Thus, 32 qubits can encode about 4 billion (2^32) states.

Semantic approach. A syntactic measure is not enough if you need to determine not the volume of data but the amount of information needed in the message. In this case the semantic aspect is considered, which allows the content of the information to be determined. To measure the semantic content of information, one can use the thesaurus of its recipient (consumer). The idea of the thesaurus method was proposed by N. Wiener and developed by the Soviet scientist Yu. A. Shreider. A thesaurus is the body of information that the recipient of information possesses. Correlating the thesaurus with the content of the received message shows how much the message reduces uncertainty.

Fig. Dependence of the amount of semantic information of a message on the recipient's thesaurus

According to this dependence, if the user has no thesaurus (no knowledge of the essence of the received message, i.e. Sp = 0), or has a thesaurus that does not change as a result of the message (Sp → ∞), then the amount of semantic information in the message is zero. The optimal thesaurus (Sp opt) is the one at which the amount of semantic information is maximal (Ic max). For example, the semantic information in an incoming message in an unfamiliar foreign language is zero, and so is that of a message which is no longer news, because the user already knows everything.

The pragmatic measure of information determines its usefulness in achieving the consumer's goals.
To do this, it is enough to determine the probability of achieving the goal before and after receiving the message and compare them. The value of information (according to A.A. Kharkevich) is calculated by the formula

$$I = \log_2 \frac{p_1}{p_0},$$

where $p_0$ is the probability of achieving the goal before receiving the message and $p_1$ is the probability of achieving the goal after receiving the message.

Quantity and quality of information

Levels of information transmission problems

In information processes, information is always transferred in space and time from the source of information to the receiver (recipient) by means of signals. A signal is a physical process (phenomenon) that carries a message (information) about an event or the state of an observed object. A message is a form of representing information as a set of signs (symbols) used for transmission.

From the point of view of semiotics, the science of the properties of signs and sign systems, a message as a set of signs can be studied at three levels:

1) syntactic, where the internal properties of messages are considered, i.e. the relationships between signs that reflect the structure of a given sign system;
2) semantic, where the relationships between signs and the objects, actions and qualities they denote are analyzed, i.e. the semantic content of the message and its relationship to the source of information;
3) pragmatic, where the relationship between the message and the recipient is considered, i.e. the consumer content of the message and its relationship to the recipient.

Problems of the syntactic level concern the creation of theoretical foundations for building information systems. At this level, the delivery of messages to the recipient as sets of characters is considered, taking into account the type of medium and method of presenting information, the speed of transmission and processing, the size of information presentation codes, the reliability and accuracy of converting these codes, etc., while completely abstracting from the semantic content of messages and their intended purpose. Information considered only from this syntactic perspective is usually called data, since the semantic side does not matter.

Problems of the semantic level are associated with formalizing and taking into account the meaning of the transmitted information and with determining the degree of correspondence between the image of an object and the object itself. At this level, the content that the information reflects is analyzed, semantic connections are considered, concepts and ideas are formed, the meaning and content of the information are revealed, and it is generalized.
At the pragmatic level, the interest lies in the consequences of the consumer receiving and using the information. Problems at this level are associated with determining the value and usefulness of information when the consumer develops a decision to achieve his goal. The main difficulty is that the value and usefulness of information can be completely different for different recipients and, in addition, depend on a number of factors, such as the timeliness of its delivery and use.

Information measures

Measures of information at the syntactic level

To measure information at the syntactic level, two parameters are introduced: the volume of information (data), VD (volume approach), and the amount of information, I (entropy approach).

Volume of information VD. In information processes, information is transmitted as a message, which is a set of symbols of some alphabet. If the amount of information contained in a one-character message is taken as one unit, then the volume of information (data) VD of any other message equals the number of characters (digits) in it. Thus, in the decimal number system one digit has a weight of 10, and accordingly the unit of measurement is the dit (decimal digit). A message in the form of an n-digit number then has a data volume VD = n dit. For example, the four-digit number 2003 has a data volume VD = 4 dit. In the binary number system one digit has a weight of 2, and accordingly the unit of measurement is the bit (binary digit). A message in the form of an n-digit binary number then has a data volume VD = n bit. For example, the eight-bit binary code 11001011 has a data volume VD = 8 bits. In modern computing, along with the minimum data unit, the bit, the larger unit, the byte, equal to 8 bits, is widely used.
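The volume approach can be sketched in Python: VD is simply the number of symbols of the given alphabet in the message, so the same rule yields dits for decimal numbers and bits for binary codes. The helper `human_size` is a hypothetical illustration that applies the binary multiples used in this section (1 KB = 2^10 bytes, 1 MB = 2^20 bytes, and so on); the decimal prefixes mentioned earlier in the text (kilo = 10^3, mega = 10^6) would give slightly different figures.

```python
DECIMAL = "0123456789"
BINARY = "01"
UNITS = ["bytes", "KB", "MB", "GB", "TB"]


def data_volume(message: str, alphabet: str) -> int:
    """VD: the number of characters of the given alphabet in the message."""
    if any(ch not in alphabet for ch in message):
        raise ValueError("message contains symbols outside the alphabet")
    return len(message)


def human_size(n_bytes: int) -> str:
    """Express a byte count with binary multiples (1 KB = 1024 bytes, ...)."""
    size = float(n_bytes)
    unit_index = 0
    while size >= 1024 and unit_index < len(UNITS) - 1:
        size /= 1024
        unit_index += 1
    return f"{size:.2f} {UNITS[unit_index]}"


print(data_volume("2003", DECIMAL), "dit")      # 4 dit
print(data_volume("11001011", BINARY), "bits")  # 8 bits
print(human_size(2**20))                        # 1.00 MB
```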
When working with large volumes of information, larger units are used to measure its quantity, such as the kilobyte (KB), megabyte (MB), gigabyte (GB) and terabyte (TB):

1 KB = 1024 bytes = 2^10 bytes;
1 MB = 1024 KB = 2^20 bytes = 1,048,576 bytes;
1 GB = 1024 MB = 2^30 bytes = 1,073,741,824 bytes;
1 TB = 1024 GB = 2^40 bytes = 1,099,511,627,776 bytes.

Amount of information I (entropy approach). Information and coding theory adopt an entropy approach to measuring information. It is based on the fact that obtaining information is always associated with a decrease in the diversity or uncertainty (entropy) of the system. Accordingly, the amount of information in a message is defined as a measure of the reduction in the uncertainty of the state of a given system after the message is received. Once an observer has identified something in a physical system, the entropy of the system decreases, because to the observer the system has become more ordered.

Thus, in the entropy approach, information is understood as the quantitative value of the uncertainty that has disappeared during some process (test, measurement, etc.). Entropy H is introduced as a measure of uncertainty, and the amount of information is

$$I = H_{\text{apr}} - H_{\text{aps}},$$

where $H_{\text{apr}}$ is the a priori entropy of the state of the system under study and $H_{\text{aps}}$ is the a posteriori entropy. A posteriori means originating from experience (tests, measurements); a priori characterizes knowledge that precedes experience (testing) and is independent of it. When a test removes the existing uncertainty entirely (a specific result is obtained, i.e. $H_{\text{aps}} = 0$), the amount of information received coincides with the initial entropy: $I = H_{\text{apr}}$.

Let us consider as the system under study a discrete source of information (a source of discrete messages), by which we mean a physical system with a finite set of possible states. The set $A = \{a_1, a_2, \ldots, a_n\}$ of states of such a system is called in information theory an abstract alphabet, or the alphabet of the message source. The individual states $a_1, a_2, \ldots, a_n$ are called letters or symbols of the alphabet. At any given moment such a system can randomly take one of its possible states $a_i$. Since the source selects some states more often and others less often, in general it is characterized by an ensemble $A$, i.e. the complete set of states together with the probabilities of their occurrence, which sum to one:

$$\sum_{i=1}^{n} p(a_i) = 1.$$

Let us introduce a measure of uncertainty in the choice of the source's state. It can also be regarded as a measure of the amount of information obtained when the uncertainty about the equally probable states of the source is completely eliminated:

$$H(A) = \log N, \qquad (2.3)$$

where $N$ is the number of equally probable states. Then for $N = 1$ we get $H(A) = 0$. This measure was proposed by the American scientist R. Hartley in 1928. The base of the logarithm in formula (2.3) is not of fundamental importance; it determines only the scale, or unit of measurement. Depending on the base, the following units are used:

1. Bits, when the base of the logarithm is 2: $H(A) = \log_2 N$. (2.4)
2. Nats, when the base is $e$: $H(A) = \ln N$.
3. Dits, when the base is 10: $H(A) = \lg N$.

In computer science, formula (2.4) is usually used as the measure of uncertainty. In this case the unit of uncertainty is called a binary unit, or bit, and represents the uncertainty of choosing between two equally probable events.
Formula (2.4) can be obtained empirically: to remove the uncertainty in a situation with two equally probable events, one trial and, accordingly, one bit of information are needed; with four equally probable events, 2 bits of information are enough to guess the desired fact. To identify a card in a deck of 32 cards, 5 bits of information are enough, i.e. five questions with "yes" or "no" answers suffice to determine the card in question.

This measure solves certain practical problems when all possible states of the information source are equally probable. In general, however, the uncertainty of which state the source realizes depends not only on the number of states but also on their probabilities. If a source has, for example, two possible states with probabilities 0.99 and 0.01, the uncertainty of choice is much smaller than for a source with two equally probable states, since in the first case the result is practically predetermined (the state with probability 0.99 is realized).

The American scientist C. Shannon generalized the measure of choice uncertainty H to the case where H depends not only on the number of states but also on their probabilities (the probabilities $p_i$ of choosing symbol $a_i$ of alphabet $A$). This measure, which represents the average uncertainty per state, is called the entropy of a discrete source of information:

$$H(A) = -\sum_{i=1}^{N} p_i \log p_i. \qquad (2.5)$$

If we again measure uncertainty in binary units, the base of the logarithm should be taken equal to two:

$$H(A) = -\sum_{i=1}^{N} p_i \log_2 p_i. \qquad (2.6)$$

For equally probable choices, $p_i = 1/N$, and formula (2.6) turns into R. Hartley's formula (2.3):

$$H(A) = -\sum_{i=1}^{N} \frac{1}{N} \log_2 \frac{1}{N} = \log_2 N.$$

The measure was called entropy for a reason: the formal structure of expression (2.5) coincides with the entropy of a physical system defined earlier by Boltzmann.
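Both observations in this passage can be checked with a short Python sketch: halving questions ("is the card in the lower half?") locate any of 32 cards in exactly five steps, and Shannon's formula (2.6) gives far less than one bit for a source with probabilities 0.99 and 0.01, while reducing to Hartley's log2 N for equally probable states. The questioning strategy is ordinary binary search, used here as an illustration; the function names are hypothetical.

```python
import math


def shannon_bits(probs):
    """Entropy of a discrete source in bits, formula (2.6); p = 0 terms are skipped."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


def questions_to_find(target: int, n: int) -> int:
    """Count yes/no questions needed to locate target among cards 0..n-1 by halving."""
    lo, hi, asked = 0, n, 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        asked += 1
        if target < mid:  # answer "yes": the card is in the lower half
            hi = mid
        else:             # answer "no": the card is in the upper half
            lo = mid
    return asked


print(max(questions_to_find(card, 32) for card in range(32)))  # 5
print(shannon_bits([1 / 32] * 32))                             # 5.0, same as log2(32)
print(shannon_bits([0.5, 0.5]))                                # 1.0
print(round(shannon_bits([0.99, 0.01]), 3))                    # 0.081
```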
Using formulas (2.4) and (2.6), we can determine the redundancy D of the alphabet A of a message source, which shows how rationally the symbols of the alphabet are used:

$$D = \frac{H_{\max}(A) - H(A)}{H_{\max}(A)} = 1 - \frac{H(A)}{H_{\max}(A)},$$

where $H_{\max}(A)$ is the maximum possible entropy, determined by formula (2.4), and $H(A)$ is the entropy of the source, determined by formula (2.6). The essence of this measure is that with equally probable choice, the same information load per sign can be achieved with a smaller alphabet than with unequal choice.
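The redundancy D = 1 − H(A)/Hmax(A) is easy to compute once the symbol probabilities are known. The sketch below uses formula (2.6) for H(A) and formula (2.4), log2 N, for Hmax(A); the two alphabets are made-up examples, and the function name is illustrative.

```python
import math


def redundancy(probs):
    """D = 1 - H(A) / Hmax(A), with H(A) from (2.6) and Hmax(A) = log2 N from (2.4)."""
    n = len(probs)
    if n < 2:
        raise ValueError("need at least two symbols")
    h = sum(-p * math.log2(p) for p in probs if p > 0)
    h_max = math.log2(n)
    return 1 - h / h_max


print(redundancy([0.25] * 4))                 # 0.0: equiprobable alphabet, no redundancy
print(redundancy([0.5, 0.25, 0.125, 0.125]))  # 0.125: H = 1.75 bits of a possible 2
```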

, and (2.2)![]() (2.4)

(2.4)![]()

![]()

(2.5)

(2.5) (2.6)

(2.6)