Get rid of dirty markup with the free online HTML Cleaner. This online tool makes it easy to compose, edit, format and minify web code. Convert Word documents, as well as other visual documents such as Excel, PDF and Google Docs files, to tidy HTML. The two linked editors, visual and source, respond instantly to your actions, which makes working with them simple and efficient.

HTML Cleaner is equipped with many useful features that make cleaning and editing HTML as easy as possible. Just paste your code into the text area, set up the cleaning preferences and press the Clean HTML button. It can handle documents created with Microsoft Excel, PowerPoint, Google Docs or any other composer, and it helps you get rid of the inline styles and unnecessary code added by Microsoft Word and other WYSIWYG editors. The tool is also useful when you are migrating content from one website to another and want to remove the foreign classes and IDs applied by the source site. Use the find and replace tool for your custom commands, and the gibberish text generator to add dummy text to the editor.
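For readers who want to script the same clean-up outside the tool, removing inline styles, classes and IDs can be approximated with PHP's DOM extension. This is a minimal sketch, not HTML Cleaner's own implementation; the function name stripPresentationAttributes and its default attribute list are assumptions.

```php
<?php
// Hypothetical helper: removes the listed attributes (by default the inline
// styles, classes and IDs left behind by Word or by another site) from every
// element of an HTML fragment. Requires PHP's DOM extension.
function stripPresentationAttributes(string $html, array $attributes = ['style', 'class', 'id']): string
{
    $doc = new DOMDocument();
    $previous = libxml_use_internal_errors(true); // tolerate sloppy or HTML5 markup
    // The meta tag makes libxml read the input as UTF-8; the wrapper div lets us
    // return just the fragment instead of the <html> and <body> loadHTML() adds
    $doc->loadHTML('<meta http-equiv="Content-Type" content="text/html; charset=utf-8"><div>' . $html . '</div>');
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $xpath = new DOMXPath($doc);
    foreach ($attributes as $name) {
        // Every element that carries the attribute
        foreach ($xpath->query('//*[@' . $name . ']') as $element) {
            $element->removeAttribute($name);
        }
    }

    // The first <div> in document order is the wrapper added above
    $wrapper = $doc->getElementsByTagName('div')->item(0);
    $out = '';
    foreach ($wrapper->childNodes as $child) {
        $out .= $doc->saveHTML($child);
    }
    return $out;
}
```

For example, Word's `<p class="MsoNormal" style="margin:0">` comes back as a bare `<p>`.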

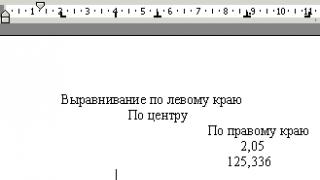

At the top of the page, the visual editor and the source code editor sit next to each other. Changes made in either one are reflected in the other in real time. The visual HTML editor lets beginners compose content just as they would in any word processor, while the source editor on the right, with syntax-highlighted markup, helps advanced users adjust the code. This also makes the program a good tool for learning HTML.

Convert Word Documents To Clean HTML

To publish PDF, Microsoft Word, Excel or PowerPoint documents online, or documents composed with any other word processor, or simply content copied from another website, paste the formatted content into the visual editor. The HTML source of the document immediately appears in the source editor as well. The control bar above the WYSIWYG editor applies to that editor, while all other cleaning settings act on the source code. Set up the cleaning preferences, click the Clean HTML button, then copy the cleaned code and publish it on your website.

There is no guarantee that the program will correct every error in your code exactly the way you want, so please try to enter syntactically valid HTML.

To convert HTML tables to structured div elements, activate the corresponding checkbox.

Cleaning Microsoft Word (2000-2007) tags out of HTML code?

In the past, web designers built their websites with tables to organize the page layout, but in the era of responsive web design table-based layouts are outdated and divs have taken their place. This online tool turns your tables into structured div elements with a few simple clicks.
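The conversion itself can be approximated in PHP with the DOM extension. In this hypothetical sketch, the function name tablesToDivs and the class names it assigns (table, row, cell) are assumptions, and the original cell attributes such as colspan are dropped; the resulting divs still need CSS (for example display: table or a grid) to reproduce the layout.

```php
<?php
// Hypothetical sketch: replaces table markup with nested <div>s whose class
// records the original role. Attributes such as colspan are dropped.
// Requires PHP's DOM extension.
function tablesToDivs(string $html): string
{
    $doc = new DOMDocument();
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML('<meta http-equiv="Content-Type" content="text/html; charset=utf-8"><div>' . $html . '</div>');
    libxml_clear_errors();
    libxml_use_internal_errors($previous);
    // The wrapper div added above
    $wrapper = $doc->getElementsByTagName('div')->item(0);

    // Table tag => class given to the div that replaces it
    $roles = [
        'table' => 'table', 'thead' => 'thead', 'tbody' => 'tbody', 'tfoot' => 'tfoot',
        'tr' => 'row', 'th' => 'cell header', 'td' => 'cell',
    ];
    foreach ($roles as $tag => $class) {
        // Copy the live node list first, because replacing nodes changes it
        foreach (iterator_to_array($doc->getElementsByTagName($tag)) as $old) {
            $div = $doc->createElement('div');
            $div->setAttribute('class', $class);
            while ($old->firstChild !== null) {
                $div->appendChild($old->firstChild); // moves the child into the div
            }
            $old->parentNode->replaceChild($div, $old);
        }
    }

    $out = '';
    foreach ($wrapper->childNodes as $child) {
        $out .= $doc->saveHTML($child);
    }
    return $out;
}
```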

You can make your source code more readable by organizing the tag hierarchy in a tree view.
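As a rough illustration of a tree view, the sketch below walks the DOM and prints each element's tag name indented by its nesting depth. The function name tagTree is hypothetical, and the tool's own tree view may format things differently.

```php
<?php
// Hypothetical helper: returns the element hierarchy below $node as an
// indented tree of tag names, two spaces per nesting level.
function tagTree(DOMNode $node, int $depth = 0): string
{
    $out = '';
    foreach ($node->childNodes as $child) {
        if ($child instanceof DOMElement) { // skip text and comment nodes
            $out .= str_repeat('  ', $depth) . $child->tagName . "\n";
            $out .= tagTree($child, $depth + 1);
        }
    }
    return $out;
}

$doc = new DOMDocument();
libxml_use_internal_errors(true); // silence warnings about sloppy markup
$doc->loadHTML('<div><ul><li>One</li><li>Two</li></ul><p>Text</p></div>');
libxml_clear_errors();
$tree = tagTree($doc->getElementsByTagName('body')->item(0));
echo $tree;
```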

Become A Member

This website is a fully functional tool for cleaning and composing HTML code, but you can also purchase an HTML G membership to access even more professional features. By using the free version of HTML Cleaner, you consent to the inclusion of links in the edited documents: the cleanup tool may add a promotional third-party link to the end of cleaned documents, and you must leave this code unchanged as long as you use the free version.

Greetings, friends! In this article you will learn how to clean up HTML code, optimize images, format and optimize meta tags correctly and make your site faster, and you will find out why the scripts on your site need optimizing.

Page code optimization is one of the important measures of internal website optimization. It improves the overall quality of the resource, speeds up page loading and makes interactive features work more efficiently.

HTML validation and standardization

Valid, standards-compliant HTML is fundamentally important for search promotion and for a positive user experience: it lets the site work equally well in any browser, on different operating systems, and on both mobile and desktop devices.

To ensure consistency, all sites are built on standard versions of HTML. The most relevant today are HTML 4.01 and HTML5. Although HTML5 was still under development at the time of writing, a huge number of sites on the web already use it.

The latest versions of all the most popular browsers, including Opera, Google Chrome and Mozilla Firefox, support HTML5. HTML5 also matters because mobile devices running Android do not support Flash, so their users cannot watch videos in SWF format.

HTML5-based sites can play videos without Adobe Flash Player having to be downloaded and installed. The move away from Flash keeps growing, so for a site to work properly it is advisable to gradually stop using Flash videos and animated Flash banners.

You can check the validity of your site's HTML code for free on the official website of the World Wide Web Consortium (W3C), for example with this validator:

validator.w3.org

Good website builders and content management systems also include built-in validators.
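The check can also be automated. The sketch below assumes the W3C Nu HTML Checker's web-service interface (POSTing the page to https://validator.w3.org/nu/?out=json and reading the messages array of the JSON reply); the function names are hypothetical, so confirm the interface against the checker's current documentation before relying on it.

```php
<?php
// Hypothetical sketch: sends a page to the W3C Nu HTML Checker and counts the
// errors and warnings in its JSON reply. Needs network access, allow_url_fopen
// and the openssl extension for https.
function validateHtml(string $html): array
{
    $context = stream_context_create(['http' => [
        'method'  => 'POST',
        'header'  => "Content-Type: text/html; charset=utf-8\r\nUser-Agent: html-check-sketch\r\n",
        'content' => $html,
    ]]);
    $json = file_get_contents('https://validator.w3.org/nu/?out=json', false, $context);
    if ($json === false) {
        throw new RuntimeException('The validator could not be reached');
    }
    $data = json_decode($json, true);
    return summarizeMessages(is_array($data) ? $data : []);
}

// Kept separate so it can be tested without network access. Errors have
// type "error"; warnings are "info" messages with subType "warning".
function summarizeMessages(array $response): array
{
    $summary = ['errors' => 0, 'warnings' => 0];
    foreach ($response['messages'] ?? [] as $message) {
        if (($message['type'] ?? '') === 'error') {
            $summary['errors']++;
        } elseif (($message['subType'] ?? '') === 'warning') {
            $summary['warnings']++;
        }
    }
    return $summary;
}
```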

Cleaning up the HTML code

While creating and later editing web pages, programmers often leave behind technical notes and comments in a hurry and forget to remove unnecessary tags. All this not only slows the site down but can also impair its functionality. Simply removing this HTML garbage can speed up page loading by as much as 35%.
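A minimal regex-based sketch of this clean-up, assuming reasonably well-formed markup: it drops developer comments (keeping Internet Explorer conditional comments) and collapses whitespace between tags. The function name removeHtmlGarbage is hypothetical, and it does not protect `<pre>` or `<textarea>` content, where whitespace matters.

```php
<?php
// Hypothetical sketch: removes developer comments and collapses whitespace
// between tags. Conditional comments (<!--[if IE]>...) are kept. Regex-based,
// so it assumes reasonably well-formed markup and does not protect <pre>.
function removeHtmlGarbage(string $html): string
{
    // Drop comments, except conditional comments, which start with "[if"
    $html = preg_replace('/<!--(?!\[if).*?-->/s', '', $html);
    // Replace any run of whitespace between two tags with a single space;
    // keeping one space avoids gluing inline elements together
    $html = preg_replace('/>\s+</', '> <', $html);
    return trim($html);
}
```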

Harmful garbage also includes broken links, which lead nowhere because the target page has been deleted. Search engines dislike such links, and their presence can hurt the site's search performance.

Because broken links appear on their own from time to time, you need to search for and remove them regularly, both external and internal ones. If your content management system cannot search for broken links, you can use free online services:

http://creatingonline.com/site_promotion/broken_link_checker.htm

http://anybrowser.com/linkchecker.html
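If you would rather script the check yourself, here is a hypothetical PHP sketch: it collects the href of every link on a page and asks each URL for its HTTP status with get_headers(). The function names are assumptions; relative links would first have to be resolved against the page address, and checking many URLs this way is slow and should be rate-limited.

```php
<?php
// Hypothetical sketch: collects the unique href values of all links on a page.
function extractLinks(string $html): array
{
    $doc = new DOMDocument();
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $links = [];
    foreach ($doc->getElementsByTagName('a') as $a) {
        $href = trim($a->getAttribute('href')); // '' when there is no href
        if ($href !== '') {
            $links[] = $href;
        }
    }
    return array_values(array_unique($links));
}

// Returns true when an absolute URL answers with a 4xx/5xx status or not at all.
function isBroken(string $url): bool
{
    $headers = @get_headers($url); // performs a real request; needs allow_url_fopen
    if ($headers === false) {
        return true;
    }
    $status = 0;
    foreach ($headers as $line) {
        // One status line per response; after redirects the last one counts
        if (preg_match('#^HTTP/\S+\s+(\d{3})#', $line, $m)) {
            $status = (int) $m[1];
        }
    }
    return $status === 0 || $status >= 400;
}
```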

Graphic Content Optimization

Graphics also need attention: every image has to be processed appropriately before it is published on the site.

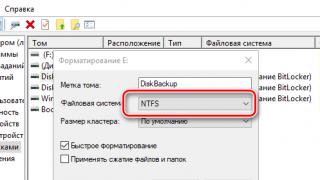

- For photos, it is advisable to use the JPEG format, since it gives the best quality at a minimal file size.

- For pictures where quality is not very important, you can choose the PNG format, provided the files are no larger than 100 to 200 KB.

- By modern standards, all graphic files should be saved in compressed form in a separate directory on the server rather than embedded in the web pages.

After editing a page that contains images, check its load speed. The page should appear in the browser within five seconds at most; otherwise the bounce rate increases dramatically.

All pictures and photos also carry descriptive attributes that should be optimized with keywords so the images can be found in search. The main requirement here is uniqueness: every picture should have its own title, tooltip and alternative text.
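The uniqueness requirement is easy to check automatically. This hypothetical sketch (function name auditImageAlts, PHP 7.4+ with the DOM extension) reports images with missing alt text and alt texts shared by several images; title attributes could be checked the same way.

```php
<?php
// Hypothetical checker: reports images whose alt text is missing or empty,
// and alt texts that are used by more than one image.
function auditImageAlts(string $html): array
{
    $doc = new DOMDocument();
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $missing = [];
    $seen = [];
    foreach ($doc->getElementsByTagName('img') as $img) {
        $src = $img->getAttribute('src');
        $alt = trim($img->getAttribute('alt'));
        if ($alt === '') {
            $missing[] = $src;
        } else {
            $seen[$alt][] = $src; // group image sources by alt text
        }
    }
    // Keep only alt texts shared by two or more images
    $duplicates = array_filter($seen, fn(array $sources): bool => count($sources) > 1);
    return ['missing' => $missing, 'duplicates' => $duplicates];
}
```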

Web page meta tags

Meta tags must be unique for every page of the site. When a search engine finds duplicate tags, it glues those pages together, and some of them will not be indexed. Experiments have shown that unique meta tags improve rankings and traffic by about 18%.

Pay particular attention to optimizing the Title and Description tags:

- The page title should not exceed the number of characters the search engine displays, and it should contain the main keyword.

- The optimal number of words in a title is six.

- The page description is written as a short piece of sales copy for the landing page and usually consists of two short sentences: the first contains the main keyword, the second an additional one.

- The Keywords meta tag is not very important, but it is worth including just in case.

I, for example, removed the part of the engine's code that is responsible for meta tags altogether.
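A hypothetical audit of these rules across several pages is sketched below. The function names and the 60-character title limit are assumptions: search engines do not publish a single fixed limit and tend to truncate titles by display width rather than by character count.

```php
<?php
// Hypothetical helper: reads the <title> and meta description of one page.
function readMeta(string $html): array
{
    $doc = new DOMDocument();
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $titleNode = $doc->getElementsByTagName('title')->item(0);
    $description = '';
    foreach ($doc->getElementsByTagName('meta') as $meta) {
        if (strtolower($meta->getAttribute('name')) === 'description') {
            $description = trim($meta->getAttribute('content'));
        }
    }
    return ['title' => $titleNode ? trim($titleNode->textContent) : '', 'description' => $description];
}

// $pages maps a URL to its HTML; returns human-readable problems.
function auditMeta(array $pages, int $maxTitleLength = 60): array
{
    $problems = [];
    $titles = [];
    $descriptions = [];
    foreach ($pages as $url => $html) {
        $meta = readMeta($html);
        $length = preg_match_all('/./us', $meta['title']); // characters, not bytes
        if ($meta['title'] === '') {
            $problems[] = "$url: missing title";
        } elseif ($length > $maxTitleLength) {
            $problems[] = "$url: title is $length characters long";
        }
        if ($meta['description'] === '') {
            $problems[] = "$url: missing description";
        }
        $titles[$meta['title']][] = $url;
        $descriptions[$meta['description']][] = $url;
    }
    // Identical non-empty titles or descriptions on several pages
    foreach (['title' => $titles, 'description' => $descriptions] as $kind => $groups) {
        foreach ($groups as $text => $urls) {
            if ($text !== '' && count($urls) > 1) {
                $problems[] = "duplicate $kind on " . implode(', ', $urls);
            }
        }
    }
    return $problems;
}
```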

Optimization of program elements: scripts

The principles of script optimization are the same as for graphics: do not embed scripts in the page; save them in compressed form in a separate directory.

Your page should be clean: a search robot visiting your site should see only the article and the necessary meta tags. Therefore, put all scripts, counters and the like in a separate file.
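One way to follow this advice on an existing page is to move inline `<script>` blocks into a separate file automatically. In this hypothetical sketch the function name and the /js/inline.js path are assumptions; it treats every inline script as JavaScript, and moving scripts can change their execution order, so test the page afterwards.

```php
<?php
// Hypothetical sketch: moves the code of inline <script> blocks into one
// external file and leaves a single <script src="..."> reference before </body>.
// Assumes every inline script is JavaScript (JSON-LD or template scripts would
// need to be skipped) and that moving it does not break execution order.
function externalizeScripts(string $html, string $scriptUrl = '/js/inline.js'): array
{
    $code = [];
    // <script> tags without a src attribute, together with their content
    $html = preg_replace_callback(
        '#<script\b(?![^>]*\bsrc=)[^>]*>(.*?)</script>#is',
        function (array $m) use (&$code) {
            $code[] = trim($m[1]);
            return ''; // remove the inline block from the page
        },
        $html
    );

    if ($code !== []) {
        $tag = '<script src="' . htmlspecialchars($scriptUrl) . '"></script>';
        $pos = strripos($html, '</body>');
        $html = $pos === false ? $html . $tag : substr_replace($html, $tag, $pos, 0);
    }
    // "\n;\n" keeps one script's last statement or // comment from running into the next
    return ['html' => $html, 'js' => implode("\n;\n", $code)];
}
```

The returned js string is what you would save, ideally minified, under the chosen path.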

Text content optimization

Absolutely everyone faces the task of cleaning HTML of unnecessary tags.

The first thing that comes to mind is PHP's strip_tags() function:

strip_tags(string $string, array|string|null $allowed_tags = null): string

The function returns the string with its tags stripped; the optional second parameter lists the tags that should be kept. The function works but is, to put it mildly, imperfect: it does not check the markup for validity, so broken code can lead to the removal of text that is not inside any tag.
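A short illustration of the allowed-tags parameter and of the imperfections mentioned above. The array form of the second parameter needs PHP 7.4 or later. Note that strip_tags() removes the `<script>` tags but keeps the code between them as text, and a "<" followed by a non-space character swallows the rest of the string.

```php
<?php
$html = '<p>Hello, <b>bold</b> and <a href="/x">linked</a> text</p><script>alert(1)</script>';

// No second argument: every tag is removed, but the script body stays as text
$plain = strip_tags($html);

// Allowed tags as a string...
$keepBold = strip_tags($html, '<b><i>');
// ...or, since PHP 7.4, as an array of tag names
$keepBoldArray = strip_tags($html, ['b', 'i']);

// strip_tags() does not validate markup: "<" followed by a non-space
// character starts a "tag" that here never ends, so the rest is lost
$broken = strip_tags('Price <100 USD for two');
```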

Enterprising developers have not sat idly by, and improved functions can be found online. A good example is strip_tags_smart.

Whether to use ready-made solutions is the programmer's personal choice. As it happens, I rarely need a "universal" handler, and I find it more convenient to clean up code with regular expressions.

What determines the choice of processing method?

1. The source material and how complex it is to analyze.

If you need to process fairly simple HTML texts without any fancy layout, then, clear as day :), you can use the standard functions.

If the texts have particular features that must be taken into account, special handlers are written for them. Some may simply use str_replace(). For example:

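```php
// $htmlText holds the text to clean. Each key is a sequence to find and
// each value its replacement. The pairs are applied in order, so a longer
// sequence must come before a shorter one that is its prefix.
$s = array(
    // UTF-8 text that was mis-read as Windows-1252 ("mojibake"); the keys are
    // written as \u{...} escapes so they survive re-encoding of this file
    "\u{00E2}\u{20AC}\u{2122}" => "'",        // "â€™" right apostrophe (e.g. in I'm)
    "\u{00E2}\u{20AC}\u{0153}" => '"',        // "â€œ" opening speech mark
    "\u{00E2}\u{20AC}\u{201C}" => "\u{2014}", // "â€“" en dash, written as a long dash
    "\u{00E2}\u{20AC}\u{201D}" => "\u{2014}", // "â€”" em dash (long dash)
    "\u{00E2}\u{20AC}"         => '"',        // "â€" closing speech mark (last byte lost)
    "\u{00C3}\u{00A9}"         => "\u{00E9}", // "Ã©" e acute accent
    // Correctly encoded UTF-8 punctuation, flattened to ASCII quotes
    chr(226) . chr(128) . chr(153) => "'",        // right apostrophe again
    chr(226) . chr(128) . chr(147) => "\u{2014}", // en dash, written as a long dash
    chr(226) . chr(128) . chr(156) => '"',        // opening speech mark
    chr(226) . chr(128) . chr(157) => '"',        // right speech mark
);

foreach ($s as $needle => $replace) {
    $htmlText = str_replace($needle, $replace, $htmlText);
}
```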

Others may be based on regular expressions, for example:

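```php
function getTextFromHTML($htmlText)
{
    $search = array(
        "'<script[^>]*?>.*?</script>'si", // Remove scripts with their code
        "'<style[^>]*?>.*?</style>'si",   // Remove style blocks with their CSS
        "'<[\/\!]*?[^<>]*?>'si",          // Remove HTML tags
        "'([\r\n])[\s]+'",                // Collapse whitespace after line breaks
        "'&(quot|#34);'i",                // Replace HTML special chars
        "'&(lt|#60);'i",
        "'&(gt|#62);'i",
        "'&(nbsp|#160);'i",
        "'&(iexcl|#161);'i",
        "'&(cent|#162);'i",
        "'&(pound|#163);'i",
        "'&(copy|#169);'i",
    );

    $replace = array(
        "",
        "",
        "",
        "\\1",
        "\"",
        "<",
        ">",
        " ",
        "\u{00A1}", // chr(161) etc. would be invalid bytes in UTF-8 text
        "\u{00A2}",
        "\u{00A3}",
        "\u{00A9}",
    );

    $text = preg_replace($search, $replace, $htmlText);

    // The /e ("write as PHP") modifier was removed in PHP 7, so other
    // numeric entities such as &#8364; are decoded in a callback instead
    $text = preg_replace_callback("'&#(\d+);'", function ($m) {
        return html_entity_decode($m[0], ENT_QUOTES | ENT_HTML5, 'UTF-8');
    }, $text);

    // &amp; goes last so that "&amp;lt;" becomes "&lt;" rather than "<"
    return preg_replace("'&(amp|#38);'i", "&", $text);
}
```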

(At times like these, it is especially handy that preg_replace() accepts arrays as parameters.) If necessary, extend the arrays with your own regular expressions; this regular expression constructor, for example, can help you compose them. Beginning developers may find the article "All about HTML tags. 9 Regular Expressions to strip HTML tags" helpful: look there for examples and analyze the logic.

2. The volume of texts.

Volume is directly related to the complexity of the analysis (see the previous point). The more texts there are, the more likely it is that, while trying to foresee and clean up everything with regular expressions, you will miss something. In this case, "multi-stage" cleaning works well: first clean the texts, for example with the strip_tags_smart function (without deleting the sources, just in case); then selectively review a number of texts to identify "anomalies"; finally, clean up the anomalies with regular expressions.

3. The result you need to get.
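The three stages can be sketched as follows. Everything here is an assumption for illustration: the function name, the anomaly pattern (leftover entities, stray angle brackets, the "â€" mojibake prefix) and the use of strip_tags() in place of strip_tags_smart, whose code is not shown here.

```php
<?php
// Hypothetical multi-stage cleaner. Stage 1 uses strip_tags() as a stand-in
// for strip_tags_smart; $fixes maps regex patterns to replacements found
// during the review. PHP 7.4+ (arrow function).
function cleanInStages(array $sources, array $fixes): array
{
    // Stage 1: bulk clean; $sources is passed by value, so the originals survive
    $cleaned = array_map('strip_tags', $sources);

    // Stage 2: flag texts that still look suspicious (leftover entities,
    // stray angle brackets, the "â€" mojibake prefix) for manual review
    $anomaly = '/&[a-z#0-9]+;|[<>]|\x{00E2}\x{20AC}/ui';
    $flagged = array_keys(array_filter(
        $cleaned,
        fn(string $text): bool => preg_match($anomaly, $text) === 1
    ));

    // Stage 3: apply the targeted regex fixes to the flagged texts only
    foreach ($flagged as $key) {
        $cleaned[$key] = preg_replace(array_keys($fixes), array_values($fixes), $cleaned[$key]);
    }
    return ['cleaned' => $cleaned, 'flagged' => $flagged];
}
```

In practice you would inspect the flagged texts between stages 2 and 3 and grow $fixes from what you find.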

The processing algorithm can be simplified in different ways depending on the situation. The case I described in , demonstrates this well. Let me remind you that the text there was in a div which, besides the text, also contained a div with "breadcrumbs", AdSense ads and a list of similar articles. Analyzing a sample of the articles showed that they contain no pictures and are simply divided into paragraphs with `<p>` tags. So instead of cleaning extraneous things out of the "main" div, you can find all the paragraphs (which is very easy) and put their contents together.
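Collecting the paragraphs takes only a few lines with DOMXPath. In this hypothetical sketch the container id content is an assumption (use whatever identifies the main div on your pages), and any `<p>` inside the ads or breadcrumbs would be picked up too.

```php
<?php
// Hypothetical sketch: instead of cleaning ads, breadcrumbs and related-article
// lists out of the main div, take the text of every <p> inside it and join it.
function paragraphsText(string $html, string $containerId = 'content'): string
{
    $doc = new DOMDocument();
    $previous = libxml_use_internal_errors(true);
    // The meta tag makes libxml read the input as UTF-8
    $doc->loadHTML('<meta http-equiv="Content-Type" content="text/html; charset=utf-8">' . $html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $xpath = new DOMXPath($doc);
    $parts = [];
    // All <p> descendants of the container, in document order
    foreach ($xpath->query('//*[@id="' . $containerId . '"]//p') as $p) {
        $text = trim($p->textContent);
        if ($text !== '') {
            $parts[] = $text;
        }
    }
    return implode("\n\n", $parts);
}
```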

In general, real holy wars flare up online between supporters of parsing HTML code purely with regular expressions and supporters of parsing based on analysis of the document's DOM structure. Here, for example, is one on Stack Overflow. Innocent at first sight