The concept of a data warehouse

A "data warehouse" is a domain-specific, time-bound, and immutable collection of data to support management decision making.

The data in the storage comes from operational systems (OLTP systems), which are designed to automate business processes. In addition, the repository can be replenished from external sources, such as statistical reports, various directories, etc. The data warehouse, in addition to detailed information, contains aggregates, i.e. summarizing information, such as sales amounts, quantities, total expenses, etc.

A tax data warehouse should be viewed as an information hub that automates the calculation of deferred taxes, accepts and stores information from external sources, and transforms the data into a user-friendly format. Such a repository is a platform for storing accurate and up-to-date tax data that can be retrieved and transferred to external applications for the purposes of analysis, audit, planning and forecasting.

The data warehouse is a repository information resources and provides enterprise data consolidation for reporting and analysis purposes. Data and information, both operational and non-operational, are entered into the warehouse, usually using ETL tools, from sources, data as it becomes available, or on a regular basis. Data transformation allows you to process requests and analyze them in a timely manner, which simplifies and speeds up the process of fulfilling requests for information originally received from other sources.

The benefits of a repository include the ability to transform data into quality information needed to prepare tax reporting and compliance with tax laws, for users of all levels. Any interested parties - customers, partners, employees, managers and leaders - can receive interactive content anytime and anywhere.

The very existence of a single source of information for tax reporting and tax compliance is a big step forward for many tax authorities.

Why is it necessary to build data warehouses - after all, they contain obviously redundant information that is already in the databases or files of operating systems? It is impossible or very difficult to analyze data from operational systems directly. This is due to various reasons, including the fragmentation of data and their storage in different DBMS formats. But even if all the data in the enterprise is stored on a central database server, the analyst will almost certainly not understand their complex, sometimes confusing structures.

Thus, the task of the repository is to provide the "raw material" for analysis in one place and in a simple, understandable structure.

There is another reason that justifies the appearance of a separate storage - complex analytical queries for operational information slow down the current work of the company, blocking tables for a long time and seizing server resources.

Under the storage can be understood not necessarily a giant accumulation of data - the main thing is that it be convenient for analysis.

Data warehouse concept

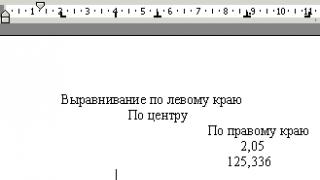

The author of the concept of data warehouses ( Data Warehouse) is B. Inmon, who defined data warehouses as: “domain-oriented, integrated, immutable, historical data sets organized for management support purposes”, designed to act as a “single and only source of truth”, providing managers and analysts with reliable information necessary for operational analysis and decision-making. The data warehouse schema can be represented as follows:

The physical implementation of this scheme can be very diverse. Let's consider the first option - a virtual data warehouse, this is a system that provides access to a conventional registration system that emulates working with a data warehouse. Virtual storage can be organized in two ways. You can create a series of "views" (view) in the database or use special means database access (for example, products of the desktop OLAP class).

Because building a data warehouse is a complex process that can take years, some organizations instead build data marts containing information for specific departments. For example, a marketing data mart might only contain customer, product, and sales information and not include supply plans. Multiple data marts for departments can coexist with the main data warehouse, giving a partial view of the contents of the warehouse. Data marts are much faster to build than storage, but there can be significant integration issues later if the initial planning was done without considering the full business model. This is the second way.

Building a full-fledged enterprise data warehouse is usually done in a three-tier architecture. At the first level, various data sources are located - internal recording systems, help systems, external sources (data news agencies, macroeconomic indicators). The second level contains a central repository, where information from all sources from the first level flows, and, possibly, an operational data warehouse that does not contain historical data and performs two main functions.

The concept of data warehouses is based on two fundamental ideas:

1) integration of previously separated detailed data in a single data warehouse, their coordination and, possibly, aggregation:

historical archives;

data from traditional ODS;

data from external sources.

2) separation of data sets used for operational processing and data sets used to solve analysis problems.

The purpose of the concept of data warehouses is to find out the requirements for data placed in the target database of the data warehouse (Table 1), to determine the general principles and stages of its construction, the main sources of data, to give recommendations on solving potential problems that arise during their unloading, cleaning, coordination, transportation and loading into the target database.

Table 1. Basic requirements for data in the Data Warehouse.

| Subject orientation | All data about a certain subject (business object) is collected (usually from a set various sources), are cleared, coordinated, supplemented, aggregated and presented in a single form convenient for their use in business analysis. |

| Integration | All data about different business objects are mutually coordinated and stored in a single corporate-wide Storage. |

| immutability | The initial (historical) data, after they have been agreed, verified and entered into the corporate-wide Storage, remain unchanged and are used exclusively in read mode. |

| Timeline support | The data is chronologically structured and reflects the history, for a sufficient period of time to complete the tasks of business analysis and forecasting. |

The subject of the concept of data warehouses is the data itself. After the traditional data processing system (DPS) is implemented and begins to function, it becomes exactly the same independent object of the real world as any manufacturing process. And the data, which is one of the final products of such production, has exactly the same properties and characteristics as any industrial product: shelf life, storage (storage) place, compatibility with data from other industries (SOD), market value, transportability, completeness, maintainability, etc.

It is from this point of view that data in data warehouses is considered. That is, the goal here is not ways to describe and display objects subject area, but the data itself, as an independent object of the subject area generated as a result of the functioning of previously created information systems.

For correct understanding This concept requires clarification of the following fundamental points:

· The concept of data warehousing is not a concept of data analysis, rather it is a concept of preparing data for analysis.

· The concept of data warehouses does not predetermine the architecture of the target analytical system. It talks about what processes should be running on the system, but not exactly where and how these processes should run.

· The concept of data warehouses involves not just a single logical view of the organization's data, but the implementation of a single integrated data source.

except single directory metadata, means of uploading, aggregating and reconciling data, the concept of data warehouses implies: integration, immutability, chronology support and data consistency. And if the first two properties (integration and immutability) affect data analysis modes, then the last two (chronology support and consistency) significantly narrow the list of analytical tasks to be solved.

Without the support of chronology (the availability of historical data), it is impossible to talk about solving the problems of forecasting and trend analysis. But the most critical and painful are the issues related to data reconciliation.

The main requirement of the analyst is not so much efficiency as the reliability of the answer. But credibility is ultimately determined by consistency. Until work has been done to mutually agree on the values of data from various sources, it is difficult to talk about their reliability.

Often, a manager is faced with a situation where different systems can and usually give a different answer to the same question. This may be due to the non-synchronism of the moments of data modification, differences in the interpretation of the same events, concepts and data, changes in the semantics of data during the development of the subject area, elementary errors during input and processing, partial loss of individual fragments of archives, etc. It is obvious that it is not realistic to take into account and determine in advance the algorithms for resolving all possible collisions. Moreover, it is impossible to do in operational mode, dynamically, directly in the process of generating a response to a request.

Similar information.

Note that data warehousing is an evolving technology. As for any developing technology, a certain amount of caution should be present when evaluating the actions of HD software vendors trying to position themselves among competitors. For example, discussions about the size of HD - from what size data store can be considered as a storage? With 50 GB? Note that in some areas of research, the size of the analyzed array can be very small. There is simply no data. And the analysis of such an array is possible.

Consider the main elements of the concept of data warehousing.

Extracting data from operating systems

The main element of the concept of data warehousing is that the most efficient access to data stored for analysis can only be provided if it is separated from the operating (transactional) system, i.e. data from the operating system must be moved to a separate data storage system. This approach is historical. Due to limitations in hardware and technology, in order to ensure the performance of a transactional system, data was archived on tapes or media outside of such a system. The problem of access to them required certain technological solutions.

It should be noted that with the development of the concept, the position of separating data for analysis from data in the OLTP system has undergone little change. It became more formal and enriched by the use of funds multivariate analysis data. Currently, a data warehouse can be built both on an existing OLTP system, and above it, and as an independent object. This should be decided by the IT project manager as part of the choice of the DW architecture.

The need to integrate data from multiple OLTP systems

Data Warehousing Systems are most useful when data can be retrieved from more than one OLTP system. When data needs to be collected from multiple business applications, it is natural to assume that this needs to be done in a different location from where the original applications are localized. Even before the creation of structured data warehouses, analysts in many cases combined data extracted from different systems, into one spreadsheet or database. A data warehouse can very effectively bring together data from specific applications such as sales, marketing, finance, manufacturing, taking into account their accumulation, i.e. save time series of key business indicators - the so-called historical data.

Note that one of the properties of data collected from various applications and used by analysts is the ability to cross-query such data. In many data warehouses, the "time" attribute is a natural criterion for filtering data. Analysts are interested in the behavior of time series of data characterizing business processes.

The goal of many data warehousing systems is a year-by-year review of activities. For example, you can compare sales during the first quarter of this year with sales during the first quarter of previous years. Time in HD is a fundamental attribute cross queries. For example, an analyst might try to evaluate the impact of a new marketing campaign taking place during certain periods by considering sales during the same periods. The ability to establish and understand the correlation between the activities of various departments in an organization is often cited as one of the most important arguments about the benefits of data warehousing systems.

Data Warehousing System not only can it work as an efficient platform for consolidating data from various sources, but it can also collect multiple versions of data from a single application. For example, if the organization has switched to new software, then the data warehouse will save the necessary data from previous system. In this respect data storage system can serve as a means of integrating inherited data, maintaining the continuity of analysis when changing the hardware and software platform of the OLTP system.

Differences between transactional and analytical data processing

One of the most important reasons for separating data for analysis from data for OLTP systems was the potential drop in query processing performance when running data analysis processes. High performance and short response times are critical parameters of OLTP systems. The performance penalty and overhead associated with processing predefined queries is usually easy to estimate. On the other hand, queries for analyzing data in a data warehouse are difficult to predict, and therefore, it is difficult for them to estimate the query execution time.

OLTP systems are designed to optimally execute predefined queries in near real-time mode of operation. For such systems, it is usually possible to determine the distribution of load over time, determine the time of peak loads, evaluate critical queries and apply optimization procedures supported by modern DBMS to them. It is also relatively easy to determine the maximum allowable time response to specific request in system. The cost of response time for such a request can be estimated based on the ratio of the cost of performing I / O operations / the cost of traffic over the network. For example, for an order processing system, you can specify the number of active order managers and the average number of orders during each hour of operation.

Although many of the inquiries and reports in data storage system are predefined, it is almost impossible to accurately predict the behavior of system metrics (response time, network traffic, etc.) when they are executed. The process of data exploration in a data warehouse often occurs in an unpredictable way. Leaders of all ranks know how to ask unexpected questions. During the parsing process, ad hoc queries may occur, which are caused by unexpected results or a lack of understanding by the end user of the data model being used. Further, many of the analysis processes tend to take into account many aspects of the organization's activities, while OLTP systems are well segmented by activity. The user may need more detailed information than stored in the summary tables. This can lead to joining two or more huge tables, which will result in the creation of a temporary table equal to the product of the number of rows in each table, and will drastically reduce system performance.

Data in data warehouse systems remains unchanged

Another key data property in data storage system is that the data in the HD remains unchanged. This means that once the data is in the data warehouse, it cannot be changed. For example, the order status does not change, the order size does not change, etc. This characteristic of the data warehouse is of great importance for the selection of data types when placing them in the data warehouse, as well as the choice of the time when the data should be entered in the data warehouse. The last property is called data granularity.

Consider what it means for data to be immutable. In an OLTP system, data objects go through constant changes to their attributes. For example, an order may change its status many times before being placed. Or, when a product is assembled on an assembly line, many manufacturing steps are applied to it. Generally speaking, data from an OLTP system needs to be loaded into the data warehouse only when its processing within the framework of business processes is fully completed. This may mean the completion of an order or a product cycle. Once an order is completed and shipped, it is unlikely to change its status. Or, once a product is assembled and put into storage, it is unlikely to make it to the first stage of the assembly cycle.

Other good example there may be a snapshot of constantly changing data at certain points in time in the HD. The inventory management module in an OLTP system can change inventory in almost every transaction; it is impossible to bring all these changes to the HD. You can specify that such a snapshot of the inventory status should be entered into the warehouse every week or daily, as it will be accepted for analysis in a particular organization. The data of such a snapshot, of course, is immutable.

After the data is entered into the data warehouse, its modification is possible in extremely rare cases. It is very difficult (although there are such attempts) to maintain dynamic data in a data warehouse. Synchronization task frequently changed data in OLTP systems and is still far from an acceptable solution. It should also be mentioned here that the placement of dynamically changing data in a data warehouse is currently the subject of intensive research. For example, the development of procedures for supporting slowly changing dimension tables in a data warehouse is a task that is already being solved at the software level of manufacturers of solutions in the field of data storage.

Data in the data warehouse is stored for a much longer time than in OLTP systems

Data in most OLTP systems is archived as soon as it becomes inactive. For example, an order may become inactive after it is completed; a bank account may become inactive after it has been closed. The main reason for archiving data at rest is the performance of the OLTP system (why store data if it's not being accessed). Large amounts of such data can significantly degrade query performance, assuming only active data is being processed. To process such data in the DBMS, various procedures for splitting base tables into sections are offered. On the other hand, since data warehouses are intended, in particular, to be an archive for OLTP data, data is stored in them for a very long period.

In fact, the project data warehousing systems can start without any specific plan for archiving data from the DW. The cost of maintaining the data after it has been loaded into the warehouse is low. The largest cost of creating a repository falls on data transformation( data transfer ) and their cleaning ( data scrubbing ). Data retention of five or more years is typical for data warehousing systems. Therefore, the procedures for archiving data from the data warehouse at the stages of their creation and operation at the beginning of the period can not be given much time. Especially when you consider the decline in prices for computer hardware.

In other words, separating OLTP system data from analysis system data is a fundamental concept of data warehousing. Business today is impossible without making informed decisions. Such solutions can be built on the basis of a comprehensive analysis of the results of business processes in the organization and the organization's activities in the market of goods and services. Decision-making time in modern conditions and information flows is reduced. The role of creation and support data analysis systems based on new information technologies increases. HD is one of the main links in the application of such technologies.

Can be distinguished the following reasons to separate data data warehousing systems And operational data processing systems(Fig. 1.5).

- The difference in target requirements for and OLTP systems.

- The need to collect data in the data warehouse from various information sources, i.e. if the data is generated in the OLTP system itself, then for data warehousing systems in most cases the data is generated outside of it.

- Data, getting into the data warehouse, in most cases remain unchanged.

- Data in the HD is stored for a long time.

Rice. 1.5.

Logical OLTP Data Transformation and Data Modeling

Data in a data warehouse is logically transformed when it is transferred to it from an OLTP system or other external source. The problems associated with the logical transformation of data when transferring them to a data warehouse may require significant analysis and efforts by designers. Architecture data warehousing systems and HD models are critical to the success of such projects. Below, some fundamental concepts of the relational database theory will be considered, which are not completely applicable to data warehousing systems. Even though most data warehouses are deployed on relational databases, some basic principles of relational databases are deliberately violated when creating a logical and physical model of a data warehouse.

The data model of a data warehouse defines its logical and physical structure. Unlike simply archived data, this case it is impossible to do without detailed modeling procedures. Such modeling in the early stages of a storage system design is necessary to create an effective system that captures data from all business processes and procedures of an organization.

The data modeling process should structure the data in the data warehouse in a form independent of relational model data of the system that supplies this data. As will be shown below, the data warehouse model is likely to be less normalized than the data source OLTP system model.

In OLTP systems, data for different subsystems can overlap significantly. For example, product development information is used in various forms in many subsystems of an OLTP system. Data Warehousing System should consolidate all such data in one system. Some object attributes that are essential for an OLTP system will be unnecessary for a data warehouse. New attributes may appear as the entity in the DW changes its quality. The main requirement is that all data in the data warehouse must participate in the analysis process.

The data model of the DW needs to be extended and structured so that data from different applications can be added. A data warehouse project in most cases cannot include data from all possible business applications of an organization. Usually, the amount of data in the data warehouse increases according to the increment principle: data is extracted from OLTP systems and added to the data warehouse in certain portions. They start by saving especially significant data, then systematically increase their volume as necessary.

The data warehouse model adjusts to the business structure

Next important point lies in the fact that the logical model of the data warehouse is tuned to the business structure (focused on the subject area), and not to the aggregation of the logical models of specific applications. The entities (objects) supported in the data warehouse are similar to the entities (objects) of a business - such as customers, products (goods), orders and distributors. Within specific organizational units, there may be a very narrow view of the organization's business entities, such as customers. For example, a loan service team at a bank may know something about a customer only in the context of one or more loans. Another division of the same bank may know about the same customer in the context deposit account. The presentation of customer data in the data warehouse is much larger than that of a specific bank unit. The client in the CD represents the client of the bank in all his relationships with the bank. From the point of view of relational theory, the basic set of functional dependencies supported in the database is changing.

Data warehouse should be built on the attributes of business entities (subject-oriented), collecting data about these entities from various sources. The data structure of any single data source is likely to be inadequate for a DW. per data structure specific application influenced by factors such as:

- type of specific business process. Yes, in automated subsystem procurement data structure may be dictated by the nature of procurement business procedures in a given market sector;

- model influence operating systems. For example, original application can be quite old and take into account the development of the data model by changing the structure of the application database. Many such changes may be poorly documented;

- limitations of the software and hardware platform. The logical data structure may not support some logical relationships between data or may have limitations associated with the limitations of the software and hardware platform.

The DW model is not bound by the limitations of the source data models. For it, a model should be developed that reflects the structure of the organization's business, and not the structure of the business process. Such extended model data should be understandable to both analysts and managers. Thus, the data warehouse designer must configure the data warehouse objects to the organization's business structure, taking into account its business processes and business procedures.

Transforming Information Describing the State of Objects in an OLTP System

The next important point is that before placing the data in the data warehouse, they must be converted. Most data from an OLTP system or other external source cannot be maintained in a data warehouse. Many of the object attributes in an OLTP system are very dynamic and constantly changing. Many of these attributes are not loaded into the DW, while others are static in time and are loaded into the DW. The data warehouse should not contain information about objects that are dynamic and are constantly in a state of modification.

To understand what it means to lose information describing Current state object, consider an example of an order management system that tracks stock status while filling an order. First, consider the "Order" entity in the OLTP system. An order can go through many different statuses or states before it is completed and completed status. The status of an order may indicate that it is ready to be filled, that the order is being filled, that it has been returned for revision, that it is ready for shipment, and so on. A particular order can go through many states, which are reflected in the status of the order and are determined by the business processes that were applied to it. It is practically impossible to transfer all the attributes of such an object to the data warehouse. Data Warehousing System, should probably contain only one end snapshot of an object such as an order. Thus, the "Order" object must be converted to be placed in the data warehouse. Then information about many objects of the "order" type can be collected in the data warehouse and the final data warehouse object, the "Order", can be built.

Consider a more complex example data transformation when managing a stock of goods in an OLTP system. The stock may change in each transaction. The quantity of a particular product in the warehouse can be reduced by the transaction of the order filling subsystem or increased when the purchased product arrives. If the order processing system performs tens of thousands of transactions per day, then it is likely that the actual inventory level in the database will have many states and be captured in many snapshots during that day. It is impossible to commit all these permanent changes to the database and transfer them to the data warehouse. Displaying this behavior of an object in the system - the data source is still one of the unsolved problems in data warehousing systems. There are a number of approaches to solving this problem. For example, you can periodically capture inventory level snapshots in the warehouse.

This approach can be applied to a very large part of the data in OLTP systems. In turn, such a decision will entail a number of tasks related to the choice of the time period, the amount of data to be captured, etc. Thus, most of the data about the state of objects in the OLTP system cannot be directly transferred to the data warehouse. They must be converted at the logical level.

Data Model Denormalization

The next point in the design of relational data warehouses is to decide how important it is in a data warehouse to comply with the principles of relational theory, namely: to allow denormalization of the model, in particular, to increase query performance. Before we look at denormalization of a data model in the context of data warehousing, let's briefly review the main points of relational database theory and normalization process. E.F. Codd developed relational database theory in the late 1960s while working at an IBM research center. Today, most popular database platforms follow this model completely. The relational database model is a collection of two-dimensional tables consisting of rows and columns. In the terminology of the relational model, these tables, rows and columns are respectively called relations or entities, tuples, attributes (attribute) and domains (domain). The model identifies unique keys (Key) for all tables and describes relationships between tables through attribute (key) values.

Normalization is a relational database modeling process where relationships or tables are partitioned until all attributes in a relation are fully determined by its primary key. Most designers try to reach third normal form (3NF) on all relationships before they denormalize for one reason or another. The three successive stages of relational database normalization are briefly described below.

- First normal form 1NF ( 1NF ). A relation is said to be in first normal form if it describes a single entity and does not contain arrays or repeating groups of values as attributes. For example, an order table that includes order items will not be in first normal form because it contains duplicate attributes for each order item.

- Second normal form 2NF (2NF). It is said that the relation is in second normal form if it is in first normal form and all non-key attributes are functionally fully dependent on primary key of the relationship.

- third normal form 3NF (3NF). It is said that the relation is in third normal form if it is in second normal form and its non-key attributes are completely independent of each other.

The normalization process results in the splitting of the original relation into several independent relations. Each relation in the database is answered by at least one table. While the relational model is more flexible in representing data, it can be more complex and difficult to understand. Also, a fully normalized model can be very inefficient to implement. Therefore, database designers, when converting a normalized logical model in the physical model allow significant denormalization. The main purpose of denormalization is to limit inter-table joins in queries.

Another reason for denormalizing the HD model is, just like for operating systems, performance and simplicity. Each query in a relational database has its own cost performance. The cost of executing queries is very high in the data warehouse due to the amount of data processed in the query (and inter-table joins, the number of which grows in proportion to the dimension of the model). Joining three small tables in an OLTP system can have an acceptable query cost, but data storage system making such a connection can take a very long time.

Static relationships in historical data

Denormalization is important process in data warehouse modeling: the relationship between attributes does not change for historical data. For example, in OLTP systems, a product could be part of another product in group "A" this month and part of a product in group "B" next month. In a normalized data model, to display this fact, it is necessary to include the attribute "group of goods" in the relation (entity) "product", but not in the relation (entity) "order", which generates orders for this product. Only the product identifier is included in the "order" entity. Relational theory would require a join between the "Order" and "Item" tables to determine the product group and other attributes of that product. This fact ( functional dependency) does not matter for the data warehouse, since the data stored refers to already completed orders, i.e. the belonging of the product to the group has already been fixed (in fact, the specified functional dependency is not supported). Even if the item belonged different groups at different times, the relationship between the product group and the product of each individual order is static. Thus, this is not a denormalization for a DW. In this case, the functional dependency of the OLTP system is not used in the data warehouse.

As another example, take the price of a commodity. Prices in the OLTP system can change constantly. Some of these price changes can be transferred to the data warehouse as periodic snapshots of the "Product price" table. In the data warehouse, the history of the product price list is fixed and is already linked to orders, i.e. there is no need to dynamically determine the price list when processing the order, since it has already been applied to the saved order. In relational databases, it is easier to maintain dynamic relationships between business entities, while a data warehouse contains relationships between domain entities at the given time.

Concept logical conversion source application data, discussed above, requires some effort to implement and is very useful when developing a data warehouse.

Physical transformation of source application data

An important point in data warehousing systems is the physical transformation of the data. These procedures in data warehousing are known as "data scrubbing", "data staging", or "data purge" processes. The data cleansing process is the most intensive and time consuming in any data warehouse project. Physical transformation includes the use of standard data domain terms and data standards. During the physical transformation process, the data is in some intermediate file before it is entered into the data warehouse. When data is collected from many applications, its integrity can be checked during the transformation process before being loaded into the data warehouse.

Terms and names entity attributes, used in OLTP systems, in the process of data conversion for data warehouses are converted into universal ones, standard terms accepted for this area of business. Applications may use abbreviations or difficult-to-understand terms for many different reasons. The software and hardware platform may limit the length and format of names, and business applications may use common terms in different subject areas. In a data warehouse, you need to use standard business terms that are self-explanatory for most users.

The customer (customer) identifier in the OLTP system can be named "Purchase", "purchase_id", or "purchase_to". Further, different applications of such systems may use different names (synonyms) when referring to the same entity attribute. The data warehouse designer chooses a simple standard business term such as "Client ID". So the names entity attributes from the supply systems must be unified for use in the CD.

Different subsystems of OLTP systems and external data sources may use different definitions of attribute domains on the physical presentation layer. Thus, an attribute of type "product identifier" in one system has a length of 12 characters, and in another - 18 characters. On the other hand, some existing software systems may have restrictions on defining attribute name lengths and a poor set of types for defining domains, while others may not have such restrictions and may provide a wide selection of attribute types.

When defining attributes in a physical model of a data warehouse, it is necessary to use such lengths and data types in defining an attribute domain that would allow taking into account both the requirements of the subject area and the capabilities of data source systems. Defining domain standards for DWs is one of the important tasks of DW designers. The rules for converting the domains of attributes of data source systems into domains of attributes of the data warehouse should be recorded in the metadata of the data warehouse.

All attributes in a data warehouse must consistently use predefined values. Different applications may adopt different conventions for predefined attribute values. These predefined values include default values, values that replace null values, and so on. For example, gender in various systems may have different values: in some, these are the character values "M" and "F", in others, the numeric values 0 and 1. A more unpleasant example is the case when one data value is used in an application for several purposes, i.e. the attribute actually represents a plural value. For example, when in the attribute "type of measurement method" the first two digits mean the method of measurement, and the second two - the method of physical control of the measurement. Such different values must be converted to a predefined value accepted by the DW before being loaded into the DW.

In some data source systems, values may be missing (missing value problem, "missing data") or the transformation for them cannot be performed ("corrupt data" - data for which the transformation cannot be performed). It is important that during the transformation process such data take on values in the data warehouse that would allow users to interpret them correctly. Some attributes can simply be assigned a reasonable default value in case of missing values or conflicts in the conversion, and other attributes can be assigned values from the values of other attributes. For example, let's say that in the "Order" entity, the value of the item's unit of measure attribute is omitted. This value can be obtained from the corresponding attribute of the Commodity entity of this source system. For some attributes, there are no suitable default values when their values are missing. For such missing values in the data warehouse, you should also define a default value, for example, as a null value.

The main differences in the use of data in

The desire to combine the capabilities of OLTP systems and analysis systems in one DSS architecture has led to the emergence of the concept XranAndlseekdbutnusX (HD).

The concept of HD was discussed in one way or another by specialists in the field of information

mation systems for a long time. The first articles dedicated specifically to HD appeared

in 1988, their authors were B. Devlin and P. Murphy. In 1992, W. Inmon described this concept in detail in his monograph "Building the Data Warehouse", second edition - QED Publishing Group, 1996.

The concept of data storage is based on the idea of separating the data used for

operational processing and for solving problems of analysis. This allows you to apply data structures that meet the requirements for their storage, taking into account use in OLTP systems and analysis systems. This separation allows you to optimize both online storage data structures (on-line databases, files, spreadsheets, etc.) for performing input, modification, deletion and search operations, and data structures used for analysis (for performing analytical queries). In DSS, these two types of data are called respectively about P e R but T And in n s m And And from T about h n And to but m And d but now X (OID) And XRbutneitherlAndSCHemdbutnnsX.

In his work, Inmon gave the following definition of HD.

X ra neither l And SCH e d but n n s X - domain-specific, integrated,

immutable, chronologically maintained set of data organized for decision support purposes.

Let's consider the properties of HD in more detail.

PRunitsmeTnoh ohrienTbutqiI. This is the fundamental difference between HD and OID.

Different OIDs may contain data describing the same subject area from different points of view (for example, from the point of view of accounting, warehouse management, planning department, etc.). A decision made on the basis of only one point of view may be ineffective or even incorrect. Data warehouses allow you to integrate information that reflects different points of view on the same subject area.

Subject orientation also allows you to store in the data warehouse only those data that

are needed for their analysis (for example, it makes no sense for analysis to store information about the numbers of sales documents, while their contents - the quantity, the price of the goods sold - are necessary). This significantly reduces the cost of storage media and increases the security of data access.

ANDnTegrbutqiI. OID, as a rule, are developed at different times by several teams with their own tools. This leads to the fact that the data reflecting the same object of the real world in different systems describe it differently. Mandatory integration of data in the data warehouse allows you to solve this problem by bringing the data to a single format. ByddeRwelltobutXRaboutnologee. The data in the OID is necessary to perform operations on them at the current time. Therefore, they may not be tied to time. For data analysis, it is often important to be able to track the chronology of changes in domain metrics. Therefore, all data stored in the data warehouse must correspond to consecutive time intervals. HeAndgpenIemofromTb. OID requirements impose time limitsstoring data in them. Those data that are not needed for online processing, as a rule, are removed from the OID to reduce the resources occupied. For analysis, on the contrary, data are required for the largest possible period of time. Therefore, unlike OID, the data in the HD after loading is only read. This allows you to significantly increase the speed of access to data, both due to the possible redundancy of the stored information, and due to the elimination of modification operations.

When implementing the DSS concept in the DSS, data from different OIDs are copied into a single storage. The collected data is brought to a single format, coordinated and summarized. Analytical requests are addressed to the data warehouse (Fig. 1).

Such a model inevitably leads to duplication of information in the OID and in the data warehouse.

However, Inmon argues in his work that the redundancy of data stored in

DSS does not exceed 1%! This can be explained by the following reasons.

When loading information from the OID to the data warehouse, the data is filtered. Many of them do not fall into the CD, because they are meaningless from the point of view of their use in analysis procedures.

The information in the OID is, as a rule, operational in nature, and data, having lost

relevance are removed. In contrast, historical information is stored in a data warehouse. From this point of view, the duplication of the contents of the CD by the data of the OID turns out to be very insignificant. The data warehouse stores generalized information that is not in the OID.

During loading into the data warehouse, the data is cleared (unnecessary information is removed), and

after such processing, they occupy a much smaller volume.

Input Subsystem(OLTP)

P o d s i s t e m

Analytical queries

P o d s i s t e m

O p e r a t o

Input Subsystem(OLTP)

and analysis

RAndfromatnaboutto7. DSS structure with a physical data warehouse

The redundancy of information can be reduced to zero using a virtual data warehouse. In this case, unlike the classical (physical) data warehouse, data from the OID is not copied to a single storage. They are extracted, transformed and integrated directly when executing analytical queries in the computer's RAM. In fact, such requests are directly addressed to the OID (Fig. 2). The main advantages of virtual storage are:

minimizing the amount of memory occupied by information on the media; working with current, detailed data.Input Subsystem(OLTP)

Input Subsystem(OLTP)

Input subsystem

Information storage subsystem

Analytical queries

Analysis subsystem (OLAP,

O p e r a t o

V i r t u a l n o e

R And from at n about to 8. S p r u c t u r e

However, this approach has many disadvantages.

The processing time of requests to the virtual storage system significantly exceeds the corresponding

Relevant indicators for physical storage. In addition, the structures of operational databases, designed for intensive updating of single records, are highly normalized. To execute an analytical query, a large number of tables must be joined, which also leads to a decrease in performance.

An integrated view of the virtual storage is possible only if the condition of constant availability of all OIDs is met. Thus, temporary unavailability of at least one of the sources can lead either to failure of the analytical query or to incorrect results.

The execution of complex analytical queries on the OID requires significant computer resources. This leads to a decrease in the performance of OLTP systems, which is unacceptable, since the execution time of operations in such systems is often very critical.

Different DIDs may support different data formats and encodings. Often

The same question can have multiple answers. This may be related to:

non-synchronization of data update moments in different OIDs; differences in the description of identical objects and events of the subject area; input errors; loss of archive fragments, etc.In this case, the goal - the formation of a single consistent view of the control object - may not be achieved.

The main disadvantage of virtual storage should be recognized as a practical

the impossibility of obtaining data over a long period of time. In the absence of physical storage, only the data that is in the DID at the time of the request is available. The main purpose of OLTP systems is the operational processing of current data, so they are not focused on storing data for a long period of time. As the data becomes obsolete, it is uploaded to the archive and removed from the operational database.

Despite the advantages of a physical HD over a virtual one, it is necessary to

recognize that its implementation is a rather laborious process.

Let's dwell on the main problems of creating a data warehouse:

the need to integrate data from heterogeneous sources into dis-limited environment;

the need for efficient storage and processing of very large amounts of information; the need for multi-level metadata directories; ABOUTincreased requirements for data security. Let's take a closer look at these issues.

in detail.

HeaboutXaboutdAndmofromTbinTegrbutqiAnddbutnnsAndhneaboutdnaboutRaboutdnsAndfromTabouthNickovinRbutfrom- etcfoodlennOhfromRfood. Data warehouses are created to integrate data that can come from heterogeneous OIDs physically located on different computers: databases, electronic archives, public and commercial electronic catalogs, directories, statistical collections. When creating a data warehouse, one has to solve the problem of building a system that functions in concert with heterogeneous software tools and solutions. When choosing a means of implementing a data warehouse, one has to take into account many factors, including the level of compatibility of various software components, the ease of their development and use, the efficiency of operation, etc.

ByTRebnaboutfromTbinuhffetoTAndinnohmXRbutneneitherAndAndaboutbRorTtoeaboutChenbbolbwAndXaboutbemovinfaboutRmacAndAnd. The property of the immutability of the HD implies the accumulation of information in it over a long period of time, which must be supported by a constant increase in the volume of disk memory. Orientation to the execution of analytical queries and the related denormalization of data lead to a non-linear increase in the amount of memory occupied by the HD with an increase in the amount of data. Studies based on the TPC-D test suite have shown that a 100 GB database requires 4.87 times the amount of memory needed to store useful data.

HeaboutXaboutdAndmofromTb mnaboutGOURovneexitfrometcavohNickov meTbutdbutnnsX. For analysis systems, the availability of advanced metadata (data about data) and the means of providing them to end users is one of the main conditions for the successful implementation of a data warehouse. Metadata is necessary for DSS users to understand the structure of information on the basis of which a decision is made. For example, before a corporate manager asks the system his question, he must understand what information is available, how up-to-date it is, whether it can be trusted, how long it can take to generate an answer, and so on. When creating a data warehouse, it is necessary to solve the problems of storing and conveniently presenting metadata to users.

PovyweneithereTRebovaneitherthtobezoPbutfromnaboutfromTAnddbutnnsX. Gathered together and

publicized information about the history of the corporation, its successes and failures, about relationships with suppliers and customers, about the history and state of the market, makes it possible to analyze the past and current activities of the corporation and build forecasts for the future. Obviously, such information is confidential and access to it is limited within the company itself, not to mention other companies. To ensure the security of data, it is necessary to solve the issues of user authentication, data protection when they are moved to storage

data from operational databases and external sources, data protection during their transmission over the network, etc.

You can reduce the cost of creating a data warehouse by creating its simplified

option - data mart (Data Mart).

IN And T R in but d but n us X (IND) - this is a simplified version of the HD, containing only the theme-

tically combined data.

VD is as close as possible to the end user and contains data,

thematically focused on it (for example, the PD for employees of the marketing department may contain the data necessary for marketing analysis). The IA is much smaller in volume than the CA, and its implementation does not require large expenditures. They can be implemented both independently and together with the HD.

Self-contained IAs (Figure 3) often appear in an organization historically and are found in large organizations with a large number of independent departments that solve their own analytical problems.

designing VD for answers to a certain range of questions; the rapid introduction of autonomous air traffic and the receipt of returns; simplification of procedures for filling in the VD and increasing their productivity by taking into account the needs of a certain range of users.Input Subsystem(OLTP)

Input Subsystem(OLTP)

Information storage subsystem

Analytical queries

Analytical queries

Analytical queries

Analysis subsystem (OLAP,

P o d s i s t e m

O p e r a t o

Input Subsystem(OLTP)

and analysis

RAndfromatnaboutto9. Structure of the DSS with independent VDs

The disadvantages of autonomous VD are:

multiple storage of data in different VDs, which leads to an increase in storage costs and potential problems associated with the need to maintain data consistency; lack of data consolidation at the subject area level, and,hence - the lack of a single picture.

Recently, the idea of combining HD and VD in

one system. In this case, the CD is used as the only source of integrated data for all IAs (Fig. 4).

The data warehouse is a single centralized source of information for the entire subject area, and the VD are subsets of data from the repository,

organized to present information on thematic sections of a given area. End users have the ability to access detailed warehouse data if there is not enough data in the storefront, as well as to get a more complete information picture.

The advantages of this approach are:

ease of creating and filling the VD, since the filling comes from a single standardized reliable source of purified data - from the data warehouse; ease of expansion of the DSS by adding new VDs; LOAD REDUCTION IN MAIN X D.Input Subsystem(OLTP)

Information storage subsystem

Analytical queries

P o d s i s t e m

O p e r a t o

Input Subsystem(OLTP)

Input Subsystem(OLTP)

A n a l and t and che with k and e

HD requests

VD

VD

and analysis

Analysis subsystem (OLAP,

RAndfromatnaboutto10. Structure of the DSS with CD and IA

The disadvantages include:

redundancy (data is stored both in the data warehouse and in the VD); additional costs for the development of DSS with HD and WD.Summing up the analysis of ways to implement DSS using the concept of data storage, we can distinguish the following architectures of such systems:

DSS with physical (classical) HD (see Fig. 1); DSS with virtual data warehouse (see Fig. 2); DSS with VD (see Fig. 3); DSS with physical HD and with VD (Fig. 4).In the case of architectures with a physical HD and/or VD, attention must be paid to

questions of the organization (architecture) of the data warehouse and the transfer of data from the OID to the data warehouse.

According to Forrester Research, most large companies are facing next problem: they accumulate great amount information that is never used. Practically in any organization, there are many transactional systems that are focused on online data processing (each for a specific class of tasks) and continuously replenish numerous Database. In addition, enterprises often own huge amounts of information stored in the so-called. legacy systems. All this data is distributed across networks personal computers, are stored on mainframes, workstations and servers. Thus, there is information, but it is dispersed, inconsistent, unstructured, often redundant and not always reliable. Therefore, in most organizations, this data still cannot be used to make critical business decisions. The concept of data warehouses (Data Warehouse) is aimed at resolving this contradiction.

Bill Inmon, the originator of the concept, in his classic article "What Are Data Stores" (D2K Incorporated, 1996) defines data stores as "domain-specific, integrated, immutable, historical datasets organized for the purpose of supporting governance." He views storage as "the single and only source of truth", the "center of the universe" of decision support systems (DSS). “From data warehouses,” he writes, “information flows to various departments, filtered in accordance with the given DSS settings. These separate decision-making databases are called shop windows data".

The concept of data warehousing is based on the idea of combining corporate data scattered across online data processing systems, historical archives and other external sources. These sources may contain data that is not used directly in the ODS, but is vital for the DSS: the legislative framework(including tax forecasts), development plans branches, statistical data, electronic directories. As practice shows, a decision made on the basis of only internal data most often turns out to be incorrect.

The purpose of the concept of data warehouses is to clarify the differences in the characteristics of data in operational and analytical systems, to determine the requirements for data placed in the warehouse, to determine the general principles and stages of its construction, the main sources of data, to give recommendations for solving potential problems that arise during their unloading, cleaning , reconciliation, transportation and loading into the target storage database.

Comparison of data characteristics in information systems oriented to operational and analytical data processing

Characteristic | Operating | Analytical |

Update frequency | High frequency, in small portions | Low frequency, large portions |

Data sources | Mainly internal | Mainly external |

Volumes of stored data | Hundreds of megabytes, gigabytes | gigabytes and terabytes |

Age of data | Current (for a period of several months to one year) | Current and historical (for a period of several years, decades) |

Purpose | Fixation, online search and data transformation | Storage of detailed and aggregated historical data, analytical processing, forecasting and modeling |

Basic requirements for data in a data warehouse

Subject orientation | All data about a certain subject (business object) is collected (usually from many different sources), cleaned, coordinated, supplemented, aggregated and presented in a single form convenient for their use in business analysis. |

Integration | All data about different business objects are mutually agreed upon and stored in a single corporate-wide storage |

immutability | Initial (historical) data, after they have been agreed, verified and included in the general corporate storage, remain unchanged and are used exclusively in read mode |

Timeline support | The data is chronologically structured and reflects the history for a sufficient period of time to complete the tasks of business analysis and forecasting. |

The subject of the concept of data warehouses is not the analysis of data, but the data itself, i.e. the concept of their preparation for further analysis. At the same time, the concept of a data warehouse defines not just a single logical view of corporate data, but the implementation of a single integrated data source.

Data Analysis Models

Despite the fact that in the concept of data warehouses formulated by B. Inmon, the emphasis is on the data itself and identifying its most common properties, characteristics and relationships, it is clear that these data should be used in the process of making business decisions at all levels, up to corporate and intercorporate. To date, two main models of data analysis have historically formed, on which the existing analytical DSS are based:

1. Static Analysis(DSS). The very concept of DSS (Decision Support Systems) actually translates as DSS. Until recently, this was the only analytical concept. The result of the operation of such systems was strictly regulated multi-page reports, for the formation of which long queries were executed that processed enormous amounts of data. Such requests could be executed for several hours, sometimes tens of hours and even days.

2. Operational data analysis (OLAP). The author of the concept of OLAP (On-Line Analytical Processing) is Dr. E. Codd, who in 1993 formulated 12 basic requirements for OLAP implementation tools. The fundamental difference This model from the traditional static DSS is a conceptual representation of data in the form of a multidimensional cube. At the same time, E. Codd showed the potential drawbacks of the relational approach in data analysis-oriented systems. The purpose of creating this concept was the fundamental possibility of providing the end user with the means of generating, processing and executing ad hoc analytical requests with a minimum system response time. The need for this new concept was predetermined by the fact that often after receiving a standard report using DSS, the analyst had a new question or the realization that the question itself was formulated incorrectly. As a result, he had to long time wait for the next result in order to then, possibly, return to the next iteration of this process.

Comparison of characteristics of static and dynamic analysis

Characteristic | Static Analysis | Dynamic Analysis |

Question Types | How? How? When? | Why? What happens if?.. |

Response time | Not regulated | |

Typical Operations | Regulated report, diagram | A sequence of interactive reports, charts, screen forms. Dynamic change of aggregation levels and data slices. |

Level of analytical requirements | ||

Display type | Basically predetermined, regulated | User Defined |

Data aggregation level | Detailed and summary | Basically total |

Age of data | Historical and current | Historical, current and forecast |

Request types | Mostly predictable | Unpredictable, case by case |

Purpose | Scheduled analytical processing | Multifunctional analysis, modeling and forecasting |

Today, the OLAP direction is perhaps the most promising for solving analytical management problems. By means of a specially created OLAP Report service, the 12 requirements originally formulated by Dr. Codd were partially revised and significantly supplemented with both basic and accessibility, such as the selection and processing of missing data, etc. But the core of the OLAP concept is still multidimensional representation data at the conceptual level.

Data marts

According to the classical definition, a Data Mart is a subset of a data warehouse that reflects the specifics of a department (business object) and provides increased productivity. Thus, the showcase is the link on which a specific analytical system to solve their range of problems. Nevertheless, a situation is possible when some area of the enterprise's activity practically does not correlate with others, and it is possible to build the corresponding data mart autonomously, without being tied to a corporate storage. Then the storefront will be replenished with data directly from the online transaction processing systems. Such data marts are called independent, in contrast to the classic data warehouse dependent and replenished from it marts.

In some cases, it seems appropriate to deploy a data mart instead of a fully formed warehouse. Data marts are less demanding, cheaper and easier to build, and based on cheaper servers rather than multiprocessor systems. With this approach, there is no need to use the whole information system corporations and support complex procedures for synchronously updating the data mart when updating the warehouse. At the same time, it is necessary to understand that with this approach, data marts can multiply into entire complexes of independent information databases, and naturally the task of managing individual search, maintenance and recovery strategies will be set. On the other hand, building a single corporate warehouse based on many independent data marts is much more profitable than relying on data dispersed throughout transaction processing systems.

So what makes sense to use: a single repository, stand-alone data marts, a repository with dependent marts, or other options? There is no universal answer to the question of the need to use one or another option. In every case best option determined by business requirements, demand intensity, network architecture, required responsiveness, and other conditions.

Data warehouse implementation technology

When building a data warehouse, it's natural to follow a phased development approach. Although no description of the process of building a data warehouse as a sequence of phases can cover all aspects feedback with its potential users, managers and analysts, however, there are some basic steps that apply to the process of building an enterprise architecture:

1. Determining the needs of end users and building a model of the business questions to be answered.

2. Identification of data from corporate and external sources that will power the data warehouse or data mart.

3. Analysis of data sources and modeling of the functions and processes that these sources cover. Learning the rules by which a business operates is one of the essential conditions building warehouses or data marts, since it is on its basis that the level of detail of elements in the data warehouse is set.

4. Determination of procedures for transformation, cleaning and logical integration of source data before they are placed in a data warehouse or data mart, as well as regulating the implementation of these procedures that update the data warehouse.

5. Creation of metadata describing the sources and methods of data transformation and the logic of the data warehouse. The metadata repository should include data definitions, business rules, and detailed logic to model the development of analytic systems.

6. Formation of physical tables of the data warehouse and its filling. This process may require several iterations, taking into account the possible redesign of data structures when analyzing the storage data schema.

7. Building a repository of data marts, which will include subsets of data from the warehouse and pre-aggregated data. The metadata part will describe how the raw warehouse data is transformed, aggregated, and cached in the data marts.

8. Installing OLAP tools, application systems, Web servers and all necessary tools And server programs required for data access, analysis, and reporting.

9. Installation on end user workstations of the client software(“thick” client) or browsers that support standard data formats and Java applets, as well as necessary extensions plug-in ("thin" client) for user access to data.

After completing the process of creating a data warehouse, it may seem that everything is already done. In fact, the formation of a warehouse is a process that also includes the necessary phases of ongoing supervision and maintenance of the data warehouse. Proper oversight involves not only maintaining the correctness of the data, but also ensuring its secrecy, especially if the access to the storage data is via the Web. “Because the data warehouse contains one of the greatest assets of an enterprise,” says R. Tenler, chairman of Information Advantage, “data must be secure. But to realize the potential value of a data warehouse, an organization will have to offer it to potential buyers.”

Maintaining a data warehouse in good condition for a long time is another critical task. This factor becomes especially important when the number of users accessing the system starts to grow. At the same time, if in the process of designing a data warehouse information services usually a thorough reconciliation data, over time, people's attention usually wanes, and the data warehouse can turn into a dump. To prevent this from happening, it is necessary to appoint responsible persons for maintaining data quality, who will constantly carry out verification information coming from transaction processing systems with data in a warehouse or mart.

In conclusion, it can be noted that the process of designing a data warehouse used to provide the necessary information in decision-making process corporate and intercorporate level, is critical to the life of the enterprise. At the stage of its implementation, one has to pay attention not only to the solution technical issues but also to the problems associated with the human factor. We should also not forget about the need for constant evaluation of the appropriateness of the efforts being made. In addition to the right project management chain, it is necessary to take into account at each stage both the needs of users and the presence of political aspects that can slow down the project. With a competent approach to solving this problem, the data warehouse may soon become part of commercial system enterprise by providing a part of third-party users for a fee the ability to use data from some subset of the repository. This approach will allow not only to recoup the work on the formation of a data warehouse, but also provide new channel receipt of income.