Information compression in computer memory is a transformation that reduces the amount of memory occupied while preserving the encoded content. There are different compression methods for different types of data. For graphic information alone, about a dozen different methods are used. Here we consider one way to compress textual information.

In an eight-bit character encoding table (such as ASCII), each character is encoded with eight bits and therefore occupies 1 byte in memory. Section 1.3 of our textbook noted that different letters (signs) occur in text with different frequencies. It was also shown there that the lower a symbol's frequency of occurrence, the greater its informational weight. The idea of text compression in computer memory rests on this fact: give up encoding all characters with codes of the same length. Symbols with less informational weight, i.e. frequently occurring ones, are encoded with shorter codes than rarely occurring ones. This approach can significantly reduce the total size of the text's code and, accordingly, the space it occupies in the computer's memory.

This approach has been known for a long time. It is used in the well-known Morse code, several codes of which are given in Table 3.1, where a "dot" is encoded by zero and a "dash" by one.

Table 3.1. Morse codes of several letters

As can be seen from this example and Table 3.1, more frequent letters have shorter codes.

Unlike the equal-length codes used in the ASCII standard, variable-length codes raise the problem of separating the codes of individual letters. In Morse code this problem is solved with a "pause" (space), which is in fact a third character of the Morse alphabet; that is, the Morse alphabet has three characters, not two.

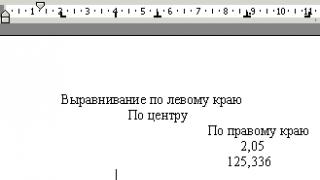

But what about computer encoding, which uses a binary alphabet? One of the simplest yet very effective ways to construct codes of different lengths that need no special separator is the algorithm of D. Huffman (D.A. Huffman, 1952). The algorithm builds a binary tree that makes it possible to uniquely decode a binary code made up of character codes of various lengths. A tree is called binary if no more than two branches leave each vertex. Fig. 3.2 shows an example of such a tree, built for the English alphabet with the frequency of occurrence of its letters taken into account. The codes obtained in this way can be summarized in a table.

Table 3.2. Huffman codes of the letters of the English alphabet

With the help of Table 3.2 it is easy to encode text. For example, the 29-character string

WENEEDMORESNOWFORBETTERSKIING is converted into the code: 011101 100 1100 100 100 11011 00011 1110 1011 100 0110 1100 1110 011101 01001 1110 1011 011100 100 001 001 100 1011 0110 110100011 1010 1010 1100 00001, which, when placed in memory, takes the form:

01110110 01100100 10011011 00011111 01011100 01101100 11100111 01010011 11010110 11100100 00100110 01011011 01101000 11101010 10110000 001

Thus, text that takes up 29 bytes in ASCII encoding will only take up 16 bytes in Huffman encoding.
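The byte counts in this example can be checked with a short sketch. The code table below is not the full Table 3.2: it lists only the letters of the sample phrase, with codes recovered from the encoded example above, and the names `CODES`, `encode` and `bytes_needed` are illustrative.

```python
# Codes recovered from the worked example in the text; only the letters
# that occur in the sample phrase are listed (this is not the full table).
CODES = {
    "E": "100", "T": "001", "N": "1100", "O": "1110", "R": "1011",
    "S": "0110", "I": "1010", "D": "11011", "M": "00011", "F": "01001",
    "G": "00001", "W": "011101", "B": "011100", "K": "110100011",
}


def encode(text, codes):
    """Concatenate the variable-length codes of all characters."""
    return "".join(codes[ch] for ch in text)


def bytes_needed(bits):
    """Whole bytes required to store a bit string (rounded up)."""
    return (len(bits) + 7) // 8


phrase = "WENEEDMORESNOWFORBETTERSKIING"
bits = encode(phrase, CODES)
print(len(phrase), "bytes with one byte per character")
print(len(bits), "bits, i.e.", bytes_needed(bits), "bytes with the variable-length codes")
```

The encoded phrase occupies 123 bits, which is why it needs 16 bytes: 15 full bytes plus 3 bits in a sixteenth.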

The reverse task, going from Huffman codes back to letters of the English alphabet, is carried out using the binary tree (see figure). The code is scanned from left to right starting with the first digit, following the branches of the tree that correspond to each binary digit until an end vertex (leaf) with a letter is reached. Once a letter has been identified, decoding of the next letter starts again from the root of the binary tree.
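The tree walk described above might be sketched as follows. The tree is stored as nested dictionaries keyed by "0" and "1", which is only one possible representation; the partial code table is the one recovered from the sample phrase, and `build_tree` and `decode` are illustrative names.

```python
# Partial code table recovered from the sample phrase (not the full table).
CODES = {
    "E": "100", "T": "001", "N": "1100", "O": "1110", "R": "1011",
    "S": "0110", "I": "1010", "D": "11011", "M": "00011", "F": "01001",
    "G": "00001", "W": "011101", "B": "011100", "K": "110100011",
}


def build_tree(codes):
    """Build a binary tree as nested dicts; leaves are the letters."""
    root = {}
    for letter, code in codes.items():
        node = root
        for bit in code[:-1]:
            node = node.setdefault(bit, {})
        node[code[-1]] = letter
    return root


def decode(bits, root):
    """Follow branches bit by bit; on reaching a leaf, emit it and restart."""
    letters = []
    node = root
    for bit in bits:
        node = node[bit]
        if isinstance(node, str):      # reached an end vertex with a letter
            letters.append(node)
            node = root                # start the next letter from the root
    if node is not root:
        raise ValueError("bit string ends in the middle of a code")
    return "".join(letters)


tree = build_tree(CODES)
print(decode("0111011001100100100", tree))
```

No separator is needed because no code in the table is the beginning of another code, so each leaf is reached at exactly one point in the bit stream.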

It is easy to guess that the depicted tree is an abbreviated version of the Huffman code. In full, it must take into account all possible characters found in the text: spaces, punctuation marks, brackets, etc.

In programs that compress text (archivers), a table of character frequencies is built for each processed text, and variable-length codes such as Huffman codes are then formed from it. Text compression becomes even more efficient in this case, since the encoding is tuned to the specific text. And the larger the text, the greater the compression effect.
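As an illustration of tuning the code to one text, here is a minimal sketch of the standard Huffman construction driven by the character counts of that text. It is not the code of any particular archiver; the function name `huffman_codes` and the use of `heapq` are choices made for this example.

```python
import heapq
from collections import Counter


def huffman_codes(text):
    """Return {symbol: bit string} built from the symbol counts of `text`."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:                     # degenerate case: one distinct symbol
        return {symbol: "0" for symbol in freq}
    # Heap entries are (weight, tie_breaker, {symbol: code_so_far}); the unique
    # integer tie_breaker keeps Python from ever comparing the dictionaries.
    heap = [(weight, i, {symbol: ""})
            for i, (symbol, weight) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w0, _, zero_branch = heapq.heappop(heap)   # the two lightest subtrees
        w1, _, one_branch = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in zero_branch.items()}
        merged.update({s: "1" + c for s, c in one_branch.items()})
        heapq.heappush(heap, (w0 + w1, next_id, merged))
        next_id += 1
    return heap[0][2]


text = "WENEEDMORESNOWFORBETTERSKIING"
codes = huffman_codes(text)
total_bits = sum(len(codes[ch]) for ch in text)
print(total_bits, "bits instead of", 8 * len(text))
```

Because the codes are derived from this phrase alone, the result cannot be longer than the encoding obtained with a table built for English text in general. In a real archive the code table (or the frequencies) would also have to be stored, which this sketch does not count.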

Key points

Information compression is a transformation of information that leads to a reduction in the amount of memory occupied while maintaining the encoded content.

The idea of the text compression method: the length of a character's code decreases as its informational weight decreases, i.e. as its frequency of occurrence in the text increases.

The Huffman code is represented as a binary tree.

Archivers using the Huffman algorithm build their binary coding tree for each text.

Questions and tasks

1. What is the difference between constant and variable length codes?

2. How do variable length codes allow text to be "compressed"?

3. Encode the following text using ASCII codes and Huffman codes: HAPPYNEWYEAR. Calculate the required amount of memory in both cases.

4. Decode using a binary tree (see figure) the following code:

11110111 10111100 00011100 00101100 10010011 01110100 11001111 11101101 001100

Binary tree of the English alphabet used for Huffman coding

Block A


When analyzing manufactured products, the main attention should be paid to their cost. The cost price is the monetary valuation of the natural resources, raw materials, materials, fuel, energy, fixed assets, labor resources and other costs used in the production and sale of products (works, services). Determining the cost of production means calculating the costs attributable to a unit of output. Output can be considered justified if the proceeds from sales cover all the costs of the enterprise; otherwise the output is unprofitable. That is, output is justified once the enterprise reaches the volume of output and sales that corresponds to the zero-profit (break-even) point. The break-even point is the sales volume starting from which the company makes a profit.

Also, in accordance with the Pareto optimality criterion for the output structure, output will be efficient if it simultaneously maximizes the utility of buyers and does not go beyond the available resources (i.e., it lies on the production possibilities curve). The output structure is effective if the producers and consumers are simultaneously in equilibrium.

What is the difference between the accounting profit of the enterprise and the marginal income received by it during the same time?

Marginal income (MD) is calculated as the difference between the proceeds from the sale of products (excluding VAT and excises) and variable costs. In other words, MD is the sum of fixed costs and profit from sales. MD must cover the fixed costs of the enterprise and provide it with a profit from the sale of products, works and services. This figure is also called the coverage amount.

MD = Sales revenue - variable costs

MD = Fixed costs + Profit from sales
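The two formulas describe the same quantity from two sides, which a small calculation makes visible. The figures below are invented for illustration only, and the function names are not taken from any accounting package.

```python
def marginal_income(sales_revenue, variable_costs):
    """MD = sales revenue - variable costs."""
    return sales_revenue - variable_costs


def profit_from_sales(sales_revenue, variable_costs, fixed_costs):
    """Profit is what remains of MD after fixed costs are covered."""
    return marginal_income(sales_revenue, variable_costs) - fixed_costs


# Hypothetical figures, chosen only to illustrate the formulas.
revenue = 500_000
variable = 300_000
fixed = 150_000

md = marginal_income(revenue, variable)
profit = profit_from_sales(revenue, variable, fixed)
print("MD =", md)
print("Profit from sales =", profit)
print("Fixed costs + profit =", fixed + profit)
```

With these figures MD equals 200,000 by either formula: revenue minus variable costs, or fixed costs plus profit.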

Accounting profit is the total profit from entrepreneurial activity, calculated from the accounting records. It is the difference between the total income of the enterprise and its accounting (explicit) costs. Accounting costs are the cost of the resources used by the firm at their actual acquisition prices. Explicit costs are fully reflected in the accounts, which is why they are also called accounting costs. Explicit costs are determined by the enterprise's spending on external resources, i.e. resources not owned by the firm: for example, raw materials, materials, fuel and labor.

Accounting profit = Total income of the enterprise - Accounting (explicit) costs

(Thus, accounting profit is less than marginal income by the amount of explicit fixed costs.)

What are the main factors influencing the value of marginal income of the enterprise?

An important role in substantiating management decisions is played by marginal (limiting) analysis. Its methodology is based on studying the relationship between three groups of key economic indicators, "costs - volume of production (sales) - profit", and on forecasting the critical and optimal value of each indicator for given values of the others. The main category of marginal analysis is marginal income. Marginal income (profit) is the difference between sales proceeds (excluding VAT and excises) and variable costs. Marginal income can change under the influence of two factors, acting together or separately: an increase (decrease) in revenue and an increase (decrease) in cost. An important factor affecting the profitability of individual objects of marginal profit is the value and structure of the partial cost. The value of marginal income (profit) depends directly on the value of the partial cost. A predominance of variable costs in the structure of the partial cost means that the object does not need a significant sales volume to break even. A predominance of direct fixed costs in the structure of the partial cost means that sales must be increased to compensate for them.

Block B

What is the difference between the reduced cost of a company's products in direct costing and its full cost in accounting?

In direct costing, the reduced cost of industrial products is accounted for and planned only in terms of variable costs. Fixed costs are collected in a separate account and written off at specified intervals directly to the debit of the financial results account, for example “Profit and Loss”. Variable costs are also used to value the balances of finished goods in warehouses at the beginning and end of the year, as well as work in progress (WIP). Full cost in accounting includes both fixed and variable costs in the cost price.

Options for calculating the cost of mutually rendered works and the corresponding transfer prices.

The most accurate results in distributing mutually rendered services are obtained by solving a system of linear cost equations for each in-plant subdivision. In general form, the system of equations for cost places and cost centers is as follows:

where Rni is the amount of primary costs of the i-th cost place; qi is the volume of services provided to the unit; n is the number of cost places of the divisions (i = 1, ..., n); ki, kj are the cost allocation coefficients of the i-th and j-th cost places.

In the one-way method, the cost of the services of primary cost places is charged only to the main production of the enterprise; the costs associated with the mutual exchange of services are not taken into account. Distribution coefficients are calculated by the formula:

K = Rpm / Qpm

where K is the distribution coefficient; Rpm is the costs of the primary cost places; Qpm is the volume of services consumed in total by all divisions of the main production of the enterprise.

In the step distribution method, the sequence in which the costs of the primary cost centers are written off is determined first. Usually one starts with the auxiliary workshops and services whose output is needed by everyone. The distribution coefficient in this case is calculated by the formula

where Rfix, Rvar are the fixed and variable costs allocated by the previous cost center; Qpm is the volume of primary services transferred to the next division of the enterprise.

From the point of view of controlling the magnitude and cost-effectiveness of the costs of places and centers within the enterprise, two methods are distinguished: cost budgeting and comparison of costs and performance. In the first case, a budget (estimate) of costs is drawn up for each cost place or responsibility center, and compliance with it is monitored using accounting data on actual costs. With the method of comparing costs and performance, deviations are identified for each division of the enterprise that are caused by changes in performance or in the degree of capacity utilization and in the level of costs of the place or center.

Block C

1. What determines and how is the capacity utilization of an enterprise measured?

Capacity utilization is the level of use of potential production opportunities, which are estimated by the ratio of actual output to the maximum possible.

Capacity utilization is generally measured by the time this resource is in use per day, week or month. The load on production capacity can be calculated as the sum, over all product types, of the volume of output of each type multiplied by the time needed to manufacture one unit of that type in the area under study.
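The load calculation described above is a sum of products, which can be sketched in a few lines. The product names, volumes, unit times and the available time fund below are invented, and expressing utilization as load divided by available hours is an assumption of this example.

```python
def capacity_load(products):
    """Sum of (output volume x time per unit) over all product types, in hours."""
    return sum(volume * hours_per_unit for _, volume, hours_per_unit in products)


# Hypothetical data: (product type, units produced, machine-hours per unit).
products = [
    ("A", 120, 0.5),
    ("B", 80, 1.25),
]
available_hours = 200.0   # assumed time fund of the area for the period

load = capacity_load(products)
utilization = load / available_hours
print("Load:", load, "hours")
print("Utilization:", round(utilization * 100), "%")
```

In this invented case the area is loaded for 160 of 200 available hours.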

As a rule, production capacity is not fully used because of the following limiting factors: the level of demand for the manufactured products; the amount of available material resources (materials, raw materials, fuel) required for production; the limited possibility of using other capacities of the enterprise, which affects the maximum possible output within one cycle; and so on.

2. What costs depend on the degree of utilization of the production capacities of the enterprise?

The production capacity of an enterprise is not a homogeneous but a heterogeneous value, made up of the production capacities of its individual divisions (workshops, sections). For various reasons, including objective ones, these capacities are not fully matched with one another, for example because machines and other equipment differ in performance. It is therefore clear that the costs of material and labor resources should be accounted for on the basis of a certain level of capacity utilization, as a rule less than 100%. Underutilized capacity means unused opportunities to increase production and reduce production costs. It follows that the level of capacity utilization affects the amount of variable costs.

3. What makes up the total amount of the enterprise's costs?

The total costs of the enterprise are its gross costs: the sum of fixed costs, which do not change, and variable costs, which depend on the volume of products manufactured and sold.

Pros

The standard costing system can significantly reduce the amount of accounting work;

Provides a solid basis for identifying material variances in cost comparisons;

Helps to improve the efficiency of management and cost control, as it requires a detailed study of all production, administrative and marketing functions of the enterprise, resulting in the development of the most optimal approaches to management while reducing costs;

Standard costs serve as the best criterion for evaluating actual costs;

Provides users with information about the expected costs of producing and selling products;

Allows you to set prices based on a predetermined unit cost;

Allows you to draw up a report on income and expenses with the identification of deviations from the norms and the reasons for their occurrence.

Cons

A lot of attention is focused on cost and labor productivity;

Does not provide the enterprise with sufficient information to find ways to improve its activities;

It does not cover all aspects of increasing production efficiency;

Applies to recurring costs;

The success of the application depends on the composition and quality of the regulatory framework;

Standards cannot be set for certain types of costs.

Block D

Block A

What is the difference between fixed and variable business costs?

The criterion for dividing costs into fixed and variable is their dependence on the volume of production.

Fixed costs do not change automatically with changes in production volumes or with changes in production capacity. Therefore, the concept of fixed costs is more applicable to periods within a year, when the composition and level of use of the enterprise's production capacities do not change significantly.

The nomenclature of fixed costs cannot be the same for all industries and must be specified taking into account the specifics of the enterprise. Fixed costs usually include interest on loans, rent, salaries of managerial employees, expenses for the protection of premises, maintenance and repair of buildings, expenses for testing, experiments and research, etc. Fixed costs also include depreciation charges (for the restoration of fixed capital). The level of fixed costs per unit of output tends to decrease relative to an increase in output, and vice versa.

Fixed costs grow as production capacity grows, through capital investment and the attraction of additional working capital. The absolute value of fixed costs is reduced by rationalizing production, cutting management costs and selling surplus fixed assets.

Variable costs increase or decrease in absolute amount depending on the change in the volume of production and are divided into proportional and non-proportional parts.

Proportional costs include the costs of raw materials, basic materials and semi-finished products, the piece-rate wages of the main production workers, the predominant part of fuel and energy costs for technological purposes, and the costs of packing and packaging products.

Non-proportional costs can be progressive (growing faster than output) or degressive (growing more slowly than output).
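The behaviour of fixed and proportional variable costs can be seen in a small table of numbers. This is a sketch with invented figures: the fixed costs, the variable cost per unit and the output volumes are assumptions, and only the proportional part of variable costs is modelled.

```python
FIXED_COSTS = 100_000        # hypothetical: does not depend on output
VARIABLE_PER_UNIT = 50       # hypothetical proportional cost per unit


def total_costs(units):
    """Gross costs: fixed part plus proportional variable part."""
    return FIXED_COSTS + VARIABLE_PER_UNIT * units


def fixed_cost_per_unit(units):
    """Share of fixed costs carried by one unit of output."""
    return FIXED_COSTS / units


for units in (1_000, 2_000, 4_000):
    print(units, "units:",
          "total", total_costs(units),
          "| fixed per unit", fixed_cost_per_unit(units),
          "| variable per unit", VARIABLE_PER_UNIT)
```

As output doubles, total variable costs double while the fixed costs carried by each unit are halved.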

Variable length codes

ENCODING AND COMPRESSION OF INFORMATION

Encoding is the mapping of a message that is transmitted or written to memory onto a certain sequence of characters, usually called a codeword. Encoding can serve two purposes. The first is to reduce the length of the transmitted message (information compression); the second is to add extra information to the transmitted word that helps detect and correct errors occurring during transmission (error-correcting coding). This lecture considers the first type of codes.

Variable length codes

The first rule for constructing variable-length codes is that short codes should be assigned to frequently occurring characters and long codes to rarely occurring ones. The codes must also be assigned so that the message can be decoded unambiguously. For example, consider four characters a1, a2, a3, a4. If they appear in the message with equal probability (p = 0.25), we assign them four two-bit codes: 00, 01, 10, 11. All probabilities are equal, so variable-length codes will not compress the data. For each character with a short code there is a character with a long code, and the average number of bits per character will be at least 2. The redundancy of equiprobable characters is 0, and a string of such characters cannot be compressed using variable-length codes (or any other method).

Now let these four symbols appear with different probabilities (see Table 12.1). In this case there is redundancy that variable-length codes can remove, compressing the data so that fewer than 2 bits per symbol are required. The smallest average number of bits per character is 1.57, which is the entropy of this set of characters.

Table 12.1 proposes Code_1, which assigns the shortest code to the most frequently occurring character. Its average number of bits per character is 1.77, which is close to the theoretical minimum.

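Table 12.1

| Symbol | Probability | Code_1 | Code_2 |
|--------|-------------|--------|--------|
| a1     | 0.49        | 1      | 1      |
| a2     | 0.25        | 01     | 01     |
| a3     | 0.25        | 010    | 000    |
| a4     | 0.01        | 001    | 001    |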
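The figures 1.57 and 1.77 quoted above can be reproduced with a short calculation. The probabilities 0.49, 0.25, 0.25 and 0.01 used here are an assumption: they are values that give exactly these two figures. The codewords of Code_1 are the ones used in the decoding discussion of this lecture.

```python
from math import log2

# Assumed probabilities, chosen to be consistent with the stated
# entropy (1.57) and average code length (1.77).
PROBS = {"a1": 0.49, "a2": 0.25, "a3": 0.25, "a4": 0.01}
CODE_1 = {"a1": "1", "a2": "01", "a3": "010", "a4": "001"}


def entropy(probs):
    """Shannon entropy in bits per symbol."""
    return -sum(p * log2(p) for p in probs.values())


def average_length(probs, code):
    """Expected number of code bits per symbol."""
    return sum(probs[s] * len(code[s]) for s in probs)


print("entropy        :", round(entropy(PROBS), 2))
print("average length :", round(average_length(PROBS, CODE_1), 2))
```

The gap between the two numbers, about 0.2 bits per symbol, is the redundancy that Code_1 leaves in the data.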

Consider a sequence of 20 characters

a1 a3 a2 a1 a3 a3 a4 a2 a1 a1 a2 a2 a1 a1 a3 a1 a1 a2 a3 a1,

in which the four characters appear with approximately the frequencies of Table 12.1. This string corresponds to a 37-bit codeword:

1|010|01|1|010|010|001|01|1|1|01|01|1|1|010|1|1|01|010|1.

The average number of bits per character is 1.85, which is not too far from the calculated minimum average length. However, if we try to decode the sequence, it turns out that Code_1 has a significant disadvantage. The first bit of the codeword is 1, so the first character of the sequence can only be a1, since no other character's code starts with 1. The next bit is 0, but the codes of a2, a3 and a4 all start with 0, so the decoder must read the next bit. It is 1, but the codes of a2 and a3 both begin with 01. The decoder therefore does not know how to proceed: decode the string as 1|010|01…, i.e. a1 a3 a2 …, or as 1|01|001…, i.e. a1 a2 a4 …. Further bits of the sequence cannot resolve the situation, so Code_1 is ambiguous. Code_2 is free from this deficiency.
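The ambiguity can be confirmed by counting in how many ways a bit string splits into codewords. The sketch below uses the 20-symbol sequence that matches the 37-bit codeword above; the codewords of Code_2 are 1, 01, 000 and 001, and the helper `count_parses` is an illustrative name.

```python
from functools import lru_cache

CODE_1 = {"a1": "1", "a2": "01", "a3": "010", "a4": "001"}
CODE_2 = {"a1": "1", "a2": "01", "a3": "000", "a4": "001"}
MESSAGE = ("a1 a3 a2 a1 a3 a3 a4 a2 a1 a1 "
           "a2 a2 a1 a1 a3 a1 a1 a2 a3 a1").split()


def encode(symbols, code):
    return "".join(code[s] for s in symbols)


def count_parses(bits, code):
    """Number of different ways to split `bits` into codewords of `code`."""
    words = tuple(code.values())

    @lru_cache(maxsize=None)
    def ways(pos):
        if pos == len(bits):
            return 1
        return sum(ways(pos + len(w)) for w in words if bits.startswith(w, pos))

    return ways(0)


bits_1 = encode(MESSAGE, CODE_1)
bits_2 = encode(MESSAGE, CODE_2)
print(len(bits_1), "bits,", count_parses(bits_1, CODE_1), "possible decodings with Code_1")
print(len(bits_2), "bits,", count_parses(bits_2, CODE_2), "possible decoding(s) with Code_2")
```

Both codes produce 37 bits for this message, because their codewords have the same lengths; only Code_2 allows the receiver to recover the message in a single way.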

Code_2 has the so-called prefix property, which can be formulated as follows: once a certain sequence of bits has been chosen as the code of some character, no other character's code may begin with this sequence (a character's code cannot be a prefix of another character's code). If the string 01 is the code for a2, then other codes must not start with 01. Therefore the codes for a3 and a4 must start with 00, and it is natural to choose 000 and 001 for them.
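Checking the prefix property is mechanical. One simple way, sketched here under the name `is_prefix_free`, is to sort the codewords: if any codeword is a prefix of another, then some codeword is a prefix of its immediate neighbour in sorted order, so only adjacent pairs need to be compared.

```python
def is_prefix_free(code):
    """True if no codeword is a prefix of (or equal to) another codeword."""
    words = sorted(code.values())
    return not any(later.startswith(earlier)
                   for earlier, later in zip(words, words[1:]))


CODE_1 = {"a1": "1", "a2": "01", "a3": "010", "a4": "001"}
CODE_2 = {"a1": "1", "a2": "01", "a3": "000", "a4": "001"}

print("Code_1 has the prefix property:", is_prefix_free(CODE_1))
print("Code_2 has the prefix property:", is_prefix_free(CODE_2))
```

Code_1 fails the check because 01 (the code of a2) is the beginning of 010 (the code of a3).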

Therefore, when choosing a set of variable-length codes, two principles must be observed: 1) shorter code sequences should be assigned to frequently occurring symbols; 2) the resulting codes must have the prefix property. Following these principles, one can construct short, uniquely decodable codes, but not necessarily the best (i.e. shortest) ones. Beyond these principles, an algorithm is needed that always generates the set of shortest codes (those with the smallest average length). The input of this algorithm should be the frequencies (or probabilities) of the characters of the alphabet. Huffman coding is such an algorithm.

It should be noted that not all statistical compression methods use variable-length codes to encode individual characters. A notable exception is arithmetic coding.

Before describing statistical encoding methods, let us dwell on the interaction between the encoder and the decoder. Suppose that some file (for example, a text file) has been compressed using variable-length prefix codes. To decode it, the decoder must know the prefix code of each character. This can be achieved in three ways.

The first way is to choose the set of prefix codes once and have all encoders and decoders use it. This method is used, for example, in facsimile communication. The second way is to encode in two passes. On the first pass, the encoder reads the file being encoded and collects the necessary statistics. On the second pass, compression is performed. Between the passes, the encoder uses the collected information to create the best prefix code for this particular file. This method gives a very good compression ratio, but it is usually too slow for practical use. In addition, the table of prefix codes has to be added to the compressed file so that the decoder knows it, which degrades the overall performance of the algorithm. In statistical compression this approach is called semi-adaptive compression.

To represent quantities (data) in the program, constants and variables are used. Both have a name (identifier) and a value. The difference is that the value of a constant cannot be changed during program execution, but the value of a variable can.

We conditionally divide variables into input variables (what is given), output variables (the result: what must be obtained) and intermediate variables needed during the calculation. For example, in a program that computes the greatest common divisor (Euclid's algorithm), the input variables are m and n and the intermediate variable is r. The output variable is also n. These variables should be of the type "natural numbers", but Pascal has no such type, so some integer type has to be used. The size of the type determines the range of numbers for which the program can be used. If the variables are declared like this:

Var m, n, r: integer;

then the largest number that can be given as input is 32767 = 2^15 - 1.
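The roles of the three variables can be seen in a short sketch of Euclid's algorithm. It is written in Python rather than Pascal, so no declarations are needed and integers have no 16-bit limit; the constant `MAX_INT16` is included only to show where the bound 32767 comes from. The inputs are assumed to be natural numbers.

```python
MAX_INT16 = 2**15 - 1   # largest value of a 16-bit signed integer


def gcd(m, n):
    """Greatest common divisor of two natural numbers (Euclid's algorithm).

    m and n are the inputs, r is the intermediate remainder,
    and the result ends up in n.
    """
    r = m % n
    while r != 0:
        m, n = n, r
        r = m % n
    return n


print(MAX_INT16)
print(gcd(48, 18))
print(gcd(MAX_INT16, 7))
```

The loop stops as soon as the remainder r becomes zero, at which point n holds the greatest common divisor.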

Important! The value of variables declared in the Var section is undefined. A variable is sometimes defined as a memory cell that holds the variable's value. The idea is that declaring a variable associates the address of this cell with the variable's name, that is, the name is used as the address of the memory cell that holds the value. But the value itself has not yet been defined.

In Euclid's algorithm, the value of the remainder r is compared with zero. 0 is an integer constant. It is possible (but not required) to define a constant with the value 0, like so:

Const Zero = 0;

During compilation, the identifier Zero will be replaced by its numerical value in the program text.

The type of the constant Zero is not obvious: zero exists in every integer type. In the constants section you can declare a typed constant by specifying both the type and the value:

Zero: integer = 0;

But now Zero has become an ordinary variable of type integer whose initial value is defined and equal to zero.

The use of variables is checked at compile time. The compiler will usually stop attempts to assign a value of the wrong type to a variable. Many programming languages automatically convert the value of a variable to the required type. For novice programmers such implicit type conversion is, as a rule, a source of hard-to-find bugs. In Pascal, implicit type conversion is the exception rather than the rule. An exception is made only for constants and variables of type INTEGER, which may be used in expressions of type REAL. The ban on automatic type conversion does not mean that Pascal has no means of converting data. It has them, but they must be used explicitly.