Structure and principles of the World Wide Web

Graphic representation of information on the World Wide Web

The World Wide Web is made up of millions of Internet web servers located around the world. A web server is a program that runs on a computer connected to a network and uses the HTTP protocol to transfer data. In its simplest form, such a program receives an HTTP request for a specific resource over the network, finds the corresponding file on the local hard disk, and sends it over the network to the requesting computer. More sophisticated web servers can generate resources dynamically in response to an HTTP request. Uniform resource identifiers (URIs) are used to identify resources on the World Wide Web; these resources are often files or parts of files. Uniform resource locators (URLs) are used to determine the location of resources on the network. URLs combine URI identification technology with the Domain Name System (DNS). A domain name, or directly an address in numeric notation, is included in the URL to designate the computer (more precisely, one of its network interfaces) that runs the desired web server.

To view information received from a web server, the client computer uses a special program called a web browser. The main function of a web browser is displaying hypertext. The World Wide Web is inextricably linked with the concepts of hypertext and hyperlinks, and most of the information on the Web is hypertext. HTML (HyperText Markup Language), a hypertext markup language, is traditionally used to create, store and display hypertext on the World Wide Web. The work of marking up hypertext is called layout, and the people who do it are called webmasters. After HTML markup, the resulting hypertext is saved to a file; such HTML files are the most common resource on the World Wide Web. Once an HTML file is made available to a web server, it is referred to as a "web page". A set of web pages forms a website.

Hyperlinks are added to the hypertext of web pages. They help users of the World Wide Web navigate easily between resources (files), whether those resources are located on the local computer or on a remote server. Web hyperlinks are based on URL technology.

World Wide Web Technologies

In general, the World Wide Web can be said to stand on "three pillars": HTTP, HTML and URL. Recently, however, HTML has begun to lose some ground to newer markup technologies such as XML. XML (eXtensible Markup Language) is positioned as a foundation for other markup languages. CSS has come into wide use to improve the visual presentation of the Web; it allows uniform design styles to be set for many web pages. Another innovation worth noting is the URN (Uniform Resource Name) resource naming system.

A popular concept for the development of the World Wide Web is the creation of the Semantic Web. The Semantic Web is an add-on to the existing World Wide Web, designed to make the information posted on the network more understandable to computers. It is the concept of a network in which each resource written in human language would be given a description that a computer can understand. The Semantic Web opens up access to clearly structured information for any application, regardless of platform and programming language. Programs would be able to find the necessary resources themselves, process information, classify data, identify logical relationships, draw conclusions, and even make decisions based on these conclusions. If widely adopted and implemented well, the Semantic Web has the potential to revolutionize the Internet. The Semantic Web uses the RDF format (Resource Description Framework) to create a computer-readable description of a resource. RDF can be expressed in XML syntax (RDF/XML) and uses URIs to identify resources. Newer developments in this area are RDFS (RDF Schema) and SPARQL (SPARQL Protocol and RDF Query Language, pronounced "sparkle"), a query language for fast access to RDF data.

History of the World Wide Web

Tim Berners-Lee and, to a lesser extent, Robert Cailliau are considered the inventors of the World Wide Web. Tim Berners-Lee is the author of the HTTP, URI/URL and HTML technologies. In 1980 he was working as a software consultant at CERN (Conseil Européen pour la Recherche Nucléaire) in Geneva, Switzerland. There he wrote the Enquire program for his own use ("Enquire" can be loosely translated as "Interrogator"). The program used random associations to store data and laid the conceptual foundation for the World Wide Web.

There is also the popular concept of Web 2.0, which sums up several directions of development of the World Wide Web at once.

Ways to actively display information on the World Wide Web

Information on the Web can be presented either passively (the user can only read it) or actively (the user can also add and edit information). Ways to actively present information on the World Wide Web include:

This division is largely conventional. A blog or a guest book, for example, can be considered a special case of a forum, which in turn is a special case of a content management system. The difference usually lies in the purpose, approach and positioning of a particular product.

Some information from websites can also be accessed through speech. India has begun testing a system that makes the text content of web pages accessible even to people who cannot read or write.

Organizations involved in the development of the World Wide Web and the Internet in general

Links

- Tim Berners-Lee's well-known book "Weaving the Web: The Original Design and Ultimate Destiny of the World Wide Web", available online (in English)

Literature

- Fielding, R.; Gettys, J.; Mogul, J.; Frystyk, H.; Masinter, L.; Leach, P.; Berners-Lee, T. (June 1999). "Hypertext Transfer Protocol -- HTTP/1.1". Request for Comments 2616. Information Sciences Institute.

- Berners-Lee, Tim; Bray, Tim; Connolly, Dan; Cotton, Paul; Fielding, Roy; Jeckle, Mario; Lilley, Chris; Mendelsohn, Noah; Orchard, David; Walsh, Norman; Williams, Stuart (December 15, 2004). "Architecture of the World Wide Web, Volume One". Version 20041215. W3C.

- Polo, Luciano. "World Wide Web Technology Architecture: A Conceptual Analysis". New Devices (2003). Retrieved July 31, 2005.


Wikimedia Foundation. 2010 .



The World Wide Web is sometimes ironically called the "Wild Wild Web", by analogy with the title of the film Wild Wild West.


Links


- Official website of the World Wide Web Consortium (W3C) (in English)

- Tim Berners-Lee, Mark Fischetti. Weaving the Web: The Original Design and Ultimate Destiny of the World Wide Web. New York: HarperCollins Publishers. 256 p. ISBN 0-06-251587-X, ISBN 978-0-06-251587-2 (in English)


When talking about the Internet, people often mean the World Wide Web. However, it is important to understand that these are not the same.

Structure and principles

The World Wide Web is made up of millions of Internet web servers located around the world. A web server is a computer program that runs on a computer connected to a network and uses the HTTP protocol to transfer data. In its simplest form, such a program receives an HTTP request for a specific resource over the network, finds the corresponding file on the local hard drive, and sends it over the network to the requesting computer. More sophisticated web servers are capable of generating documents dynamically in response to an HTTP request using templates and scripts.
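The request cycle described above can be sketched in Python. In its simple form the server receives a request, finds the resource and sends it back; a more sophisticated server builds the document from a template instead. This is a minimal illustration under stated assumptions, not how any particular web server works. There are no sockets, and the "hard drive" is a hypothetical in-memory dictionary `FILES`. The `/greet` path and its template are hypothetical examples of dynamic generation, and only `GET` is handled.

```python
from html import escape
from string import Template
from urllib.parse import parse_qs, urlsplit

# Hypothetical stand-in for files on the server's local hard drive.
FILES = {
    "/index.html": "<html><body><h1>Hello, Web</h1></body></html>",
}

# Hypothetical template used to generate a document dynamically.
GREETING = Template("<html><body><p>Hello, $name!</p></body></html>")


def make_response(code: int, reason: str, body: str, extra_headers: str = "") -> str:
    """Build a raw HTTP/1.1 response: status line, headers, blank line, body."""
    payload = body.encode("utf-8")
    return (
        f"HTTP/1.1 {code} {reason}\r\n"
        "Content-Type: text/html; charset=utf-8\r\n"
        f"Content-Length: {len(payload)}\r\n"
        + extra_headers
        + "Connection: close\r\n\r\n"
        + body
    )


def handle_request(raw_request: str) -> str:
    """Answer one raw HTTP request, either from FILES or from a template."""
    request_line = raw_request.split("\r\n", 1)[0]
    parts = request_line.split(" ")
    if len(parts) != 3 or not parts[2].startswith("HTTP/"):
        return make_response(400, "Bad Request", "<h1>400 Bad Request</h1>")
    method, target, _version = parts
    if method != "GET":
        # A 405 response should tell the client which methods are allowed.
        return make_response(405, "Method Not Allowed",
                             "<h1>405 Method Not Allowed</h1>", "Allow: GET\r\n")

    url = urlsplit(target)
    # Static case: find the matching "file" and send it back unchanged.
    if url.path in FILES:
        return make_response(200, "OK", FILES[url.path])
    # Dynamic case: fill the template with escaped data from the query string.
    if url.path == "/greet":
        name = parse_qs(url.query).get("name", ["stranger"])[0]
        return make_response(200, "OK", GREETING.substitute(name=escape(name)))
    return make_response(404, "Not Found", "<h1>404 Not Found</h1>")


if __name__ == "__main__":
    print(handle_request("GET /greet?name=Tim HTTP/1.1\r\nHost: example.org\r\n\r\n"))
```

Escaping the query value before inserting it into the template keeps user input from being interpreted as markup. A real server would also read requests from a socket, handle more methods and headers, and map paths onto an actual directory.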

To view information received from a web server, the client computer uses a special program called a web browser. The main function of a web browser is to display hypertext. The World Wide Web is inextricably linked with the concepts of hypertext and hyperlinks. Much of the information on the Web is hypertext.

HTML (HyperText Markup Language) is traditionally used to create, store and display hypertext on the World Wide Web. The work of creating (marking up) hypertext documents is called layout. It is done by a webmaster or by a separate markup specialist, a layout designer. After HTML markup, the resulting document is saved to a file, and such HTML files are the main type of World Wide Web resource. Once an HTML file is made available to a web server, it is referred to as a "web page". A set of web pages forms a website.

The hypertext of web pages contains hyperlinks. Hyperlinks help users of the World Wide Web navigate easily between resources (files), whether those resources are located on the local computer or on a remote server. Uniform resource locators (URLs) are used to determine the location of resources on the World Wide Web. For example, the full URL of the main page of the Russian-language Wikipedia looks like this: http://ru.wikipedia.org/wiki/Main_page. URLs combine URI (Uniform Resource Identifier) identification technology with the Domain Name System (DNS). The domain name in the URL (in this case, ru.wikipedia.org) denotes the computer, more precisely one of its network interfaces, that runs the desired web server. The URL of the current page can usually be seen in the browser's address bar. Many modern browsers, however, show only the domain name of the current site by default.
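Python's standard `urllib.parse` and `html.parser` modules can illustrate two of the ideas above. The first is how the example URL splits into its parts. The second is how a page's hyperlinks, including relative ones, are resolved against that page's own URL. This is a sketch only: the HTML fragment `SAMPLE_PAGE` is hypothetical, and `extract_links` is an illustrative helper, not what any particular browser does internally.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin, urlsplit

PAGE_URL = "http://ru.wikipedia.org/wiki/Main_page"

# Hypothetical hypertext fragment with a root-relative, a relative and an absolute link.
SAMPLE_PAGE = (
    '<p>See <a href="/wiki/HTTP">HTTP</a>, <a href="HTML">HTML</a> '
    'and <a href="https://www.w3.org/">the W3C</a>.</p>'
)


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved to an absolute URL."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Relative references are resolved against the page's own URL.
                self.links.append(urljoin(self.base_url, href))


def extract_links(html_text: str, base_url: str) -> List[str]:
    collector = LinkCollector(base_url)
    collector.feed(html_text)
    collector.close()
    return collector.links


if __name__ == "__main__":
    parts = urlsplit(PAGE_URL)
    # scheme picks the protocol, hostname is the DNS name, path names the resource.
    print(parts.scheme, parts.hostname, parts.path)
    for link in extract_links(SAMPLE_PAGE, PAGE_URL):
        print(link)
```

Only the host part of the URL is looked up in DNS. The path is passed to the web server running on that host, which decides what resource it names.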

Technology

CSS has come into wide use to improve the visual presentation of the Web; it allows uniform design styles to be set for many web pages. Another innovation worth noting is the URN (Uniform Resource Name) resource naming system.
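The difference between a URN, which names a resource, and a URL, which says where to fetch it, shows up in the identifiers themselves. The sketch below uses Python's standard `urllib.parse.urlsplit`. The helper `describe` is hypothetical. The URN example uses the `isbn` namespace with the ISBN of Tim Berners-Lee's book Weaving the Web.

```python
from urllib.parse import urlsplit


def describe(identifier: str) -> str:
    """Say whether an identifier names a resource (URN) or locates it (URL)."""
    parts = urlsplit(identifier)
    if parts.scheme == "urn":
        # urn:<namespace>:<namespace-specific string>; no host, nothing to fetch.
        namespace, _, specific = parts.path.partition(":")
        return f"name in namespace '{namespace}': {specific}"
    # A URL carries a host (resolved via DNS) and a path on that host.
    return f"location: host {parts.hostname}, path {parts.path or '/'}"


if __name__ == "__main__":
    print(describe("urn:isbn:0-06-251587-X"))  # a book, identified by name only
    print(describe("http://info.cern.ch/"))     # a page, identified by where it lives
```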

A popular concept for the development of the World Wide Web is the creation of the Semantic Web. The Semantic Web is an add-on to the existing World Wide Web, designed to make the information posted on the network more understandable to computers. It is the concept of a network in which each resource written in human language would be given a description understandable to a computer. The Semantic Web opens up access to clearly structured information for any application, regardless of platform and programming language. Programs would be able to find the necessary resources themselves, process information, classify data, identify logical relationships, draw conclusions, and even make decisions based on these conclusions. If widely adopted and implemented well, the Semantic Web has the potential to revolutionize the Internet. The Semantic Web uses the RDF format (Resource Description Framework) to create a computer-readable description of a resource. RDF can be expressed in XML syntax (RDF/XML) and uses URIs to identify resources. Newer developments in this area are RDFS (RDF Schema) and SPARQL (SPARQL Protocol and RDF Query Language, pronounced "sparkle"), a query language for fast access to RDF data.
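Two ideas from the paragraph above can be sketched in plain Python: describing resources as URI-identified statements, and querying them as SPARQL does. This is a toy model, not RDF or SPARQL themselves. Statements are (subject, predicate, object) tuples, and the `http://example.org/` URIs and predicate names are hypothetical. `query` only implements the core idea of matching several triple patterns that share variables (a basic graph pattern). Real work would use an RDF library and a SPARQL engine.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]
EX = "http://example.org/"  # hypothetical namespace, for illustration only

# Each statement is (subject, predicate, object); every term is a URI.
GRAPH: List[Triple] = [
    (EX + "TimBernersLee", EX + "authorOf", EX + "HTTP"),
    (EX + "TimBernersLee", EX + "authorOf", EX + "HTML"),
    (EX + "W3C", EX + "headedBy", EX + "TimBernersLee"),
]


def match(pattern: Triple, triple: Triple,
          binding: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Try to match one pattern against one triple.

    Terms starting with '?' are variables. Returns the extended binding,
    or None if the triple contradicts the pattern or the earlier binding.
    """
    result = dict(binding)
    for term, value in zip(pattern, triple):
        if term.startswith("?"):
            if result.get(term, value) != value:
                return None
            result[term] = value
        elif term != value:
            return None
    return result


def query(patterns: List[Triple], graph: List[Triple]) -> List[Dict[str, str]]:
    """Return every variable binding that satisfies all patterns at once."""
    bindings: List[Dict[str, str]] = [{}]
    for pattern in patterns:
        extended = []
        for binding in bindings:
            for triple in graph:
                candidate = match(pattern, triple, binding)
                if candidate is not None:
                    extended.append(candidate)
        bindings = extended
    return bindings


# Roughly what this SPARQL query asks:
#   PREFIX ex: <http://example.org/>
#   SELECT ?tech WHERE { ex:W3C ex:headedBy ?person . ?person ex:authorOf ?tech . }
if __name__ == "__main__":
    rows = query([(EX + "W3C", EX + "headedBy", "?person"),
                  ("?person", EX + "authorOf", "?tech")], GRAPH)
    for row in rows:
        print(row["?tech"])
```

Because every term is a URI, statements published by different sources can be merged into one graph and queried together. This is the property the Semantic Web relies on.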

History


Tim Berners-Lee and, to a lesser extent, Robert Cailliau are considered the inventors of the World Wide Web. Tim Berners-Lee is the author of the HTTP, URI/URL and HTML technologies. In 1980 he worked as a software consultant at the European Council for Nuclear Research (French: Conseil Européen pour la Recherche Nucléaire, CERN). It was there, in Geneva (Switzerland), that he wrote the Enquire program for his own needs ("Enquire" can be loosely translated as "Interrogator"). The program used random associations to store data and laid the conceptual foundation for the World Wide Web.

As part of the World Wide Web project, Berners-Lee wrote the world's first web server, called "httpd", and the world's first hypertext web browser, called "WorldWideWeb". The browser was also a WYSIWYG ("what you see is what you get") editor. Its development began in October 1990 and was completed in December of the same year. The program ran in the NeXTStep environment and began to spread over the Internet in the summer of 1991.

Mike Sendall buys a NeXT cube computer at this time in order to understand the features of its architecture, and then gives it to Tim [Berners-Lee]. Thanks to the sophistication of the NeXT cube's software system, Tim wrote a prototype illustrating the main points of the project in a few months. It was an impressive result: the prototype offered users, among other things, advanced features such as WYSIWYG browsing/authoring!... The only thing I insisted on was that the name should not, yet again, be taken from Greek mythology. Tim suggested "world wide web". I immediately liked everything about this name, except that it is difficult to pronounce in French.

The world's first website was hosted by Berners-Lee on August 6, 1991, on the first web server, available at http://info.cern.ch/. The site described the concept of the "World Wide Web" and contained instructions for setting up a web server, using a browser, and so on. It was also the world's first Internet directory, because Tim Berners-Lee later posted and maintained a list of links to other sites there.

The first photo to appear on the World Wide Web was of the parody filk band Les Horribles Cernettes. Tim Berners-Lee asked the bandleader for scans of the photos after the CERN Hardronic Festival.

Yet the theoretical foundations of the Web were laid long before Berners-Lee. Back in 1945, Vannevar Bush developed the concept of the Memex, an auxiliary mechanical device for "extending human memory". The Memex is a device in which a person stores all of their books and records and which retrieves the necessary information with sufficient speed and flexibility. Ideally, it would hold all of their knowledge that can be formally described. It is an extension of and supplement to human memory. Bush also predicted comprehensive indexing of texts and multimedia resources, with the ability to find the necessary information quickly. The next significant step towards the World Wide Web was the creation of hypertext (a term coined by Ted Nelson in 1965).

Since 1994, the main work on developing the World Wide Web has been carried out by the World Wide Web Consortium (W3C), founded and headed by Tim Berners-Lee. The consortium develops and implements technological standards for the Internet and the World Wide Web. Its mission is "to lead the World Wide Web to its full potential by developing protocols and guidelines that ensure the long-term growth of the Web." Two other major goals of the consortium are full "internationalization of the Web" and making the Web accessible to people with disabilities.

The W3C develops common principles and standards for the Internet, called "W3C Recommendations", which are then implemented by software and hardware manufacturers. This achieves compatibility between software products and equipment from different companies, making the World Wide Web more capable, versatile and convenient. All W3C Recommendations are open: anyone can implement them on a royalty-free basis, without any financial contribution to the consortium.

Development prospects

There are currently two main directions in the development of the World Wide Web: the Semantic Web and the social web.

- The Semantic Web involves improving the connectivity and relevance of information on the World Wide Web through the introduction of new metadata formats.

- The social web relies on users to organize the information available on the network.

Within the second direction, developments that belong to the Semantic Web are actively used as tools, such as RSS and other web feed formats, OPML, and XHTML microformats. Partially semantic sections of the Wikipedia category tree help users navigate the information space deliberately. However, the very loose requirements for subcategories give little reason to expect such sections to expand. In this regard, attempts to compile knowledge atlases may be of interest.
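Web feeds are one of the tools mentioned above: a feed is a machine-readable XML document listing a site's latest items. The minimal sketch below reads an RSS 2.0 feed with Python's standard `xml.etree.ElementTree`. It assumes a hypothetical feed `FEED` with plain, non-namespaced `<channel>` and `<item>` elements. Real-world feeds often need more robust handling, such as namespaces, Atom feeds and malformed XML.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Hypothetical RSS 2.0 feed, for illustration only.
FEED = """<rss version="2.0">
  <channel>
    <title>Example feed</title>
    <link>http://example.org/</link>
    <description>A hypothetical feed</description>
    <item>
      <title>First post</title>
      <link>http://example.org/posts/1</link>
    </item>
    <item>
      <title>Second post</title>
      <link>http://example.org/posts/2</link>
    </item>
  </channel>
</rss>"""


def read_items(xml_text: str) -> List[Tuple[str, str]]:
    """Return (title, link) pairs for every <item> in an RSS 2.0 channel."""
    root = ET.fromstring(xml_text)
    channel = root.find("channel")
    if root.tag != "rss" or channel is None:
        raise ValueError("not an RSS 2.0 document")
    return [
        (item.findtext("title", default=""), item.findtext("link", default=""))
        for item in channel.findall("item")
    ]


if __name__ == "__main__":
    for title, link in read_items(FEED):
        print(f"{title}: {link}")
```

Because the structure is fixed and machine-readable, any program can aggregate such feeds without scraping the pages they describe. That is why feeds count among the tools of the social web.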

There is also the popular concept of Web 2.0, which sums up several directions of development of the World Wide Web at once.

Ways to actively display information

The information presented on the web can be accessed:

- read-only ("passive");

- for reading and adding/modifying ("active").

Ways to actively display information on the World Wide Web include:

This division is largely conventional. A blog or a guest book, for example, can be considered a special case of a forum, which in turn is a special case of a content management system. The difference usually lies in the purpose, approach and positioning of a particular product.

Some information from websites can also be accessed through speech. India has begun testing a system that makes the text content of web pages accessible even to people who cannot read or write.

Security

Growth

Between 2005 and 2010, the number of web users doubled, reaching the two billion mark. Early studies from 1998 and 1999 found that search engines did not index most existing websites correctly and that the Web itself was larger than expected. As of 2001, more than 550 million web documents had been created, most of which, however, were in the invisible web. As of 2002, more than 2 billion web pages had been created. English accounted for 56.4% of all Internet content, followed by German (7.7%), French (5.6%) and Japanese (4.9%). Research conducted at the end of January 2005 identified and indexed over 11.5 billion web pages in 75 different languages on the open web. By March 2009, the number of pages had grown to 25.21 billion. On July 25, 2008, Google software engineers Jesse Alpert and Nissan Hajaj announced that the Google search engine had discovered one trillion unique URLs.


Computer science pays much attention to computer networks, the most prominent of which are the Internet and the World Wide Web. The Internet is a telecommunications network of computers. It is the basis of the World Wide Web (the Web), a system of interconnected documents located on various computers connected to the Internet. When the virtual nature of these documents needs emphasis, their totality is described as hyperspace. Clearly, the Internet, the World Wide Web and hyperspace form an inseparable trinity. Their subjects are not individuals but the community of network communication. Accordingly, the concepts of communication, group discourse and the social community of people come to the fore. Philosophers examined all these concepts long before the World Wide Web emerged at the end of the 1980s. The results of their analysis can shed light on the nature of the Internet and the Web. We present them as concisely as possible.

The concept of communication is the result of a complex process of understanding the nature of interactions between people. But it is not enough to say that people interact with each other; it is important to understand the conceptual content of such interaction. Acting as social beings, people seek to optimize their values. Communication is an exchange of values that results in agreement (consensus) or disagreement (dissensus). Hermeneutic philosophers (H.-G. Gadamer, J. Habermas) attach more ethical weight to consensus than to dissensus. The post-structuralists (J. Derrida, J.-F. Lyotard) hold the directly opposite view: for them, dissensus is ethically more significant than consensus. Neither side conceives of social reality without discourse, the exchange of value-laden judgments. Discourse always testifies to the presence of a certain community of people: the participants in a discourse are, by definition, not atoms claiming individual solitude.

From here on, then, we must keep in mind the inseparable trinity of concepts: communication, discourse and community of people. Moreover, each of them takes on a different guise depending on the kind of knowledge in question. These concepts are most often considered in the context of: 1) informatics; 2) management; 3) economics; 4) political science; 5) sociology; 6) psychology; 7) everyday knowledge.

Researchers do not always distinguish between these levels of knowledge. When they do not, the pursuit of universal values leads them into superficial claims such as "the Network is good" or "the Internet is evil". Such claims seem meaningful only at first glance. On closer examination they turn out to need specification, and that is impossible without drawing on the conceptual richness of the sciences. With this in mind, let's consider the Internet and the Web in the context of various sciences, as well as of non-scientific knowledge.

The Network from the standpoint of computer science

Of course, the phenomena that interest us have drawn on the full richness of computer science. But five "pillars" played a decisive role in the formation and development of the Web: hypertext, HTML, URL, HTTP and search engines.

Hypertext is a document that includes links to other texts. The term was coined by the American Ted Nelson, who introduced it in 1963 and first published it in 1965. The first feature of hypertext is that it branches rather than running linearly: knowledge is organized as a web of cross-references. Consequently, texts intersect, and this, as is well known, is a necessary sign of dialogue [1]. The remarkable achievement of the specialists who developed the concept of hypertext was to make it technologically possible to reproduce discourse in the form of intertextuality. The peculiarity of such discourse is that the initiative constantly passes from one person to another, and hypertext provides exactly this opportunity. At the beginning of the 20th century, the philosophers L. Wittgenstein and M. Heidegger initiated a linguistic turn under the motto "language is more important than mentality." As it unfolded, it also became clear that dialogue is more important than monologue. Intersecting texts are much richer, structurally and semantically, than a linear construction.

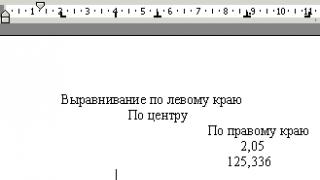

HTML (HyperText Markup Language) is the standard language for structuring and formatting documents on the Web. Browsers process text documents containing HTML code and display them in formatted form.
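To make the idea of markup more concrete, here is a minimal sketch of a small HTML page together with a program that finds its hyperlinks in roughly the way a browser must. The page content and the `extract_links` helper are hypothetical. The parsing relies on Python's standard `html.parser` module.

```python
from html.parser import HTMLParser

# A tiny hand-written HTML document (hypothetical content, for illustration only).
PAGE = """<!DOCTYPE html>
<html>
  <head><title>Example page</title></head>
  <body>
    <h1>Hello, Web</h1>
    <p>See the <a href="https://example.org/docs">docs</a>
       or the <a href="/about.html">about page</a>.</p>
  </body>
</html>"""


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag, i.e. the page's hyperlinks."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the tag's attributes.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value is not None:
                    self.links.append(value)


def extract_links(html: str) -> list[str]:
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


print(extract_links(PAGE))  # ['https://example.org/docs', '/about.html']
```

The markup itself says nothing about fonts or colors. It only marks structure (a heading, a paragraph, links), and the browser decides how to draw it.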

URL (Uniform Resource Locator) is a uniform locator of a resource on the Internet. Every resource is assigned a name by which it can be found on the Web and under which it responds.
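A URL packs several pieces of information into one string. The sketch below splits a made-up address into its parts with Python's standard `urllib.parse.urlsplit`. The address itself is a hypothetical example.

```python
from urllib.parse import urlsplit

# Hypothetical address used only for illustration.
url = "https://www.example.com:8080/docs/page.html?lang=en#intro"

parts = urlsplit(url)
print(parts.scheme)    # https            -> protocol used to fetch the resource
print(parts.hostname)  # www.example.com  -> domain name of the server
print(parts.port)      # 8080             -> TCP port on that server
print(parts.path)      # /docs/page.html  -> location of the resource on the server
print(parts.query)     # lang=en          -> extra parameters for the server
print(parts.fragment)  # intro            -> a spot inside the page, used by the browser
```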

HTTP (HyperText Transfer Protocol) is the hypertext transfer protocol. The consumer (client) sends a request to the provider (server), which performs the necessary actions and sends back a message with the result. In both the request and the response, the resource is identified using a standard encoding scheme.
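HTTP messages are plain text with a fixed layout. The sketch below builds a minimal HTTP/1.1 GET request and reads the status line of a response. The host, the path and the sample response are hypothetical and written by hand, not captured from a real server. No network connection is made.

```python
def build_get_request(host: str, path: str) -> bytes:
    """Builds a minimal HTTP/1.1 GET request as it would travel over the network."""
    lines = [
        f"GET {path} HTTP/1.1",   # method, resource, protocol version
        f"Host: {host}",          # the Host header is required in HTTP/1.1
        "Connection: close",
        "",                       # an empty line ends the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_status_line(response: bytes) -> tuple[str, int, str]:
    """Extracts protocol version, status code and reason phrase from a raw response."""
    status_line = response.split(b"\r\n", 1)[0].decode("ascii")
    version, code, reason = status_line.split(" ", 2)
    return version, int(code), reason


# A hand-written sample response (hypothetical, not from a real server).
SAMPLE_RESPONSE = (
    b"HTTP/1.1 200 OK\r\n"
    b"Content-Type: text/html; charset=utf-8\r\n"
    b"Content-Length: 13\r\n"
    b"\r\n"
    b"<p>Hello</p>\n"
)

request = build_get_request("www.example.com", "/index.html")
print(request.decode("ascii"))
print(parse_status_line(SAMPLE_RESPONSE))  # ('HTTP/1.1', 200, 'OK')
```

In a real exchange the client writes these request bytes to a TCP connection and reads the response bytes back. The status code (200 here) tells it whether the server found the resource.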

The concepts of HTML, URL and HTTP were developed in 1990-1992 by the creator of the World Wide Web, the British scientist Tim Berners-Lee. His genius showed itself above all in a deep understanding of the conceptual structure of the Web.

A search engine is a hardware and software complex that makes it possible to search for documents on the Internet. The software part that provides this functionality is called the search engine core (or simply the engine). The main criterion of a search engine's quality is relevance, i.e. the degree to which the documents found match the query. According to numerous surveys, Google is by far the most popular search engine. There is, of course, no universal search engine, and different search strategies lead to new knowledge. It is always important to remember that no search is carried out at random: it is always tied to some decision to be made. Thus, a search launches a mechanism for synthesizing new knowledge. This is impossible without communication with other participants in the Network and, therefore, without the formation of some virtual community of people, for example, adherents of the Yandex search engine, which is so popular on the Runet. As we can see, the concepts of communication, discourse and community of people take on a specific form in computer science.
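Relevance can be illustrated with a toy ranking function. The sketch below scores a few hypothetical "pages" against a query using TF-IDF, a classic textbook term-weighting scheme. This is an assumption chosen for illustration only. It is not how Google, Yandex or any real engine actually ranks results, since their methods are far more elaborate and largely unpublished.

```python
import math
import re
from collections import Counter

# Hypothetical mini-collection of "web pages".
DOCS = {
    "page1": "HTTP is the protocol browsers use to fetch web pages",
    "page2": "HTML is the markup language of web pages",
    "page3": "Recipes for apple pie and apple jam",
}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


def rank(query: str, docs: dict[str, str]) -> list[tuple[str, float]]:
    """Scores documents with a simple TF-IDF sum and returns them best first."""
    tokenized = {name: tokenize(text) for name, text in docs.items()}
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for words in tokenized.values() for term in set(words))
    scores = []
    for name, words in tokenized.items():
        tf = Counter(words)
        score = 0.0
        for term in tokenize(query):
            if df[term]:
                # Rare terms weigh more; +1 keeps terms found everywhere above zero.
                idf = math.log(n_docs / df[term]) + 1.0
                score += tf[term] / len(words) * idf
        scores.append((name, score))
    return sorted(scores, key=lambda item: item[1], reverse=True)


print(rank("html markup", DOCS))
```

For the query "html markup" only `page2` receives a non-zero score. For "web pages" both `page1` and `page2` match, and the shorter `page2` ranks first because the query terms make up a larger share of its text.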

The conceptual foundations of the Internet and the Web have been discussed above. Of course, all of them have undergone, and continue to undergo, numerous metamorphoses. HTML, URL, HTTP, search engines and browsers all have numerous competitors. To understand their history, one has to reconstruct the relevant sequence of problems and their interpretations. Our aim was to identify the main conceptual nodes of the Network, which belong directly to informatics itself.

Hello, dear readers of the blog. We all live in the era of the global Internet and use the terms site, web and www (World Wide Web, the global network) quite often, without really thinking about what they mean.

I notice the same thing with other authors and even in everyday conversation. “Website”, “Internet”, “network” or the abbreviation “WWW” have become such common words for us that it doesn’t even occur to us to think about what they really are. Yet the first website was born only some twenty years ago. So what is the Internet?

The Internet does have a rather long history, but before the advent of the global web (WWW), 99.9% of the world's inhabitants did not even suspect it existed, because it was the domain of specialists and enthusiasts. Now even the Eskimos know about the World Wide Web; in their language, this word is associated with the ability of shamans to find answers in the layers of the universe. So let's figure out for ourselves what the Internet, a website, the World Wide Web and everything else really are.

What the Internet is and how it differs from the World Wide Web (WWW)

The most remarkable fact that can be stated today is that the Internet has no owner. It is essentially an interconnection of individual local networks, made possible by common standards adopted long ago (namely, the TCP/IP protocol suite), and it is maintained by network providers.
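The common TCP/IP standard is what lets any two machines exchange data, whoever owns them. As a minimal sketch, the program below runs a TCP server and a TCP client on one computer over the loopback address 127.0.0.1, which stands in for two separate hosts. The server echoes back what the client sends. The function names are hypothetical, and only Python's standard `socket` and `threading` modules are used.

```python
import socket
import threading


def echo_once(server_sock: socket.socket) -> None:
    """Accepts one TCP connection, reads everything the client sends, and echoes it."""
    conn, _addr = server_sock.accept()
    with conn:
        received = b""
        while True:
            chunk = conn.recv(1024)
            if not chunk:  # the client has signalled it finished sending
                break
            received += chunk
        conn.sendall(received)


def tcp_round_trip(message: bytes) -> bytes:
    # Listen on the loopback interface; port 0 lets the OS pick a free port.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        host, port = server.getsockname()
        worker = threading.Thread(target=echo_once, args=(server,))
        worker.start()
        # Client side: connect, send, say "done", then read until the server closes.
        with socket.create_connection((host, port)) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)
            reply = b""
            while True:
                chunk = client.recv(1024)
                if not chunk:
                    break
                reply += chunk
        worker.join()
    return reply


print(tcp_round_trip(b"hello over TCP/IP"))  # b'hello over TCP/IP'
```

On the real Internet the only difference is that the two endpoints sit on different networks, and routers belonging to different providers relay the packets between them.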

Some believe that, because of ever-growing media traffic (video and other heavy content moving across the network by the ton), the Internet will soon collapse under its currently limited bandwidth. The main problem here is upgrading the network equipment that makes up the global web to faster hardware, which is held back above all by the extra costs involved. But I think the problem will be solved as the threat of collapse becomes real, and some network segments already operate at high speeds.

In general, given that the Internet essentially belongs to nobody, it is worth mentioning that many states, in trying to introduce censorship on the global network, want to equate it (namely, its currently most popular component, the WWW) with the mass media.

But this desire has no real basis, because the Internet is just a means of communication or, in other words, a medium for carrying information, comparable to the telephone or even plain paper. Try imposing sanctions on paper or on its distribution around the planet. In fact, individual states can only apply sanctions to particular sites (islands of information on the network) that users reach through the World Wide Web.

The first steps toward creating the global web and the Internet were taken... in what year, do you think? Surprisingly, as far back as 1957. Naturally, it was the military (and, of course, the United States, where would we be without them) that needed such a network for communication in the event of a war with nuclear weapons. Creating the network took quite a long time (about 12 years), which is understandable given that computers were then in their infancy.

Nevertheless, their power was quite enough to make it possible, by 1971, to exchange e-mail between military departments and leading US universities. Thus, the e-mail transfer protocol became the first way to use the Internet for users' own needs. A couple of years later, people across the ocean learned what the Internet was too. By the early 1980s, the main data transfer protocols (mail among them) had been standardized, as had the protocol for the so-called Usenet newsgroups, which was similar to mail but made it possible to organize something resembling forums.

A few years later, the idea of a domain name system emerged (DNS, which would play a crucial role in the development of the WWW). At about the same time came the world's first protocol for real-time communication over the Internet, IRC (colloquially "irka" among Russian speakers). It let people chat online. This was science fiction, accessible and interesting to only a very, very small number of the inhabitants of planet Earth. But that was only for the time being.
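DNS turns a human-readable domain name into an IP address by walking down a hierarchy of name servers: the root, then the top-level domain, then the domain's own server. The toy model below imitates that delegation with in-memory dictionaries. All zones, server labels and addresses are hypothetical (the address is from the 192.0.2.0/24 documentation range). Real DNS also involves network queries, caching and many record types, which this sketch ignores.

```python
# A toy model of DNS delegation; every zone, name and address here is hypothetical.
ZONES = {
    ".": {"com.": "tld-com"},                           # root delegates "com."
    "tld-com": {"example.com.": "example-ns"},          # .com delegates example.com.
    "example-ns": {"www.example.com.": "192.0.2.10"},   # authoritative address record
}


def resolve(name: str) -> str:
    """Walks from the root down the delegation chain, like an iterative resolver."""
    fqdn = name.rstrip(".") + "."
    labels = fqdn[:-1].split(".")
    zone = "."
    # Ask about ever longer suffixes: "com.", "example.com.", "www.example.com."
    for i in range(len(labels) - 1, -1, -1):
        suffix = ".".join(labels[i:]) + "."
        answer = ZONES[zone].get(suffix)
        if answer is None:
            raise LookupError(f"{suffix} not found in zone {zone!r}")
        if answer in ZONES:
            zone = answer  # a referral: continue with the next name server
        else:
            return answer  # the final address record
    raise LookupError(f"no address record for {name}")


print(resolve("www.example.com"))  # 192.0.2.10
```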

At the turn of the 1980s and 1990s, events took place in the development of the network that were so significant they effectively predetermined its future. On the whole, the global network owes its current place in the minds of the planet's inhabitants almost entirely to one person, Tim Berners-Lee.

Berners-Lee is an Englishman born to two mathematicians who devoted their lives to building one of the world's first computers. It was thanks to him that the world learned what the Internet, a website, e-mail and so on are. He originally created the World Wide Web (WWW) for the needs of nuclear research at CERN (home of the famous collider). The task was to conveniently place all of the group's scientific information on their own network.

To solve this problem, he came up with everything that now forms the fundamental elements of the WWW (what we call the Internet without quite understanding its essence). He based it on a principle of organizing information called hypertext. What is it? This principle had been invented long before and consisted in organizing text so that the linearity of the narrative was replaced by the ability to navigate along different links.

The Internet is hypertext, hyperlinks, URLs and hardware

Thanks to this, hypertext can be read in different sequences, yielding various versions of linear text (to you, as experienced Internet users, this must now seem plain and obvious, but at the time it was a revolution). The nodes of the hypertext were to be hyperlinks, which we now simply call links.

As a result, all the information that now exists in computers can be represented as one large hypertext containing countless nodes (hyperlinks). Everything Tim Berners-Lee developed was transferred from CERN's local network to what we today call the Internet, after which the Web began to gain popularity at a frantic pace (the first fifty million users of the World Wide Web were registered in just over its first five years).
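The claim that one hypertext can be read in many different sequences is easy to see on a small link graph. In the sketch below, each hypothetical document lists the documents it links to. The function enumerates every path a reader could follow from the start page without revisiting a page. Each path is one "linear text" extracted from the same hypertext.

```python
# A hypothetical hypertext: each document lists the documents it links to.
HYPERTEXT = {
    "home": ["history", "protocols"],
    "history": ["cern", "protocols"],
    "protocols": ["cern"],
    "cern": [],
}


def reading_paths(start: str, graph: dict[str, list[str]]) -> list[list[str]]:
    """Lists every link-following path from `start` that never revisits a page."""
    paths = []

    def walk(node: str, path: list[str]) -> None:
        path = path + [node]
        next_nodes = [n for n in graph[node] if n not in path]
        if not next_nodes:
            paths.append(path)  # the reader stops: no unvisited links remain
        for n in next_nodes:
            walk(n, path)

    walk(start, [])
    return paths


for p in reading_paths("home", HYPERTEXT):
    print(" -> ".join(p))
# home -> history -> cern
# home -> history -> protocols -> cern
# home -> protocols -> cern
```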

But to implement the principle of hypertext and hyperlinks, several things had to be created from scratch at once. First, a new data transfer protocol was needed. It is now known to all of you as HTTP: you will find it, or its secure version HTTPS, at the beginning of every website address.

Second, the HTML markup language was developed from scratch; its abbreviation is now known to every webmaster in the world. So we got tools for transferring data and for creating sites (sets of web pages or web documents). But how do you refer to these documents?

The URI and the URL were devised for this. The first made it possible to identify a document on a particular server (site). The second added to the URI either a domain name or an IP address. A domain name is registered and uniquely indicates that the document belongs to a website hosted on a specific server. An IP address is a unique numeric identifier of every device on a global or local network.
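Combining a document's path on a server with a domain name or an IP address can be sketched as follows. The `make_url` helper, the host names and the addresses are hypothetical (documentation-range addresses). The standard `ipaddress` module distinguishes IP literals from domain names, and `urllib.parse.urlunsplit` assembles the final URL.

```python
import ipaddress
from urllib.parse import urlunsplit


def make_url(host: str, path: str, scheme: str = "http") -> str:
    """Combines a host (domain name or IP address) with a document path into a URL."""
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        netloc = host  # not an IP literal, so treat it as a domain name
    else:
        # IPv6 literals must be wrapped in square brackets inside a URL.
        netloc = f"[{ip}]" if ip.version == 6 else str(ip)
    return urlunsplit((scheme, netloc, path, "", ""))


print(make_url("www.example.com", "/docs/page.html"))  # http://www.example.com/docs/page.html
print(make_url("192.0.2.10", "/docs/page.html"))       # http://192.0.2.10/docs/page.html
print(make_url("2001:db8::1", "/docs/page.html"))      # http://[2001:db8::1]/docs/page.html
```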

Only one step remained for the World Wide Web (WWW) to actually work and become popular with users. Can you guess which one?

Well, of course, a program was needed that could display on the user's computer the contents of any web page requested on the Internet (by its URL). That program became the browser. Today there are not that many major players in this market, and I have written about all of them in a short review:

- Internet Explorer (IE, MSIE) - the old guard is still in the ranks

- Mozilla Firefox - another veteran that is not going to give up its position without a fight

- Google Chrome - an ambitious newcomer that managed to take the lead in the shortest possible time

- - a browser loved by many on the Runet, but gradually losing popularity

- - an envoy from Apple's workshop

Timothy John Berners-Lee wrote the world's first web browser himself and, without further ado, called it WorldWideWeb. It was far from perfect, but it was with this browser that the World Wide Web began its triumphant march around the planet.

On the whole, it is striking that all the necessary tools of the modern Internet (meaning its most popular component, the Web) were created by one person in such a short time. Bravo.

A little later came Mosaic, the first widely popular graphical browser, from which many modern browsers (Mozilla and Explorer) also descend. It was Mosaic that finally sparked the interest of ordinary inhabitants of planet Earth in the Internet (namely, the World Wide Web). A graphical browser is a completely different matter from a text browser: everyone loves to look at pictures, and only a few like to read.

Remarkably, Berners-Lee never received the enormous sums that some others made from the Internet, although he probably did more for the global network than they did.

Over time, cascading style sheets (CSS) appeared alongside the HTML language developed by Berners-Lee. Thanks to them, some HTML tags and attributes were no longer needed: they were replaced by the much more flexible tools of cascading style sheets. This greatly increased the attractiveness and design flexibility of the sites being created today. The rules of CSS are, of course, harder to learn than the markup language itself. But beauty requires sacrifice.

How are the Internet and the global network arranged from the inside?

But let's see what the web (www) is and how information is published on the Internet. Here we come face to face with the very phenomenon called a website (web means a net, and site means a place). So, what is a “place on the web” (the analogue of a place under the sun in real life), and how do you actually get one?

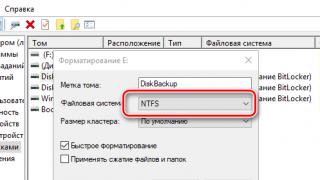

What is the Internet? It consists of the devices that form the communication channels (routers, switches), which are invisible to users and of little importance to them. The WWW (what we call the Web or the World Wide Web) consists of millions of web servers. These are programs running on slightly modified computers, which in turn must be connected to the global network around the clock (24/7) and use the HTTP protocol to communicate.

The web server (a program) receives a request to open a document hosted on that same server, most often from the browser of a user who has clicked a link or typed a URL into the address bar. In the simplest case, the document is a physical file (with the .html extension, for example) stored on the server's hard drive.
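The simplest case, a web server handing out a physical .html file from disk, can be sketched with Python's standard library. The example writes a hypothetical `index.html` into a temporary directory and serves it with `http.server` on the loopback address. It then requests the file with `http.client`, playing the browser's role. This is a local demonstration, not a production server setup.

```python
import functools
import http.client
import tempfile
import threading
from http.server import HTTPServer, SimpleHTTPRequestHandler
from pathlib import Path


def fetch_static_page() -> str:
    with tempfile.TemporaryDirectory() as site_root:
        # The "document" is just a physical .html file on the server's disk.
        Path(site_root, "index.html").write_text(
            "<h1>Hello from a static file</h1>", encoding="utf-8"
        )
        # Serve files from site_root; port 0 asks the OS for any free port.
        handler = functools.partial(SimpleHTTPRequestHandler, directory=site_root)
        server = HTTPServer(("127.0.0.1", 0), handler)
        thread = threading.Thread(target=server.serve_forever, daemon=True)
        thread.start()
        try:
            port = server.server_address[1]
            # Play the browser's role: request the document by its path.
            conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
            conn.request("GET", "/index.html")
            body = conn.getresponse().read().decode("utf-8")
            conn.close()
            return body
        finally:
            server.shutdown()
            server.server_close()


print(fetch_static_page())  # <h1>Hello from a static file</h1>
```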

In a more complex case (when using ), the requested document will be generated programmatically on the fly.
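The text does not name the technology used to generate pages on the fly. As one possible illustration, the sketch below uses a Python WSGI application, an interface defined in PEP 3333, that builds an HTML page anew for every request. The app and the `/news` path are hypothetical. The app is called directly with a test environment from the standard `wsgiref.util` module instead of through a real server.

```python
from datetime import datetime, timezone
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """A WSGI application: the HTML page is generated on the fly for each request."""
    path = environ.get("PATH_INFO", "/")
    now = datetime.now(timezone.utc).strftime("%Y-%m-%d %H:%M UTC")
    body = f"<h1>You asked for {path}</h1><p>Generated at {now}</p>"
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [body.encode("utf-8")]


def call_app(path: str) -> tuple[str, str]:
    """Calls the app without a network, the way a WSGI server would."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fills in the other required WSGI keys
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response)).decode("utf-8")
    return captured["status"], body


status, html = call_app("/news")
print(status)  # 200 OK
print(html)
```

Unlike the static file, no document named `/news` exists anywhere on disk. The page exists only for the moment it is produced.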

To view the requested page of a site, the client (user) side uses special software called a browser. It renders the downloaded hypertext in readable form on the device where the browser is installed (PC, phone, tablet, etc.). All in all, it is simple, as long as you don't go into details.
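What "rendering in readable form" means can be hinted at with a very crude text-mode renderer, loosely in the spirit of early text browsers. The sketch below, built on the standard `html.parser` module, drops invisible `<script>`/`<style>` content, collapses whitespace and starts a new line at block elements. The class, the set of block tags and the sample page are hypothetical simplifications. Real browsers do vastly more (layout, CSS, images, scripts).

```python
from html.parser import HTMLParser


class TextRenderer(HTMLParser):
    """Turns HTML into plain lines of text, a crude imitation of a text-mode browser."""

    BLOCK_TAGS = {"p", "h1", "h2", "h3", "li", "div", "br", "title"}

    def __init__(self) -> None:
        super().__init__()
        self.lines: list[str] = []
        self.current: list[str] = []
        self.skip_depth = 0  # > 0 while inside <script> or <style>

    def _flush(self) -> None:
        # Collapse runs of whitespace, as browsers do, and emit one line.
        text = " ".join("".join(self.current).split())
        if text:
            self.lines.append(text)
        self.current = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1
        elif tag in self.BLOCK_TAGS:
            self._flush()

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag in self.BLOCK_TAGS:
            self._flush()

    def handle_data(self, data):
        if not self.skip_depth:
            self.current.append(data)

    def render(self, html: str) -> str:
        self.feed(html)
        self.close()
        self._flush()
        return "\n".join(self.lines)


SAMPLE_PAGE = (
    "<html><head><title>Demo</title><style>h1{color:red}</style></head>"
    "<body><h1>Welcome</h1><p>This is   <b>hypertext</b> with a "
    "<a href='/next'>link</a>.</p></body></html>"
)

print(TextRenderer().render(SAMPLE_PAGE))
# Demo
# Welcome
# This is hypertext with a link.
```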

Previously, each individual website was hosted physically on a separate computer. This was mainly due to the weak computing power of the PCs available at that time. But in any case, a computer with a web server program and a website hosted on it must be connected to the Internet around the clock. It is quite difficult and expensive to do this at home, so they usually use the services of specialized hosting companies to store web sites.

Because of the popularity of the WWW, hosting services are now in high demand. As modern PCs grew more powerful, hosting providers became able to place many websites on one physical computer (virtual hosting). Hosting a single site on its own physical machine became known as a dedicated server service.

With virtual hosting, all the websites on one computer (the server) can share a single IP address, or each can have its own. This does not change the essence and can affect a website hosted there only indirectly: a bad neighborhood on the same IP can have a negative effect, since search engines sometimes tar everyone with the same brush.
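When many sites share one IP address, the server has to work out which site a request is for. In name-based virtual hosting it does this from the HTTP `Host` header that the browser sends. Below is a minimal sketch of that dispatch as a Python WSGI app, called directly without a network. The site names use the reserved `.example` domain and are hypothetical. Stripping the port with `split(":")` assumes domain names, not IPv6 literals.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical sites sharing one server and one IP address.
SITES = {
    "cats.example": "<h1>All about cats</h1>",
    "dogs.example": "<h1>All about dogs</h1>",
}


def virtual_host_app(environ, start_response):
    """Chooses the site from the Host header, since the IP address is shared."""
    host = environ.get("HTTP_HOST", "").split(":")[0].lower()  # drop any :port
    page = SITES.get(host)
    if page is None:
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"Unknown site"]
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [page.encode("utf-8")]


def request(host: str) -> tuple[str, str]:
    """Simulates a request that arrives at the shared IP with the given Host header."""
    environ = {"HTTP_HOST": host}
    setup_testing_defaults(environ)  # fills in the other required WSGI keys
    result = {}

    def start_response(status, headers):
        result["status"] = status

    body = b"".join(virtual_host_app(environ, start_response)).decode("utf-8")
    return result["status"], body


print(request("cats.example"))       # ('200 OK', '<h1>All about cats</h1>')
print(request("dogs.example:8080"))  # ('200 OK', '<h1>All about dogs</h1>')
print(request("birds.example"))      # ('404 Not Found', 'Unknown site')
```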

Now let's talk a little about website domain names and their importance on the World Wide Web. Every resource on the Internet has its own domain name. Moreover, the same site can have several domain names (mirrors or aliases), and, conversely, the same domain name can be used for many resources.

Some large resources also use mirrors. In this case, the site's files may be located on different physical computers, and the copies may have different domain names. But these are all nuances that only confuse beginners.