Good mood to all readers of the site!

Here are the main Google filters and the ways to get a site out from under them.

Like Yandex, the Google search engine has a number of filters that it applies to web resources promoted by optimizers. In general, Google and Yandex filters are based on similar principles. However, the ways to get a site out from under a particular filter still differ.

So let's get started. Let's try to figure out what filters Google has and what to do when a site gets pessimized.

1. The most common Google filter is the “Sandbox”. It is applied automatically to young sites, and such a web resource may stay out of the index for up to 3 months.

How to get out of the Sandbox filter: link the site's pages to each other. You can also buy links, but only very carefully, so as not to fall under other sanctions (“Many links at once”).

When buying links, choose donor sites very carefully, paying attention to an indicator such as Trust Rank (the degree of trust Google has in a web resource). Alternatively, you can simply wait until Google itself deems the site “suitable”.

2. The next Google filter is “Domain Name Age”. It can be applied to sites whose domain names are less than 1 year old and which therefore have virtually no trust from search engines. Google ranks such resources poorly, which prevents their positions from being inflated artificially.

How to get out of the “Domain Name Age” filter: try buying links from old sites and trusted resources. You can also try moving to a domain that is already more than 1 year old.

3. “Supplementary Results”. This filter is applied when a site has pages that duplicate each other. Google moves such pages into a separate group called “Additional Search Results”, so users almost never reach them.

How to get out of the “Supplementary Results” filter: either remove the duplicate pages or place unique, useful content on each page.

4. The “Bombing” filter. As a rule, it is applied when a large number of links from other resources point to the site with the same anchors. The search engine simply ignores these links, so the site's position does not grow.

How to get out of the “Bombing” filter: remove some of the links with identical anchors. Instead, buy links with unique anchors that the search engine has not seen before.
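To see whether one anchor dominates a backlink profile, you can compute each anchor's share of all links. This is a minimal sketch that assumes you already have a list of anchor texts (for example, exported from a backlink tool); the 30% cut-off in `looks_like_bombing` is a hypothetical threshold for illustration, not a figure published by Google.

```python
from collections import Counter


def anchor_shares(anchors: list[str]) -> list[tuple[str, float]]:
    """Return (anchor, share of all links) pairs, most frequent anchor first."""
    # Normalize case and whitespace so "Buy Shoes" and " buy  shoes" count as one anchor.
    normalized = [" ".join(a.split()).lower() for a in anchors if a.strip()]
    if not normalized:
        return []
    total = len(normalized)
    return [(text, count / total) for text, count in Counter(normalized).most_common()]


def looks_like_bombing(anchors: list[str], max_share: float = 0.3) -> bool:
    """Hypothetical heuristic: flag the profile if a single anchor exceeds max_share."""
    shares = anchor_shares(anchors)
    return bool(shares) and shares[0][1] > max_share
```

For a profile of eight “buy shoes” links plus one “example.com” and one “here”, the top anchor holds 80% of the links, so the heuristic flags it; diluting with synonyms and branded or generic anchors lowers that share.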

5. The “Bowling” filter. While the filters described above are usually triggered by the optimizer's own actions, “Bowling” is often the work of unscrupulous competitors who steal content, send complaint letters, manipulate behavioral factors, place links on banned sites, etc. This filter is very dangerous for young sites that do not yet have much trust from the search engine (low Trust Rank).

How to get out of the “Bowling” filter: there is only one way. Write an explanatory letter to Google's specialists, describe the whole situation and try to prove that you are in the right.

6. The “Broken Links” filter. It is applied when a site has a large number of links that return a 404 error. In this case, the resource almost always loses positions in the search results.

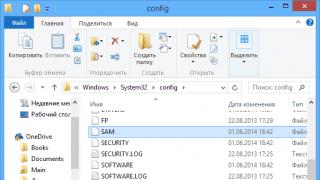

How to get out of the “Broken Links” filter: check all the links on the site and find the broken ones. You can detect them with the Xenu Link Sleuth program or in the Yandex.Webmaster panel.
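If you prefer a script to a desktop tool, the same check can be sketched with Python's standard library: collect the links on a page, then request each one and keep those that fail or answer with a 4xx/5xx status. This is a minimal single-page sketch, not a replacement for a full crawler like Xenu; the function names are illustrative.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip in-page anchors and non-HTTP schemes that cannot return a 404.
        if href and not href.startswith(("#", "mailto:", "javascript:", "tel:")):
            self.links.append(urljoin(self.base_url, href))


def extract_links(html: str, base_url: str) -> list[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the HTTP status code for url, or None if the server could not be reached."""
    try:
        # A HEAD request asks for headers only, so the page body is not downloaded.
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as error:  # 4xx/5xx responses arrive as exceptions
        return error.code
    except OSError:  # URLError, timeouts and connection resets are all OSError subclasses
        return None


def find_broken_links(html: str, base_url: str) -> list[tuple[str, Optional[int]]]:
    """Check every unique link on the page; return those that fail or answer >= 400."""
    broken = []
    for url in dict.fromkeys(extract_links(html, base_url)):  # de-duplicate, keep order
        status = http_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```

Some servers reject HEAD requests with a 405 status, so such results are worth rechecking by hand before removing the link.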

7. The “Too Many Links at once” filter (also called “many links at once”). If the search engine detects a lot of new links to a web resource within a short period of time, it will most likely apply this filter to the site. In that case, Google may ignore all the purchased links completely.

How to get out of the “Too Many Links at once” filter: build links gradually, starting with a minimal number and increasing it over time.
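To keep link growth gradual, you can record how many new backlinks the site gains each week (from a backlink tool or your own purchase log) and flag sudden jumps. A minimal sketch; the 4-week window and the 3x factor are hypothetical values chosen for illustration, not Google thresholds.

```python
from statistics import mean


def flag_link_spikes(weekly_new_links: list[int], window: int = 4, factor: float = 3.0) -> list[int]:
    """Return indices of weeks whose new-backlink count exceeds factor x the trailing average."""
    spikes = []
    for week in range(window, len(weekly_new_links)):
        baseline = mean(weekly_new_links[week - window:week])
        # max(baseline, 1) stops a site with almost no links from flagging every single new link.
        if weekly_new_links[week] > factor * max(baseline, 1):
            spikes.append(week)
    return spikes
```

For example, `flag_link_spikes([5, 6, 5, 4, 30, 6])` returns `[4]`: the fifth week's 30 new links are six times the previous four-week average of 5.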

8. The “Links” filter (aimed at so-called “link dumps”). If one page of a site carries a lot of outgoing links, Google will definitely apply this filter and simply ignore all of those links. As a result, not only will the partners' positions not grow, but the donor site itself may also lose a lot of its position in Google search.

The way out of the “Links” filter is very simple: if a page has more than 25 outgoing links, delete that page or hide it from indexing.
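To find pages that cross this limit, you can count the unique links on a page that point to other hosts. A minimal sketch using Python's standard HTML parser; the limit of 25 is the article's figure, not a documented Google rule, and the page URLs in the usage note are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class OutgoingLinkCounter(HTMLParser):
    """Collects unique links on a page that point to other hosts."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.own_host = urlparse(page_url).hostname
        self.external: set[str] = set()

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href") if tag == "a" else None
        if not href:
            return
        target = urlparse(urljoin(self.page_url, href))
        # Only http(s) links to a different host count as outgoing.
        # Note: www.example.com and example.com are treated as different hosts here.
        if target.scheme in ("http", "https") and target.hostname != self.own_host:
            self.external.add(target.geturl())


def count_outgoing_links(html: str, page_url: str) -> int:
    counter = OutgoingLinkCounter(page_url)
    counter.feed(html)
    return len(counter.external)


def exceeds_outgoing_limit(html: str, page_url: str, limit: int = 25) -> bool:
    """True if the page has more unique outgoing links than the article's limit of 25."""
    return count_outgoing_links(html, page_url) > limit
```

Run it over the HTML of each page (for example, a hypothetical `https://example.com/links.html`) and review the pages where `exceeds_outgoing_limit` returns `True`.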

9. The “Page Load Time” filter. It is applied to pages that take a very long time to load when a search robot requests them. The site simply starts losing positions in the search results.

How to get out of the “Page Load Time” filter: get rid of “heavy” website elements that bloat the pages' source code.

You can also use additional modules that are provided for the site.

10. The “Co-citation Linking Filter”. It is used when many off-topic sites link to a resource. Google simply lowers the rankings of such sites.

How to get out of the Co-citation Linking Filter: find such links and ask the site owners to remove them. From then on, get links only from thematic resources.

11. The “Omitted Results” filter. If a site has non-unique content, duplicate pages, few outgoing links and weak internal linking, it is likely to fall under this filter. Such pages appear only in the extended search results.

How to get out of the “Omitted Results” filter: remove duplicates, improve internal linking, upload unique content to the site and buy links from thematic resources.

12. The “-30” filter. These sanctions are imposed on sites that use hidden redirects, doorways (sites that send visitors on to this site) and cloaking. The name says it all: the site is lowered in the search results by 30 positions.

How to get out of the “-30” filter: eliminate all the doorways and other tricks that feed the search engine false information.

13. The “Duplicate Content” filter. Naturally, it is applied to non-unique content. Such pages are pessimized by Google or simply removed from the search results.

How to get out of the “Duplicate Content” filter: post only unique content on the website. You can try to protect it from theft with various scripts, although this does not always help.

14. The “Over Optimization” filter. It is applied when a site is oversaturated with keywords. Such pages are removed from the search results or severely pessimized.

How to get out of the “Over Optimization” filter: make sure that keyword density on the pages does not exceed 5-7%.
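Keyword density is usually computed as the share of a page's words taken up by the key phrase. Tools define it slightly differently (some count phrase occurrences rather than words), so treat this as one reasonable sketch; the 7% limit is the upper end of the article's 5-7% rule of thumb, not a documented Google threshold.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Share of the text's words (in %) taken up by non-overlapping occurrences of phrase."""
    words = re.findall(r"\w+", text.lower())
    key = re.findall(r"\w+", phrase.lower())
    if not words or not key:
        return 0.0
    n, i, hits = len(key), 0, 0
    while i <= len(words) - n:
        if words[i:i + n] == key:
            hits += 1
            i += n  # jump past the match so occurrences do not overlap
        else:
            i += 1
    return 100.0 * hits * n / len(words)


def is_oversaturated(text: str, phrase: str, limit_percent: float = 7.0) -> bool:
    """Compare against the upper end of the article's 5-7% rule of thumb."""
    return keyword_density(text, phrase) > limit_percent
```

For “Cheap shoes for sale. Buy cheap shoes today.” the phrase “cheap shoes” covers 4 of 8 words, a density of 50%, far above any reasonable limit.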

15. The “Panda” filter. As a rule, it is applied to web resources with low-quality content: low text uniqueness, irrelevant advertising, too many keywords, duplicate pages, etc.

How to get out of the “Panda” filter: remove unnecessary links, add unique content to the site, and remove irrelevant advertising and duplicates.

16. The “Penguin” filter. If a site's keyword density exceeds 7%, link anchors contain exact-match keywords, there are hidden links and duplicate pages, and the link mass grows quickly, this filter is practically inevitable.

How to get out of the Penguin filter: remove some of the keywords, dilute anchors with synonyms, remove hidden links and duplicates, and in general work on making the site look natural.

But do not forget that search engines regularly update their filters and add new ones. Follow the updates, even though the search engines themselves rarely announce them.

That's all for today.

If you know of other Google filters, be sure to let me know and I will add them to the existing list.

Until the next articles...

Best regards, Denis Chernikov!

Today I would like to draw your attention to filters from the Google search engine.

When I first created my blog, I had no idea that search engine algorithms are so intelligent that they can impose restrictions on a low-quality site they come across.

Of course, if I could turn back time, I would have developed my resource in a completely different way and would not have made the mistakes that led to such a disastrous result.

As they say, forewarned is forearmed.

Types of Google filters

1) "Sandbox". This filter is applied to young sites from the moment they appear on the Internet.

It is a kind of test that keeps short-lived doorways, one-day blogs, etc. out of the top places.

Think for yourself: why would Google put unverified sites at the top of the search results if such a site may cease to exist altogether after a while?

Exit from under the filter

- wait;

- increase the natural link mass;

- write regularly;

- buy an old domain;

2) Google Florida. This filter is applied to sites that have over-optimized pages.

It watches for abuse of tags such as strong and h1-h6, keyword stuffing and excessive keyword density.

Exit from under the filter

- replace over-optimized SEO text with naturally written text;

3) Google Bombing. Lowering a specific page in the search results due to a large number of identical anchors linking to it.

If you promote a page with external links that all use the same anchor, the result will be the exact opposite of what you want.

Exit from under the filter

4) “Minus 30”. This Google filter hits sites that use “black hat” optimization for promotion. Google demotes such resources by 30 positions.

Reasons for falling under the filter

- a sharp increase in the link mass from low-quality sites (directories, guest books and comments);

- link dumps;

- redirects using JavaScript, as well as ;

Exit from under the filter

- do not use the methods above to promote the site;

- remove the redirect;

5) "Additional results" or Supplemental Results. Pages under this filter are shown in search only when there are not enough “quality” results, and only after clicking the “additional results” link.

Exit from under the filter

- unique meta tags and title on the page;

- buy several trust links to this page;

- increase the amount of unique content on the page;

Exit from under the filter

- get rid of broken links;

7) “Non-unique content”. If a site uses stolen (copy-pasted) content, this filter lowers it in the search results.

Sometimes, though, Google imposes the filter not on the thief but on the site the content was stolen from, so how quickly your page gets indexed plays a very important role.

Exit from under the filter

- do not steal other people's material;

- speed up page indexing;

- remove non-unique material;

8) “Loading time of site pages”. If a robot visits a site and cannot access its content (because of poor hosting or a poor server), it skips those pages and they cannot get into the index.

Exit from under the filter

- switch to high-quality hosting with 99.95% uptime;

9) "Citation" or Co-citation Linking Filter. Its essence is that you should link only to high-quality sites, which in turn should also link to high-quality sites.

Basically, only websites can fall under such a filter.

Exit from under the filter


- link sites in a chain: 1 to 2, 2 to 3, 3 to 1.

11) “Many links at once”. This Google filter lowers sites in the search results, and sometimes even bans them, for being listed in link dumps, buying links in bulk, etc.

Exit from under the filter

12) “Many pages at once”. If a huge amount of content appears on a blog within a short period, Google may consider it unnatural and impose a filter.

Exit from under the filter

- keep it natural;

- if you use, then set a limit on the amount of added material;
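Capping how much material goes live can be as simple as queueing posts and assigning publication dates with a daily maximum. A minimal sketch; the post identifiers and the cap are hypothetical, and the article does not say what daily limit is safe.

```python
from datetime import date, timedelta


def schedule_posts(post_ids: list[str], start: date, max_per_day: int) -> dict[str, date]:
    """Give each queued post a publication date, never exceeding max_per_day posts per day."""
    if max_per_day < 1:
        raise ValueError("max_per_day must be at least 1")
    # Posts 0..max_per_day-1 go out on day 0, the next batch on day 1, and so on.
    return {
        post_id: start + timedelta(days=index // max_per_day)
        for index, post_id in enumerate(post_ids)
    }
```

With `max_per_day=2`, five queued posts starting on 1 January are spread over 1, 2 and 3 January instead of appearing all at once.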

13) “Degree of trust” or Trust Rank. The more trust you earn from Google, the higher your position in the TOP results will be. This filter takes into account everything listed above, in particular:

- domain age;

- quality of incoming links;

- number of outgoing links;

- quality ;

14) Google Panda. This filter appeared not so long ago, but has already frayed the nerves of many SEOs.

Its essence is that shitty sites should not be included in the index; I talked about it in detail in the article “”.

Exit from under the filter

- create an SDL (a site for people) using all the recommendations above;

15) Google Penguin. The most recent of the filters. Its essence is to lower the rankings of sites that use purchased links for promotion.

Since it started working, traffic on many blogs has dropped, while on others, on the contrary, it has grown. I covered it in great detail in the previous article “”.

Exit from under the filter

I would also like to add that if you create a site for people (SDL) that is updated frequently, contains unique content and is useful, it will never fall under Google filters, and its TIC, PR and Trust indicators will grow by themselves.

If the filter does catch you anyway, don't despair and keep developing your project.

The world's largest search engine has long used a number of algorithms aimed at improving its search results. Google has more filters than Yandex. But although Google is the undisputed leader in Ukraine and second by search volume in Russia, some of its filters do not apply to the Russian-speaking segment. However, Google has been planning a large-scale push into the CIS market for several years now, and many filters that are currently inactive here may be introduced over time.

"Duplicate Content"

The number of documents on the Internet grows rapidly every day. It is difficult for search engines to process and store such volumes of information, especially since many resources duplicate each other. This is the main reason the duplicate content filter was introduced. Unfortunately, it still does not work perfectly: the original source of a text is often ranked lower than the site that stole it.

What's happening to the site:

The resource drops in the search results. Take a fragment of text (about 10 words) from a page whose traffic has fallen, enter it into the search engine and look at the results. If your site is not in first place, Google does not consider it the primary source.
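To measure offline how close your page is to a suspected copy, a common technique is w-shingling: split each text into overlapping runs of words and compute the Jaccard similarity of the two sets. This is a generic near-duplicate measure, not Google's own (unpublished) method, and the shingle size of 4 words is an arbitrary choice.

```python
import re


def shingles(text: str, size: int = 4) -> set[tuple[str, ...]]:
    """All runs of `size` consecutive words in the text, lower-cased."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a: str, text_b: str, size: int = 4) -> float:
    """Jaccard similarity of the two texts' shingle sets: 1.0 = identical, 0.0 = nothing shared."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    union = a | b
    return len(a & b) / len(union) if union else 0.0
```

A score close to 1.0 between your page and another site's page suggests the text needs rewriting (or that your original was copied); a low score means the texts share few word sequences.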

How to avoid being filtered:

Use content that cannot be found elsewhere on the Internet. If that is too expensive and time-consuming because of the large number of pages, then:

– You can make the content unique by adding original comments and passages of text

– Add a dynamic script that shows changing information on your pages that is not repeated anywhere else on the web, for example a user chat window. The text in the window must be indexable.

Replace the content on the site (or, in extreme cases, make it unique).

Filter “-30”

Applicable to sites that use hidden redirects and doorways. It is designed to combat outright search spam in the SERPs.

What's happening to the site:

Pages drop by 30 positions for queries, which limits traffic to such sites (the vast majority of users never reach the 3rd page of search results).

How to avoid being filtered:

Do not use “black” methods - redirects and doorways.

Options for removing a site from the filter:

Stop using the methods mentioned above for which the site was caught by the filter.

"Link growth is too fast"

The search engine may suspect you of trying to influence search results by building up the site's external link mass. Young sites are most often affected.

What's happening to the site:

The site may drop significantly in the search results, or even be banned.

How to avoid being filtered:

Options for removing a site from the filter:

Gradually remove the link mass, try to buy or place several links on authoritative resources.

"Bombing"

The filter is applied when a site has a large number of links with the same link text. Google treats this as search spam, an attempt by the site owner to boost rankings for a specific query.

What's happening to the site:

In most cases, the site drops out of the search results and a BAN is imposed.

How to avoid being filtered:

When promoting a site, do not place a large number of links with the same text.

Options for removing a site from the filter:

First, try to dilute the text of the links pointing to you from other sites (either buy new links or change some of the existing ones, if possible). Then submit your site for re-indexing.

"Sandbox" - sandbox

Google is wary of sites that have recently appeared on the Internet. This is due to the search engine’s desire to fight spam. This filter prevents young sites from getting to the top positions with popular queries (especially commercial ones).

What's happening to the site:

Despite the work done on promotion, the resource does not reach the TOP for popular queries, although there is traffic for low-frequency search phrases.

How to avoid being filtered:

Buy a domain “with age” and history. In that case, check what was hosted on it before you, since the domain may already be under some filter.

Options for removing a site from the filter:

It is impossible to get out of the “sandbox” right away. According to observations, this filter is lifted not from individual sites but from groups of sites at once (by topic). You can only speed up the process by placing several links on high-quality resources with a history and strong metrics.

Link exchange used to be an effective promotion method. Sites created special pages with a large number of links to other sites. Later, Google began cracking down on such attempts to influence search results.

What's happening to the site:

Demotion in search results

How to avoid being filtered:

Do not use “link dumps” (pages that link to a very large number of sites). Do not name a page on your site links.html.

Options for removing a site from the filter:

Remove the page with a large number of links or block it from indexing.
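If you block such a page through robots.txt, you can verify the rule with Python's standard `urllib.robotparser` module. The robots.txt content and URLs below are hypothetical examples. Keep in mind that a robots.txt `Disallow` stops crawling rather than indexing; Google documents the `noindex` robots meta tag for keeping a page out of the index, and that tag only works if the page is not blocked in robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that hides a link page from all crawlers.
ROBOTS_TXT = """\
User-agent: *
Disallow: /links.html
"""


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """True if the given robots.txt forbids user_agent from crawling url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)
```

Here `is_blocked(ROBOTS_TXT, "https://example.com/links.html")` is `True`, while other pages on the hypothetical site stay crawlable.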

"Co-citation"

It is logical for a search engine to rank higher the sites that are linked to by authoritative resources from their own niche (topic). If your resource is linked to by many sites with “undesirable” topics (often casinos, pornography), or if the block containing your link also contains links to such sites, Google concludes that your content is similar to theirs. This harms the topical relevance of your site.

What's happening to the site:

The resource loses positions in search results for “its” queries.

How to avoid being filtered:

Options for removing a site from the filter:

Review your backlink profile. Try to get unwanted links to your resource (ones with the characteristics described above) removed from the sites they come from.
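Such a review can be partly automated if, for each donor site, you know which hosts it links out to (collected by crawling the donor pages or from a backlink tool export; the data format here is a hypothetical assumption) and you keep your own list of undesirable hosts. The sketch flags donors that link both to you and to an unwanted site.

```python
def normalize_host(host: str) -> str:
    """Lower-case a host name and drop a leading 'www.' so variants compare equal."""
    host = host.strip().lower().rstrip(".")
    return host[4:] if host.startswith("www.") else host


def risky_donors(donor_outlinks: dict[str, set[str]], unwanted_hosts: set[str]) -> dict[str, set[str]]:
    """Return each donor that also links to an unwanted host, with the offending hosts."""
    unwanted = {normalize_host(h) for h in unwanted_hosts}
    report = {}
    for donor, hosts in donor_outlinks.items():
        offending = {normalize_host(h) for h in hosts} & unwanted
        if offending:
            report[donor] = offending
    return report
```

The donors in the resulting report are the ones worth contacting with a removal request first.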

“Degree of trust in the site”

This filter can be called a composite one, and it is hard to call it a filter at all; rather, it is a ranking parameter. It takes into account such main factors as:

– Which sites link to you (their authority and how difficult it is to get a link from them)

– Which resources you link to (it is not advisable to link to low-quality and dubious sites).

– Age and history of the domain name (site). The older, the better.

– The quality of the site’s pages, its “linking”.

– The number of links (both incoming and outgoing, along with their quality indicators mentioned).

And much more...

How to get more trust in a site:

Try to ensure that the site complies with the factors already listed.

"Over-optimization"

This filter was introduced to put site owners on an equal footing and to minimize the possibility of significantly influencing search results through on-page optimization. It takes into account the number of key phrases in the text, headings and meta tags of a page. The filter kicks in when a page is oversaturated with keywords.

What's happening to the site:

The pages lose positions for the keywords they are optimized for.

How to avoid being filtered:

Do not try to overly saturate texts, headings and meta tags with keywords.

Options for removing a site from the filter:

Correct the information on the website pages that you suspect are subject to the filter. Reduce the number of keywords.

"Load time"

Google tries to rank higher the sites that are not only useful and interesting to the user but also convenient to use. For this reason, the search engine began taking the loading time of web pages into account.

What's happening to the site:

Your web resource ranks worse in the search engine.

How to avoid being filtered:

Use quality hosting. If the load on the site increases (increase in visitors, installation of additional “heavy” modules, etc.), change the hosting plan to one more suitable for these purposes.

Options for removing a site from the filter:

If you suspect that your site falls under this filter, change the hosting or hosting plan for the resource.

"Additional Results"

This filter is applied to those pages of your site that Google considers less significant and useful for users. It often affects documents that contain duplicate content, have no incoming links, or have repeated meta tags.

What's happening to the site:

The pages are not completely excluded from the search, but they can only be found by clicking on the “show additional results” link. Also, they rank very poorly.

How to avoid being filtered:

Place several incoming links to such pages, avoid publishing a large share of duplicate material, and write original titles for your pages.
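To find pages whose titles repeat, you can group URLs by normalized title. The sketch assumes you already have a URL-to-title mapping for your own site (for example, from a crawl); how you collect it is up to you, and the URLs in the usage note are hypothetical.

```python
from collections import defaultdict


def duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each title used by more than one URL to the URLs that share it."""
    by_title: defaultdict[str, list[str]] = defaultdict(list)
    for url, title in pages.items():
        # Collapse whitespace and case so "Red Shoes" and "red  shoes" are treated as the same title.
        by_title[" ".join(title.split()).lower()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}
```

For `{"/a": "Red Shoes", "/b": "red  shoes", "/c": "Blue Shoes"}` the result is `{"red shoes": ["/a", "/b"]}`, so `/a` and `/b` need distinct titles.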

Options for removing a site from the filter:

Eliminate all the negative factors listed above that cause this filter to be applied.

There are plenty of reasons why a site may fall under filters: for example, aggressive advertising on the site, over-optimized content, or low quality of the resource as a whole. But that is not the whole list. Below, let's look at how to tell whether your site is under filters.

New search engine algorithms in most cases simply scare webmasters. For example, search engines may treat your site harshly if:

- there is content disguised as guest spam;

- there are many SEO anchors;

- the content is non-unique;

- there is spam in blocks and comments;

- there are links hidden from users, and more.

If a resource falls under search engine sanctions, it may lose its positions completely, which means all the work done before is simply wasted. Agree that no webmaster wants such a fate for their online project.

How to recognize that your site is under a search engine filter?

Google officially reports some of the filters it applies in the Google Webmaster Tools section. These messages mean that action has been taken against your site because of possible violations:

- artificial links pointing to your site;

- artificial links on your site;

- your resource has been hacked;

- spam, including spam from users;

- hidden redirects;

- an excessive number of keywords or hidden text on the site;

- free hosting that distributes spam.

But what kind of sites does Google “love”? That is covered in more detail separately.

Let's look at each of the filters and how to get out of its effect.

“Sandbox” filter.

This filter is applied to young sites up to 1 year old, during which the search engine monitors how the site behaves. The presence of the filter is easy to check: the site is not in the index. A site can end up in the sandbox because of a rapid build-up of its link mass; Google finds this very suspicious, regards it as spam and imposes sanctions on the young resource.

To avoid this filter, buy links very carefully, never allow a “link explosion”, and fill the site with unique, useful content. Choose donor sites carefully to stay out of the sandbox.

Filter “Domain name age”.

This filter is also applied to young sites less than a year old. The restrictions are lifted once the site's domain reaches a certain level of trust. To avoid the filter, you can buy an old domain with a positive history or purchase trust links, but very carefully.

Filter “Too many links at once” (many links at once).

When this filter is applied, the webmaster notices a sharp drop in traffic from Google, and external links to the resource no longer carry any weight. This happens after a sharp link explosion, which the search engine considers unnatural and sanctions.

If your site falls under this filter, significantly slow down link building, but do not touch the links that already exist. The filter will be lifted after 1-3 months.

Filter “Bombing”.

The punishment targets not the entire site but individual pages that the search engine perceives as spam. The reason is a large number of external links with the same anchor or text, which the search engine considers unnatural. To get rid of the filter, either delete all such links or change their anchors, and then wait for the result.

This filter is applied to sites that have many pages returning 404 errors. Such links irritate not only site visitors but also the search engine, which cannot index the page. So remove broken links to avoid the filter.

Filter “Links”.

Link exchange used to be popular among webmasters, but today Google penalizes it. So place no more than 4 links to partner sites, and if you still fall under the filter, remove such links from the site immediately.

Filter “Page load time” (page loading time).

Google doesn't like slow-loading sites and may lower a resource in the search results for that reason alone. The recommended loading time is no more than 7 seconds; the faster, the better. You can check it with a dedicated service, PageSpeed.
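For a rough self-check between PageSpeed runs, you can time how long a page's HTML takes to download. This measures only the raw HTML, from wherever the script runs; it does not render the page or load images, CSS and scripts, so it is not equivalent to what PageSpeed reports. The 7-second limit is the article's recommendation, not a documented Google threshold.

```python
import time
from urllib.request import urlopen


def html_load_seconds(url: str, timeout: float = 30.0) -> float:
    """Seconds taken to request url and read its full response body."""
    start = time.perf_counter()
    with urlopen(url, timeout=timeout) as response:
        response.read()  # include the body download, not just the headers
    return time.perf_counter() - start


def slower_than(url: str, limit_seconds: float = 7.0) -> bool:
    """True if the HTML alone takes longer than limit_seconds to load."""
    return html_load_seconds(url) > limit_seconds
```

Calling `html_load_seconds` several times for the same (hypothetical) `https://example.com/` and averaging the results gives a steadier figure than a single request.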

Filter “Omitted results”.

This filter is in effect when your site does not appear in the main search results and can only be seen after opening the hidden (omitted) results. It is applied for duplicates and poor internal linking.

If the resource is already under the filter, you need to rewrite the content to make it unique and improve internal linking.

Filter “-30”.

This type of sanction was created to combat “black hat SEO”. When the filter is applied, the site's position drops by 30. What the filter punishes: hidden redirects, cloaking, doorways. Once the causes are eliminated, the filter stops working.

Filter “Over-optimization”.

The site may drop in rankings or be excluded from the index completely; that is the effect of this filter. It can be triggered by excessive page optimization, for example too many keywords, high keyword density, or over-optimized meta tags and images. To stop the filter from affecting your site, optimize your pages properly.

Filter “Panda”.

One of the most dangerous filters. It combines several filters at once, and getting out from under it takes a lot of time and effort. It can be applied for many reasons, including duplicate content, over-optimization, selling links, aggressive advertising on the site, and manipulating behavioral factors. To get out from under it, you need to carry out a whole range of improvements to the site, which can take a long time, in some cases up to 1.5 years.

Penguin filter.

Like the previous filter, it is very troublesome for the site owner. The algorithm is directly tied to link promotion. Sanctions may hit your site if you buy too many links at once, if these links share the same anchor, if the links are off-topic, or if you exchange links. Getting out from under “Penguin” is very difficult and also takes a long time.

By the way, the “Panda” and “Penguin” filters are described in more detail in my article “”.


Filters for site age:

DNA

A filter for recently registered domains, DNA (Domain Name Age). It is applied to “young” domains. For a site on a newly registered domain to rise to the top of Google, it needs to earn “trust” from Google. Getting out from under the filter:

- Just wait;

- Build up so-called trust by getting links to your site from old thematic sites.

Sandbox (or scientifically SandBox)

Young sites fall under the sandbox filter. The main reason is the search engine's low trust in the site. SandBox differs from DomainNameAge in that sites on old domains can also fall under it if they are rarely or never updated, have no backlinks and get little traffic.

Getting out from under the filter:

- Build natural links from thematic sites;

- Develop the project further.

Google filters for manipulation of internal factors

Google Florida

A filter for excessive use of on-page optimization. One of the oldest filters; it analyzes many parameters.

Reason for applying the filter: incorrect optimization (using “keywords” too often in the text, excessively highlighting keywords with tags, etc.).

Consequences: the site loses a lot of positions in the search results.

Getting out from under the filter: replace all SEO-optimized text with easy-to-read text (reduce the density of keywords and key phrases). If that doesn't help, leave only one exact occurrence of the key phrase on each page under the filter. This is what Florida's algorithm looked like:

Very scary and incomprehensible :) This filter as such no longer exists. It existed 5-7 years ago, after which Google shut it down, realizing its inconsistency: the filter was applied to individual pages rather than whole sites, so optimizers created several versions of a site, one part over-optimized and the other under-optimized, to fool the algorithm. Now a more lenient modification of this filter is in place, which evaluates page content by the highest standards.

Google Supplemental Results or simply Snot

The site's pages are moved from the main search results to the supplemental ones. You can check it this way: first look at the main results for queries like these:

site:site/& or site:site/*

then look at the entire output:

The difference between the results of query 2 and query 1 equals the number of pages under the filter.

Getting out from under the filter:

- Remove duplicate pages;

- Make each individual page unique;

- Buy trust links to the filtered pages;

- Write a unique title and meta tags (most important);

- Write completely unique content (most important);

- Remove broken links.

Shakin, for example, has half of his pages under “snot”, and despite this he gets good traffic from Google.

Google Omitted Results

The site is moved from the main search results to the omitted results. The filter is applied to pages that are very similar in structure and content to pages of other sites or of the same site. It can be applied together with the Supplemental Results filter.

Signs: the site is not in the main search results, but it is found after clicking the “repeat the search with the omitted results included” link. This miracle looks like this:

Getting out from under the filter:

- Make promoted pages unique (content, meta tags);

- Remove all duplicates.

Google Duplicate Content

Google filters for link manipulation

Google Bombing

A filter for identical link anchors. If many sites link to yours and the anchors are the same everywhere, the site falls under the filter and the backlinks begin to pass much less weight.

Signs: positions sag or the site gets stuck at the same positions for a long time, and adding more links to your site brings no result.

Exit and protection methods:

- Add new words to the anchor list to dilute it;

- Increase the number of natural links (“here”, “click”, etc.).

Google -30

Applied for using cloaking, redirects and other black-hat optimization methods. Signs: the site drops by about 30 positions.

Exit and protection methods:

- Try not to use black-hat promotion methods;

- If you have already fallen under the filter, you will most likely be banned next.

ReciprocalLinksFilter and LinkFarming Filter

Filters that lower sites for artificial link growth. They are imposed for taking part in link exchange networks, having link dumps on the site, and buying links. The site then loses positions or is banned.

Exit and protection methods:

- work carefully with buying, selling and placing links;

- eliminate the reasons for the filter.

Co-Citation Filter

A filter for backlinks from sites that link to “bad” sites. In general, if a site links to you and at the same time links to some GS (crap sites, prohibited topics, etc.), that is bad.

Exit and protection methods: try to get links only from good, thematic sites.

Too Many Links at Once Filter

A filter for rapid backlink growth. Everything should look natural, including how the link mass is built up, especially for a young site.

PS: I will be very grateful for your retweet.

UPD: 01/08/2017

In recent years, Google's main filters have been Panda and Penguin. The first pessimizes sites for low-quality content, and the second for promotion with SEO links.