VOLOGDIN E.I.

DYNAMIC RANGE OF THE DIGITAL AUDIO PATH

Lecture notes

Saint Petersburg

Dynamic range of sounds and music

Dynamic range of phonograms

Dynamic range of the digital audio path

Expanding the dynamic range using Dithering technology

Dynamic range expansion with Noise Shaping technology

Bibliography

1. Dynamic range of sounds and music

A person hears sound over an extremely wide range of sound pressures. This range extends from the absolute threshold of hearing to the pain threshold of 140 dB SPL, measured relative to the zero level, which is taken as a pressure of 0.00002 Pa (Fig. 1). The risk zone in this figure marks the sound pressures that, under prolonged exposure, can lead to complete hearing loss. The pain threshold for tonal sounds depends on frequency; for sounds with an arbitrary spectrum, a pressure level of 120 dB SPL is taken as the pain threshold. The curve of the absolute threshold of hearing is described quite accurately by an empirical equation.

Fig. 1. Areas of hearing (absolute threshold of hearing versus frequency of tonal sounds, kHz)
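A widely used empirical approximation of this curve is Terhardt's formula, $T_q(f) = 3.64 f^{-0.8} - 6.5 e^{-0.6 (f - 3.3)^2} + 10^{-3} f^4$ dB SPL with $f$ in kHz. It is an assumption here that this is the equation the notes refer to; the sketch below simply evaluates it at a few frequencies (the function name is hypothetical).

```python
import math


def threshold_in_quiet_db(f_khz: float) -> float:
    """Terhardt's approximation of the absolute threshold of hearing, in dB SPL.

    f_khz: frequency of a tonal sound in kHz (meaningful roughly from 0.02 to 20 kHz).
    """
    return (3.64 * f_khz ** -0.8
            - 6.5 * math.exp(-0.6 * (f_khz - 3.3) ** 2)
            + 1e-3 * f_khz ** 4)


for f in (0.05, 0.1, 1.0, 3.3, 10.0, 16.0):
    print(f"{f:6.2f} kHz: {threshold_in_quiet_db(f):6.1f} dB SPL")
```

The minimum of roughly -5 dB SPL near 3.3 kHz is the region of highest hearing sensitivity; the threshold rises steeply toward both edges of the audio range.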

In silence, the sensitivity of hearing increases, and among loud sounds it decreases: hearing adapts to the surrounding sound environment. Therefore the working dynamic range of hearing is not very large, about 70...80 dB. It is limited from above by a pressure of 100 dB SPL and from below by noise with a level of 30...35 dB SPL. This range can shift up or down by up to 20 dB. For comfortable listening to music, it is recommended that the sound pressure not exceed 104 dB SPL at home and 112 dB SPL in specially equipped rooms.

The dynamic range of music is defined as the ratio, in decibels, of the loudest sound (fortissimo) to the quietest sound (pianissimo). The dynamic range of symphonic music is 65...75 dB; at rock concerts it increases to 105 dB, while sound pressure peaks can reach 122...130 dB SPL. The dynamic range of vocal performers does not exceed 35...45 dB (Table 1).

The dynamic range of music depends significantly on the choice of the maximum sound pressure $P_{max}$, since it is limited from below by the absolute threshold of audibility. This dependence is most pronounced at the edges of the audio range. Fig. 2 gives examples of how the dynamic range of tonal sounds changes depending on the choice of $P_{max}$ and the tone frequency: a dynamic range of 80 dB is reduced to 40 dB at the edges of the audio range. That is why it is customary to measure the dynamic range of sounds at a frequency of 1 kHz, where it can reach 117 dB.

Fig. 2. Music dynamic range and hearing thresholds depending on the choice of $P_{max}$ and tone frequency (pain threshold 120 dB; absolute threshold of audibility; x axis: frequency of tonal sounds, kHz)

Noise in the room masks the sound and thereby reduces the dynamic range of music from below. Fig. 3 shows how, when the sound pressure is reduced from 120 to 80 dB SPL, the dynamic range of music limited by room noise shrinks from 90 to 50 dB. The influence of room noise can be fully neglected only when it lies below the minimum level of musical sounds. Noise in apartments exceeds the noise level of recording studios, and conversation raises the noise level to 60 dB SPL. That is why quiet music is often drowned out in the listening room, and the listener involuntarily turns up the volume.

Fig. 3. Music's dynamic range against noise in the studio and in the apartment (levels shown: 120, 90, 70 and 50 dB SPL; DR 90 dB)

Quantization noise, being white noise, is noticeable by ear at an intensity as low as 4 dB SPL, even against the general noise of the audio equipment in the room. This needs to be weighed against the fact that 0 dB on the FS scale of a digital level meter corresponds to a level between 105 and 112 dB SPL. Therefore, for household listening rooms, the dynamic range of music should not exceed 101...108 dB, that is, the 0 dB FS level minus the 4 dB SPL audibility threshold of quantization noise.

The dynamic range of a microphone is defined in the same way as for electrical paths: the upper limit is set by the permissible nonlinear distortion, and the lower limit by the self-noise level. Modern studio microphones allow a maximum sound pressure of 125...145 dB SPL with nonlinear distortion not exceeding 0.5...3%. Their self-noise level is 15...20 dBA, the dynamic range is 90 to 112 dBA, and the signal-to-noise ratio is 70 to 80 dBA. Such microphones cover the entire range of human hearing, from 120 dB SPL down to the 20 dB SPL noise level of a studio. Modern studios record with 22- or 24-bit ADCs, sometimes with floating-point quantization, so dynamic range is not a problem there. Such equipment, however, is extremely expensive.

2. Dynamic range of phonograms

Musical and speech signals are a sequence of rapidly rising and more slowly decaying sound pulses (Fig. 4). Such a signal is characterized by its RMS and peak levels; the difference between these levels is called the crest factor. A square wave has a crest factor of 1 (0 dB), and a sinusoid has a crest factor of √2 (3 dB). Phonograms of musical and speech signals have crest factors of 20 dB or more. The crest factor depends on the integration time used to calculate the RMS value of the signal; this time is typically 50 ms.
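The 0 dB and 3 dB figures can be checked numerically. A minimal sketch: the crest factor is computed over one whole block, i.e. with an integration time equal to the block length rather than the 50 ms used for phonograms; the function name is hypothetical.

```python
import math


def crest_factor_db(samples):
    """Crest factor in dB: peak level minus RMS level of the whole block."""
    peak = max(abs(x) for x in samples)
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20 * math.log10(peak / rms)


n = 1000  # one period sampled at 1000 points
sine = [math.sin(2 * math.pi * k / n) for k in range(n)]
square = [1.0 if k < n // 2 else -1.0 for k in range(n)]

print(f"sine:   {crest_factor_db(sine):.2f} dB")    # 3.01 dB
print(f"square: {crest_factor_db(square):.2f} dB")  # 0.00 dB
```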

The dynamic range and crest factor of a music phonogram are determined by statistical processing of the instantaneous signal values. The most detailed statistics are calculated by the Adobe Audition 3 audio editor (Fig. 4).

Fig. 4. Fragments of music phonograms of various durations

The main ones are Peak Amplitude ($L_{pic}$), Maximum RMS Power ($L_{max}$), Minimum RMS Power ($L_{min}$) and Average RMS Power ($L_{avr}$), i.e. the peak level and the levels of the maximum, minimum and mean RMS (effective) signal power.

According to this table, the dynamic range of a phonogram is defined as

$$DR_m = L_{pic} - L_{min},$$

and the crest factor is calculated by the formula

$$PF_m = L_{pic} - L_{avr}.$$
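The four statistics and the two formulas can be sketched in a few lines. How Adobe Audition computes them internally (window overlap, averaging) is not described in the text, so non-overlapping 50 ms windows and averaging of window powers are assumptions here, as are the function name and the test signal.

```python
import math


def phonogram_stats(samples, fs, window_ms=50.0):
    """Peak level and max/min/average windowed RMS levels in dB re full scale (1.0),
    plus DR_m = L_pic - L_min and PF_m = L_pic - L_avr."""
    n = max(1, int(fs * window_ms / 1000))
    l_pic = 20 * math.log10(max(abs(x) for x in samples))
    powers = []
    for start in range(0, len(samples) - n + 1, n):   # non-overlapping windows
        block = samples[start:start + n]
        p = sum(x * x for x in block) / n             # mean power of the window
        if p > 0:                                     # digital silence has no finite level
            powers.append(p)
    l_max = 10 * math.log10(max(powers))
    l_min = 10 * math.log10(min(powers))
    l_avr = 10 * math.log10(sum(powers) / len(powers))  # mean power, then dB
    return {"L_pic": l_pic, "L_max": l_max, "L_min": l_min, "L_avr": l_avr,
            "DR_m": l_pic - l_min, "PF_m": l_pic - l_avr}


# Hypothetical test phonogram: 0.5 s of a full-scale 1 kHz tone, then 0.5 s at -40 dB
fs = 8000
loud = [math.sin(2 * math.pi * 1000 * k / fs) for k in range(fs // 2)]
quiet = [0.01 * v for v in loud]
stats = phonogram_stats(loud + quiet, fs)
for name, value in stats.items():
    print(f"{name}: {value:7.2f} dB")
```

Here $DR_m$ comes out near 43 dB (the 40 dB step plus the 3 dB crest factor of the sine) and $PF_m$ near 6 dB, because half of the windows are almost silent.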

The dynamic range can also be determined from the histogram of the level distribution of the phonogram (Fig. 5). Such histograms are convenient for quick checks before and after dynamic processing of a phonogram.

Fig. 4. Statistical characteristics of the phonogram of Beethoven's "Für Elise"

Fig. 5. Distribution histogram of Beethoven's "Für Elise"

The integration time is chosen depending on the task of the study. If, for example, the dynamic range of the instantaneous values of the phonogram levels is important, the integration time should be 1...5 ms. If the dynamic range of music is measured with auditory perception taken into account, the integration time is chosen to be 60 ms, the time constant of hearing.

The histogram allows one to determine the dynamic range and crest factor with a given probability at a selected integration time. The Adobe Audition 3 sound editor normalizes the histogram so that the most probable level always corresponds to the value 100. Such a histogram describes the probability distribution of phonogram signal levels relative to the maximum. When it is built, the X-axis scale is selected automatically, which makes it difficult to compare the histograms of different phonograms.
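A minimal sketch of such a normalized level histogram, assuming windowed RMS levels and fixed 1 dB bins aligned to multiples of 1 dB. The fixed bins are a deliberate choice (not Audition's behavior) so that histograms of different phonograms share one X axis; the function name and test signal are hypothetical.

```python
import math
from collections import Counter


def level_histogram(samples, fs, window_ms=5.0, bin_db=1.0):
    """Histogram of windowed RMS levels (dB re full scale), normalized so that
    the most frequent level bin equals 100. Bins have a fixed width and are
    aligned to multiples of bin_db, so histograms of different phonograms line up."""
    n = max(1, int(fs * window_ms / 1000))
    counts = Counter()
    for start in range(0, len(samples) - n + 1, n):
        block = samples[start:start + n]
        rms = math.sqrt(sum(x * x for x in block) / n)
        if rms > 0:
            level = 20 * math.log10(rms)
            counts[math.floor(level / bin_db) * bin_db] += 1
    top = max(counts.values())
    return {lvl: 100.0 * c / top for lvl, c in sorted(counts.items())}


# Hypothetical signal: 0.2 s of a full-scale 1 kHz tone, then 0.1 s at -20 dB
fs = 8000
tone = [math.sin(2 * math.pi * 1000 * k / fs) for k in range(fs)]
signal = tone[:1600] + [0.1 * v for v in tone[:800]]
print(level_histogram(signal, fs))
```

The loud part falls into the -4 dB bin (its RMS level is about -3 dB) with the value 100; the quiet part, which occupies half as many windows, falls into the -24 dB bin with the value 50.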

Practical use. Who needs the statistics and histogram of a phonogram, and why? First of all, these data are invaluable in the dynamic processing of a phonogram, since they allow the characteristics of the compressor and expander to be chosen on a sound basis. Statistics from processing phonograms of various music genres make it possible to determine the required dynamic range of the electroacoustic path and to formulate requirements for the peak and average power of loudspeaker drivers. They also play an essential role in the development of audio signal compression algorithms.

Emotional music with a wide dynamic range and a high crest factor can be enjoyed only on high-quality, expensive equipment with good loudspeakers. In headphones and in cars, noise shrinks its dynamic range, and such music sounds simply unpleasant. Therefore such recordings are not in wide demand, and, inevitably, manufacturers deliberately reduce the dynamic range and crest factor of phonograms every year (Fig. 6). On most modern CDs the dynamic range does not exceed 20 dB, and the crest factor is a little over 3 dB, which is quite enough for dance music. Fig. 7 shows a modern soundtrack from a CD.

Fig. 6. Crest factor of CDs

Fig. 7. Soundtrack of the song "I'll Be There For You"

3. Dynamic range of the digital audio path

A conventional digital path includes an ADC and a DAC. The first quantizes analog signals and converts them into a digital stream. The second performs the inverse conversion of a digital stream into an analog signal.

Quantization is the rounding of a sequence of samples to integer binary values. In pulse code modulation (PCM), this operation is carried out by a linear quantizer called Mid-Tread in the technical literature. Its transfer characteristic has the form of a "staircase" with equal steps and, necessarily, an odd number of quantization levels. In this quantizer, digital data are rounded to the nearest binary value (Fig. 8); this algorithm is called rounding.

The output signal of the quantizer is symmetrical about the time axis, and quantization is carried out with a threshold equal to 0.5 of a quantization step. As long as the input signal is below this threshold, the quantizer output is zero, which means that quantization is performed with a central cutoff. When the input signal slightly exceeds the quantization threshold, the output signal is a sequence of pulses whose duty cycle depends on the input level. A further increase in the input signal level produces a stepped output signal.

Fig. 8. Transfer functions of the Mid-Tread and Mid-Riser quantizers

In the Mid-Riser quantizer, digital data are rounded to the nearest smaller value (Fig. 8), so this algorithm is commonly called truncation. The Mid-Riser quantizer has no quantization threshold, so it transmits audio signals of very low level, even below the noise level. However, in the absence of an audio signal, any insignificant noise produces at the output a sequence of random pulses with an amplitude of one quantum, which means that such a quantizer amplifies noise.
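The contrast between the two quantizers can be shown with a few lines of code. A sketch under stated assumptions: `mid_tread` rounds to the nearest level (zero is an output level), and `mid_riser` is modeled in the common textbook form "truncate, then add half a step", so its outputs are odd multiples of Q/2 and it has no zero level. Both names and the noise level are hypothetical.

```python
import math
import random

Q = 1.0  # quantization step (one quantum)


def mid_tread(x, q=Q):
    """Rounding quantizer: zero is an output level, dead zone |x| < q/2."""
    return q * math.floor(x / q + 0.5)


def mid_riser(x, q=Q):
    """Truncating quantizer without a zero level: outputs are odd multiples of q/2."""
    return q * (math.floor(x / q) + 0.5)


random.seed(1)
noise = [random.gauss(0.0, 0.05 * Q) for _ in range(1000)]  # tiny noise, no signal

tread_out = {mid_tread(v) for v in noise}
riser_out = {mid_riser(v) for v in noise}
print("Mid-Tread outputs:", tread_out)   # the central cutoff removes the noise
print("Mid-Riser outputs:", riser_out)   # noise toggles between -Q/2 and +Q/2
```

The Mid-Riser output jumps by a full quantum (from -Q/2 to +Q/2) in response to noise far smaller than one step, which is exactly the noise amplification described above.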

The dynamic range of an ADC with a Mid-Tread quantizer is determined through the logarithm of the ratio of the maximum and minimum amplitudes of a sinusoidal signal at the quantizer input:

$$DR_A = 20\log\frac{A_{max}}{A_{min}},$$

where $A_{max} = Q\,2^{q-1}$, $A_{min} = Q/2$, $Q$ is the quantization step and $q$ is the number of bits. Therefore

$$DR_A = 20\log\frac{Q\,2^{q-1}}{Q/2} = 20\log 2^{q} \approx 6.02q. \qquad (1)$$

At q = 8 this dynamic range is 48 dB, and at q = 16 it increases to 96 dB. The value of $DR_A$ defines the lower limit of the dynamic range for the input signal levels of a Mid-Tread quantizer.

The dynamic range of a DAC is measured, in accordance with EIAJ recommendations, as the ratio of the RMS value of the maximum sinusoidal signal of amplitude $A_{max}$ at its output to the RMS value of the quantization noise, measured in the band from 0 to the Nyquist frequency $F_N$:

$$DR_R = 20\log\frac{A_{max}/\sqrt{2}}{Q/\sqrt{12}} = 20\log\frac{Q\,2^{q-1}\sqrt{6}}{Q} \approx 6.02q + 1.76.$$

For q = 16, $DR_R$ = 98 dB, which is 1.76 dB more than the dynamic range of the quantizer defined by formula (1). The dynamic range of a DAC measured in this way is identified with its SNR value.
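The 6.02q + 1.76 rule can be checked by simulation. A minimal sketch, assuming a rounding (Mid-Tread) quantizer, no clipping, and a sine frequency chosen (hypothetically) so that the samples cover all phases and the rounding error behaves like uniform noise of variance $Q^2/12$.

```python
import math


def measured_sqnr_db(bits, n=50_000, f=0.1234567):
    """Round a sine of amplitude A_max = Q * 2**(bits - 1) with a Mid-Tread
    quantizer and return (signal power / error power) in dB."""
    q = 1.0                                   # quantization step Q
    a_max = q * 2 ** (bits - 1)
    sig_pow = err_pow = 0.0
    for k in range(n):
        # f (cycles per sample) is not a simple fraction, so the samples cover
        # all phases and the rounding error is close to uniform in [-Q/2, Q/2)
        x = a_max * math.sin(2 * math.pi * f * k)
        e = q * math.floor(x / q + 0.5) - x   # rounding error
        sig_pow += x * x
        err_pow += e * e
    return 10 * math.log10(sig_pow / err_pow)


for bits in (8, 12, 16):
    print(f"q = {bits:2d}: simulated {measured_sqnr_db(bits):6.2f} dB, "
          f"6.02q + 1.76 = {6.02 * bits + 1.76:6.2f} dB")
```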

If the upper limit of the frequency range is $F_{max} < F_N$, the formula for SNR and $DR_R$ becomes

$$SNR_R = DR_R = 6.02q + 1.76 + 10\log\frac{f_s}{2F_{max}},$$

where $f_s$ is the sampling frequency and $F_{max}$ is the maximum frequency of the audio range. At $f_s$ = 44.1 kHz and $F_{max}$ = 20 kHz, $SNR_R = DR_R$ = 98.5 dB. As you can see, the signal-to-noise ratio is only about 2 dB more than the dynamic range given by formula (1). Note that SNR depends on $f_s$ and $F_{max}$, whereas $DR_A$ does not depend on these parameters.

However, most technical publications equate dynamic range with the signal-to-noise ratio. This is confirmed by both the AES17 and IEC 61606 standards.

The IEC 61606 standard recommends measuring SNR and DR by applying to the ADC input a sinusoidal signal with a frequency of 997 Hz and a level of minus 60 dB FS, with the mandatory use of TPDF Dithering technology. Because of the additional noise introduced, the calculated SNR is then

$$SNR_T = DR_T = 6.02q - 3.01 + 10\log\frac{f_s}{2F_{max}}.$$

Under the previous conditions, DR = SNR = 93.7 dB, not 96 dB as is often stated in the technical literature. Consequently, the calculated dynamic range also decreases. Instead of SNR, its inverse is often used, which gives the integral level of quantization noise:

$$L_{nT} = -SNR_T.$$
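The numbers 98.5 dB and 93.7 dB follow directly from the two formulas. The -3.01 dB constant can be read as 1.76 dB minus 4.77 dB: TPDF dither of ±1 LSB adds noise power $Q^2/6$, twice the rounding noise $Q^2/12$, so the total noise power triples ($10\log 3 \approx 4.77$ dB). A small calculator (hypothetical function names) reproduces the figures:

```python
import math


def band_gain_db(fs, f_max):
    """Gain from measuring noise only up to F_max instead of F_N = fs / 2."""
    return 10 * math.log10(fs / (2 * f_max))


def snr_r_db(q, fs, f_max):
    """SNR_R = DR_R without dither."""
    return 6.02 * q + 1.76 + band_gain_db(fs, f_max)


def snr_t_db(q, fs, f_max):
    """SNR_T = DR_T with TPDF dither (noise power three times higher)."""
    return 6.02 * q - 3.01 + band_gain_db(fs, f_max)


q, fs, f_max = 16, 44_100, 20_000
print(f"SNR_R = {snr_r_db(q, fs, f_max):.1f} dB")   # 98.5 dB
print(f"SNR_T = {snr_t_db(q, fs, f_max):.1f} dB")   # 93.7 dB
print(f"L_nT  = {-snr_t_db(q, fs, f_max):.1f} dB")  # -93.7 dB
```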

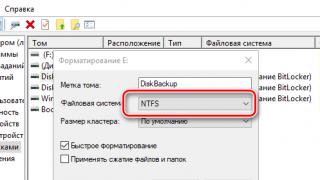

In accordance with the IEC 61606 standard, the dynamic range $DR_R$ is measured with the circuit shown in Fig. 9. A 1 kHz digital test signal with a level of minus 60 dB, generated using TPDF Dithering technology, is fed to the DAC input. The analog signal from the DAC goes to a low-pass filter with a cutoff frequency of 20 kHz, which limits the quantization noise spectrum. It then passes through an A-weighting filter, which accounts for the peculiarities of the auditory perception of quantization noise and increases the measured dynamic range by 2...3 dB. The test signal and noise are amplified by 60 dB and fed to a THD+N meter. In this meter, the tone is suppressed by a notch filter, and the noise level is measured in decibels with an RMS voltmeter. The measured noise level, taken with the opposite sign, is identified with the dynamic range of the DAC.

Fig. 9. DAC dynamic range measurement circuit
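The logic of this measurement can be imitated entirely in the digital domain. A sketch under explicit assumptions: there is no DAC, low-pass filter or A-weighting; the notch filter is replaced by subtracting a least-squares fit of the known 997 Hz tone; and fs = 48 kHz is chosen so that exactly 997 periods fit into one second. Because noise is measured over the whole band up to $F_N$, the result should be close to $6.02q - 3.01$ (about 93.3 dB for q = 16) rather than 93.7 dB.

```python
import math
import random


def simulated_dr_db(bits=16, fs=48_000, f0=997.0, level_db=-60.0, seed=0):
    rng = random.Random(seed)
    q = 1.0                                    # one LSB
    a_fs = q * 2 ** (bits - 1)                 # full-scale amplitude
    a = a_fs * 10 ** (level_db / 20)           # -60 dB FS test tone
    n = fs                                     # 1 s = exactly 997 periods of 997 Hz
    s = [math.sin(2 * math.pi * f0 * k / fs) for k in range(n)]
    c = [math.cos(2 * math.pi * f0 * k / fs) for k in range(n)]
    y = []
    for sk in s:
        d = rng.uniform(-q / 2, q / 2) + rng.uniform(-q / 2, q / 2)  # TPDF dither
        y.append(q * math.floor((a * sk + d) / q + 0.5))              # Mid-Tread rounding
    # Stand-in for the notch filter: subtract the best-fitting 997 Hz sinusoid
    bs = 2 / n * sum(yk * sk for yk, sk in zip(y, s))
    bc = 2 / n * sum(yk * ck for yk, ck in zip(y, c))
    noise_pow = sum((yk - bs * sk - bc * ck) ** 2
                    for yk, sk, ck in zip(y, s, c)) / n
    # Noise level relative to a 0 dB FS sine; its sign is flipped to give DR
    noise_level_db = 10 * math.log10(noise_pow / (a_fs ** 2 / 2))
    return -noise_level_db


print(f"simulated DR: {simulated_dr_db():.2f} dB")
print(f"6.02q - 3.01: {6.02 * 16 - 3.01:.2f} dB")
```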

When signals of minimum level are quantized, huge distortions occur, reaching 100% (Fig. 10). For this reason, in practice one has to be guided by the real dynamic range of the ADC. When determining this range, it is necessary to take into account the crest factor of musical signals, reaching 12 ..., and the headroom needed to prevent accidental overload.

As a result, the real dynamic range of a recording made with a 16-bit PCM ADC does not exceed 48...54 dB. This is not even close to enough for good studio sound recordings. When automatic level control is used in CD production, the range can be extended to 74 dB, but at the cost of a noticeable deterioration in the sound quality of low-level signals.

Fig. 10. Sequence of samples of a distorted sinusoidal waveform (16 bit, 1000 Hz, 93 dB)

Headroom at the top protects against overload when signal peaks exceed the expected value. When recording dance music, a headroom of 6 dB is sufficient; when recording symphonic music, a margin of up to 20...30 dB is sometimes needed. The dynamic range margin at the bottom prevents quiet passages from falling below the noise level and, moreover, below the threshold of audibility.

In digital paths, the upper limit of the dynamic range is set by the 0 dB FS signal level. Without Dithering technology, the lower limit of the dynamic range is set by the level

$$L_A = -DR_A,$$

i.e. the ratio $A_{min}/A_{max}$ expressed in decibels. With q = 8 bits it equals minus 48 dB, and with q = 16 bits minus 96 dB. The inevitable noise of the path raises this level.

Is an integral noise level of minus 93.7 dB a lot or a little? What matters is how far this level exceeds the threshold of audibility. With Dithering, the quantization noise becomes white noise, whose threshold of hearing is 4 dB SPL. This means that near 3 kHz, the quantization noise at q = 16 bits will exceed the hearing threshold by 22.3 dB (Fig. 11). As this figure shows, 20-bit quantization is required for the quantization noise to be inaudible.

Fig. 11. Audibility thresholds for quantization noise depending on the number of bits
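The step from 22.3 dB to 20 bits is simple arithmetic, assuming each additional bit lowers the white quantization noise by 6.02 dB at every frequency:

```python
import math

BITS_NOW = 16
EXCESS_DB = 22.3    # noise above the hearing threshold at q = 16 (from the text)
DB_PER_BIT = 6.02   # each extra bit lowers quantization noise by about 6.02 dB

extra_bits = math.ceil(EXCESS_DB / DB_PER_BIT)
print(f"extra bits: {extra_bits}, total: {BITS_NOW + extra_bits}")  # extra bits: 4, total: 20
```

Three extra bits would lower the noise by only 18.06 dB, still 4.2 dB above the threshold, so a fourth bit is needed.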

4. Expanding the dynamic range using Dithering technology

To expand the dynamic range of a PCM path with a Mid-Tread quantizer without increasing the number of bits or the sampling frequency, Dithering technology is used: when analog signals are quantized, a small amount of analog noise is added to the signal. More often, this technology is used for requantizing digital audio signals, when the recording is made with 24 bits and then requantized, usually to 16 bits, as is customary in the CD standard. In terms of noise, the quality of such a CD then corresponds to a 20-bit recording.
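The effect of dither on requantization from 24 to 16 bits can be sketched as follows. Assumptions: integer samples, rounding (Mid-Tread) in both cases, and a hypothetical 1 kHz tone with an amplitude of only 0.4 of a 16-bit LSB; truncation is not modeled. Without dither the tone falls entirely into the central cutoff and disappears; with TPDF dither of ±1 LSB it survives on average, buried in noise.

```python
import math
import random

STEP = 256  # one 16-bit LSB expressed in 24-bit units (2**8)


def requantize_24_to_16(samples_24, dither, rng):
    """Round 24-bit integer samples to 16 bits with a Mid-Tread quantizer,
    optionally adding TPDF dither of +-1 16-bit LSB before rounding."""
    out = []
    for x in samples_24:
        d = (rng.random() - rng.random()) * STEP if dither else 0.0
        out.append(math.floor((x + d) / STEP + 0.5))
    return out


def tone_amplitude(y, f, fs):
    """Amplitude of the component at frequency f, found by correlation."""
    s = sum(v * math.sin(2 * math.pi * f * k / fs) for k, v in enumerate(y))
    c = sum(v * math.cos(2 * math.pi * f * k / fs) for k, v in enumerate(y))
    return 2 / len(y) * math.hypot(s, c)


# Hypothetical 24-bit recording: a 1 kHz tone with an amplitude of 0.4 of a
# 16-bit LSB, i.e. below the resolution of the 16-bit target format
fs = 48_000
tone_24 = [round(0.4 * STEP * math.sin(2 * math.pi * 1000 * k / fs)) for k in range(fs)]

rng = random.Random(0)
plain = requantize_24_to_16(tone_24, dither=False, rng=rng)
dithered = requantize_24_to_16(tone_24, dither=True, rng=rng)
print("without dither:", tone_amplitude(plain, 1000, fs))      # the tone is lost
print("with TPDF dither:", tone_amplitude(dithered, 1000, fs))  # close to 0.4 LSB
```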

In the process of requantization, the truncating operation is more often used, in which the least significant bits of code words are simply discarded. In this case, the output signal

Dynamic Audio Processing on PC

(c) Yuri Petelin

http://www.petelin.ru/

In a previous article, I talked about software tools for removing noise and sound distortion and listed the "sound cleaning" operations that need to be performed on a song recording, from correcting mistakes in microphone placement to mastering, which turns a group of songs recorded on one disc into an aesthetically unified whole. This topic is so serious that it is worth devoting the next few articles to it.

I'll start, as last time, with the main thesis: sound recorded by an amateur in a home computer studio cannot, of course, match the quality of professional studios, but it can come close.

As I write, I listen out of the corner of my ear to what the TV is mumbling. Here is a film billed in the announcement as a "superproject": Tsar Peter is dying, and a struggle for the throne is under way. Passions are raging... On other channels, investigator Turetsky is looking for stolen rare volumes, and the old "experts" have come out of retirement to conduct their investigation once again, because, it turns out, "someone here and there sometimes does not want to live honestly"... Such different stories, yet they have something in common: the sound. Bad sound. Terrible sound, recorded by professionals in professional studios. Especially in the "superproject": when the groans of the dying tsar and the cries of those close to him subside for a moment, the background sounds come through clearly; you can even hear the tape transport mechanisms of the cameras.

The following conclusions arise:

1. It is clear that in our country films have not been dubbed in a sound studio for a long time. Probably there is no money for it. The way the sound is recorded on the set is the way it goes into the edited tape.

2. Some professionals do not use computer noise reduction. It's not very clear why. Don't know about them? No time to read special literature? But even the elementary information that is contained on the five pages of my previous article would be enough for a start.

3. Some of the people who record sound for TV movies don't know how to use dynamics.

We'll talk about dynamic processing now. The topic is complex, but if you concentrate, you will definitely understand everything, and the sound of your projects will become professional. Well, perhaps not professional, but amateur sound of a kind that everyone will enjoy listening to. For doubters, I suggest evaluating the readers' works recorded on the disc that accompanies the new book "Sonar. Secrets of Mastery". By the way, nothing prevents you from trying your hand: your composition may well appear in the music collection on the next such disc.

So, dynamic processing. Formally, it consists in changing the dynamic range of audio signals. But this phrase alone is clearly not enough to use it for the benefit of sound quality. So let's start from the beginning.

Audio level and dynamic range

A source of sound vibrations radiates energy into the surrounding space. The amount of sound energy passing per second through an area of 1 m² perpendicular to the direction of sound propagation is called the intensity (strength) of sound.

In normal conversation, the acoustic power is approximately 10 microwatts. The power of the loudest violin sounds can reach 60 microwatts, and the power of organ sounds ranges from 140 to 3200 microwatts.

A person hears sound in an extremely wide range of sound pressures (intensities). One of the reference values of this range is the standard threshold of hearing - the effective value of the sound pressure created by a harmonic sound vibration of a frequency of 1000 Hz, barely audible to a person with average hearing sensitivity.

The threshold of hearing corresponds to a sound intensity $I_0 = 10^{-12}$ W/m² or a sound pressure $p_0 = 2\times10^{-5}$ Pa.

The upper limit is determined by the values $I_{max}$ = 1 W/m² or $p_{max}$ = 20 Pa. When a sound of such intensity is perceived, a person experiences pain.

For sound pressures significantly exceeding the standard hearing threshold, the magnitude of the sensation is proportional not to the amplitude of the sound pressure $p$, but to the logarithm of the ratio $p/p_0$. Therefore, sound pressure and sound intensity are often measured in logarithmic units, decibels (dB), relative to the standard hearing threshold.
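The two decibel scales can be checked against the limits just quoted. Pressure is converted with 20 log and intensity with 10 log (intensity is proportional to pressure squared), so 20 Pa and 1 W/m² both correspond to 120 dB. The helper names are hypothetical.

```python
import math

P0 = 2e-5    # Pa, standard threshold of hearing at 1 kHz
I0 = 1e-12   # W/m^2, corresponding intensity


def spl_db(p):
    """Sound pressure level: 20 log10(p / p0)."""
    return 20 * math.log10(p / P0)


def intensity_level_db(i):
    """Intensity level: 10 log10(I / I0) (intensity ~ pressure squared)."""
    return 10 * math.log10(i / I0)


print(f"{spl_db(20.0):.1f}")              # 120.0, the pain threshold
print(f"{intensity_level_db(1.0):.1f}")   # 120.0, the same limit via intensity
print(f"{spl_db(2e-5):.1f}")              # 0.0, the threshold of hearing
```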

The range of change in sound pressure from the absolute threshold of hearing to the pain threshold is for different frequencies from 90 dB to 130 dB.

If the human ear simultaneously perceives two or more sounds of different loudness, then a louder sound drowns out (absorbs) weak sounds. There is a so-called masking of sounds, and the ear perceives only one, louder, sound. Immediately after exposure to a loud sound, hearing sensitivity to weak sounds is reduced. This ability is called hearing adaptation.

Thus, the threshold of audibility largely depends on the listening conditions: in silence or against the background of noise (or other disturbing sound). In the latter case, the hearing threshold is increased. This indicates that the interference masks the useful signal.

The human auditory system has a certain inertia: the sensation of a sound's onset, as well as of its end, does not arise immediately.

An audio signal is a random process: its acoustic or electrical characteristics change continuously over time. Trying to track the random changes in each realization of this chaos makes little sense. His Majesty Chance can be tamed and given features of determinism by using averaged parameters, such as the level of the audio signal.

The level of an audio signal characterizes the signal at a given moment. It is the audio signal voltage, rectified and averaged over a certain preceding time interval, expressed in decibels.
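A minimal sketch of this definition: full-wave rectification followed by averaging over the preceding interval, then conversion to dB. The one-pole (RC-type) averager and its 60 ms time constant (the hearing time constant mentioned in the lecture notes above) are assumptions; real VU and peak program meters use standardized ballistics that are not modeled here.

```python
import math


def signal_level_db(samples, fs, tau_ms=60.0, ref=1.0):
    """Running level in dB: full-wave rectification followed by a one-pole
    (RC-type) averager with time constant tau_ms over the preceding signal."""
    alpha = math.exp(-1.0 / (fs * tau_ms / 1000.0))   # per-sample decay factor
    avg = 0.0
    levels = []
    for x in samples:
        avg = alpha * avg + (1.0 - alpha) * abs(x)      # rectify, then average
        levels.append(20 * math.log10(avg / ref) if avg > 0 else float("-inf"))
    return levels


fs = 48_000
sine = [math.sin(2 * math.pi * 1000 * k / fs) for k in range(fs // 2)]  # 0.5 s, 1 kHz
levels = signal_level_db(sine, fs)
print(f"settled level: {levels[-1]:.2f} dB")  # the rectified mean of a sine is 2/pi
```

For a unit-amplitude sine the level settles near 20 log(2/π), about -3.9 dB, after a few time constants.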

The dynamic range of an audio signal is understood as the ratio of the maximum sound pressure to the minimum, or the ratio of the corresponding voltages. This definition says nothing about which pressure and voltage are considered maximum and minimum, which is probably why the dynamic range defined this way is called theoretical. Alternatively, the dynamic range of an audio signal can be determined experimentally as the difference between the maximum and minimum levels over a sufficiently long period. This value depends strongly on the chosen measurement time and the type of level meter.

The dynamic ranges of musical and speech acoustic signals of various types, measured using instruments, average:

80 dB for symphony orchestra

45 dB for choir

35 dB for pop music and vocal soloists

25 dB for speaker speech

When recording, the levels need to be adjusted. This is explained by the fact that the original (unprocessed) signals often have a large dynamic range (for example, up to 80 dB for symphonic music), while at home audio programs are heard in the range of about 40 dB.

There is a disadvantage to manually adjusting levels. The reaction time of the sound engineer is about 2 s, even if the score of the composition is known to him in advance. This leads to an error in maintaining the maximum levels of music programs up to 4 dB in both directions.

Amplifiers, loudspeakers and even human ears need to be protected from overloads caused by sudden jumps in the amplitude of the audio signal; that is, the signal must be limited in amplitude.

The dynamic range of the signal must be coordinated with the dynamic ranges of recording, amplifying, and transmitting devices.

To increase the coverage area of FM radio stations, the dynamic range of the audio signal must be compressed. To reduce the noise level in pauses, it is desirable to expand the dynamic range.

In the end, fashion, which dictates its conditions in all spheres of human activity, including sound recording, requires a rich, dense sound of modern music, which is achieved by a sharp narrowing of its dynamic range.

Sound wave (loudness envelope) of a fragment of S. Rachmaninov's opera "Aleko" and of contemporary dance music.

In classical music, nuances are important, dance music should be "powerful".

This dictates the need to use devices for automatic processing of signal levels.

Dynamics devices

Devices for automatic processing of signal levels can be classified according to a number of criteria, the most important of which are response inertia and the function performed.

According to the response inertia criterion, there are non-inertial (instantaneous action) and inertial (with a variable transmission coefficient) automatic level controllers:

When the signal level at the input of a non-inertial autoregulator exceeds the nominal value, the output becomes a trapezoidal rather than a sinusoidal signal. Although non-inertial autoregulators are simple, their use leads to strong distortions.

An inertial automatic level controller is one whose transmission coefficient changes automatically depending on the input signal level. Such controllers distort the waveform only for a short time, and by choosing the optimal response time these distortions can be made hardly perceptible by ear.

Depending on the functions performed, inertial automatic level controllers are divided into:

Quasi Peak Limiters

Level stabilizers

Dynamic Range Compressors

Dynamic range expanders

Compander noise reduction systems

Noise gates (gates)

Devices with complex dynamic range conversion

The main characteristic of the dynamics processing device is the amplitude characteristic - the dependence of the output signal level on the signal level at the input.

The level limiter (limiter) is an autoregulator, in which the transmission coefficient changes so that when the input signal exceeds the nominal level, the signal levels at its output remain practically constant, close to the nominal value. With input signals that do not exceed the nominal value, the level limiter works like a normal linear amplifier. The limiter should respond to level changes instantly.

Limiter amplitude response

The auto level stabilizer is designed to stabilize signal levels. This may be necessary to equalize the volume of the sound of individual fragments of the phonogram. The principle of operation of the autostabilizer is similar to the principle of operation of the limiter. The difference is that the rated output voltage of the auto stabilizer is approximately 5 dB less than the rated output level of the limiter.

A compressor is a device whose gain increases as the input signal level decreases. Compression raises the average power, and hence the loudness, of the processed signal and narrows its dynamic range.

Compressor amplitude characteristic

The expander has an amplitude characteristic inverse to the compressor. It is used when it is necessary to restore the dynamic range converted by the compressor.

Amplitude characteristic of the expander

A compander is a system consisting of a compressor and an expander connected in series. It is used to reduce the noise level in the recording or transmission paths of audio signals.

Threshold squelch (gate) is an auto-regulator, in which the gain is changed so that when the input signal levels are less than the threshold, the output signal amplitude is close to zero. For input signals above the threshold, the squelch works like a conventional linear amplifier.
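The amplitude characteristics described above can be written as static functions of the input level in dB. A sketch only: the thresholds, the 3:1 ratio and the function names are hypothetical; the compressor is modeled as downward compression above a threshold (one common form, consistent with gain rising as the input level falls); and real devices add soft knees and time constants. The expander is built as the exact inverse of the compressor, so the pair forms an ideal compander.

```python
def limiter(l_in, nominal=-6.0):
    """Linear up to the nominal level, then (ideally) constant output level."""
    return min(l_in, nominal)


def compressor(l_in, threshold=-30.0, ratio=3.0):
    """Downward compression above the threshold: `ratio` dB in -> 1 dB out."""
    return l_in if l_in <= threshold else threshold + (l_in - threshold) / ratio


def expander(l_in, threshold=-30.0, ratio=3.0):
    """Characteristic inverse to `compressor`, used to restore the range."""
    return l_in if l_in <= threshold else threshold + (l_in - threshold) * ratio


def gate(l_in, threshold=-60.0, closed=-120.0):
    """Below the threshold the output is pushed to a very low level."""
    return l_in if l_in >= threshold else closed


print(" in  limiter  compressor  expander(compressor)  gate")
for l_in in (-80, -60, -40, -30, -20, -10, 0):
    print(f"{l_in:4d} {limiter(l_in):8.1f} {compressor(l_in):11.1f}"
          f" {expander(compressor(l_in)):21.1f} {gate(l_in):6.1f}")
```

With these settings an input range from -30 to 0 dB is squeezed into -30 to -20 dB by the compressor, and the expander restores it exactly.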

Autoregulators for complex dynamic range conversion have multiple control channels. For example, a combination of a limiter, an autostabilizer, an expander and a threshold noise suppressor makes it possible to stabilize the loudness of different fragments of a composition, maintain maximum signal levels and suppress noise in pauses.

Structure of dynamics processing devices

An inertial level controller has a main channel and a control channel. If the control channel takes its signal from the input of the main channel, we are dealing with direct (feed-forward) control; if it takes it from the output, with reverse (feedback) control.

The main channel in a direct control circuit includes audio amplifiers, a delay line, and an adjustable element. The latter, under the influence of a control voltage, is able to change its transmission coefficient. The main channel in the circuit with reverse regulation contains all of the listed elements with the exception of the delay line.

The fundamentally important elements of the control channel are the detector and the integrating (smoothing) circuit. As long as the voltage at the input of the circuit does not exceed the threshold (reference), the control channel does not generate a control signal, and the transmission coefficient of the regulated element does not change. When the threshold is exceeded, the detector generates a pulsed voltage proportional to the difference between the current signal value and the reference voltage. The integrating circuit averages the difference voltage and generates a control voltage proportional to the signal level at the input of the control channel.

The delay line in the main channel of a direct-regulation circuit lets the control channel act ahead of time: a jump in signal level is detected before the signal reaches the controlled element. This makes it possible, in principle, to eliminate unwanted transients and to handle level changes almost perfectly. However, the phase response of an analog delay line is nonlinear: different spectral components are shifted in phase by different amounts, which distorts the waveform of a broadband signal passing through it. Digital delay lines do not have this drawback, but the signal must be digitized first. In software (virtual) processors the signal is already processed digitally, so implementing these functional elements algorithmically poses no problem.
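With a digital delay line, the "lead" of the control channel is easy to express in code. The Python sketch below is a simplified peak limiter, assuming a hard ceiling and no gain smoothing. It processes a whole buffer offline, so instead of physically delaying the main channel it looks `lookahead` samples ahead in the input, which is equivalent to delaying the signal by that many samples before the adjustable element. Names and parameter values are illustrative.

```python
def lookahead_limiter(samples, ceiling=0.5, lookahead=32):
    """Feedforward peak limiter with look-ahead (offline sketch)."""
    # Gain each sample would need on its own to stay at or below the ceiling.
    need = [min(1.0, ceiling / abs(x)) if x != 0 else 1.0 for x in samples]
    # Pad so the last samples also get a full look-ahead window.
    need += [1.0] * lookahead
    out = []
    for n, x in enumerate(samples):
        # The control channel has already seen the next `lookahead` samples,
        # so the gain drops before a peak reaches the adjustable element.
        gain = min(need[n:n + lookahead + 1])
        out.append(x * gain)
    return out


signal = [0.1] * 100
signal[50] = 1.0                      # a sudden level surge
limited = lookahead_limiter(signal)
print(max(abs(x) for x in limited))   # the surge never exceeds the ceiling
```

A practical limiter would also ramp the gain down across the look-ahead window and release it gradually, since abrupt gain steps like the ones here would be audible.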


Bits, hertz... What lies behind these terms? When the audio compact disc standard was developed, the values chosen were 44.1 kHz and 16 bits. Why these values? What motivated the choice, and why are there attempts to raise them to, say, 96 kHz and 24 or even 32 bits?

Let's start with the sampling resolution, that is, the bit depth. In practice the choice is between 16, 24 and 32 bits. Intermediate values would sometimes suit audio better, but they are inconvenient for digital hardware, which handles data in whole bytes.

What does this parameter determine? In a nutshell, the dynamic range: the range of levels that can be reproduced simultaneously, from the maximum amplitude (0 dB) down to the smallest amplitude the resolution allows, roughly -93 dB for 16-bit audio. Perhaps surprisingly, this is closely tied to the noise level of the recording. In principle, 16-bit audio can carry signals as weak as -120 dB, but such signals are of little practical use because of a fundamental phenomenon, sampling noise. Whenever we take a digital value, we make an error by rounding the real analog value to the nearest available digital value. The smallest possible error is zero; the largest is half of the least significant bit (hereinafter the least significant bit is abbreviated MB). This error produces the so-called sampling noise: a random discrepancy between the digitized signal and the original. The noise is always present and has a maximum amplitude of 0.5 MB; it can be thought of as random values mixed into the digital signal. It is also called rounding noise or quantization noise.
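The claim that rounding never errs by more than 0.5 MB is easy to check numerically. The Python sketch below quantizes random values to a 16-bit grid (taking MB = 2^-15 of full scale, an assumption about the scaling) and measures the error. The RMS value it reports should come out near 1/√12 ≈ 0.29 MB, the standard figure for uniformly distributed rounding error.

```python
import math
import random

BITS = 16
STEP = 2.0 ** -(BITS - 1)   # one MB relative to full scale (assumed scaling)


def quantize(x):
    """Round a value in [-1, 1) to the nearest step of the 16-bit grid."""
    return round(x / STEP) * STEP


rng = random.Random(0)
errors = [quantize(x) - x for x in (rng.uniform(-0.9, 0.9) for _ in range(100_000))]

max_err = max(abs(e) for e in errors) / STEP                          # in MB
rms_err = math.sqrt(sum(e * e for e in errors) / len(errors)) / STEP  # in MB
print(f"max error: {max_err:.3f} MB, rms error: {rms_err:.3f} MB "
      f"(uniform-error theory: {1 / math.sqrt(12):.3f} MB)")
```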

Let us look more closely at what a signal level measured in bits means. The strongest signal in digital audio is usually taken as 0 dB, which corresponds to all magnitude bits set to 1. If the most significant bit (hereinafter SB) is set to zero, the resulting value is halved, which corresponds to a level drop of 6 dB. No combination of the remaining bits can reach a level above -6 dB, so SB is, in effect, responsible for levels from -6 to 0 dB: it is the 0 dB bit. The next bit down is responsible for the -6 dB level, and the lowest bit for a level of -(N - 1) × 6 dB, where N is the number of bits. For 16-bit audio, MB corresponds to -90 dB. When we say 0.5 MB, we do not mean -90/2 dB but half of the MB step. Counting that half step as another 3 dB gives -93 dB, the figure used in this text; strictly, halving an amplitude lowers its level by 6 dB, so the peak rounding error lies near -96 dB, and -93 dB should be read as a rounded, slightly pessimistic estimate.
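The bit-to-decibel bookkeeping above can be reproduced with a few lines of Python. The sketch uses the exact factor 20·log10(2) ≈ 6.02 dB per bit rather than the rounded 6 dB and counts levels from SB = 0 dB as the text does. For comparison it also prints the textbook signal-to-noise ratio of an ideal N-bit converter for a full-scale sine, 6.02·N + 1.76 dB.

```python
import math

BITS = 16

# Level of each magnitude bit relative to full scale: SB = 0 dB, then -6.02 dB per bit.
levels = [20 * math.log10(2.0 ** -k) for k in range(BITS)]
half_mb = 20 * math.log10(0.5 * 2.0 ** -(BITS - 1))

print(f"SB: {levels[0]:.1f} dB, next bit: {levels[1]:.1f} dB, MB: {levels[-1]:.1f} dB")
print(f"0.5 MB: {half_mb:.1f} dB")
print(f"ideal full-scale sine SNR: {6.02 * BITS + 1.76:.1f} dB")
```

It gives about -90.3 dB for MB and -96.3 dB for half of MB; the ideal SNR comes out at about 98 dB.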

Back to the choice of resolution. As already mentioned, digitization adds noise at the 0.5 MB level, so a recording digitized at 16 bits always carries noise at -93 dB. It can transmit even quieter signals, but the noise stays at -93 dB. This defines the dynamic range of digital audio: its lower limit lies where the signal-to-noise ratio turns into a noise-to-signal ratio, that is, where there is more noise than useful signal. The main criterion for digitization is therefore how much noise we can tolerate in the reconstructed signal. The answer depends partly on how much noise the original recording contained. An important consequence: if we digitize something with a noise floor of -80 dB, there is no reason at all to use more than 16 bits. On the one hand, -93 dB noise adds very little to the comparatively large -80 dB noise already present; on the other hand, below -80 dB the recording itself is already dominated by noise, so there is nothing useful to digitize and transmit there.
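The statement that -93 dB noise adds very little to -80 dB noise follows from the fact that uncorrelated noise sources add in power, not in amplitude. A short Python check, with an illustrative helper name:

```python
import math


def add_noise_db(*levels_db):
    """Total level of uncorrelated noise sources, added in power."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


total = add_noise_db(-80.0, -93.0)
print(f"{total:.2f} dB")  # about -79.79 dB
```

The 16-bit sampling noise raises the total by only about 0.2 dB.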

Theoretically, this is the only criterion for choosing the resolution: apart from this noise, digitization introduces no distortion or inaccuracy at all. Practice, perhaps surprisingly, follows the theory almost exactly. This is what guided the people who chose 16-bit resolution for the audio CD. Noise at -93 dB is a good figure that matches our perception almost exactly: the difference between the pain threshold (140 dB) and ordinary urban background noise (30-50 dB) is roughly 100 dB, and since nobody listens to music at painful levels, the usable range is narrower still. As a result, the real noise of the room, or even of the equipment, is much stronger than the sampling noise. Only if we can hear levels below -90 dB in a digital recording will we perceive the sampling noise; otherwise we can never tell whether the audio was digitized or is live. In terms of dynamic range there is simply no other difference. Still, a person can meaningfully hear across a range of about 120 dB, and it would be good to preserve all of it, which 16 bits appear unable to do.

But this is only at first glance. A technique called shaped dithering changes the spectrum of the sampling noise and moves almost all of it into the region above roughly 7-15 kHz. In effect, we give up frequency resolution (we stop reproducing very quiet high frequencies) in exchange for extra dynamic range in the rest of the band. Combined with a peculiarity of our hearing, whose sensitivity in this displaced high-frequency region is tens of dB lower than in the most sensitive region (2-4 kHz), this makes it possible to transmit useful signals with relatively little audible noise a further 10-20 dB below -93 dB. Thus the dynamic range of 16-bit audio, as perceived by a human, is about 110 dB. Moreover, a person cannot hear sounds 110 dB quieter than a loud sound heard just before: the ear, like the eye, adapts to the loudness of its surroundings, so the simultaneous range of our hearing is relatively small, about 80 dB. We will discuss dithering in more detail after covering the frequency aspects.

For CDs, the sampling rate is 44,100 Hz. A common opinion holds that this means all frequencies up to 22.05 kHz are reproduced, but that is not entirely true. The only thing we can say unequivocally is that the digitized signal contains no frequencies above 22.05 kHz. How digitized sound is actually reproduced always depends on the specific equipment, and it is never as perfect, or as close to theory, as we would like. Everything depends on the particular DAC.

First, let us work out what we would like to get. A person of middle age (or rather, a young person) can sense sounds from 10 Hz to 20 kHz and hear them meaningfully from 30 Hz to 16 kHz. Sounds above and below this range are perceived but do not form an auditory sensation. Sounds above 16 kHz are felt as an annoying, unpleasant factor: pressure on the head, pain; especially loud ones cause such sharp discomfort that you want to leave the room. The unpleasant sensation is so strong that some security devices rely on it: a few minutes of very loud high-frequency sound will drive anyone to distraction, and stealing anything in such an environment becomes impossible. Sounds below 30-40 Hz at sufficient amplitude are perceived as vibration coming from objects (loudspeakers), or rather, simply as vibration. Hearing can barely locate such low sounds in space, so other senses take over: we feel these sounds with our body through touch.

To transmit sound as it is, it would be good to preserve the entire perceived range from 10 Hz to 20 kHz. In theory, low frequencies pose no problem at all for digital recording. In practice, every DAC based on delta (delta-sigma) technology has a potential source of problems. Such devices now make up about 99% of the market, so the problem exists in one form or another, although frankly bad devices are almost nonexistent (only the cheapest chips). We can assume that low frequencies are fine: this is only a solvable playback problem, and well-designed DACs costing more than $1 cope with it successfully.

With high frequencies things are somewhat worse, or at least more complicated. Almost all the improvements and added complexity in DACs and ADCs aim at more faithful transmission of high frequencies. By "high" we mean frequencies comparable to the sampling rate; for 44.1 kHz, that is 7-10 kHz and above. Explanatory figure:

The figure shows a 14 kHz tone sampled at 44.1 kHz. The dots mark the moments at which the signal amplitude is sampled. There are only about three points per period of the sine wave, and restoring the original frequency as a sine wave takes some imagination. The sine wave itself was drawn by CoolEdit, which did show imagination: it reconstructed the signal from the data. A DAC does the same thing using its reconstruction filter. Relatively low frequencies are almost ready-made sine waves, but for high frequencies the shape, and hence the quality of reconstruction, depends entirely on the DAC's reconstruction system. CoolEdit has a very good reconstruction filter, yet even it fails in an extreme case, for example at 21 kHz:

The waveform (blue lines) is clearly wrong, and features have appeared that were not in the original. This is the main problem in reproducing high frequencies. The problem, however, is not as bad as it may seem. All modern DACs use multirate technology: the signal is first reconstructed digitally at a sampling rate several times higher and only then converted to analog at that increased rate. The task of reconstructing high frequencies thus shifts to digital filters, which can be of very high quality, so high that in expensive devices the problem is removed entirely and frequencies up to 19-20 kHz are reproduced without distortion. Oversampling is also used in inexpensive devices, so in principle this problem can be considered solved as well. Devices around $30-$60 (sound cards), or music centers up to $600 whose DACs are usually similar to those of such sound cards, reproduce frequencies up to 10 kHz perfectly, up to 14-15 kHz tolerably, and the rest somehow. This is quite enough for most real musical applications, and anyone who needs higher quality will find it in professional-grade devices, which are not much more expensive; they are simply designed competently.
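The digital part of such a multirate scheme can be sketched as windowed-sinc interpolation. The Python code below is a minimal illustration, not the filter of any particular DAC: it computes three new samples between each pair of original ones (factor 4) using a Hann-windowed sinc kernel 64 input samples long. The kernel, its length and the function names are assumptions. Applied to the 14 kHz tone from the figure, the interpolated values fall close to the true sine wave.

```python
import math


def sinc(x):
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def oversample(samples, factor=4, half_width=32):
    """Interpolate `samples` to `factor` times the rate with a Hann-windowed sinc."""
    out = []
    for m in range(len(samples) * factor):
        t = m / factor                       # output position in input-sample units
        centre = math.floor(t)
        acc = 0.0
        for k in range(centre - half_width + 1, centre + half_width + 1):
            if 0 <= k < len(samples):
                d = t - k
                window = 0.5 * (1 + math.cos(math.pi * d / half_width))  # Hann taper
                acc += samples[k] * sinc(d) * window
        out.append(acc)
    return out


fs, f = 44100.0, 14000.0
x = [math.sin(2 * math.pi * f * n / fs) for n in range(400)]
y = oversample(x)
# Compare interior points (away from the edges) with the true 14 kHz sine wave.
err = max(abs(y[m] - math.sin(2 * math.pi * f * (m / 4) / fs)) for m in range(160, 1440))
print(f"max interpolation error: {err:.5f}")
```

Near 21 kHz, just below the 22.05 kHz Nyquist frequency, the same kernel is already in its transition band and begins to attenuate the tone, which mirrors the difficulty the text describes at the very top of the band.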

Back to dithering: let's see how the useful dynamic range can be extended beyond 16 bits.

The idea of dithering is to mix noise into the signal. Strange as it may sound, to reduce noise and the unpleasant effects of quantization, we add noise of our own. Let's take an example using CoolEdit's ability to work at 32 bits. 32 bits are 65,536 times more precise than 16 bits, so here a 32-bit signal can be treated as the analog original, and converting it to 16 bits as digitization. The image shows 32-bit audio: music recorded at such a low level that its loudest moments reach only -110 dB:

This is far below the dynamic range of 16-bit audio (1 MB of the 16-bit representation equals one unit on the right-hand scale), so simply rounding the data to 16 bits would give complete digital silence.

Let's add white noise at a level of 1 MB, that is, -90 dB (roughly the level of the quantization noise):

Now we convert to 16 bits (only integer values are possible: 0, 1, -1, ...):

(Ignore the blue line, which also takes intermediate values: it is CoolEdit's filter simulating the real amplitude after the reconstruction filter. The sample points themselves take only the values 0 and 1.)

As you can see, some of the data survives: where the original signal was higher, there are more 1s; where it was lower, more 0s. To hear the result, we amplify the signal by 14 bits (about 84 dB). The result can be downloaded and listened to (dithwht.zip, 183 KB).

We hear this sound with huge noise at -90 dB (before the listening gain), while the useful signal is only -110 dB. So we already have -110 dB audio carried in 16 bits. In principle, this is the standard way of extending dynamic range, and it often happens almost by itself, since there is enough noise everywhere. In itself, however, it is rather pointless: the sampling noise stays at the same level, and transmitting a signal weaker than the noise is not a very sensible goal...
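The experiment can be repeated numerically. The Python sketch below assumes the text's scale, 1 MB = -90 dB, so a -110 dB tone has an amplitude of 0.1 MB. Plain rounding turns it into digital silence, whereas adding dither first preserves it on average. The sketch uses triangular (TPDF) dither with a ±1 MB peak, a common choice, instead of the plain white noise used in the CoolEdit example; the function name and the least-squares "surviving gain" estimate are illustrative assumptions.

```python
import math
import random


def to_16_bit(x_mb, rng=None):
    """Round a value given in MB units to an integer 16-bit code,
    optionally adding TPDF dither (peak +/-1 MB) first."""
    if rng is not None:
        x_mb += rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
    return round(x_mb)


# Taking 1 MB = -90 dB as in the text, a -110 dB tone has an amplitude of 0.1 MB.
amp = 10 ** ((-110 + 90) / 20)
signal = [amp * math.sin(2 * math.pi * 440 * n / 44100) for n in range(200_000)]

plain = [to_16_bit(s) for s in signal]
rng = random.Random(1)
dithered = [to_16_bit(s, rng) for s in signal]

# Least-squares estimate of how much of the original tone survives in the output.
gain = sum(q * s for q, s in zip(dithered, signal)) / sum(s * s for s in signal)
print("without dither:", set(plain))
print(f"with dither: surviving gain {gain:.2f}")
```

Without dither the output is all zeros; with dither the estimated gain comes out close to 1, meaning the tone is still present on average, buried in noise of about 0.5 MB RMS.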

A more sophisticated approach is shaped dithering. Since we do not hear high frequencies in very quiet sounds anyway, the bulk of the noise power should be directed to those frequencies, and the noise can then even be quite strong; here I use a level of 4 MB (two bits of noise). The amplified result, after filtering out the high frequencies (we would not hear them at this sound's normal volume anyway), is in ditshpfl.zip, 1023 KB (unfortunately, this sound no longer compresses well). This is already quite good transmission for such an extremely low level: the noise is roughly equal in power to the -110 dB sound itself! Important note: we raised the real sampling noise from 0.5 MB (-93 dB) to 4 MB (-84 dB) while lowering the audible sampling noise from -93 dB to about -110 dB. The signal-to-noise ratio got worse, but the noise moved into the high-frequency region and became inaudible, which significantly improved the real (human-perceptible) signal-to-noise ratio. In practice, this already corresponds to the sampling noise of 20-bit audio. The only requirement of this technique is spare frequency room for the noise. 44.1 kHz audio allows the noise to be placed at 10-20 kHz, which is inaudible at quiet levels. If you digitize at 96 kHz, however, the frequency region available for inaudible noise is so large that with shaped dithering 16 bits effectively become a full 24.
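The redistribution of noise can be illustrated with the simplest form of noise shaping, first-order error feedback: each rounding error is subtracted from the next sample, so the total error becomes e[n] - e[n-1], which is small at low frequencies and large at high ones. Commercial shaped-dithering filters are higher-order and psychoacoustically weighted, so this Python sketch only shows the principle. The TPDF dither, the 32-sample moving average used as a crude low-pass "audible band" and the function names are assumptions.

```python
import math
import random


def requantize(samples, shaped, seed=0):
    """Round samples (in MB units) to integers with TPDF dither and,
    optionally, first-order error-feedback noise shaping."""
    rng = random.Random(seed)
    out, err = [], 0.0
    for x in samples:
        v = x - err if shaped else x           # feed back the previous error
        dither = rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        q = round(v + dither)
        if shaped:
            err = q - v                        # error made at this step
        out.append(q)
    return out


def noise_powers(error, m=32):
    """Return (total power, power left after an m-sample moving average)."""
    total = sum(e * e for e in error) / len(error)
    means = [sum(error[i:i + m]) / m for i in range(0, len(error) - m + 1, m)]
    return total, sum(v * v for v in means) / len(means)


fs = 44100
x = [3.3 * math.sin(2 * math.pi * 1000 * n / fs) for n in range(64_000)]
for shaped in (False, True):
    y = requantize(x, shaped)
    total, low = noise_powers([q - s for q, s in zip(y, x)])
    print(f"shaped={shaped}: total noise {10 * math.log10(total):.1f} dB, "
          f"low band {10 * math.log10(low):.1f} dB (re 1 MB)")
```

With shaping, the total noise power rises by roughly 3 dB while the low-band noise falls by roughly 12 dB: the same trade the text describes, a worse measured signal-to-noise ratio but less noise where the ear is sensitive.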

[Note: the PC speaker is a one-bit device, but with a fairly high maximum switching rate (turning that single bit on and off). Using a process similar in essence to dithering, more precisely called pulse-width modulation, fairly good digital sound was played on it: 5-8 bits of resolution at low frequencies were extracted from a single bit and a high switching rate, relying on the equipment's inability to reproduce such high frequencies and on our inability to hear them. A slight high-frequency whistle, the audible part of this noise, could still be heard.]

Thus shaped dithering significantly reduces the already low sampling noise of 16-bit audio, quietly extending the useful (noise-free) dynamic range to cover the whole range of human hearing. Since shaped dithering is now always used when converting from a 32-bit working format to the final 16 bits for CD, our 16 bits are fully sufficient to convey the complete sound picture.

The only caveat is that this technique works only at the last stage, when preparing material for playback. When processing high-quality sound, it is essential to stay at 32 bits to avoid dithering after every operation, and to convert the result back to 16 bits only at the end. But if the noise level of the recording is higher than -60 dB, you can do all the processing at 16 bits without the slightest qualms. Intermediate dithering will prevent rounding distortion, and the noise it adds will be roughly 30 dB, that is, hundreds of times in power, weaker than the noise already present, and therefore irrelevant.

| Q: | Why do people say that 32-bit sound is better than 16-bit? |

| A1: | They are wrong. |

| A2: | [They mean something slightly different: when processing or recording sound, a higher resolution is necessary, and it is always used. But for sound as a finished product, a resolution above 16 bits is not required.] |

| Q: | Does it make sense to increase the sampling rate (e.g. to 48 kHz or 96 kHz)? |

| A1: | No. With even a moderately competent DAC design, 44.1 kHz conveys the entire desired frequency range. |

| A2: | [They mean something slightly different: it makes sense, but only when processing or recording sound.] |

| Q: | Why are higher sampling rates and bit depths still being introduced? |

| A1: | Progress has to keep moving. Where and why is not so important... |

| A2: | Many processes become easier. For example, if a device is going to process sound, it is easier for it to do so at 96 kHz / 32 bits. Almost all DSPs use 32 bits for audio processing, and not having to convert formats means simpler development and a slight increase in quality. In general, sound intended for further processing is worth storing at a resolution higher than 16 bits. For hi-end devices that only play sound back, this makes no difference at all. |

| Q: | Are 32-, 24- or even 18-bit DACs better than 16-bit ones? |

| A: | In general, no. Conversion quality does not depend on bit depth at all. The AC'97 codec (found in modern sound cards under $50) is an 18-bit codec, while $500 cards, whose sound cannot even be compared with that nonsense, use 16-bit converters. For playing 16-bit audio it makes absolutely no difference. Bear in mind, too, that most DACs actually reproduce fewer bits than they accept. For example, the real noise level of a typical cheap codec is -90 dB, which corresponds to 15 bits; even if the codec itself is 24-bit, you get nothing back from the "extra" 9 bits, because whatever they produce drowns in the codec's own noise. Most cheap devices simply ignore the additional bits: although these bits arrive at the DAC's digital input, they do not really take part in sound synthesis. |

| Q: | And for recording? |

| A: | For recording, it is better to have an ADC with greater bit depth, and, again, greater real bit depth. The ADC's bit depth should match the noise level of the original recording, or simply be sufficient to reach the desired low noise level. It is also handy to have a little extra bit depth, so that the larger dynamic range tolerates less precise control of the recording level. But remember that you must always stay within the codec's real range. In reality, a 32-bit ADC, for example, is almost completely pointless, since its lowest ten bits will just produce noise all the time: noise as low as -200 dB simply cannot exist in an analog music source. |
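The relation between a converter's real noise floor and its "real" bit depth can be expressed with the standard effective-number-of-bits formula, ENOB = (SNR - 1.76) / 6.02, where SNR is measured for a full-scale sine. The Python sketch below applies it to the figures in the answers above; the function names are illustrative, and the rougher 6 dB-per-bit rule used in the text gives slightly higher values (15 bits for -90 dB).

```python
def effective_bits(noise_floor_db):
    """Effective number of bits for a noise floor given in dB below full scale."""
    snr_db = -noise_floor_db
    return (snr_db - 1.76) / 6.02


def noise_floor_db(bits):
    """Ideal noise floor of a converter with the given number of bits."""
    return -(6.02 * bits + 1.76)


print(f"-90 dB codec: {effective_bits(-90):.1f} effective bits")
print(f"ideal 32-bit converter: noise floor {noise_floor_db(32):.0f} dB")
```

An ideal 32-bit converter would need a noise floor near -194 dB, far below anything an analog music source can deliver.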

Do not expect better quality from sound with a higher bit depth or sampling rate than CD. 16-bit/44.1 kHz, pushed to the limit with shaped dithering, is fully capable of conveying all the information we care about, as long as we are not talking about the processing stage. Don't waste space on extra data in your finished material, and don't expect superior sound quality from DVD-Audio with its 96 kHz/24 bits. With a competent approach, sound created in the standard CD format has a quality that simply needs no further improvement, and responsibility for correctly producing the final data has long been taken over by well-developed algorithms and by people who know how to use them properly. In recent years you will not find a new disc made without shaped dithering and other techniques for pushing sound quality to the limit. Yes, lazy or clumsy people will find it more convenient to hand over finished material at 32 bits and 96 kHz, but in theory, is that worth several times more audio data?

My name is Louis Philippe Dion. I'm the sound designer for Rainbow Six: Siege and have been with Ubisoft for seven years. Previously I did sound design for Prince of Persia and Splinter Cell. I also worked as a product manager for Ubisoft's in-house sound engine.

Before joining the games industry, I worked as a sound engineer on the sets of several TV series and films. In my spare time, for as long as I can remember, I have been involved in music, cultivating a love for synthesizers, guitars and everything else that can produce sound.

Having a strong interest in the technical side of sound, I moved into the games industry with enthusiasm. I felt that, compared with TV and film, games offered more room for innovation and technological breakthroughs. Right now we have barely scratched the surface of what interactive audio, real-time mixing and new positioning algorithms can do, and I'm very curious to see what the future holds.

Dynamic Sound Propagation in Destructible Environments

Three basic physical phenomena govern sound propagation: reflection (sound bounces off surfaces), absorption (sound passes through a surface but loses some frequencies) and diffraction (sound bends around objects). Your ears notice these phenomena every day. In real life many other factors determine where we perceive a sound source to be, but I will focus specifically on the physics of sound propagation and how we simulate it.

The main innovation in Siege was the extensive use of diffraction, which we call "obstruction". By strategically placing "distribution nodes" on the map, we could compute the easiest path for sound from the source to the listener. How easy a path is depends on several factors: its length, the total amount of turning around corners and the penalties for the degree of destruction at particular nodes.

For example, if a wall is intact, the node inside it is ignored by the algorithm (infinite penalty). But if there is a hole in the wall, the node becomes available for choosing a propagation path. We then virtually shift the sound source in the direction of such paths, which ultimately acts as an analogue of diffraction.
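The "easiest path" search described above can be sketched as a shortest-path problem. The Python code below is a hypothetical illustration, not Ubisoft's implementation: it runs Dijkstra's algorithm over a graph of nodes, where a path's cost is its length plus a per-node penalty, and an intact wall is modelled as an infinite penalty that closes the node. The cost for turning around corners is left out for brevity (it could be folded into the edge weights); all names and numbers are invented for the example.

```python
import heapq
import math


def easiest_path(edges, penalties, source, listener):
    """Dijkstra search over sound propagation nodes (hypothetical sketch).

    edges: {node: [(neighbour, distance), ...]}
    penalties: {node: extra cost}; math.inf marks a closed node (intact wall)."""
    best = {source: 0.0}
    previous = {}
    queue = [(0.0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == listener:
            break
        if cost > best.get(node, math.inf):
            continue  # stale queue entry
        for nxt, dist in edges.get(node, []):
            new_cost = cost + dist + penalties.get(nxt, 0.0)
            if new_cost < best.get(nxt, math.inf):  # never true for closed nodes
                best[nxt] = new_cost
                previous[nxt] = node
                heapq.heappush(queue, (new_cost, nxt))
    if listener not in best:
        return None, math.inf
    path = [listener]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return path[::-1], best[listener]


edges = {"gun": [("wall", 3.0), ("door", 5.0)], "wall": [("ear", 3.0)], "door": [("ear", 5.0)]}
print(easiest_path(edges, {"wall": math.inf}, "gun", "ear"))  # intact wall: via the door
print(easiest_path(edges, {"wall": 1.0}, "gun", "ear"))       # breached wall: shorter path
```

Opening or closing nodes during play only changes the penalty table, so the search can simply be rerun when the level is damaged or barricaded.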

We also use several strategies to simulate absorption, which we call "occlusion". Depending on the source, we either play a pre-made muffled version of the sound (for example, footsteps on the floor above) or play the sound directly from the source with real-time filtering. The second option puts more load on the processor, so it is mostly reserved for weapon sounds. In real life you can hear the absorbed and the diffracted versions of a sound at the same time, and we combine them too, which gives more information about the real location of the source.

Finally, for reflection ("reverb" in our terminology) we use an impulse response (convolution) reverb. This special reverb "captures" the acoustic properties of a real room and then plays the sounds of our game in it. In my opinion, this method is light years ahead of traditional parametric reverbs, at least for simulation purposes. The only drawback is its processor load, which prevents us from using it in many cases. To get around this limitation, we "attach" the reverb to a weapon and play it back toward that weapon, which gives the player more accurate information about the enemy's location.
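An impulse-response reverb is a convolution: every sample of the dry sound triggers a scaled copy of the room's recorded impulse response, and the copies add up. The direct-form Python sketch below shows only the principle, with illustrative names. Its cost grows as the product of the two lengths, which is why practical engines use FFT-based (often partitioned) convolution, and it hints at the processor load mentioned above.

```python
def convolve(dry, impulse_response):
    """Direct-form convolution of a dry signal with a room impulse response."""
    wet = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, x in enumerate(dry):
        if x == 0.0:
            continue                      # silent samples contribute nothing
        for j, h in enumerate(impulse_response):
            wet[i + j] += x * h           # echo of sample i arriving j samples later
    return wet


print(convolve([1.0, 0.0, 0.5], [1.0, 0.5, 0.25]))  # [1.0, 0.5, 0.75, 0.25, 0.125]
```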

What is it all for?

Destructible environments were a major difficulty in developing the sound propagation system. Routing sound along the shortest path is one thing; doing so while the level changes during play is quite another, and something we had never done before. Keeping sound quality high while keeping performance in mind was not easy. We placed several nodes in destructible surfaces, and they stayed closed until the object was damaged. We experimented over and over with different numbers of possible propagation paths until we found a happy medium between accuracy and speed.

Interestingly, the propagation modifiers work in both directions: nodes can open and close. By barricading and reinforcing walls, players also change the path of sound. Such barriers do not have to block a node completely; depending on the material (wood, glass, concrete, etc.), sound can still pass through, but with a certain penalty. For example, wooden and metal barricades have different muffling settings.

With the level of destructibility in Siege, relying on occlusion alone, without obstruction, would have been a disaster: occlusion would then act as too powerful a "wallhack". Playing defense, you could simply destroy as many walls as possible and listen to exactly where the attackers were going, and they would not stand a chance. We try to keep the audio as accurate as possible, but simulating "real physics" also adds an extra layer of guesswork about the enemy's location, which balances both sides. Of course, this can be very frustrating in some situations, but such is real life.

Hereford Map

Hearing the player's actions

Silence and stillness are key principles of the game, and even with a three-minute round timer, players prefer to listen to their opponents. In fact, when we started development, we thought the game environment would sound rather dull. Waiting quietly in the bedroom of a suburban house is neither a battle in the thick of combat nor a space battle, right?

At that time not all sounds had been added to the game, and the propagation system was still at an early stage of development. But as the pieces of the puzzle slowly came together, we realized we could achieve something more serious than "fake tension": the threat you hear is real and is heading toward you. By dropping heavy ambience, we were able both to increase the suspense of the atmosphere and to leave room for players to get more accurate information about the enemy.

Sound propagation diagram on Hereford map

We paid special attention to movement sounds, which let you locate the enemy just by listening: sound cues alone can reveal an operator's weight, armor and speed. Barricades, gadgets and other devices also have distinctive sounds.

The sounds made by the player's own character are amplified for two important reasons: first, players realize that they are making a lot of noise and that this can give them away; second, it makes clear that you need to slow down if you want to listen. This is the foundation of sound design in Siege: by moving more slowly and listening to your surroundings, you gather more information and play better.

Distribution nodes close-up

Results

When we started the project, we aimed to create an unsettling atmosphere. At some point we added music and effects for this, but, as already mentioned, the best idea was to use the players themselves as sound sources. So we removed all the "fake" sounds and focused on what really matters.

Looking back now, all this seems obvious, yet few games give up the classic artificial tension of atmosphere. Getting rid of those effects, in my opinion, gave Siege a distinctive sound that is not only pleasing to the ear but also affects gameplay in many ways.

A source of sound vibrations radiates energy into the surrounding space. The amount of sound energy passing per second through an area of 1 m² perpendicular to the direction of propagation is called the sound intensity (strength).

In normal conversation, the acoustic power is approximately 10 microwatts. The loudest violin sounds can reach 60 microwatts, and organ sounds range from 140 to 3200 microwatts.

A person hears sound over an extremely wide range of sound pressures (intensities). One reference point of this range is the standard threshold of hearing: the effective (RMS) sound pressure of a 1000 Hz harmonic tone that is barely audible to a person with average hearing sensitivity.

The threshold of hearing corresponds to a sound intensity I₀ = 10⁻¹² W/m², or a sound pressure p₀ = 2×10⁻⁵ Pa.

The upper limit is set by I_max = 1 W/m², or p_max = 20 Pa. A sound of this intensity causes pain.

At sound pressures well above the standard threshold of hearing, the magnitude of the sensation is proportional not to the sound pressure amplitude p but to the logarithm of the ratio p/p₀. Therefore sound pressure and sound intensity are often expressed in logarithmic units, decibels (dB), relative to the standard threshold of hearing.
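The decibel scales can be written out explicitly. Since intensity is proportional to the square of sound pressure, the intensity level uses a factor of 10 and the pressure level a factor of 20, and both give the same number of decibels. With the threshold values I₀ = 10⁻¹² W/m² and p₀ = 2×10⁻⁵ Pa, the upper limit (1 W/m², 20 Pa) comes out at 120 dB:

```latex
\begin{aligned}
L_I &= 10\log_{10}\frac{I}{I_0}\ \mathrm{dB}, & I_0 &= 10^{-12}\ \mathrm{W/m^2},\\
L_p &= 20\log_{10}\frac{p}{p_0}\ \mathrm{dB}, & p_0 &= 2\times10^{-5}\ \mathrm{Pa},\\
L_I(1\ \mathrm{W/m^2}) &= 10\log_{10}10^{12} = 120\ \mathrm{dB}, &
L_p(20\ \mathrm{Pa}) &= 20\log_{10}10^{6} = 120\ \mathrm{dB}.
\end{aligned}
```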

The range of sound pressure from the absolute threshold of hearing to the pain threshold spans 90 to 130 dB, depending on frequency.

If the ear perceives two or more sounds of different loudness simultaneously, the louder sound drowns out (masks) the weaker ones. This is called masking, and the ear perceives only the one louder sound. Immediately after exposure to a loud sound, hearing sensitivity to weak sounds is reduced; this ability is called auditory adaptation.

Thus the threshold of audibility depends strongly on listening conditions: in silence or against a background of noise (or another interfering sound). In the latter case the threshold is raised, which means the interference masks the useful signal.

The human auditory system has a certain inertia: the sensation of a sound's onset, and of its end, does not arise instantly.

An audio signal is a random process: its acoustic or electrical characteristics change continuously over time. Trying to track the random variations of individual realizations of this chaos makes little sense. Chance can be tamed and given some features of determinism by using averaged parameters, such as the level of the audio signal.

The level of an audio signal characterizes the signal at a given moment: it is the voltage of the audio signal, rectified and averaged over a certain preceding time interval, expressed in decibels.

The dynamic range of an audio signal is the ratio of the maximum sound pressure to the minimum, or of the corresponding voltages. This definition says nothing about which pressures and voltages count as maximum and minimum, which is probably why a dynamic range defined this way is called theoretical. Alternatively, the dynamic range of an audio signal can be determined experimentally as the difference between the maximum and minimum levels over a sufficiently long period. This value depends strongly on the chosen measurement time and on the type of level meter.
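The dependence of the measured range on the meter can be shown with a toy example. The Python sketch below is an assumed, simplified level meter (full-wave rectification and plain averaging over a fixed window, expressed in dB) applied to a test signal that alternates every 10 ms between a loud tone and one 40 dB quieter; the signal and window lengths are illustrative. A 10 ms meter reports the full 40 dB range, while a 100 ms meter averages the bursts together and reports almost none.

```python
import math


def levels_db(samples, window):
    """Level meter sketch: rectify, average over `window` samples, express in dB."""
    levels = []
    for start in range(0, len(samples) - window + 1, window):
        mean_abs = sum(abs(x) for x in samples[start:start + window]) / window
        levels.append(20 * math.log10(mean_abs))
    return levels


def measured_range_db(samples, window):
    """Experimental dynamic range: maximum level minus minimum level."""
    lv = levels_db(samples, window)
    return max(lv) - min(lv)


fs = 48000
burst = fs // 100  # 10 ms
sig = []
for b in range(40):
    amp = 0.5 if b % 2 == 0 else 0.005   # loud and 40 dB quieter bursts
    sig += [amp * math.sin(2 * math.pi * 1000 * n / fs)
            for n in range(b * burst, (b + 1) * burst)]

print(f"10 ms meter:  {measured_range_db(sig, burst):.1f} dB")
print(f"100 ms meter: {measured_range_db(sig, 10 * burst):.1f} dB")
```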

The dynamic ranges of various types of musical and speech signals, measured with instruments, are on average:

80 dB for symphony orchestra

45 dB for choir

35 dB for pop music and vocal soloists

25 dB for an announcer's speech

Levels must be adjusted during recording, because the original (unprocessed) signals often have a wide dynamic range (for example, up to 80 dB for symphonic music), whereas at home audio programs are listened to within a range of about 40 dB.
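The arithmetic of fitting an 80 dB programme into a 40 dB listening range is that of a static compression curve with a 2:1 ratio. The Python sketch below assumes a threshold at the bottom of the range (-80 dB) and 40 dB of make-up gain so that peaks stay at 0 dB; these values and the function name are chosen only to match the figures in the text.

```python
def compress_db(level_db, threshold_db=-80.0, ratio=2.0, makeup_db=40.0):
    """Static compressor curve: above the threshold, every `ratio` dB of
    input change produces 1 dB of output change; then make-up gain is added."""
    if level_db > threshold_db:
        level_db = threshold_db + (level_db - threshold_db) / ratio
    return level_db + makeup_db


loudest, quietest = compress_db(0.0), compress_db(-80.0)
print(f"output range: {quietest:.0f} dB to {loudest:.0f} dB "
      f"({loudest - quietest:.0f} dB instead of 80 dB)")
```

A real compressor applies such a curve to a smoothed level signal with attack and release times rather than to each sample.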

Manual level control has a drawback: a sound engineer's reaction time is about 2 s, even if the score of the piece is known in advance. This leads to errors of up to 4 dB in either direction in maintaining the peak levels of music programs.

Amplifiers, loudspeaker systems and even human ears must be protected from overloads caused by sudden jumps in the amplitude of the audio signal, that is, the signal must be limited in amplitude.

The dynamic range of the signal must be matched to the dynamic ranges of recording, amplifying and transmitting equipment.

To extend the coverage area of FM radio stations, the dynamic range of the audio signal must be compressed. To reduce noise in pauses, it is desirable to expand the dynamic range.

Finally, fashion, which dictates its terms in every sphere of human activity including sound recording, demands the rich, dense sound of modern music, which is achieved by sharply narrowing its dynamic range.

Sound wave (loudness envelope) of a fragment of S. Rachmaninov's opera "Aleko" and of contemporary dance music.

In classical music nuances matter; dance music has to be "powerful".

This is why devices for automatic processing of signal levels are needed.