This article may be useful if you have a website that uses a content management system (Joomla, WordPress, OpenCart, etc.) and you decide to move it to another server. This requires not only transferring site files, but also transferring . Before you start transferring a website, you need to pick up a good one and order it in order to get access to a new hosting account. We, for example, offer standalone, Joomla and PrestaShop. If you want to transfer your site to a new name and need to, take your time. Our S4 shared hosting plan will provide you with a free domain as well. If your site does not have enough virtual hosting, you can always order VPS or .

To transfer a database, you must first create a dump, that is, place the contents in a separate sql file. This is done in the menu. phpMyAdmin on the hosting from where you are transferring the site. Go to phpMyAdmin, select the database you want to transfer on the left and click on the " Export» in the top menu.

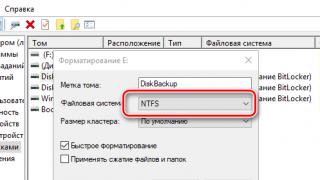

A dialog box will open, in which be sure to select the type of SQL database, then click the button OK. Wait for the database file to download to your computer.

Now this file must be correctly placed on our hosting. To do this, you must first create a MySQL database in the cPanel account, add a user to it and assign privileges to it. Below is the order of how to create a database in MySQL.

Go to cPanel and find the section " Database”, select “”.

A new window will open. In step 1, enter a database name. Please note that the database name will always be prefixed, the first half of the name will contain the cPanel account name.

After entering the name, click on the " Next step».

At step 2, you need to create a MySQL user for the database and set a password for it. After entering all the data, click the " Create user».

When setting the privileges of the created user, select the option " ALL RIGHTS” and go to the next step.

If everything was done correctly in the previous steps, the database wizard will notify you of the successful completion of creating a database on the hosting.

You can now find the created database in the list of available MySQL databases in the control panel menu of the same name.

Now, to restore the MySQL database, we import into the created database the contents of the file saved from the old hosting with the extension .sql. To do this, on the main page of cPanel, select the menu item “ phpMyAdmin” in the same section “ Database". In the window that opens, in the menu on the left, select the newly created database and click on the tab " Import» in the top menu.

Click the button select a file”, in the dialog box, select the previously saved sql file and upload it to the hosting. Make sure the encoding type is utf-8. After clicking OK, wait for a message that the database import was successful.

On this, all the steps necessary to transfer the database to another are completed.

Doing a dump (backup) of the database is very important. So I made some comments with examples on such a great utility as mysqldump.

mysqldump - the utility allows you to get a dump of the contents of a database or a set of databases to create a backup copy or transfer data to another SQL database server (not necessarily a MySQL server). The dump will contain a set of SQL commands to create and/or populate tables.

mysqldump-u root -p -f name_database >

With this command, we make a backup of the database under the name name_database to drive C in the file mydb_backup_name_database.txt

You don't have to create the file, MySQL will create it for you.

mysql-u root -p -f name_database< C:\mydb_backup_name_database.txt

with this command we import backup data from the file C:\mydb_backup_name_database.txt

Note: -f, --force is an option that tells you to continue even if you receive an SQL error, i.e. ignore errors. For example, if the table already has an identical row in everything.

In order not to be asked for a password, you need to write it immediately after -p, that is, without spaces. If the password pwd, then the example looks like this:

mysqldump-u root -p pwd-f name_database > C:\mydb_backup_name_database.txt

If you often use this command, then it is better to make a separate user with the necessary rights in order to reduce the root password

Consider more subtle mysqldump settings:

--databases allows mysqldump to include the CREATE DATABASE /*!33333 IF NOT EXISTS*/ DBNAME and USE DBNAME commands in the restore script. This will allow you to create working databases from scratch. That is, without using --databases, it is assumed that the user is restoring one database and explicitly specifies where the data to be restored should be placed. If the backup is created to make a fully working copy of the data, for example, on another MySQL server, then this key must be used;

--all-databases allows you to make copies of all databases that exist on a given MySQL server. If you need to make copies of only some databases, you just need to specify them separated by a space when calling mysqldump from the command line (see above);

Key --help. The mysqldump program has many versions. You can see what features are supported specifically by your version using this key;

--add-drop-table- a key that will cause mysqldump to add the drop table command to the final script before creating the tables. This will avoid some errors when restoring the database from a backup. Of course, you need to take into account that the tables in the working copy (if tables with the same name exist in the backup) will be deleted from the main database and recreated from the backup before being restored from the backup;

--no data. With this key, you can quickly make a copy of the structure of the table / databases without the data itself. For example, you have created a complex table and would like to save its structure for the future, but you do not need the data that is in this table in a backup copy;

--result-file=...- this switch can be used to redirect output to a file. You can use the usual unix redirection with the ">" command, or you can use this key. Who likes what;

Another very useful tip for using mysqldump in a hosting environment. As a rule, when using hosting, some restrictions are imposed on the user. For example, you cannot use more than a certain amount of physical memory (RAM, RAM). mysqldump puts all the data received from the MySQL server into memory by default, and then writes it all to disk. Accordingly, if the provider allows you to take, for example, 30 MB of memory, and the database that you make a copy of using mysqldump occupies 50 MB, of course, an error will occur here - mysqldump will not be able to work correctly and will end abnormally, which will inform you. To "force" mysqldump to write data directly to disk instead of storing it, even temporarily, in memory, use the switch --quick. This will solve the problem.

Here are a couple more useful examples:

mysqldump -u root -p pwd-f --default-character-set=cp1251 dbname| gzip -c > filename.txt .gz

You can unpack such an archive with the command:

gunzip filename.txt .gz

To know for what date the backup was made, you can write the following command:

mysqldump -uLOGIN -PPORT -hHOST -pPASS DBNAME | gzip -c > `date "+%Y-%m-%d"`.gz

and if you have to dump with different encodings, then it is convenient to use variables:

set DBCHARACTER=utf8

set dbname= breach

mysqldump -u root -p pwd-f --default-character-set=$ DBCHARACTER $dbname| bzip2 -c > sql. $DBNAME.`date "+%Y-%m-%d"`.bz2

If you want to automate the deletion of old archives, try using cron and the find command that is commonly found on unix. Running periodically

find~/archives-dir -name "*.gz" -mtime +7 -exec rm -f () \;

This way you will delete archives that are "older" than seven days.

Today I want to talk about database dumps. This phrase itself was once frightening, although in general there is nothing terrible in it. So the first and main question is: what is a database dump? The answer to it is unexpectedly simple - a database dump is a file with its contents, which allows you to restore the database from scratch. Those. it's just a copy of it. So why isn't the database dump called "db copy"? The bottom line is that the dump itself is not a database, it only allows you to recreate it. The fact is that the database can be stored in different files depending on the version of the subd, on the type of operating system and other factors. Those. it is very difficult to find all the files of one database in the system and transfer them to another system. To facilitate this task, the database dump serves just as well.

Why is it necessary to migrate the database?

Firstly, to create backups, or restore points before making important changes to the structure - in order to be able to roll back in case of any problems. For example, you installed a third-party component, and as a result, the site turned out to be inoperable and you need to quickly restore its work. This is where the need to use a database dump arises.

Secondly, when creating a site, it is usually first done on a local machine under a virtual web server and only then transferred to a hosting. And if everything is more or less clear with the transfer of files, then the transfer of the database is not so obviously a solvable task. Here, too, you can not do without a database dump.

So, what is a database dump figured out. The question remains how to use it. Let's consider a standard task in web programming - transferring a site created on a local machine to a web host. I will not describe in detail the transfer of files, I will focus on databases. So, we have a MySql database and the task is to transfer it to a web hosting. In order not to reinvent the wheel, we will use the widespread phpMyAdmin utility, which is usually always installed by your provider, and it is also included in Denver. In the end, the utility is also free and available for download on the official website. It works on a web server and is written in php, which allows you to use it directly in your browser. Those. there should not be any problems here, if they still arise, write in the comments - we'll figure it out.

Let's start creating a database dump. Open phpMyAdmin, select the database we need and click on the tab " Export". As a result, you should get something like this:

Here you need to select all tables (well, or those in which you want to roll back changes) and set the radio button to the SQL value. In the Structure section, you can mark the first field "Add DROP TABLE / VIEW / PROCEDURE / FUNCTION / EVENT" in order to delete the corresponding tables in the destination database before importing the dump, if it is transferred for the first time - you can not mark the checkbox. In the same window below we see the "Save as file" section:

Actually mark "Save as file" and write the desired file name. A database dump can be archived, but it is usually not large enough to make it worthwhile to compress the file. We press the "GO" button, and we get the standard window for saving the file in the browser:  . The saved file is the database dump. If you open it in the same notepad, you will see at the beginning of the line like

. The saved file is the database dump. If you open it in the same notepad, you will see at the beginning of the line like

PhpMyAdmin SQL Dump

-- version 3.2.3

-- http://www.phpmyadmin.net

The file itself consists of queries to create and insert values into a table written in SQL. There is absolutely no shamanism, everything is very obvious. Now the next task is to import the dump. With her, too, nothing complicated. Go to the hosting control panel, find phpMyAdmin there, run it. You may have to specify a username / password - depending on the settings of the hoster. Well, then the actions are similar - select the desired database, click the "Import" tab, we see the following window:

In the "File to import" section, using the "Browse" button, specify the database dump file, below we put down the encoding - in joomla it is utf-8 by default. Below is the "Partial import" section and the "Number of records (queries) to skip from star" field where you can enter a numerical value - the number of rows processed in one pass, in case the entire database does not have time to be imported during the lifetime of the script. The default value "0" corresponds to the import of the entire database in one run of the script. In my practice, I have not had to deal with the fact that the base does not have time to fill up in one pass, although this does not mean that such cases do not happen. The values of the remaining fields do not need to be changed, just press the "GO" button. That's all, the database dump is imported.

And finally, a few clarifications.

1) Why did I describe the way to import / export all tables and not the entire database? The fact is that most often you have to deal with the import / export procedure when transferring data from a web server to a local machine and vice versa. But very often on the local machine, access is used by the "root" account without a password. We can't afford this with hosting. And the database just does contain not only data, but also "privileges" - the rights to access certain users to it. In order not to have to create / delete users every time and assign access rights to them, an approach is used when only the structure of tables and the data in it are written to the database dump. If just a backup is created for the same server, it is quite possible to dump the entire database.

2) Why phpMyAdmin? The point here is not at all that there is no alternative to it - there are just a lot of them, there are more functional things and simpler ones. It's just that phpMyAdmin is included in the standard set for most virtual hosting, i.e. you don't have to do anything to install it. Those. we just open it and dump the database. And of course, using phpMyAdmin can be useful for a lot of tasks - the same query testing, viewing the structure of tables, data in them.

Well, that's actually all that I wanted to tell in this article. Comments are welcome as always.

This utility allows you to get a dump (``snapshot"") of the contents of a database or collection of databases to create a backup copy or transfer data to another SQL database server (not necessarily a MySQL server). The dump will contain a set of SQL commands to create and/or populate tables.

If the backup is created on the server, then instead of the described utility, you should use mysqlhotcopy . See section 4.8.6 mysqlhotcopy , Copying MySQL databases and tables.

Shell> mysqldump database or mysqldump --databases DB1 or mysqldump --all-databases

If you do not specify table names or use the --databases or --all-databases options, then a dump of the database as a whole (respectively, all databases) will be obtained.

A list of options supported by your particular version of the mysqldump utility can be obtained by running the mysqldump --help command.

Note that the mysqldump utility, used without the --quick or --opt options, will load the entire result into memory before dumping the result of the information fetch. This can create problems when dumping a large database.

Note that you should not use the --opt or -e options if you are going to use a new copy of mysqldump to get the dump and then replay it on a very old MySQL server.

The mysqldump utility supports the following options:

Add-locks Add LOCK TABLES before execution and UNLOCK TABLE after every table dump (to speed up MySQL access). --add-drop-table Add a DROP TABLE command before each CREATE TABLE command. -A, --all-databases Dump all databases. Similar to the --databases option, specifying all databases. -a, --all Enable all MySQL-specific object creation options. --allow-keywords Allow creating column names that match keywords. The absence of conflicts is ensured by adding the table name as a prefix to each column name. -c, --complete-insert Use complete INSERT commands (with column names). -C, --compress Compress all information between client and server if they both support compression. -B, --databases Dump multiple databases. Note the difference in usage: in this case no tables are specified. All argument names are treated as database names. USE db_name statement; included in the output before each new database. --delayed Use the INSERT DELAYED command when inserting rows. -e, --extended-insert Use INSERT command with new multi-line syntax (improves compactness and performance of input statements). -#, --debug[=option_string] Track program progress (for debugging). --help Display help information and exit the program. --fields-terminated-by=... --fields-enclosed-by=... --fields-optionally-enclosed-by=... --fields-escaped-by=... --lines- terminated-by=... These options are used in conjunction with the -T option and have the same meaning as the corresponding statements for LOAD DATA INFILE . See section 6.4.9 Syntax of the LOAD DATA INFILE statement . -F, --flush-logs Flush the system log data from the MySQL server buffer to disk before starting the dump. -f, --force, Continue even if you get an SQL error while dumping a table. -h, --host=.. Dump MySQL server data on the specified host. The default host value is localhost . -l, --lock-tables. Lock all tables before starting the dump. Tables are locked with a READ LOCAL statement to allow parallel writes for MyISAM tables. Note that when dumping a collection of databases, the --lock-tables option locks the tables in each database individually. Thus, using this option does not guarantee that tables will be logically consistent within these databases. In different databases, when a dump is performed, tables can be in completely different states. -K, --disable-keys Add the expression /*!40000 ALTER TABLE tb_name DISABLE KEYS */; and /*!40000 ALTER TABLE tb_name ENABLE KEYS */; in the result output. This will speed up the loading of data into the MySQL 4.0 server, since indexes are created after all the data has been entered. -n, --no-create-db CREATE DATABASE /*!32312 IF NOT EXISTS*/ db_name; will be missing. This line will be added in any case when using the --databases or --all-databases options. -t, --no-create-info Do not record table creation information (CREATE TABLE command). -d, --no-data Do not write information from table rows. This is very useful for dumping the structure of a table! --opt Same as --quick --add-drop-table --add-locks --extended-insert --lock-tables . Should give the fastest read dump on the MySQL server. -pyour_pass, --password[=your_pass] The password to use when connecting to the server. If the =your_pass argument is not provided, mysqldump will prompt you for a password. -P port_num, --port=port_num The TCP/IP port number used to connect to the host (used when connecting to hosts other than localhost , which uses Unix sockets). -q, --quick Dump directly to stdout without buffering the request. The mysql_use_result() function is used for this. -Q, --quote-names Quote table and column names without ``" characters. -r, --result-file=... Output the specified file directly. This option should be used in MS DOS as it prevents converting the newline character "\n" to the sequence "\n\r" (newline + carriage return). --single-transaction This option issues a BEGIN SQL command before dumping data from the server. It is most commonly used with InnoDB tables and the READ_COMMITTED transaction isolation level, as it is in this mode that you can get a dump with a consistent database state after executing the BEGIN command without blocking any applications. When using this option, be aware that only transactional tables will be in a consistent state when dumping. some MyISAM - or HEAP - tables may still change their state when using this option. The --single-transaction option was added in version 4.0.2. It is mutually exclusive with the --lock-tables option because the LOCK TABLES command aborts the previous transaction. -S /path/to/socket, --socket=/path/to/socket Socket file to connect to localhost (default host). --tables Overrides the --databases (-B) option. -T, --tab=path-to-some-directory For each given table, creates a file a `table_name.sql" containing the SQL CREATE commands for creating the table, and a file `table_name.txt" with the table data. The `.txt" file is formatted according to the --fields-xxx and --lines--xxx options. Note: This option only works if the mysqldump utility is running on the same machine as the mysqld daemon and the user/group that started this mysqld thread (usually the mysql user and mysql group) must have create/write file permission on the specified address. -u user_name, --user=user_name MySQL server username used when connecting to the server. The default value is the Unix username. -O var=option, --set-variable var=option Set variable values. The variables available for use are listed below. -v, --verbose Extended output mode. Displaying more detailed information about the program. -V, --version Display version information and exit the program. -w, --where="where-condition" Dump only selected entries. Note that the quotes are required. "--where=user="jimf"" "-wuserid>1" "-wuserid -X, --xml Dump the database as XML. -x, --first-slave Lock all tables in all databases. -O net_buffer_length=#, where # The most common use of the mysqldump utility is to take a backup of all databases. 4.4.1 Backing up databases. mysqldump --opt database > backup-file.sql

mysql database

Mysql -e "source /patch-to-backup/backup-file.sql" database

This utility is often used to transfer information from a database to another MySQL server:

mysqldump --opt database | mysql --host=remote-host -C database

It is quite possible to dump multiple databases with a single command:

mysqldump --databases database1 > my_databases.sql

If you need a dump of all databases, you can use:

mysqldump --all-databases > all_databases.sql