Comparison with mobile and desktop processors

In mid-January, we conducted our first study of a system on the new Intel Sandy Bridge platform. In that test we used a prototype Toshiba A665-3D laptop with a new NVIDIA graphics adapter and NVIDIA Optimus technology. However, as the saying goes, they outsmarted themselves: the discrete graphics could not be enabled on that laptop. Therefore, there was no point in testing applications that rely on graphics (primarily games). And in general, some things cannot be adequately tested on an early, poorly performing sample.

Therefore, we decided to retest on another system, and the opportunity soon came. We tested another laptop, the Hewlett-Packard DV7, based on the new platform and equipped with a new generation of AMD graphics. True, by the time the tests were completed, news had appeared about the infamous bug in the chipset (south bridge), because of which devices already sold (including laptops) are subject to recall. So here, too, the results are not quite official in the strict sense of the word (at the very least, Hewlett-Packard asked us to return the laptop), but we understand that this bug (which is largely theoretical anyway) cannot affect the test results.

Nevertheless, it was not worth publishing a separate article just to repeat the measurements and call them final. Therefore, in this review we set ourselves several tasks:

- check the results of the new system in the "mobile" methodology;

- check how Intel Turbo Boost works on a different system with different cooling;

- compare mobile and desktop Sandy Bridge processors using our desktop methodology for testing computer systems.

Well, let's move on to testing.

Configuration of test participants according to the methodology for mobile systems

As already noted, comparing the performance of mobile computer subsystems is much more difficult, because laptops are provided for testing as finished products. It is hard to draw conclusions, because more than one component can influence the difference in performance.

Let's look at the participants, or more precisely, at how their lineup has changed since the previous test. First, we decided to remove the Core i5-540M from the comparison. It belongs to a weaker dual-core line, and its counterparts will be other models in the Sandy Bridge line. If you need its results, they can be found in the previous article. Instead, the comparison includes the Hewlett-Packard EliteBook 8740w, also based on a Core i7-720QM processor, plus today's main test system, the Hewlett-Packard Pavilion DV7 with a Sandy Bridge Core i7-2630QM processor.

Thus, two models with the Core i7-720QM and two with the Core i7-2630QM take part in the test. This not only allows us to compare systems on the older and newer processors, but also to check whether two systems on the same processor perform at the same level.

Now let's move on to the configurations of the laptops participating in the test.

| Notebook name | HP 8740w | ASUS N53Jq | Toshiba A665-3D | HP DV7 |
|---|---|---|---|---|
| CPU | Core i7-720QM | Core i7-720QM | Core i7-2630QM | Core i7-2630QM |
| Number of cores | 4 (8 threads) | 4 (8 threads) | 4 (8 threads) | 4 (8 threads) |
| Rated frequency | 1.6 GHz | 1.6 GHz | 2 GHz | 2 GHz |
| Max. Turbo Boost frequency | 2.6* GHz | 2.6* GHz | 2.9* GHz | 2.9* GHz |
| LLC size | 6 MB | 6 MB | 6 MB | 6 MB |
| RAM | 10 GB | 10 GB | 4 GB | 4 GB |
| Video subsystem | NVIDIA Quadro FX 2800M | NVIDIA GT 425M | Intel integrated | ATI 6570 |

* For the Core i7-720QM, the Turbo Boost frequency is given for all four cores under load. For the Core i7-2630QM, it is the single-core maximum: with all four cores loaded the frequency rises to 2.6 GHz, with two cores loaded to 2.8 GHz, and with one core loaded to the maximum of 2.9 GHz.

Let's analyze the processor data needed for the comparison. First, the manufacturer claims that the internal architecture of the Sandy Bridge processors has been optimized, which should bring some increase in overall performance.

The number of cores and Hyper-Threading threads is the same for all participants. However, the clock speeds differ: the 720QM runs at only 1.6 GHz, while the new processors run at 2 GHz. The maximum clock frequencies, however, differ less than it seems. The point is that for the 720QM the frequency is given for four loaded cores, and for the 2630QM for one. With four cores loaded, the 2630QM's maximum frequency is the same 2.6 GHz. In other words, in the "overclocked" state the processors should run at the same frequency (until thermal control kicks in). But Sandy Bridge has a more advanced version of Intel Turbo Boost, which can sustain the increased frequency longer, so it may have an advantage. It is impossible to predict exactly how Turbo Boost will behave, however, because it depends on too many external factors.
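Each Turbo Boost step ("bin") adds one multiplier step of the base clock, which is nominally 100 MHz on Sandy Bridge. A minimal Python sketch of the Core i7-2630QM ceilings quoted above; the multiplier table is derived from the frequencies given in this article, and the three-core value is not stated here, so it is left out:

```python
# Sketch: Core i7-2630QM Turbo Boost ceilings by number of loaded cores.
# Assumptions: nominal 100 MHz base clock (BCLK); multipliers derived from
# the 2.0 GHz rated frequency and the 2.6/2.8/2.9 GHz turbo figures above.

BCLK_MHZ = 100
BASE_MULTIPLIER = 20  # 2.0 GHz rated frequency

# Loaded cores -> maximum turbo multiplier (three-core value not given in the text)
TURBO_MULTIPLIER = {1: 29, 2: 28, 4: 26}


def max_turbo_mhz(active_cores: int) -> int:
    """Return the turbo ceiling in MHz for the given number of loaded cores."""
    return TURBO_MULTIPLIER[active_cores] * BCLK_MHZ


for cores in sorted(TURBO_MULTIPLIER):
    bins = TURBO_MULTIPLIER[cores] - BASE_MULTIPLIER
    print(f"{cores} core(s): {max_turbo_mhz(cores)} MHz (+{bins} bins)")
```

With all four cores busy the sketch gives 2600 MHz, six bins above the rated frequency, which is why the "overclocked" frequencies of the two generations end up equal under full load.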

Let's go directly to the tests.

Comparing the Sandy Bridge line with the previous generation using the mobile application suite. Repeatability of results

For these tests we used our 2010 methodology for testing laptops in real applications. Compared with the desktop methodology it uses a reduced set of applications, but the remaining ones run with the same settings (the exceptions are games, whose settings were changed considerably, and the test task parameters for Photoshop). Therefore, the results of individual tests can be compared with those of desktop processors.

The ratings of individual application groups in this article cannot be compared directly with the desktop rating data. Not all applications of the methodology are run when testing laptops, so the rating is calculated differently. The ratings of the desktop systems participating in this comparison have been recalculated accordingly.

Let me note right away that each system was tested twice, and the system was reinstalled and reconfigured between runs. In other words, if some test results seem strange, they are at least repeatable: on two different freshly installed systems with an up-to-date set of drivers.
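The group ratings in the tables of this article are consistent with a plain arithmetic mean of the individual test ratings, rounded half up: for example, (122 + 129) / 2 = 125.5 is shown as 126. This is our reading of the published numbers, not a documented formula. A short Python check using the 3D visualization figures (the function name is ours):

```python
from decimal import Decimal, ROUND_HALF_UP

# Individual ratings per system (Lightwave, SolidWorks) from the 3D visualization group
ratings = {
    "HP 8740w": [122, 129],
    "ASUS N53Jq": [109, 115],
    "Toshiba A665-3D": [101, 51],
    "HP DV7": [155, 112],
}


def group_rating(values):
    """Arithmetic mean rounded half up (assumed rule, inferred from the tables)."""
    mean = Decimal(sum(values)) / len(values)
    return int(mean.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


for system, values in ratings.items():
    print(system, group_rating(values))
```

Decimal is used so that halves such as 125.5 and 133.5 round up as in the published tables, rather than to the nearest even number as Python's built-in `round` does.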

Let's start with professional applications.

3D visualization

This group contains applications that are demanding on both processor performance and graphics.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| Lightwave - work | 20.53 | 22.97 | 24.87 | 16.17 |
| SolidWorks - work | 52.5 | 58.83 | 133.12 | 60.45 |
| Lightwave - rating | 122 | 109 | 101 | 155 |
| SolidWorks - rating | 129 | 115 | 51 | 112 |
| Group - rating | 126 | 112 | 76 | 134 |

Interestingly, both systems of the “second wave” significantly outperform the systems tested a month and a half ago. What is behind this: the drivers? The considerably more powerful graphics in both cases? Even leaving aside the old Sandy Bridge sample, the same pattern is visible when comparing the two Core i7-720QM systems.

Now it's safe to say that the new generation is faster, with the exception of the strange SolidWorks results; we will return to them when discussing the results in the desktop methodology.

3D rendering

Let's see how things stand with rendering the final scene. This rendering is performed by the CPU.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| Lightwave | 138.58 | 131.56 | 269.89 | 90.22 |
| 3Ds Max | 0:10:04 | 0:10:06 | 0:21:56 | 0:07:45 |
| Lightwave - rating | 95 | 101 | 49 | 146 |
| 3Ds Max - rating | 113 | 112 | 52 | 147 |
| Group - rating | 104 | 107 | 51 | 147 |

Let me remind you that the Toshiba sample showed very poor results in this test. In a fully functional system, however, the Sandy Bridge processor delivers a significant advantage in both graphics packages. In Lightwave, as you can see, there is a difference between the two Core i7-720QM systems, but in 3Ds Max there is almost none.

Both tests make it clear that the Core i7-2630QM is significantly faster than the representatives of the previous generation.

Computing

Let's look at the performance of processors in applications related to mathematical calculations.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| SolidWorks | 46.36 | 45.88 | 44.02 | 38.42 |
| MATLAB | 0.0494 | 0.0494 | 0.0352 | 0.0365 |
| SolidWorks - rating | 111 | 112 | 117 | 134 |
| MATLAB - rating | 113 | 113 | 159 | 153 |
| Group - rating | 112 | 113 | 138 | 144 |

The math tests show no difference between the two Core i7-720QM systems. From this we can draw a preliminary conclusion that these applications are barely sensitive to the other system components and the software environment.

The new-generation processor is faster, but the gap here is not as large, as the ratings show especially clearly. For some reason the DV7 is slightly slower than the A665 in the MATLAB test.

Let's see if in other tests the gap between the new generation and the old one will be approximately the same.

Compilation

This test measures program compilation speed with the Microsoft Visual Studio 2008 compiler. It responds well to processor speed and cache size, and it can also use multiple cores.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| Compile | 0:06:29 | 0:06:24 | 0:04:56 | 0:04:54 |
| Compile - rating | 123 | 125 | 162 | 163 |

The difference between systems on the same processor is small; I think it can be attributed to measurement error. The performance difference between the two generations is significant.
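For time-based tests, the ratings behave as the inverse of execution time, so the ratio of two ratings should match the inverse ratio of the times. A quick Python check on the compile results of the HP 8740w and the HP DV7 (the helper name is ours):

```python
def to_seconds(hms: str) -> int:
    """Convert an 'h:mm:ss' string from the tables to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


old_time, new_time = to_seconds("0:06:29"), to_seconds("0:04:54")  # HP 8740w, HP DV7
old_rating, new_rating = 123, 163

speedup_from_time = old_time / new_time        # about 1.32
speedup_from_rating = new_rating / old_rating  # about 1.33
print(f"{speedup_from_time:.2f} vs {speedup_from_rating:.2f}")
```

Both ways of measuring give roughly a 32-33% advantage for the new generation in compilation.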

Java application performance

This benchmark measures the execution speed of a set of Java applications. The test is sensitive to processor speed and responds very well to additional cores.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| Java | 79.32 | 83.64 | 111.8 | 105.45 |
| Java - rating | 90 | 94 | 126 | 119 |

Here the results of the two most recently tested laptops are slightly but noticeably lower than those of their counterparts. We will not guess why this happened, but I emphasize that the results were repeated twice. The difference between processors of different generations is about the same as in the previous test.

Let's move on to everyday productivity tasks: working with video, sound, and photos.

2D graphics

Let me remind you that only two tests remain in this group, and they are quite different. ACDSee converts a set of photos from RAW to JPEG, and Photoshop performs a series of image processing operations (applying filters, etc.). The applications depend on processor speed but use multiple cores only to a limited extent.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| ACDSee | 0:07:01 | 0:06:55 | 0:05:11 | 0:04:52 |
| Photoshop | 0:01:17 | 0:01:17 | 0:00:49 | 0:00:51 |
| ACDSee - rating | 108 | 110 | 146 | 156 |
| Photoshop - rating | 426 | 426 | 669 | 643 |
| Group - rating | 267 | 268 | 408 | 400 |

ACDSee shows some instability of results, but in general, the difference between generations is in line with the trend, it is even slightly larger.

Photoshop ratings should be disregarded because of the modified test task. They also distort the group rating. But if you look at the execution times, you can see that the advantage is about the same.

Audio encoding in various formats

Encoding audio into various formats is a fairly simple task for modern processors. The dBpowerAmp wrapper is used for encoding. It can use multiple cores (by launching additional encoding threads). The test result is expressed in its own points, which are inversely proportional to the encoding time, i.e. the higher, the better.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| Apple Lossless | 148 | 159 | 241 | 238 |
| FLAC | 199 | 214 | 340 | 343 |
| Monkey's Audio | 143 | 155 | 239 | 235 |
| MP3 (LAME) | 89 | 96 | 150 | 152 |
| Nero AAC | 85 | 91 | 135 | 142 |
| Ogg Vorbis | 60 | 65 | 92 | 90 |
| Apple Lossless - rating | 90 | 97 | 147 | 145 |
| FLAC - rating | 99 | 106 | 169 | 171 |
| Monkey's Audio - rating | 97 | 105 | 163 | 160 |
| MP3 (LAME) - rating | 103 | 112 | 174 | 177 |
| Nero AAC - rating | 104 | 111 | 165 | 173 |
| Ogg Vorbis - rating | 103 | 112 | 159 | 155 |
| Group - rating | 99 | 107 | 163 | 164 |

The test is quite simple but illustrative. Quite unexpectedly, a difference between the two Core i7-720QM systems appeared here, and not in favor of the recently tested one. The two Sandy Bridge systems showed almost the same performance. As you can see, the advantage of the new processors is very significant, larger than in the previous groups of tests.

Video encoding

Three of the four tests encode a video clip into a specific video format. The Premiere test stands apart: in this application the script creates a video, including applying effects, rather than just encoding it. Unfortunately, Sony Vegas did not work on some systems, so we removed its results from this article.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| DivX | 0:05:02 | 0:05:23 | 0:04:26 | 0:04:18 |
| Premiere | 0:05:04 | 0:04:47 | 0:03:38 | 0:03:35 |
| x264 | 0:10:29 | 0:10:01 | 0:07:45 | 0:07:35 |
| XviD | 0:03:31 | 0:03:34 | 0:02:34 | 0:02:30 |
| DivX - rating | 86 | 80 | 98 | 101 |
| Premiere - rating | 101 | 107 | 140 | 142 |
| x264 - rating | 100 | 105 | 135 | 138 |
| XviD - rating | 87 | 86 | 119 | 123 |
| Group - rating | 94 | 95 | 123 | 126 |

The DivX encoding results stand apart. For some reason this test shows a very large difference between the two 720QM systems and a very small difference between the old and new generations.

In the other tests the gap is significant, and the difference between generations roughly matches the general trend. Interestingly, in Premiere the gap is about the same as in plain encoding. This test also shows a notable difference between the two 720QM systems.

And finally, several types of household tasks.

Archiving

Archiving is a fairly simple computational task in which all processor components are actively used. 7-Zip is more advanced: it can use any number of cores and generally uses the processor more efficiently. WinRAR uses up to two cores.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| 7-Zip | 0:01:57 | 0:01:55 | 0:01:30 | 0:01:27 |
| WinRAR | 0:01:50 | 0:01:48 | 0:01:25 | 0:01:25 |
| Unpack (RAR) | 0:00:50 | 0:00:49 | 0:00:42 | 0:00:41 |
| 7-Zip - rating | 115 | 117 | 149 | 154 |
| WinRAR - rating | 135 | 138 | 175 | 175 |
| Unpack (RAR) - rating | 140 | 143 | 167 | 171 |
| Group - rating | 130 | 133 | 164 | 167 |

The difference between systems with identical processors is very small. Again, in the comparison of the two 720QM systems, the ASUS N53Jq is slightly but consistently faster than the 8740w. The new-generation processors are significantly faster, and the gap between the two generations is generally the same as in most other groups.

Performance in Browser Tests

These tests are also quite simple. Both measure JavaScript performance, which is perhaps the most performance-demanding part of a browser engine. Note that the V8 test reports its result in points, while SunSpider reports it in milliseconds. Accordingly, for V8 a higher number is better, and for SunSpider a lower one.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| Google V8, Chrome | 6216 | 6262 | 7414 | 7366 |
| Google V8, Firefox | 556 | 555 | 662 | 654 |
| Google V8, IE | 122 | 123 | 152 | 147 |
| Google V8, Opera | 3753 | 3729 | 4680 | 4552 |
| Google V8, Safari | 2608 | 2580 | 3129 | 3103 |
| SunSpider, Firefox | 760 | 747 | 627 | 646 |
| SunSpider, IE | 4989 | 5237 | 4167 | 4087 |
| SunSpider, Opera | 321 | 322 | 275 | 275 |
| SunSpider, Safari | 422 | 421 | 353 | 354 |
| Google V8 - rating | 134 | 134 | 162 | 160 |
| SunSpider - rating | 144 | 143 | 172 | 172 |
| Group - rating | 139 | 139 | 167 | 166 |
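Because the two benchmarks point in opposite directions, one of them has to be inverted before the results can be compared. A minimal Python sketch comparing the HP DV7 (Core i7-2630QM) with the HP 8740w (Core i7-720QM) on the Chrome V8 and Firefox SunSpider results from the table above; the function name is hypothetical:

```python
def relative_speed(new: float, old: float, higher_is_better: bool) -> float:
    """Return how many times faster 'new' is than 'old' (>1 means faster)."""
    return new / old if higher_is_better else old / new


# HP DV7 vs HP 8740w, values from the browser table
v8_chrome = relative_speed(7366, 6216, higher_is_better=True)          # points
sunspider_firefox = relative_speed(646, 760, higher_is_better=False)   # milliseconds
print(f"V8/Chrome: {v8_chrome:.3f}, SunSpider/Firefox: {sunspider_firefox:.3f}")
```

After the inversion, both benchmarks show roughly an 18% advantage for the new processor.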

Comparison in HD Play

This test has been removed from the desktop rating, but it is still relevant for mobile systems. Even if the system copes with decoding complex video, for a laptop it is very important how many resources the task requires, because both heat output and battery life depend on it.

| Test | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| H.264 hardware (CPU load) | 2.6 | 2.5 | 2.3 | 1.2 |
| H.264 software (CPU load) | 19.7 | 18.9 | 13.4 | 14 |
| H.264 hardware - rating | 631 | 656 | 713 | 1367 |
| H.264 software - rating | 173 | 180 | 254 | 243 |

In absolute terms, the difference between the two 720QM systems is small, although it may look significant in the ratings. The difference between the two Core i7-2630QM systems with hardware acceleration is interesting: the system with AMD graphics shows a lower load, although the Intel adapter also delivered very good results. In software mode both systems handle decoding well, with low CPU usage. With Sandy Bridge processors the system load is predictably lower.
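In this test a lower CPU load is better, and the published hardware-decoding ratings look inversely proportional to it: rating × load comes out at about 1640 for all four laptops. A short Python check of that observation (our inference from the table, not a documented formula):

```python
# (CPU load, rating) pairs for H.264 hardware decoding, from the table above
hardware = {
    "HP 8740w": (2.6, 631),
    "ASUS N53Jq": (2.5, 656),
    "Toshiba A665-3D": (2.3, 713),
    "HP DV7": (1.2, 1367),
}

products = {name: load * rating for name, (load, rating) in hardware.items()}
for name, value in products.items():
    print(f"{name}: {value:.0f}")

# If rating = K / load, the products should be nearly identical
spread = max(products.values()) - min(products.values())
print(f"spread: {spread:.1f}")
```

This also explains why the DV7's rating roughly doubles: its CPU load in hardware mode is about half that of the other systems.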

Let's look at the average score of the systems that participated in the tests.

| Rating | HP 8740w Core i7-720QM | ASUS N53Jq Core i7-720QM | Toshiba A665-3D Core i7-2630QM | HP DV7 Core i7-2630QM |
|---|---|---|---|---|
| Overall rating of the system | 128 | 129 | 158 | 173 |

Although in some tests the difference between the two systems with processors Intel Core i7-720QM was noticeable, in general they showed almost identical results.

The performance of the fully functional Core i7-2630QM system is much higher than that of the sample we tested earlier. These results already allow us to draw conclusions about the performance of the platform.

And the conclusion is that the new Sandy Bridge platform is roughly 35% faster (depending on the applications used) than the previous-generation platform. Of course, these conclusions are not final: at a minimum, the chips run at different frequencies. And in general, with the new Intel processors the very concept of “clock frequency” has become rather illusory, because of Intel Turbo Boost technology.
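The roughly 35% figure corresponds to the overall ratings of the production laptops. A small Python sketch comparing the HP DV7 with the two Core i7-720QM systems (the Toshiba prototype is left out here; the function name is ours):

```python
# Overall ratings from the summary table
overall = {"HP 8740w": 128, "ASUS N53Jq": 129, "Toshiba A665-3D": 158, "HP DV7": 173}


def gain_percent(new: int, old: int) -> float:
    """Percentage advantage of 'new' over 'old'."""
    return (new / old - 1) * 100


for old in ("HP 8740w", "ASUS N53Jq"):
    print(f"HP DV7 vs {old}: {gain_percent(overall['HP DV7'], overall[old]):.1f}%")
```

This gives about 35.2% over the HP 8740w and about 34.1% over the ASUS N53Jq.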

Checking the operation of the Intel Turbo Boost system

The Sandy Bridge processors implement a new version of Intel Turbo Boost technology, which has much finer control over the processor clock speed. The monitoring and control system has become much more complex and intelligent. It can now take into account many parameters: which cores are loaded and how heavily, and the temperature of the processor as a whole and of its individual components (that is, the system can detect and prevent localized overheating).

Since temperature and load monitoring has become more efficient, the processor needs a smaller safety margin to work stably under any external conditions (primarily temperature). This allows its capabilities to be used more fully. In effect, the system is a form of controlled overclocking: the operating frequency increases, while the control logic keeps the processor within safe operating conditions so that it does not lose stability or fail. If a processor running at an increased frequency gets too hot, the monitoring system automatically lowers the frequency and supply voltage to safe levels.

Furthermore, the new Turbo Boost control system can take thermal inertia into account. When the processor is cold, the frequency can rise very high for a short time, and the processor may even exceed the heat dissipation limit (TDP) specified by the manufacturer. If the load is short-term, the processor will not have time to heat up to critical temperatures; if the load lasts longer, the processor heats up and the system lowers the frequency to bring the temperature back within safe limits.
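To make the idea concrete, here is a deliberately simplified Python model of such a controller. It is not Intel's algorithm: the temperature limit and the heating and cooling rates are hypothetical values chosen only for illustration. It shows the behavior described above: a cold processor starts at the turbo multiplier, and if the load lasts long enough for the temperature to reach the limit, the controller steps the multiplier down toward the guaranteed base value; with a stronger cooler it never has to.

```python
# Toy model of temperature-limited turbo (NOT Intel's real algorithm).
# All constants below are hypothetical and chosen only for illustration.
BASE_MULT, TURBO_MULT = 20, 26   # 2.0 GHz base, 2.6 GHz four-core turbo
TEMP_LIMIT = 90.0                # hypothetical throttling threshold, deg C


def simulate(steps: int, cooling_per_step: float) -> list[int]:
    """Return the multiplier chosen at each step under constant full load."""
    temp, mult, history = 50.0, TURBO_MULT, []
    for _ in range(steps):
        # Heat generated grows with the multiplier; the cooler removes a fixed amount.
        temp += 0.1 * mult - cooling_per_step
        temp = max(temp, 40.0)
        if temp > TEMP_LIMIT and mult > BASE_MULT:
            mult -= 1            # too hot: drop one bin
        elif temp < TEMP_LIMIT - 5 and mult < TURBO_MULT:
            mult += 1            # cooled down: regain one bin
        history.append(mult)
    return history


weak = simulate(300, cooling_per_step=2.0)    # modest cooler
strong = simulate(300, cooling_per_step=3.0)  # more capable cooler
print(weak[0], weak[-1], strong[-1])
```

With the modest cooler the model holds the turbo multiplier for a while ("inertia") and then settles at the base multiplier; with the stronger cooler it stays at 26 throughout.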

Thus, the Sandy Bridge processor has three operating states:

- Power-saving mechanisms are active; the processor runs at a low frequency and reduced supply voltage.

- Intel Turbo Boost is active; the processor accelerates to the maximum allowed turbo frequency (which depends, among other things, on how many cores are loaded and how heavily), and the supply voltage increases. The processor runs at this clock speed as long as the core temperature allows.

- When the load or temperature thresholds are exceeded, the processor returns to the clock frequency at which it is guaranteed to work stably.

For example, for the 2630QM this frequency is 2 GHz. It is listed in the specifications, and the manufacturer guarantees that the processor can sustain it indefinitely, provided the specified external conditions are met. Intel Turbo Boost allows the frequency to be raised further, but how it behaves and what frequency it reaches depend on external conditions, so the manufacturer cannot guarantee that it will always work the same way. The actual behavior of a specific system, however, can be observed, as we did in the first review. Let me remind you that in the first test the processor worked with the following parameters:

- Idle: 800 MHz, supply voltage 0.771 V.

- Load (all cores, maximum): frequency 2594 MHz (multiplier 26), supply voltage 1.231 V.

- Load (after about 5 minutes of operation) - either 2594 MHz (multiplier 26) or 2494 MHz (multiplier 25).

- Load (after about 7-8 minutes of work) - 1995 MHz (multiplier 20). The voltage is 1.071 V. The system returned to the stable operating parameters set by the manufacturer.
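These readings are consistent with frequency = multiplier × base clock, where the actual base clock is slightly below the nominal 100 MHz. A short Python check that estimates the base clock from each measured pair (the nominal 100 MHz reference is our assumption about the platform default):

```python
# Measured (frequency in MHz, multiplier) pairs from the first review
readings = [(2594, 26), (2494, 25), (1995, 20)]

# Frequency = multiplier x base clock, so each pair gives an estimate of the base clock
estimates = [freq / mult for freq, mult in readings]
for (freq, mult), bclk in zip(readings, estimates):
    print(f"{freq} MHz / {mult} = {bclk:.2f} MHz")

avg_bclk = sum(estimates) / len(estimates)
print(f"average base clock: {avg_bclk:.2f} MHz")  # slightly below the nominal 100 MHz
```

All three pairs point to a base clock of about 99.76 MHz, which is why the monitoring tools report 2594 MHz rather than a round 2600 MHz.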

Let's see how long the overclocked Hewlett-Packard DV7 will last.

We launch programs for monitoring the state of the processor.

The operating frequency and voltage are the same as in the previous test. Let's look at the temperature readings.

Everything is quiet, and temperatures are relatively low at 49 °C. For a high-performance processor this is not much. Note the temperature difference between the first and fourth cores.

We launch the load test. Let me remind you that it loads all cores at once, so we will not see the maximum Intel Turbo Boost frequency (2.9 GHz).

As you can see, the voltage has risen to 1.211 Volts, the frequency has become 2594 MHz due to the changed multiplier, now it is 26. The processor starts to rapidly gain temperature, the cooling system fan starts to sound louder and louder.

Let's see how long the processor lasts before it drops back to the stock frequency.

A minute has passed, it is clear that the temperatures are beginning to stabilize.

Five minutes have passed and temperatures have stabilized. For some reason the temperatures of the first and fourth cores differ by 10 degrees. The difference is present in all tests and is noticeable even at idle. I cannot say why this happens.

It's been 15 minutes since the test started. Temperatures are stable, the cooling system copes. The clock frequency remains at 2.6 GHz.

48 minutes have passed. The laptop continues to work under load, and temperatures are stable (they rose by only a degree). The clock frequency is unchanged.

Well, at least in winter and in a not very hot room, the DV7 can operate at the maximum available frequency indefinitely. The power of the cooling system is enough for Intel Turbo Boost to keep the maximum available "overclocking" frequency without any problems. Theoretically, it would be possible to overclock the processor a little more.

This conclusion differs from the previous results. Here is one more reason to choose a quality laptop: if the designers did a good job on the cooling system, you get dividends not only in the form of a high-quality, sturdy case but also in performance!

Well, let's move on to the second very interesting part of the article: a comparison of the Core i7-2630QM mobile processor with desktop processors of the Sandy Bridge series in a desktop testing methodology.

Comparison of Core i7-2630QM mobile processor performance with desktop processors of the Sandy Bridge series

For comparison, we use results from our study of Core i7 and Core i5 desktop processors based on Sandy Bridge.

Let's compare the participants' configurations by including information about the Core i7-2630QM in the table.

| CPU | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| Core codename | Sandy Bridge | Sandy Bridge | Sandy Bridge | Sandy Bridge | Sandy Bridge |
| Process technology | 32 nm | 32 nm | 32 nm | 32 nm | 32 nm |
| Core frequency (base/max), GHz | 2.8/3.1 | 3.1/3.4 | 3.3/3.7 | 3.4/3.8 | 2.0/2.9 |
| Base multiplier | 28 | 31 | 33 | 34 | 20 |
| Turbo Boost bins (1/2/3/4 active cores) | 3-2-2-1 | 3-2-2-1 | 4-3-2-1 | 4-3-2-1 | n/a |
| Cores/threads | 4/4 | 4/4 | 4/4 | 4/8 | 4/8 |
| L1 cache (I/D), KB | 32/32 | 32/32 | 32/32 | 32/32 | n/a |
| L2 cache, KB | 4×256 | 4×256 | 4×256 | 4×256 | n/a |
| L3 cache, MB | 6 | 6 | 6 | 8 | 6 |
| RAM | 2×DDR3-1333 | 2×DDR3-1333 | 2×DDR3-1333 | 2×DDR3-1333 | 2×DDR3-1333 |
| Intel HD Graphics core | 2000 | 2000 | 2000/3000 | 2000/3000 | 3000 |
| Graphics core frequency (max), MHz | 1100 | 1100 | 1100 | 1350 | 1100 |
| Socket | LGA1155 | LGA1155 | LGA1155 | LGA1155 | n/a |
| TDP | 95 W | 95 W | 95 W | 95 W | 45 W |

The clock frequency of the mobile processor is lower, which is to be expected. Its maximum Turbo Boost frequency (2.9 GHz) only slightly exceeds the base clock of the junior desktop Core i5-2300 (2.8 GHz) without Turbo Boost, and no more than that. On the other hand, its thermal package is much lower: 45 W versus 95 W, less than half. It also has a smaller last-level cache than the desktop Core i7, only 6 MB versus 8 MB. On the plus side, the mobile processor has four cores and eight threads, because it is a Core i7; this is at least some advantage over the junior desktop Core i5. Let's see how this plays out in practice.

Unfortunately, a full comparison was still not possible. Some packages from the desktop methodology would not start (for example, Pro/Engineer consistently hung on our test system), so their results had to be excluded from the rating. This means the rating itself differs from the ratings in the main article.
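The two key numbers in this comparison can be checked directly from the specification table. A small Python sketch (the dictionary layout is ours):

```python
# Figures from the specification table above
desktop_i5_2300 = {"base_ghz": 2.8, "max_ghz": 3.1, "tdp_w": 95}
mobile_i7_2630qm = {"base_ghz": 2.0, "max_ghz": 2.9, "tdp_w": 45}

tdp_ratio = desktop_i5_2300["tdp_w"] / mobile_i7_2630qm["tdp_w"]
# Compare the mobile chip's single-core turbo ceiling with the desktop chip's base clock
turbo_vs_base = mobile_i7_2630qm["max_ghz"] / desktop_i5_2300["base_ghz"]
print(f"TDP ratio: {tdp_ratio:.2f}x")
print(f"2630QM max turbo vs i5-2300 base: {turbo_vs_base:.3f}x")
```

The desktop chip is allowed about 2.1 times the thermal budget, while the mobile chip's best-case clock is only about 3.6% above the desktop chip's base frequency.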

Let's move on to the tests. The phrase “test did not start” means that the test did not start on our laptop, so the results of all test participants were removed. Ratings in this case are recalculated.

The results immediately show that the mobile processor loses quite seriously to the desktop ones: it cannot reach the performance level of even the junior processor in the new desktop line. In my opinion, the results of the desktop Core i7 are rather weak: it should be much more powerful than the Core i5 line, yet its results seem to follow the same roughly linear trend. The SolidWorks results are almost identical for all desktop systems. Does this test care at all about the processor's clock speed?

Let's look at the rendering speed of 3D scenes.

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| 3ds Max | 181 | 195 | 207 | 233 | 157 |
| Lightwave | 153 | 168 | 180 | 234 | 161 |
| Maya | 142 | 170 | 181 | 240 | 165 |
| Group - rating | 159 | 178 | 189 | 236 | 161 |

Here the situation is a little more encouraging: the mobile system did reach the level of the junior desktop processor. However, the desktop Core i7 is far ahead in all tests. For comparison, here are the absolute results of one of the tests, Maya. The result of this test is the time spent on the project, which is more tangible than the scores in other tests.

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| Maya | 00:08:47 | 00:07:20 | 00:06:52 | 00:05:11 | 00:07:34 |

As you can see, even with a not very long project calculation time, the difference is significant. In the case of more complex projects, it should be even more.

Let's move on to the next test.

Almost all of these applications rely on complex mathematical calculations, so the desktop line, with its higher clock speeds, is predictably ahead. At the same time, I am quite puzzled by the very small difference between the desktop Core i5-2500 and Core i7-2600; in some applications the more powerful processor even loses. Is Hyper-Threading really so inefficient in these applications that even the higher clock speed cannot compensate for the slowdown it causes? This is all the more interesting because the mobile processor has the same core and thread configuration as the 2600 series, and overall it is not far behind the junior desktop processor, given the difference in operating frequencies between them.

Now we move on to less professional, more everyday tests, starting with raster graphics. Unfortunately, one of the tests did not start, which again affected the overall picture.

And again, the mobile system is consistently just below the junior desktop solution, and only thanks to an unexpectedly high score in PhotoImpact; otherwise the picture would be even sadder. For clarity, here are the results for two packages in absolute numbers.

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| ACDSee | 00:04:20 | 00:03:59 | 00:03:46 | 00:03:34 | 00:04:57 |
| Photoshop | 00:03:36 | 00:03:15 | 00:03:07 | 00:02:58 | 00:04:00 |

This way you can estimate the specific difference in the execution time of the task.
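For example, the gap between the mobile Core i7-2630QM and the junior desktop Core i5-2300 can be expressed in seconds and percent. A short Python sketch using the times above (the helper name is ours):

```python
def to_seconds(hms: str) -> int:
    """Convert an 'hh:mm:ss' string from the table to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


results = {
    "ACDSee": {"Core i5-2300": "00:04:20", "Core i7-2630QM": "00:04:57"},
    "Photoshop": {"Core i5-2300": "00:03:36", "Core i7-2630QM": "00:04:00"},
}

extra_seconds = {}
for test, times in results.items():
    desktop = to_seconds(times["Core i5-2300"])
    mobile = to_seconds(times["Core i7-2630QM"])
    extra_seconds[test] = mobile - desktop
    print(f"{test}: +{mobile - desktop} s ({(mobile / desktop - 1) * 100:.0f}% longer)")
```

The mobile processor needs 37 s (about 14%) more in ACDSee and 24 s (about 11%) more in Photoshop than the slowest desktop model.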

Let's move on to the archiving tests. These are simple calculations that respond well to both clock speed and additional processor cores (although there are questions about the latter).

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| 7-Zip | 140 | 151 | 156 | 213 | 137 |
| WinRAR | 191 | 207 | 216 | 229 | 173 |
| Unpack (RAR) | 179 | 194 | 206 | 219 | 167 |
| Group - rating | 170 | 184 | 193 | 220 | 159 |

And again... The 7-Zip results show that multiple cores (even in the form of Hyper-Threading) give significant dividends. But clock speed apparently matters a lot too, because the mobile Core i7 with its eight threads again fell short of even the junior desktop processor. The same situation holds in the WinRAR tests. The desktop Core i7-2600, meanwhile, pulls far ahead in the 7-Zip test.

The compilation test again relies mainly on the processor's computational capabilities.

In the Java application performance test, the trend is basically confirmed, but the mobile processor lags behind even more.
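A rough way to separate the two effects is to divide each 7-Zip rating by an all-core clock frequency. This is only an estimate under stated assumptions: 2.6 GHz for the 2630QM (as measured in the Turbo Boost section), base plus one Turbo Boost bin for the desktop chips (the last value of their bin pattern), and linear scaling of the score with clock speed, which real processors do not follow exactly. A Python sketch:

```python
# 7-Zip ratings from the archiving table
seven_zip = {"Core i5-2300": 140, "Core i5-2400": 151, "Core i5-2500": 156,
             "Core i7-2600": 213, "Core i7-2630QM": 137}

# Assumed all-core clock in GHz: base + one Turbo bin for desktops, 2.6 GHz for the mobile chip
all_core_ghz = {"Core i5-2300": 2.9, "Core i5-2400": 3.2, "Core i5-2500": 3.4,
                "Core i7-2600": 3.5, "Core i7-2630QM": 2.6}

per_ghz = {cpu: seven_zip[cpu] / all_core_ghz[cpu] for cpu in seven_zip}
for cpu, value in sorted(per_ghz.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{cpu}: {value:.1f} rating points per GHz")
```

Under these assumptions the two Hyper-Threading processors lead per clock (about 60.9 for the i7-2600 and 52.7 for the 2630QM, versus 46-48 for the Core i5 models), which fits the observation that extra threads help in 7-Zip while the mobile chip's lower frequency keeps it behind in absolute terms.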

Let's take a look at Javascript performance in modern browsers.

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| Google V8 | 161 | 176 | 190 | 191 | 148 |
| SunSpider | 156 | 162 | 167 | 170 | 198 |
| Group - rating | 159 | 169 | 179 | 181 | 173 |

While the Google V8 results roughly match what we have seen before, something is clearly wrong with SunSpider. In all browsers this test ran faster on the mobile processor than on all the desktop ones, including the desktop Core i7 (which, judging by the results, differs only very slightly from the senior Core i5).

In general, the result of the second test is very unexpected, and I cannot explain it. Perhaps something in the software worked differently?

Let's leave Internet applications and move on to working with video and audio. It is also a fairly popular type of activity, including for mobile computers.

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| Apple Lossless | 135 | 149 | 154 | 206 | 126 |
| FLAC | 145 | 159 | 171 | 233 | 144 |
| Monkey's Audio | 150 | 165 | 174 | 230 | 139 |
| MP3 (LAME) | 162 | 179 | 191 | 258 | 152 |
| Nero AAC | 154 | 171 | 179 | 250 | 148 |
| Ogg Vorbis | 164 | 179 | 191 | 252 | 147 |
| Audio | 152 | 167 | 177 | 238 | 143 |

Audio encoding holds no surprises. The mobile Core i7-2630QM is a little weaker than all the tested desktop processors, and the desktop Core i7 is far ahead. What about video encoding?

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| DivX | 146 | 160 | 170 | 157 | 96 |
| MainConcept (VC-1) | 153 | 167 | 175 | 187 | 133 |
| Premiere | 155 | 169 | 178 | 222 | 132 |
| Vegas | 164 | 177 | 185 | 204 | 131 |
| x264 | 152 | 165 | 174 | 225 | 136 |
| Xvid | 166 | 180 | 190 | 196 | 133 |
| Video | 156 | 170 | 179 | 199 | 127 |

The mobile processor's lag has grown; the desktop Core i7 is still well ahead of all the other processors, although by a smaller margin.

And now one of the most "real-world" tests of all: games!

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| Batman | 131 | 134 | 135 | 134 | 40 |
| Borderlands | 142 | 149 | 157 | 160 | 234 |
| DiRT 2 | 109 | 110 | 110 | 110 | 36 |
| Far Cry 2 | 200 | 218 | 232 | 237 | 84 |
| Fritz Chess | 142 | 156 | 166 | 215 | 149 |
| GTA IV | 162 | 164 | 167 | 167 | 144 |
| Resident Evil | 125 | 125 | 125 | 125 | 119 |
| S.T.A.L.K.E.R. | 104 | 104 | 104 | 104 | 28 |
| UT3 | 150 | 152 | 157 | 156 | 48 |
| Crysis: Warhead | 127 | 128 | 128 | 128 | 40 |
| World in Conflict | 163 | 166 | 168 | 170 | 0 |
| Games | 141 | 146 | 150 | 155 | 84 |

All I want to say here is "oh". The games clearly split into processor-dependent and graphics-dependent ones. A more powerful processor substantially increases the speed in Borderlands, Far Cry 2 and Fritz Chess. Some games react very little to a faster processor, and some do not react at all. If we exclude World in Conflict, where the mobile Core i7 scored 0, the overall rating looks like this.

The results were disappointing for the mobile system, and for the most part the processor is not to blame. Before drawing conclusions, let's look at the absolute performance figures in the games.
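The category rows in these tables appear to be the plain arithmetic mean of the individual test scores, rounded half up: that reproduces every value in the Games row (for example, the mobile system's 922 points over 11 games give 83.8, shown as 84). Assuming this is how the rating is formed, a short sketch can recompute the games score without World in Conflict; the rounding rule and the two-column selection are our assumptions.

```python
# Per-game scores from the games table: (Core i5-2300, Core i7-2630QM).
GAMES = {
    "Batman": (131, 40),
    "Borderlands": (142, 234),
    "DiRT 2": (109, 36),
    "Far Cry 2": (200, 84),
    "Fritz Chess": (142, 149),
    "GTA IV": (162, 144),
    "Resident Evil": (125, 119),
    "S.T.A.L.K.E.R.": (104, 28),
    "UT3": (150, 48),
    "Crysis: Warhead": (127, 40),
    "World in Conflict": (163, 0),
}


def category_score(scores: list[int]) -> int:
    """Arithmetic mean rounded half up, using integers only (assumed rule)."""
    n = len(scores)
    return (2 * sum(scores) + n) // (2 * n)


def games_score(column: int, exclude: tuple[str, ...] = ()) -> int:
    """Category score for one column (0 = Core i5-2300, 1 = Core i7-2630QM)."""
    return category_score(
        [row[column] for game, row in GAMES.items() if game not in exclude]
    )


for column, cpu in enumerate(["Core i5-2300", "Core i7-2630QM"]):
    print(cpu, games_score(column), games_score(column, exclude=("World in Conflict",)))
```

Under these assumptions the mobile system's games score rises from 84 to 92, while the Core i5-2300's drops from 141 to 139; these are recomputed values, not figures from the original review.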

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| Batman | 205 | 209 | 210 | 209 | 63 |
| Borderlands | 75 | 79 | 83 | 85 | 124 |
| DiRT 2 | 76 | 77 | 77 | 77 | 25 |
| Far Cry 2 | 76 | 83 | 88 | 90 | 32 |
| Fritz Chess | 8524 | 9368 | 9982 | 12956 | 8936 |
| GTA IV | 63 | 64 | 65 | 65 | 56 |
| Resident Evil | 128 | 128 | 128 | 128 | 121.6 |
| S.T.A.L.K.E.R. | 62.9 | 62.9 | 63 | 62.9 | 17.2 |
| UT3 | 166 | 169 | 174 | 173 | 53 |
| Crysis: Warhead | 57.4 | 57.6 | 57.7 | 57.7 | 18.1 |
| World in Conflict | 62.6 | 63.5 | 64.3 | 65 | |

As you can see, while the desktop processors almost always deliver quite good results, the mobile system is in many cases at the threshold of playability or below it.

In almost all games the processors are more than fast enough, and the final result depends mainly on the performance of the video card. The mobile system's performance is significantly lower, which points to a very large gap between desktop and mobile graphics solutions: in our tests, about threefold on average. GTA IV and Resident Evil stand apart, showing similar results on all systems, including the mobile one.

In the CPU-intensive chess program, the mobile Core i7 lands between the two budget desktop models.
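The "about three times" figure can be checked against the absolute table. The sketch below divides the Core i5-2300 system's frame rate by the mobile system's for the clearly graphics-bound games; the game selection (leaving out Borderlands, GTA IV, Resident Evil, Fritz Chess and World in Conflict) and the use of a simple arithmetic mean are our own choices.

```python
from statistics import mean

# Frame rates (fps) from the absolute-results table: (Core i5-2300, Core i7-2630QM).
FPS = {
    "Batman": (205, 63),
    "DiRT 2": (76, 25),
    "Far Cry 2": (76, 32),
    "S.T.A.L.K.E.R.": (62.9, 17.2),
    "UT3": (166, 53),
    "Crysis: Warhead": (57.4, 18.1),
}

# How many times faster the desktop system renders each game.
ratios = {game: desktop / mobile for game, (desktop, mobile) in FPS.items()}
for game, ratio in ratios.items():
    print(f"{game}: {ratio:.1f}x")
print(f"Average: {mean(ratios.values()):.1f}x")
```

This gives ratios between about 2.4 (Far Cry 2) and 3.7 (S.T.A.L.K.E.R.), with a mean of roughly 3.1.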

Well, let's sum it up.

| Test | Core i5-2300 | Core i5-2400 | Core i5-2500/2500K | Core i7-2600/2600K | Core i7-2630QM |
|---|---|---|---|---|---|
| Overall score | 157 | 170 | 180 | 203 | 141 |

The overall result confirms the trend: the Core i7-2630QM, one of the most powerful mobile processors, cannot match the performance of even the junior desktop processor of the weaker Core i5 line. The desktop Core i7 leaves even the desktop processors of the junior line far behind, to say nothing of the mobile version.

Conclusions

So, time to draw conclusions. Let me recall some results from the previous article.

At first glance, Sandy Bridge is indeed a very successful processor. First, the design has been greatly improved: illogical solutions have been removed (such as the two separate dies made on different process technologies), and the chip's structure has become logical and well optimized. The interconnect between components inside the processor (which now includes the video core!) has been improved. Second, the structure of the processor cores has been optimized, which should also have a positive effect on performance. Practice confirms the theory: the processor we tested is far ahead of the current platform in performance.

Indeed, in practical testing the Core i7-2630QM, expected to be the junior model of the new Core i7 mobile lineup, seriously outperforms the Core i7-720QM, the most common high-performance (or highest-performing common) processor of the first-generation Intel Core mobile lineup. Apparently, the 2630QM is meant to take its place, i.e. to become the mainstream high-performance processor of the second-generation Core line.

Overall, we can conclude that the second generation of Core mobile processors is a good step forward in performance. As for the line's other advantages, I think it is worth waiting for the junior lines and, more generally, for a large number of models on the new processors, and only then evaluating qualities such as heat output and energy efficiency.

However, compared with the new Sandy Bridge Core i5 and i7 desktop processors, the new mobile Core i7-2630QM still loses. Moreover, the mobile platform is consistently weaker across all test groups. This is normal, because when designing mobile lineups performance is not the only priority: low power consumption (for longer battery life) and low heat output (because of smaller, weaker cooling systems) matter too. Just look at the thermal design power of the new mobile processor, which is more than two times (!) lower than that of the desktop versions. You have to pay for this, including with a lower nominal frequency and lower performance in general.

Speaking of frequencies, the Hewlett-Packard DV7 offered a pleasant surprise (although things may not be so rosy in the hot summer). With a good cooling system, the processor can run indefinitely at its maximum Turbo Boost frequency for all four cores, 2.6 GHz, so it is quite capable of delivering more performance than the standard specifications promise. Of course, there is no guarantee that the cooling system will cope in summer; if it does not, real performance relative to desktop systems may be significantly lower than in our tests. A competent cooling system therefore becomes a top priority in a laptop with a new Core i7 mobile processor.

A detailed review of it is available on our website (although support for the C6 deep-sleep state and low-voltage LV-DDR3 memory appeared only in Westmere). So what is new in SB?

First, a second type of temperature sensor. The familiar thermal diode, whose readings are "seen" by the BIOS and utilities, measures temperature to adjust the fan speed and protect against overheating (frequency throttling and, if that does not help, an emergency shutdown of the CPU). However, it occupies a large area, so there is only one per core (including the GPU) and one in the system agent. To these, several compact analog circuits with thermal transistors were added in each large block. They have a narrower measurement range (80–100 °C), but they are needed to refine the thermal diode's data and build an accurate heat map of the die, without which the new Turbo Boost 2.0 features cannot be implemented. What's more, the power controller can even use an external sensor if the motherboard manufacturer places and connects one, although it is not entirely clear how that would help.

A C-state demotion function has been added, for which the history of transitions between states is tracked for each core. The deeper the C-state (the larger its number) a core enters or leaves, the longer the transition takes. The controller decides whether it makes sense to put a core to sleep, taking into account how likely it is to "wake up" soon. If a wake-up is expected soon, then instead of the state requested by the OS the core is put into C3 or C1, i.e. into a more active state from which it can quickly resume work. Oddly enough, despite the higher consumption in such a light sleep, the total savings may not suffer, because the transition periods, during which the processor does not sleep at all, are reduced.
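Intel has not published its demotion algorithm, so the following is only a toy model of the idea described above: each core keeps a short history of its recent idle periods, and if that history suggests an early wake-up, the core is granted a shallower state than requested. The history length, the "shortest recent idle period" predictor and the break-even times are all hypothetical.

```python
from collections import deque

# Hypothetical break-even residencies (microseconds): a deeper state only saves
# energy if the core stays in it at least this long. Illustrative values only.
BREAK_EVEN_US = {"C1": 0.0, "C3": 100.0, "C6": 500.0}
DEPTH = ["C1", "C3", "C6"]  # ordered from shallowest to deepest


class DemotionPolicy:
    """Toy per-core C-state demotion based on the history of idle periods."""

    def __init__(self, history_len: int = 8) -> None:
        self.history: deque = deque(maxlen=history_len)

    def record_idle(self, duration_us: float) -> None:
        """Remember how long the core actually stayed idle last time."""
        self.history.append(duration_us)

    def predicted_idle_us(self) -> float:
        """Conservative guess: the shortest recent idle period (0 if unknown)."""
        return min(self.history) if self.history else 0.0

    def grant(self, requested: str) -> str:
        """Return the requested state or a shallower one, never a deeper one."""
        predicted = self.predicted_idle_us()
        candidates = DEPTH[: DEPTH.index(requested) + 1]
        for state in reversed(candidates):  # deepest allowed state first
            if predicted >= BREAK_EVEN_US[state]:
                return state
        return DEPTH[0]


core = DemotionPolicy()
for idle in (40, 60, 50):  # frequent, short idle periods
    core.record_idle(idle)
print(core.grant("C6"))  # demoted to C1
```

A core that has recently been woken after 40–60 µs is kept in C1 even when the OS asks for C6. The conservative (minimum) predictor trades some idle savings for fewer costly deep-sleep transitions.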

For mobile models, when all cores enter C6, the shared L3 cache is flushed and all its banks are switched off by power gates. This reduces idle consumption even further, but at the cost of an additional delay on wake-up, since the cores will miss in L3 hundreds or thousands of times until the necessary data and code are loaded back. Obviously, in conjunction with the previous function, this will happen only if the controller is confident that the CPU is falling asleep for a long time (by processor standards).
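The "only if the CPU sleeps long enough" condition is essentially a break-even calculation. A minimal sketch, assuming made-up figures for the power saved by switching the L3 off and for the energy needed to refill it after wake-up (neither is given in the text), and assuming the flush is allowed only when every core's predicted idle time exceeds the break-even point:

```python
# Hypothetical figures for illustration only (not Intel data).
L3_POWER_SAVED_MW = 300.0   # power saved while the L3 is switched off
REFILL_ENERGY_UJ = 150.0    # extra energy spent refilling the L3 after wake-up


def break_even_us(power_saved_mw: float, refill_energy_uj: float) -> float:
    """Idle time after which switching the L3 off starts to pay off.

    1 mW sustained for 1 us is 1 nJ (0.001 uJ), so the break-even time
    is refill_energy * 1000 / power_saved.
    """
    return refill_energy_uj * 1000.0 / power_saved_mw


def should_flush_l3(predicted_idle_us: list[float]) -> bool:
    """Package-level decision: flush only if every core is expected to stay
    asleep longer than the break-even time."""
    threshold = break_even_us(L3_POWER_SAVED_MW, REFILL_ENERGY_UJ)
    return all(t > threshold for t in predicted_idle_us)


print(break_even_us(L3_POWER_SAVED_MW, REFILL_ENERGY_UJ))  # 500.0 (microseconds)
print(should_flush_l3([800.0, 1200.0, 900.0, 700.0]))       # True
print(should_flush_l3([800.0, 1200.0, 40.0, 700.0]))        # False
```

A single core expected to wake up soon is enough to veto the flush, which matches the idea that the controller must be sure the whole CPU is going to sleep for a long time.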

The previous-generation Core i3/i5 were something of champions in the complexity of the CPU power system on the motherboard, requiring as many as six voltages (more precisely, all six existed before too, but not all of them went to the processor). In SB, the rails changed not in number but in purpose:

- x86 cores and L3 - 0.65–1.05 V (in Nehalem, L3 had its own rail);

- GPU - similar (in Nehalem, almost the entire north bridge, which, recall, was the second die in the CPU package there, was powered by a common rail);

- a system agent with a fixed frequency and a constant voltage of 0.8, 0.9 or 0.925 V (the first two options are for mobile models), or a dynamically adjustable 0.879–0.971 V;

- a constant 1.8 V or adjustable 1.71–1.89 V rail;

- memory bus driver - 1.5 V or 1.425–1.575 V;

- PCIe driver - 1.05 V.

Adjustable versions of these rails are used in the unlocked K-series SB models. Desktop models have raised the idle clock of the x86 cores from 1.3 to 1.6 GHz, apparently without sacrificing efficiency: a 4-core CPU consumes 3.5–4 W at full idle. Mobile versions idle at 800 MHz and draw even less.

Models and chipsets

Performance

Why does such a chapter belong in a theoretical microarchitecture overview? Because there is one generally recognized benchmark that has been used for 20 years (in various versions) to evaluate not the theoretical but the practically achievable speed of computers: SPEC CPU. It evaluates processor performance comprehensively, at best when the test source code is compiled and optimized for the system under test (so the compiler and its libraries are tested along the way). Real programs can only be faster than that with hand-written assembly inserts, which today only rare daredevil programmers with a lot of time bother to write. SPEC can be considered a semi-synthetic test, because it computes nothing useful and gives no specific figures (IPC, FLOPS, timings, etc.): one CPU's scores, in arbitrary units, are useful only for comparison with others.

Intel usually publishes results for its CPUs almost as soon as they are released. With SB, however, there was an inexplicable three-month delay, and the figures that appeared in March are still preliminary. It is unclear what is holding them up, but this is still better than AMD's situation: AMD has not published any official results for its latest CPUs. The Opteron figures below were submitted by server manufacturers using the Intel compiler, so these results may be under-optimized (we all know what Intel's software tools can do to code running on a "foreign" CPU ;)).
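For readers unfamiliar with how SPEC CPU2006 turns run times into those arbitrary units: each benchmark's ratio is the reference machine's run time divided by the measured run time, and the overall score is the geometric mean of the ratios. A minimal sketch, with made-up run times for three hypothetical benchmarks (real SPEC rules, such as taking the median of several runs and reporting separate base and peak results, are omitted):

```python
from statistics import geometric_mean


def spec_ratio(reference_s: float, measured_s: float) -> float:
    """How many times faster the tested system is than the reference machine."""
    return reference_s / measured_s


def spec_score(ratios: list[float]) -> float:
    """Overall score: geometric mean of the per-benchmark ratios."""
    return geometric_mean(ratios)


# Hypothetical run times in seconds (not real SPEC data).
reference_times = [9000.0, 12000.0, 8000.0]
measured_times = [450.0, 1500.0, 500.0]
ratios = [spec_ratio(r, m) for r, m in zip(reference_times, measured_times)]
print(ratios)  # [20.0, 8.0, 16.0]
print(round(spec_score(ratios), 2))
```

The geometric mean keeps any single benchmark from dominating the result, and the ratio between two systems' scores does not depend on which machine is chosen as the reference.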

Comparison of systems in SPEC CPU2006 tests. Table compiled by David Kanter as of March 2011.

Compared with previous CPUs, SB shows excellent (in the literal sense) results in absolute terms and even record results per core and per gigahertz. Enabling HT and adding 2 MB of L3 gives +3% in floating-point speed and +15% in integer speed. However, the highest specific (per-core, per-GHz) speed belongs to the 2-core model, and this is an instructive observation. Intel obviously used AVX, but since AVX cannot yet deliver an integer gain, one would expect a sharp acceleration only in the floating-point results. There is no jump there either, as a comparison of the 4-core models shows, and the results for the i3-2120 reveal the reason: with the same two memory controller channels, each of its cores receives twice the memory bandwidth, which shows up as a 34% increase in specific floating-point speed. Apparently the 6–8 MB L3 cache is too small, and scaling its own bandwidth via the ring bus no longer helps. Now it is clear why Intel plans to equip server Xeons with 3- and even 4-channel memory controllers. But those will already have 8 cores, and even that may not give them room to stretch out fully...

Addendum: the final SB results have appeared. The numbers (as expected) grew slightly, but the qualitative conclusions remain the same.

Prospects and conclusions
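The per-core bandwidth argument is simple arithmetic. A sketch, assuming dual-channel DDR3-1333 (the fastest memory officially supported by desktop Sandy Bridge) with 64-bit channels, and counting only the theoretical peak, which real workloads never reach:

```python
def peak_bandwidth_gbs(channels: int, mega_transfers: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 10**9 bytes).

    MT/s multiplied by bytes per transfer gives MB/s; dividing by 1000 gives GB/s.
    """
    return channels * mega_transfers * bytes_per_transfer / 1000


total = peak_bandwidth_gbs(channels=2, mega_transfers=1333)  # dual-channel DDR3-1333
for cores in (2, 4):  # e.g. Core i3-2120 vs a quad-core Core i5/i7
    print(f"{cores} cores: {total / cores:.2f} GB/s per core of {total:.2f} GB/s total")
```

The dual-core model gets about 10.7 GB/s per core against about 5.3 GB/s for the quad-core ones, the same 2:1 ratio described above.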

Much is already known about Sandy Bridge's 22 nm successor, Ivy Bridge, due in spring 2012. The general-purpose cores will support a slightly updated subset of AES-NI; "free" register copying at the renaming stage is also quite possible. No improvements to Turbo Boost are expected, but the GPU (which, by the way, will work with all chipset versions) will grow to a maximum of 16 execution units, will support three displays instead of two, will finally gain proper support for OpenCL 1.1 (along with DirectX 11 and OpenGL 3.1), and will get improved hardware video processing. Most likely, the memory controller in desktop and mobile models will already support 1600 MHz, and the PCIe controller will support version 3.0 of the bus. The main technological innovation is that the L3 cache will use (for the first time in mass microelectronics production!) transistors with a vertical, multi-sided fin-shaped gate (FinFET), which have radically improved electrical characteristics (details in one of our upcoming articles). Rumor has it that the versions with graphics will again become multi-chip, only this time one or more fast video memory chips will be added to the processor.

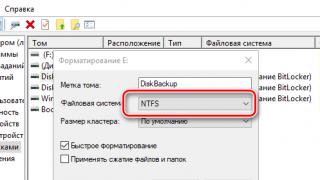

Ivy Bridge will connect to the new 70-series chipsets (i.e. south bridges): Z77, Z75 and H77 for home use (replacing Z68/P67/H67) and Q77, Q75 and B75 for offices (instead of Q67/Q65/B65). The chipset (i.e. the same physical chip under different names) will still have no more than two SATA 3.0 ports, and USB 3.0 support will finally appear, albeit a year later than at the competitor. Built-in PCI support will disappear (after 19 years, the bus has earned its rest), while the disk subsystem controller in the Z77 and Q77 will gain Smart Response technology, which improves performance by caching disks on an SSD. However, the most exciting news is that, contrary to good old tradition, desktop Ivy Bridge processors will not only use the same LGA1155 socket as SB but will also be backward compatible with it, i.e. current boards will accept the new CPUs.

For enthusiasts, a much more powerful X79 chipset will be ready as early as the fourth quarter of this year (for the 4- to 8-core SB-E processors in the "extreme server" LGA2011 socket). It will not have USB 3.0 yet, but 10 of its 14 SATA ports will be SATA 3.0 (plus support for 4 RAID levels), and 4 of its 8 PCIe lanes can connect to the CPU in parallel with DMI, doubling the bandwidth of the CPU-chipset link. Unfortunately, the X79 will not work with the 8-core Ivy Bridge.

As an exception (and perhaps a new rule), we will not list what we would like to improve and fix in Sandy Bridge. It is already clear that any change is a complex compromise, strictly in accordance with the law of conservation of matter (in Lomonosov's formulation): if something is added in one place, the same amount is taken away somewhere else. If Intel rushed to fix all of the old architecture's mistakes in each new one, the resulting mess and collateral damage could outweigh the benefits. So instead of extremes and an unattainable ideal, it is more economically sensible to seek a balance between constantly changing and sometimes contradictory requirements.

Despite a few blemishes, the new architecture should not only shine brightly (which, judging by the tests, it does) but also outshine all the previous ones, both Intel's own and its rival's. The announced performance and efficiency goals have been achieved, with the exception of AVX optimization, which is about to appear in new versions of popular programs. And then Gordon Moore will once again be surprised at his own insight. Intel appears fully prepared for the Epic Battle of architectures that we will see this year.

Our thanks go to:

- Maxim Loktyukhin, the same “Intel representative”, an employee of the software and hardware optimization department, for answering numerous clarifying questions.

- Mark Buxton, Lead Software Engineer and head of optimization, for his answers and for the opportunity to obtain something like an official response.

- Agner Fog, programmer and processor researcher, for independent low-level testing of SB, which revealed a lot of new and mysterious things.

- Attentive Reader - for attentiveness, steadfastness and loud snoring.

- The furious fans of the Opposite Camp: for good measure.

Intel has finally officially announced new processors based on the new Sandy Bridge microarchitecture. For most people, the "Sandy Bridge announcement" is just words, but by and large, the second-generation Intel Core processors represent, if not a new era, then at least an update of almost the entire processor market.

Initially it was reported that only seven processors had been launched, but the very useful ark.intel.com site already lists all the new products. There turned out to be a few more processors, or rather modifications (in parentheses I give the estimated price per processor in 1,000-unit quantities):

Mobile:

Intel Core i5-2510E (~$266)

Intel Core i5-2520M

Intel Core i5-2537M

Intel Core i5-2540M


Intel Core i7-2617M

Intel Core i7-2620M

Intel Core i7-2629M

Intel Core i7-2649M

Intel Core i7-2657M

Intel Core i7-2710QE (~$378)

Intel Core i7-2720QM

Intel Core i7-2820QM

Intel Core i7-2920XM Extreme Edition


Desktop:

Intel Core i3-2100 (~$117)

Intel Core i3-2100T

Intel Core i3-2120 ($138)


Intel Core i5-2300 (~$177)

Intel Core i5-2390T

Intel Core i5-2400S

Intel Core i5-2400 (~$184)

Intel Core i5-2500K (~$216)

Intel Core i5-2500T

Intel Core i5-2500S

Intel Core i5-2500 (~$205)


Intel Core i7-2600K (~$317)

Intel Core i7-2600S

Intel Core i7-2600 (~$294)

As you can see, model names now have four digits, which avoids confusion with previous-generation processors. The lineup turned out quite complete and logical: the most interesting series, the i7, is clearly separated from the i5 by Hyper-Threading and a larger cache. And the i3 processors differ from the i5 not only in having fewer cores but also in lacking Turbo Boost.

You have probably also noticed the letters in the processor names, without which the lineup would be much thinner. The letters S and T indicate reduced power consumption, and K indicates an unlocked multiplier.

A visual structure of the new processors:

As you can see, in addition to the graphics and computing cores, the cache memory and the memory controller, there is a so-called System Agent, into which a lot of things are gathered: for example, the DDR3 memory and PCI Express 2.0 controllers, the power management module, and the blocks responsible at the hardware level for the integrated GPU and for display output when it is used.

All "core" components (including the graphics processor) are interconnected by a high-speed ring bus with full access to the L3 cache, which has increased the overall data exchange speed inside the processor; interestingly, this approach makes it possible to increase performance in the future simply by adding more cores to the bus. Even now things look promising: according to the manufacturer, compared with the previous generation, the new processors are more adaptive and can be 30–50% faster in many tasks.

If you want to learn more about the new architecture, I can recommend three Russian-language articles on the subject.

The new processors are built entirely on the 32 nm process and are the first to have a "visually smart" microarchitecture that combines best-in-class processing power and 3D graphics on a single chip. Sandy Bridge graphics indeed brings many innovations, mainly aimed at improving 3D performance. One can argue at length about integrated graphics being "imposed" on buyers, but there is simply no alternative. Here is a slide from the official presentation whose claims seem plausible, including for mobile products (laptops):
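One way to see the trade-off of "just add more cores to the ring" is to count hops. The sketch below assumes a simple bidirectional ring where every agent (a core with its L3 slice, the GPU, the system agent) is one stop and every hop costs the same; the stop counts are illustrative and do not describe Intel's actual layout.

```python
def hops(a: int, b: int, stops: int) -> int:
    """Shortest distance between two stops on a bidirectional ring."""
    d = abs(a - b) % stops
    return min(d, stops - d)


def average_hops(stops: int) -> float:
    """Mean hop count over all ordered pairs of distinct stops."""
    pairs = [(a, b) for a in range(stops) for b in range(stops) if a != b]
    return sum(hops(a, b, stops) for a, b in pairs) / len(pairs)


for stops in (6, 8, 10):  # e.g. 4, 6 or 8 cores plus GPU and system agent
    print(stops, round(average_hops(stops), 2))
```

The average distance grows roughly in proportion to the number of stops (1.8 hops for 6 stops, about 2.3 for 8 and 2.8 for 10), so a ring is easy to extend, but each added stop slightly raises the average latency.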

I have already written about the new technologies of the second-generation Intel Core processors, so I will not repeat myself. I'll just focus on Intel Insider, whose appearance surprised many. As I understand it, this will be a kind of store giving computer owners access to high-definition films directly from their creators, something that used to become available only some time after the DVD or Blu-ray release. To demonstrate the feature, Intel Vice President Mooly Eden invited Kevin Tsujihara, President of Warner Home Entertainment Group, onto the stage. I quote:

“Warner Bros. finds personal computers the most versatile and widespread platform for delivering high-quality entertainment content, and now Intel is making this platform even more reliable and secure. From now on, we will be able to offer PC users new releases and films from our catalog in true HD quality through the WBShop, as well as through our partners such as CinemaNow.” Mooly Eden demonstrated the technology using the film Inception. By partnering with leading studios and media giants (such as Best Buy CinemaNow, Hungama Digital Media Entertainment, Image Entertainment, Sonic Solutions, Warner Bros. Digital Distribution and others), Intel is building a secure, hardware-protected, piracy-resistant ecosystem for the distribution, storage and playback of high-quality video.


The technology mentioned above will work together with two equally interesting developments that are also present in all new-generation processor models: Intel Wireless Display (Intel WiDi 2.0) and Intel InTru 3-D. The first is for wireless transmission of HD video (at resolutions up to 1080p), the second for displaying stereoscopic content on monitors or HDTVs over an HDMI 1.4 connection.

Two more features for which I could not find a better place in the article. The first is Intel Advanced Vector Extensions (AVX): support for these instructions improves the performance of data-intensive applications such as audio editors and professional photo-editing software.

…And Intel Quick Sync Video: thanks to joint work with software companies such as CyberLink, Corel and ArcSoft, the processor giant has made this task (transcoding between H.264 and MPEG-2) 17 times faster than on the previous generation of integrated graphics.

Processors are one thing, but how do you use them? Right: along with them, new chipsets of the "60" series were announced. Apparently, only two of them are aimed at eager consumers: the Intel H67 and Intel P67, on which most new motherboards will be built. The H67 can work with the integrated video core, while the P67 offers Performance Tuning for overclocking the processor. All the processors use the new LGA1155 socket.

It is good news that the new socket will apparently also be compatible with Intel's next-generation processors. This is a plus both for ordinary users and for manufacturers, who will not have to redesign and create new devices.

In total, Intel introduced more than 20 chips, chipsets and wireless adapters, including the new Intel Core i7, i5 and i3 processors, Intel 6 Series chipsets, and Intel Centrino Wi-Fi and WiMAX adapters. In addition to those mentioned above, the following "badges" may also appear on the market:

More than 500 desktop and laptop models from the world's leading brands based on the new processors are expected to be released this year.


The difference between "fully" and "partially" unlocked processors

What is the upshot? Having tested Turbo Boost on previous generations of processors, Intel decided to turn it into a tool for real price positioning of its products relative to each other. Whereas enthusiasts used to buy the junior processors of a series and often easily overclock them to the level of the senior models, now the 200 MHz difference between the i3-2100 and i3-2120 costs $21, and there is nothing you can do about it.

Both unlocked processors will cost a bit more than regular models. This difference will be less than in the case of previous generations - $11 for the 2500 model and $23 for the 2600. Intel still doesn't want to scare off overclockers too much. However, now $216 is the threshold for joining the club. Overclocking is entertainment that you have to pay for. It is clear that such a position can draw some users into the camp of AMD, whose budget processors overclock very well.
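The premiums quoted here follow directly from the estimated prices in the model list (USD per processor in 1,000-unit quantities). A quick check, with the percentage computed against the cheaper model:

```python
# Estimated prices (USD, per unit in 1,000-unit quantities) from the model list.
PRICES = {
    "Core i3-2100": 117, "Core i3-2120": 138,
    "Core i5-2500": 205, "Core i5-2500K": 216,
    "Core i7-2600": 294, "Core i7-2600K": 317,
}


def premium(model: str, base: str) -> tuple[int, float]:
    """Price difference in dollars and as a percentage of the base model."""
    diff = PRICES[model] - PRICES[base]
    return diff, 100.0 * diff / PRICES[base]


for model, base in [("Core i5-2500K", "Core i5-2500"),
                    ("Core i7-2600K", "Core i7-2600"),
                    ("Core i3-2120", "Core i3-2100")]:
    dollars, percent = premium(model, base)
    print(f"{model} vs {base}: +${dollars} ({percent:.1f}%)")
```

So the K versions cost about 5–8% more than their locked counterparts, while the step from the i3-2100 to the i3-2120 costs nearly 18%.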

Overclocking as a whole has become easier: the requirements for the motherboard and RAM have dropped, and there is less fuss with timings and various multipliers. But extreme overclockers still have room to play: entire treatises will surely be written about tuning BCLK.

Graphics core and Quick Sync

Intel began pulling up the performance of its integrated graphics back with the announcement of Clarkdale and Arrandale, but at that time it could not overtake its competitors. AMD has since raised the bar further and is about to wipe out the entry-level discrete graphics market. Intel's answer arrived first, but will it be up to the task?

Let's start with the two variants. They are called HD 2000 and HD 3000, and they differ in the number of execution units (EUs): 6 in the first and 12 in the second. GMA HD also had 12, but the performance gain from integration and the redesigned architecture turned out to be very significant. In Intel's desktop lineup, only a couple of processors with an unlocked multiplier received the advanced graphics, i.e. exactly the models least likely to use integrated graphics. This decision seems very strange to us. We can only hope that Intel will later release versions of the junior processors with the full graphics core.

Fortunately, all of the company's new mobile processors are equipped with the HD 3000. Intel is determined to put pressure on competitors in this segment as hard as it can, because it should be easier to reach the level of performance of entry-level solutions here.

The performance of integrated graphics depends on more than the number of EUs. All desktop Sandy Bridge chips have the same base graphics frequency (850 MHz), but the senior models (2600 and 2600K) have a higher maximum Turbo Boost frequency: 1350 MHz versus 1100 MHz for the rest. The result also depends to some extent on the power of the CPU cores, but much more on the amount of cache memory, because one of the main features of the new graphics is that it shares the L3 cache with the computing cores, which the ring bus makes possible.

As with Clarkdale processors, the new products use hardware acceleration for MPEG, VC-1 and AVC decoding, but the process is now much faster. As in "grown-up" discrete graphics, Sandy Bridge processors have a separate block for video encoding and decoding, and unlike in the previous generation, this block takes over the task completely. Hardware acceleration is much better for energy efficiency, and SNB's performance here is very high: Intel promises simultaneous decoding of more than two 1080p streams. Such performance may be needed to quickly transcode existing video into a format suitable for a mobile device. The rich multimedia capabilities also make SNB the best choice for building an HTPC.
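A crude way to compare the graphics variants is to multiply the number of execution units by the maximum graphics clock. This is only a simplified upper-bound model: as the paragraph above stresses, it ignores the shared L3 cache, memory bandwidth, CPU power and drivers. The EU counts and clocks come from the text; the assumption that throughput scales linearly with both is ours.

```python
# (execution units, maximum graphics Turbo Boost clock in MHz) from the text.
CONFIGS = {
    "HD 2000 at 1100 MHz": (6, 1100),
    "HD 3000, Core i5-2500K": (12, 1100),
    "HD 3000, Core i7-2600K": (12, 1350),
}


def relative_throughput(eus: int, mhz: int, baseline: tuple[int, int] = (6, 1100)) -> float:
    """Peak throughput relative to the baseline, assuming it scales as EUs x clock."""
    base_eus, base_mhz = baseline
    return (eus * mhz) / (base_eus * base_mhz)


for name, (eus, mhz) in CONFIGS.items():
    print(f"{name}: {relative_throughput(eus, mhz):.2f}x")
```

On paper the Core i7-2600K's HD 3000 has about 2.45 times the raw shader throughput of an HD 2000 at 1100 MHz; real results will be lower and depend heavily on the factors the model ignores.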

The development of graphics solutions for Intel processors is carried out by a separate division of the company. The new developments of this division are also very relevant for the company's mobile processors. Until the Larrabee project in one form or another gets the proper development, Intel will have to put up with "non-x86" components in their CPUs.

Intel Core i5-2400 and Core i5-2500K

We got 2 processors based on the Sandy Bridge architecture. First of all, the 2500K model is of interest, as it has an unlocked multiplier. In the future, benchmarks of dual-core models and processors of the i7 series may be published separately.

Is the superiority of the first Core i processors (Nehalem and, from 2010, Westmere) over the rival's CPUs final? The situation somewhat resembles the first year after the release of the Pentium II: resting on your laurels and making record profits, it would be nice to continue a successful architecture without changing its name much, adding new instructions whose use will significantly improve performance, and not forgetting other innovations that speed up current versions of programs. True, unlike 10 years ago, one also has to pay attention to the currently fashionable topic of energy efficiency, played up with the double-meaning adjective "cool" (both "trendy" and "cold"), and to the equally fashionable desire to build into the processor everything that still exists as separate chips. This is the sauce with which the new product is served.

"The day before yesterday", "yesterday" and "today" of Intel processors.

Pipeline front end. Colors indicate different types of information and the blocks that process or store it.

Prediction

Let's start with Intel's announcement of a completely redesigned branch prediction unit (BPU). As in Nehalem, every cycle it predicts the address of the next 32-byte portion of code (ahead of actual execution) based on the expected behavior of the jump instructions in the portion just predicted, apparently regardless of the number and type of those jumps. More precisely, if the current portion contains a branch predicted taken, the unit outputs the branch's own address and its target address; otherwise, it moves on to the next sequential portion. The predictions themselves have become even more accurate thanks to a doubled branch target buffer (BTB), a longer global branch history register (GBHR) and an optimized hash function for accessing the branch history table (BHT). True, actual tests have shown that in some cases prediction efficiency is still slightly worse than in Nehalem. Perhaps higher performance combined with lower power consumption is incompatible with high-quality branch prediction? Let's try to figure it out.
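The per-cycle "next portion" prediction just described can be sketched in a few lines. This is a simplified model under our own assumptions: the BTB is a hypothetical dictionary keyed by the 32-byte-aligned portion address that lists the branches in that portion predicted taken, together with their targets. Direction prediction, BTB misses and Intel's real (undisclosed) entry format are ignored, and the names `predict_next` and `portion_base` are ours.

```python
PORTION = 32  # bytes of code covered by one prediction


def portion_base(addr: int) -> int:
    """Align an address down to the start of its 32-byte portion."""
    return addr & ~(PORTION - 1)


def predict_next(fetch_addr: int, btb: dict[int, list[tuple[int, int]]]):
    """Return (branch_addr, next_fetch_addr) for one prediction cycle.

    btb (hypothetical layout) maps a portion base address to
    (branch_addr, target) pairs for branches predicted taken.
    branch_addr is None when no taken branch is predicted in the portion.
    """
    base = portion_base(fetch_addr)
    for branch_addr, target in sorted(btb.get(base, [])):
        # Branches before the entry point (e.g. after jumping into the
        # middle of a portion) are not on the execution path.
        if branch_addr >= fetch_addr:
            return branch_addr, target
    # No taken branch predicted: continue with the next sequential portion.
    return None, base + PORTION


if __name__ == "__main__":
    btb = {0x1000: [(0x1010, 0x2008)]}
    addr = 0x1000
    for _ in range(3):
        branch, addr = predict_next(addr, btb)
        print(hex(addr))  # prints 0x2008, then 0x2020, then 0x2040
```

Note that after the jump to 0x2008 the model simply continues with the next aligned portion, which is what "jumps to the next chunk in a row" amounts to when no taken branch is recorded.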

In Nehalem (as in other modern architectures), the BTB is a two-level hierarchy: a small, "fast" L1 and a large, "slow" L2. The reason is the same as for having several cache levels: a single-level solution would be too much of a compromise in every parameter (size, latency, power consumption, etc.). In SB, however, the architects decided on a single level, twice the size of Nehalem's L2 BTB, i.e. probably at least 4096 entries, exactly as many as in Atom. (Note that the size of the most frequently executed code is slowly growing and is less and less likely to fit in the instruction cache, whose size has been the same in all Intel CPUs since the first Pentium M.) In theory, this increases the area occupied by the BTB, and since changing the total area is not recommended (one of the initial postulates of the architecture), something would have to be taken away from some other structure. Then there is speed. Given that SB has to be designed for a slightly higher clock speed on the same process, one would expect this large structure to become the bottleneck of the entire pipeline, unless it is pipelined as well (two stages would suffice). In that case, however, the total number of transistors switching per cycle in the BTB would double, which does nothing for energy saving. A dead end again? Intel's answer is that the new BTB stores addresses in some kind of compressed form, which allows twice as many entries with similar area and power consumption. But this cannot be verified yet.

Now let's look from the other side. SB did not receive new prediction algorithms but optimized versions of the old ones: the general predictor and those for indirect jumps, loops and returns. Nehalem has an 18-bit GBHR and a BHT of unknown size. It is safe to say, however, that the table has fewer than 2^18 entries, otherwise it would take up most of the core. Therefore, a special hash function folds the 18 bits of global branch history and bits of the instruction address into a shorter index. Most likely there are at least two hashes: one over all GBHR bits and one over the bits that reflect the behavior of the hardest-to-predict branches. The success of the predictor as a whole depends on how evenly these hashes scatter the various behavior patterns across BHT entries. Although Intel does not say so explicitly, it has certainly improved the hashes so that a longer GBHR fills the table equally efficiently. But we can only guess about the size of the BHT, as well as about how the power consumption of the predictor as a whole has actually changed... As for the return stack buffer (RSB), it still holds 16 addresses, but a new restriction has been introduced on the calls themselves: no more than four per 16 bytes of code.
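To make the idea of folding a long history into a short table index concrete, here is a textbook gshare-style predictor with XOR folding. It is not Intel's hash, which is undisclosed: the 18-bit history length comes from the text, while the 2^12-entry table, the XOR folding and the 2-bit counters are our own illustrative assumptions.

```python
GBHR_BITS = 18   # Nehalem's global history length, per the text
INDEX_BITS = 12  # hypothetical BHT size: 2**12 entries (assumption)


def fold(value: int, width: int, out_bits: int) -> int:
    """XOR-fold a width-bit value down to out_bits bits."""
    mask = (1 << out_bits) - 1
    result = 0
    while width > 0:
        result ^= value & mask
        value >>= out_bits
        width -= out_bits
    return result


class FoldedHistoryPredictor:
    """gshare-style predictor: 2-bit counters indexed by hash(history, PC)."""

    def __init__(self) -> None:
        self.gbhr = 0
        self.counters = [1] * (1 << INDEX_BITS)  # start weakly not-taken

    def _index(self, pc: int) -> int:
        # Fold 18 history bits into 12, then mix in low address bits.
        history = fold(self.gbhr, GBHR_BITS, INDEX_BITS)
        return history ^ (pc & ((1 << INDEX_BITS) - 1))

    def predict(self, pc: int) -> bool:
        return self.counters[self._index(pc)] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = self._index(pc)
        c = self.counters[i]
        self.counters[i] = min(3, c + 1) if taken else max(0, c - 1)
        # Shift the outcome into the global history, keeping GBHR_BITS bits.
        self.gbhr = ((self.gbhr << 1) | int(taken)) & ((1 << GBHR_BITS) - 1)
```

Because each history pattern lands in its own counter, even a strictly alternating branch (taken, not taken, taken...) becomes predictable after a short warm-up. How well different patterns are spread over the table is exactly the "hash quality" question raised above.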

Before going further, a word about a slight discrepancy between the declared theory and the observed practice: tests showed that the loop predictor has been removed from SB, so the final jump back to the start of a loop is predicted by the general algorithm, i.e. worse. An Intel representative assured us that it could not have gotten "worse", however...

Decoding and IDQ

The addresses of instructions to be executed, predicted in advance (alternately for each thread when Hyper-Threading is enabled), are checked for presence in the L1 instruction cache (L1I) and the uop cache (L0m). We will say nothing about the latter for now and describe the rest of the front end first. Oddly enough, Intel kept the size of the instruction portion read from L1I at 16 bytes (the word "portion" is used here in the sense of our terminology). This has long been an obstacle for code whose average instruction length has grown to about 4 bytes: the 4 instructions that should ideally be executed per cycle then no longer fit in 16 bytes. AMD solved this problem in the K10 architecture by widening the instruction portion to 32 bytes, even though its CPUs so far have no more than 3 pipelines. In SB, the size mismatch has a side effect: the predictor outputs the address of the next 32-byte block, and if a (presumably) taken branch is found in its first half, there is no need to read and decode the second half, yet it is done anyway.
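The arithmetic behind this mismatch is simple enough to write down. The sketch below assumes the block is entered at its first byte and models only the two facts stated above: 16 bytes are read per cycle, and both halves of a 32-byte predicted block are read even when a taken branch ends in the first half. The function names are ours, and the instruction count is only a rough average-length estimate.

```python
FETCH_BYTES = 16    # bytes read from L1I per cycle (per the text)
PREDICT_BYTES = 32  # bytes covered by one branch prediction


def max_whole_instructions(avg_len: float) -> int:
    """Rough count of instructions of a given average length per fetch."""
    return int(FETCH_BYTES // avg_len)


def fetch_block(taken_branch_end=None):
    """Model reading one predicted 32-byte block as two 16-byte halves.

    taken_branch_end: offset (0-31) of the last byte of a branch predicted
    taken, or None. Both halves are read regardless, as described above.
    Returns (fetch_cycles, useful_bytes, wasted_bytes).
    """
    fetch_cycles = PREDICT_BYTES // FETCH_BYTES
    useful = PREDICT_BYTES if taken_branch_end is None else taken_branch_end + 1
    return fetch_cycles, useful, PREDICT_BYTES - useful
```

With an average length of exactly 4 bytes, four instructions just fit; at 4.5 bytes only three do. And a taken branch ending at byte 10 still costs two fetch cycles, of which 21 bytes are wasted.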

From L1I, the portion goes to the predecoder, and from there to the instruction length decoder itself, which processes up to 7 or 6 instructions per cycle (with and without …, respectively; Nehalem could do at most 6), depending on their total length and complexity. Right after a jump, processing begins with the instruction at the target address; otherwise, it begins with the byte at which the predecoder stopped a cycle earlier. The end point is similar: it is either a (presumably) taken branch, the address of whose last byte came from the BTB, or the last byte of the portion itself, unless the limit of 7 instructions per cycle is reached first or an "inconvenient" instruction is encountered. Most likely, the length decoder's buffer holds only 2-4 portions, but the length decoder can take any 16 consecutive bytes from it. For example, if 7 two-byte instructions are recognized at the beginning of a portion, then in the next cycle 16 more bytes can be processed, starting from the 15th.
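The sliding 16-byte window can be modeled as follows. This is a sketch under simplifying assumptions: the buffer always holds enough bytes, the limit is fixed at 7 instructions per cycle, and taken branches and "inconvenient" instructions are ignored. Instruction lengths are given up front, and `length_decode` is a hypothetical name.

```python
def length_decode(lengths: list[int], window: int = 16,
                  max_per_cycle: int = 7) -> list[list[tuple[int, int]]]:
    """Group instructions into per-cycle batches of (offset, length)."""
    cycles = []
    offset = 0
    i = 0
    while i < len(lengths):
        start = offset  # any 16 consecutive bytes can be taken from the buffer
        batch = []
        while (i < len(lengths) and len(batch) < max_per_cycle
               and offset + lengths[i] <= start + window):
            batch.append((offset, lengths[i]))
            offset += lengths[i]
            i += 1
        if not batch:
            raise ValueError("instruction longer than the decode window")
        cycles.append(batch)
    return cycles


if __name__ == "__main__":
    for n, batch in enumerate(length_decode([2] * 7 + [4] * 4)):
        print(n, batch[0][0], len(batch))  # prints "0 0 7", then "1 14 4"
```

The second cycle starts at offset 14, i.e. the 15th byte, which matches the example in the text: seven two-byte instructions hit the per-cycle limit, and the next window begins right after them rather than at the next aligned 16-byte boundary.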

Among other things, the length decoder detects pairs of instructions eligible for macro-fusion. We will discuss the pairs themselves a little later; for now, note that, as in Nehalem, at most one such pair can be detected per cycle, although up to 3 pairs (plus one single instruction) could in principle be marked among 7 instructions. However, measuring instruction lengths is a partly serial process, so detecting several macro-fusion pairs in one cycle would not be feasible.

The marked instructions go into one of two instruction queues (IQ), one per thread, holding 20 instructions each (2 more than in Nehalem). The decoder reads instructions from the queues alternately and translates them into uops. It has 3 simple translators (1 instruction into 1 uop, or, with macro-fusion, 2 instructions into 1 uop), one complex translator (1 instruction into 1-4 uops, or 2 instructions into 1 uop) and a microsequencer for the most complex instructions, which require 5 or more uops from the microcode ROM. The ROM stores only the "tails" of each sequence, starting from the 5th uop, because the first 4 are issued by the complex translator. Moreover, if the number of uops in a microcode sequence is not divisible by 4, its last group of four will be incomplete, and 1-3 uops from the translators cannot be inserted into that same cycle. The decoded uops go to the uop cache and to two queues (one per thread). The latter (officially called IDQ, instruction decode queue) still hold 28 uops each and can lock a loop if its body fits there.
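A rough decode-throughput estimate follows from these translator counts. The sketch assumes, as in earlier P6-derived decoders, that only the first instruction of each cycle's group can go to the complex translator, so a multi-uop instruction in a later slot waits for the next cycle. Macro-fusion and any cap on total uops per cycle are ignored, and `decode_cycles` is our own name.

```python
import math

SIMPLE_TRANSLATORS = 3  # 1 instruction -> 1 uop each
COMPLEX_MAX_UOPS = 4    # complex translator: 1 instruction -> 1..4 uops
MSROM_GROUP = 4         # microcode ROM delivers the tail 4 uops per cycle


def decode_cycles(uop_counts: list[int]) -> int:
    """Estimate decode cycles for instructions with the given uop counts."""
    cycles = 0
    i = 0
    while i < len(uop_counts):
        first = uop_counts[i]  # goes to the complex translator (assumption)
        i += 1
        cycles += 1
        if first > COMPLEX_MAX_UOPS:
            # Uops 1-4 come from the complex translator, the tail from the
            # microcode ROM; an incomplete last group is not topped up.
            cycles += math.ceil((first - COMPLEX_MAX_UOPS) / MSROM_GROUP)
            continue
        # Fill the simple translators with following single-uop instructions;
        # a multi-uop instruction has to wait for the next cycle.
        used = 0
        while (i < len(uop_counts) and used < SIMPLE_TRANSLATORS
               and uop_counts[i] == 1):
            i += 1
            used += 1
    return cycles
```

For example, `[2, 1, 1, 1, 1]` takes two cycles, while `[1, 2]` also takes two because the 2-uop instruction cannot use a simple translator. A 9-uop instruction occupies three cycles: one from the complex translator and two from the ROM, the last of them carrying a single uop.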

All of this (except the uop cache) was already in Nehalem. So what are the differences? First of all, obviously, the decoder has been taught to handle the new AVX instruction subset. Support for all SSE versions is no longer surprising, and the encryption acceleration instructions (including PCLMULQDQ) were already added in Westmere (the 32 nm version of Nehalem). A pitfall has appeared, though: micro-fusion does not work for instructions that have both an immediate operand and RIP-relative addressing (the address is computed relative to the instruction pointer, the usual way of accessing data in 64-bit code). Such instructions need 2 uops (a separate load and operation), which means the decoder will process at most one of them per cycle, using only the complex translator. Intel claims these sacrifices were made to save energy, but it is unclear what is being saved: allocating, executing and retiring two uops will clearly take more resources, and therefore consume more energy, than one.

Macro-fusion has been improved. Previously, the first instruction of a fused pair could only be a comparison (CMP) or a logical test (TEST); now simple addition and subtraction (ADD, SUB, INC, DEC) and logical AND are also allowed as the instruction that sets the flags for the jump (the second instruction of the pair). This lets the last 2 instructions of almost any loop be reduced to 1 uop. Restrictions on fused instructions remain, of course, but they are not critical, because the following conditions almost always hold for such a pair:

- the first operand of the first instruction must be a register;

- if the second operand of the first instruction is in memory, RIP-relative addressing is invalid;

- the second instruction must not start at or cross a cache line boundary.

The rules for the jump condition are:

- only TEST and AND are compatible with any condition;

- jumps on (not) equal and all signed comparisons are compatible with any allowed first instruction;

- jumps on (not) carry and all unsigned comparisons are incompatible with INC and DEC;

- the remaining conditions (sign, overflow, parity and their negations) are allowed only with TEST and AND.
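The two lists above translate directly into a predicate. The sketch below encodes only the rules stated in this article. The keyword parameters are hypothetical stand-ins for operand and placement properties that a real decoder would extract itself (`jcc_at_line_boundary` means "starts at or crosses a cache line boundary"), and the condition-code sets include the usual assembler aliases.

```python
EQUALITY = {"JE", "JZ", "JNE", "JNZ"}
SIGNED = {"JL", "JNGE", "JGE", "JNL", "JLE", "JNG", "JG", "JNLE"}
UNSIGNED = {"JB", "JC", "JNAE", "JAE", "JNB", "JNC", "JBE", "JNA", "JA", "JNBE"}
OTHER = {"JS", "JNS", "JO", "JNO", "JP", "JPE", "JNP", "JPO"}
ALL_JCC = EQUALITY | SIGNED | UNSIGNED | OTHER
FUSIBLE_FIRST = {"CMP", "TEST", "ADD", "SUB", "INC", "DEC", "AND"}


def can_macro_fuse(first: str, jcc: str, *, dst_is_register: bool = True,
                   src_is_rip_relative_mem: bool = False,
                   jcc_at_line_boundary: bool = False) -> bool:
    """Check the macro-fusion rules listed above for a flag-setter + Jcc pair."""
    first, jcc = first.upper(), jcc.upper()
    if first not in FUSIBLE_FIRST or jcc not in ALL_JCC:
        return False
    # Operand and placement restrictions.
    if not dst_is_register or src_is_rip_relative_mem or jcc_at_line_boundary:
        return False
    if first in ("TEST", "AND"):
        return True  # compatible with any condition
    if jcc in EQUALITY or jcc in SIGNED:
        return True  # compatible with any allowed first instruction
    if jcc in UNSIGNED:
        return first not in ("INC", "DEC")  # INC/DEC leave CF unchanged
    return False  # sign/overflow/parity: TEST and AND only
```

So a typical loop tail such as `DEC ECX` / `JNZ loop` fuses, whereas `DEC ECX` / `JB` does not, because INC and DEC do not modify the carry flag that unsigned conditions test.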

The main change in the uop queues is that micro-fused uops whose memory access requires reading an index register (and a few other rare types) are split into pairs when written to the IDQ. Even if there are 4 such uops, all 8 resulting ones will be written to the IDQ. This is done because the uop queue (IDQ), the reorder buffer (ROB) and the reservation queues now use a shortened uop format without the 6-bit index field (naturally, to save on moving uops around). Such cases are expected to be rare, so performance should not suffer much.
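The two uop-count penalties described above (micro-fusion refused for RIP-relative addressing with an immediate, and indexed micro-fused uops split on entry to the IDQ) can be combined in one small model. It covers only load+op instructions and only these two rules; the `LoadOpInstr` type and both function names are hypothetical, chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class LoadOpInstr:
    text: str                     # for readability only
    rip_relative: bool = False    # memory operand addressed relative to RIP
    has_immediate: bool = False   # instruction also carries a constant
    uses_index_reg: bool = False  # memory operand like [base + index*scale]


def decoded_uops(ins: LoadOpInstr) -> int:
    """Fused-domain uops produced by the decoder for a load+op instruction."""
    if ins.rip_relative and ins.uses_index_reg:
        raise ValueError("RIP-relative addressing cannot use an index register")
    if ins.rip_relative and ins.has_immediate:
        return 2  # micro-fusion refused: separate load and operation uops
    return 1


def idq_entries(ins: LoadOpInstr) -> int:
    """IDQ slots taken once indexed micro-fused uops are split in two."""
    n = decoded_uops(ins)
    if n == 1 and ins.uses_index_reg:
        return 2  # the shortened uop format has no room for the index field
    return n
```

Four instructions like `ADD EAX, [RSI+RDI*4]` therefore leave the decoder as 4 fused uops but occupy 8 IDQ entries, while `CMP DWORD PTR [RIP+flag], 0` is already 2 uops at the decoder.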

We will describe how the loop-locking mode in this buffer came about below; here we will only point out one detail: the jump back to the start of the loop used to cost 1 extra cycle, forming a "bubble" between reading the end and the start of the loop, and now it is gone. However, the last uops of the current iteration and the first uops of the next one cannot share the group of four uops read per cycle, so ideally the number of uops in a loop should be divisible by 4. The criteria for locking a loop have changed little:

- the loop's uops must come from no more than eight 32-byte portions of code;

- these portions must be cached in L0m (in Nehalem, of course, in L1I);

- up to 8 branches predicted taken are allowed (including the final one);

- calls and returns are not allowed;

- unbalanced stack operations are not allowed (most often an unequal number of PUSH and POP instructions); more on that below.
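The locking criteria and the "divisible by 4" remark can be checked mechanically. The sketch takes the 28-uop IDQ capacity and the limits from the text; the `LoopInfo` fields are a hypothetical summary of a loop that a real tool would have to derive from the code itself.

```python
import math
from dataclasses import dataclass

IDQ_UOPS = 28       # per-thread IDQ capacity given in the text
MAX_PORTIONS = 8    # 32-byte code portions
MAX_TAKEN = 8       # branches predicted taken, including the final one
UOPS_PER_CYCLE = 4  # uops read from the IDQ per cycle


@dataclass
class LoopInfo:
    uops: int
    portions_32b: int
    taken_branches: int
    portions_in_uop_cache: bool
    has_call_or_ret: bool
    push_pop_balance: int  # pushes minus pops per iteration


def can_lock(loop: LoopInfo) -> bool:
    """Apply the loop-locking criteria listed above."""
    return (loop.uops <= IDQ_UOPS
            and loop.portions_32b <= MAX_PORTIONS
            and loop.taken_branches <= MAX_TAKEN
            and loop.portions_in_uop_cache
            and not loop.has_call_or_ret
            and loop.push_pop_balance == 0)


def read_cycles_per_iteration(uops: int) -> int:
    # The end of one iteration and the start of the next cannot share a
    # 4-uop group, so each iteration is rounded up to whole groups.
    return math.ceil(uops / UOPS_PER_CYCLE)
```

An 8-uop loop is read in 2 cycles per iteration, but adding a single uop raises this to 3, which is why a uop count divisible by 4 is ideal.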

Stack engine

There is one more mechanism whose operation we have not covered in previous articles: the stack engine (stack pointer tracker), located before the IDQ. It appeared in the Pentium M and has not changed since. Its essence is that the stack pointer (the ESP/RSP register in 32/64-bit mode) is modified by the instructions that work with it (PUSH, POP, CALL and RET) using a separate adder; the result is stored in a special register and passed to the uop as a constant. This replaces modifying the pointer in the ALU after each instruction, as the architecture formally requires and as Intel CPUs did before the Pentium M.

This continues until some instruction accesses the pointer directly (and in a few other rare cases). The stack engine then checks the accumulated offset for zero and, if it is non-zero, inserts a synchronization uop into the stream before the instruction that reads the pointer; this uop writes the actual value from the special register into the pointer (and the special register is reset). Since this is rarely needed, most stack accesses that modify the pointer only implicitly use its shadow copy, which is updated in parallel with other operations. That is, from the point of view of the pipeline blocks, such instructions are encoded as a single fused uop and are no different from ordinary memory accesses, requiring no ALU processing.
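A toy model shows how the pending offset and the synchronization uop interact. It is a sketch of the behavior described above, not Intel's design: it assumes 64-bit mode (8-byte stack slots), describes uops as plain strings, and leaves out the jump part of CALL and RET. The class name and the `reads_rsp_explicitly` flag are our own.

```python
SLOT = 8  # bytes per PUSH/POP in 64-bit mode


class StackEngine:
    """Toy model of a stack pointer tracker (not Intel's actual design)."""

    def __init__(self) -> None:
        self.delta = 0  # offset accumulated since RSP was last synchronized

    def issue(self, mnemonic: str, reads_rsp_explicitly: bool = False) -> list[str]:
        """Return simplified uop descriptions for one instruction."""
        uops = []
        if reads_rsp_explicitly and self.delta != 0:
            # An instruction such as MOV RBP, RSP needs the architectural
            # RSP, so a sync uop applies the pending offset first.
            uops.append(f"sync: RSP += {self.delta}")
            self.delta = 0
        op = mnemonic.upper()
        if op in ("PUSH", "CALL"):
            # Pre-decrement: the store goes to the new top of stack.
            self.delta -= SLOT
            uops.append(f"{op}: store [RSP{self.delta:+d}]")
        elif op in ("POP", "RET"):
            # Post-increment: the load comes from the current top of stack.
            uops.append(f"{op}: load [RSP{self.delta:+d}]")
            self.delta += SLOT
        else:
            uops.append(op)
        return uops


if __name__ == "__main__":
    se = StackEngine()
    for ins in ("PUSH", "PUSH", "POP"):
        print(se.issue(ins))
    print(se.issue("MOV", reads_rsp_explicitly=True))
```

Each PUSH and POP yields just one memory uop with a constant offset and no ALU uop for RSP; only the explicit RSP read costs an extra synchronization uop (`sync: RSP += -8` in this run), after which the offset starts from zero again.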