Server virtualization may seem daunting, but this guide will help demystify it and show you how to take the first steps. By Paul Venice

The benefits of server virtualization are now so significant that there is little doubt about the need to adopt it. First of all, server virtualization uses computing resources far more efficiently than physical servers, because several virtual servers run on one physical computer at the same time. You might be surprised how many general-purpose virtual servers a single modern computer can run simultaneously.

Another major benefit of virtualization is the ability to move running virtual servers between physical hosts to balance load and simplify maintenance. You can use snapshots to save the current state of running servers before making any configuration changes (for example, before a software update). If something goes wrong, you revert to the saved snapshot, and the server continues to run as if no changes had been made. This approach clearly saves a lot of time and effort.
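To make the snapshot mechanism concrete, here is a minimal Python sketch of the copy-on-write idea that many hypervisors use in some form: after a snapshot is taken, new writes go to a separate delta layer instead of the original disk blocks, so reverting simply throws the delta away. The `VirtualDisk` class and its methods are hypothetical and deliberately simplified (one snapshot level, blocks kept in a dictionary); real platforms store deltas in their own disk formats.

```python
class VirtualDisk:
    """Toy virtual disk with a single snapshot level (hypothetical, for illustration)."""

    def __init__(self, blocks):
        self.base = dict(blocks)  # block number -> contents
        self.delta = None         # writes made since the snapshot; None = no snapshot

    def snapshot(self):
        # Fold any earlier changes into the base, then freeze it.
        if self.delta:
            self.base.update(self.delta)
        self.delta = {}

    def write(self, block, data):
        if self.delta is None:
            self.base[block] = data
        else:
            self.delta[block] = data  # copy-on-write: the frozen base is never modified

    def read(self, block):
        if self.delta is not None and block in self.delta:
            return self.delta[block]
        return self.base[block]

    def revert(self):
        # Discard everything written since the snapshot; the snapshot stays in place.
        if self.delta is None:
            raise RuntimeError("no snapshot to revert to")
        self.delta = {}


disk = VirtualDisk({0: b"app v1", 1: b"config"})
disk.snapshot()                   # before the software update
disk.write(0, b"app v2 (broken)")
disk.revert()                     # the update went wrong
print(disk.read(0))               # b'app v1'
```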

1. Start small on your desktop or laptop

As a rule, virtualization is deployed across entire server rooms, but it can also be applied in offices on a much smaller scale. All you need is a desktop or laptop computer.

Today's desktop and laptop PCs have plenty of resources that go unused during simple daily tasks such as reading email or browsing the web. If you occasionally need another operating system (for example, to run applications written for it), you can run it as a virtual machine on your local system without installing it on physical hardware.

This option is especially useful when old programs turn out to be incompatible with a newer operating system. VirtualBox is a good free option for this on a PC.

2. Set up a small and, if possible, free testing lab

If you have recently decommissioned servers at your disposal, you can use them as the basis of a virtualization test lab. What matters most is that they have several gigabit network interfaces and as much RAM as possible. Virtualization places much greater demands on RAM than on processor resources, especially if the virtualization platform does not use memory-sharing techniques to make better use of physical memory.
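Memory-sharing techniques (for example, transparent page sharing in VMware ESX or KSM in Linux) rest on a simple observation: VMs running the same OS hold many identical memory pages, which only need to be stored once. The toy Python model below just counts the potential savings; it is not how any hypervisor actually scans memory, and the 4 KB page size and the sample data are assumptions.

```python
PAGE_SIZE = 4096  # bytes; a common x86 page size (assumption for this model)


def split_pages(memory, page_size=PAGE_SIZE):
    """Split a VM's memory image (bytes) into fixed-size pages."""
    return [memory[i:i + page_size] for i in range(0, len(memory), page_size)]


def memory_with_sharing(vm_images, page_size=PAGE_SIZE):
    """Return (bytes needed without sharing, bytes needed if identical pages are stored once).

    Images are assumed to be a whole number of pages long.
    """
    total_pages = 0
    unique_pages = set()
    for image in vm_images:
        for page in split_pages(image, page_size):
            total_pages += 1
            unique_pages.add(page)  # identical page contents collapse into one entry
    return total_pages * page_size, len(unique_pages) * page_size


# Two hypothetical VMs: three identical zero-filled pages each, plus one private page.
zero = bytes(PAGE_SIZE)
vm_a = zero * 3 + b"A" * PAGE_SIZE
vm_b = zero * 3 + b"B" * PAGE_SIZE
print(memory_with_sharing([vm_a, vm_b]))  # (32768, 12288)
```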

If no spare servers are available, you can buy an inexpensive new server for testing (again, with plenty of RAM). If you have spare parts on hand, try assembling a server from them. Such a machine is good enough to validate the chosen approach in the lab, but it should not be used in production.

When choosing virtualization software, try the options on a lab system first. If the lab server has several hard drives, install VMware ESXi, Microsoft Hyper-V, Citrix XenServer, or Red Hat RHEV on separate drives and boot from each in turn to find out which system best suits your needs. All of these packages are available as free versions or as trials with an evaluation period of 30 days or more.

3. Build your own shared storage

To realize the benefits of a virtualization environment spanning many physical servers, you need shared storage. If you want to be able to move virtual servers between physical hosts, for example, the storage for those virtual servers must reside on a shared device that both hosts can access.

Virtualization tools support various storage protocols: NFS, iSCSI, and Fibre Channel. For lab research or testing, simply add a few hard drives to a Windows or Linux system, share them over NFS or iSCSI, and connect the lab servers to these storage resources. If you want a more complete solution that you control yourself, try an open-source storage system such as FreeNAS. It offers an easy way to build storage from low-cost hardware for a lab or production network.

4. Allow enough time for lab testing

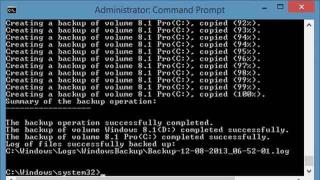

With shared storage and at least two physical servers, you have everything you need to build a complete virtualization platform. When evaluating the capabilities of different software packages, spend at least a week experimenting with each one. Be sure to test every feature that matters to you: live migration of virtual servers, snapshots, deployment and cloning of virtual servers, and high availability.

If possible, simulate production workloads in the lab to get an idea of how the system will perform in the real world. For example, you can deploy a database server and generate reports from a backup copy of a real data set, or use a benchmarking tool to measure the performance of a web application server. This will not only familiarize you with the day-to-day functions of the virtualization platform, but also help you understand what resources the virtual servers will need once they move into production.

5. Keep the lab running after the production system goes live

Once all this is done, you can define the specifications of the production environment. By now you understand the management tools and how the platform behaves under realistic conditions. However, it is too early to dismantle the lab.

When you start purchasing new equipment for your production infrastructure, refer back to the results of your lab tests: the hardware must be able to handle the virtual servers you plan to deploy.

Once the production system is built, the lab can be used to test new features, upgrades, and other changes before they reach production, helping keep the production platform stable.


Recently, many companies, both in IT and in other sectors, have begun to take a serious look at virtualization technologies. Home users have also discovered the reliability and convenience of virtualization platforms that let them run several operating systems in virtual machines at the same time. According to various IT market researchers, virtualization is currently one of the most promising technologies. The market for virtualization platforms and management tools is growing strongly: new players appear regularly, and large vendors keep acquiring small companies that develop virtualization platforms and tools for using virtual infrastructures more efficiently.

Meanwhile, many companies are not yet ready to invest heavily in virtualization, because they cannot accurately assess the economic benefit of adopting it and lack sufficiently qualified staff. In many Western countries there are already professional consultants who can analyze an IT infrastructure, prepare a plan for virtualizing a company's physical servers, and assess the project's profitability, but in Russia such specialists are still very few. The situation will of course change in the coming years: as companies come to appreciate the benefits of virtualization, specialists with enough knowledge and experience to implement it at various scales will appear. For now, many companies are only running local experiments with virtualization tools, mostly on free platforms.

Fortunately, in addition to commercial virtualization systems, many vendors also offer free platforms with limited functionality, so that companies can use virtual machines in part of their production environment while evaluating a move to more capable platforms. On the desktop, users are also starting to use virtual machines in their daily work and do not place great demands on virtualization platforms, so free tools are their first choice.

Leaders in the production of virtualization platforms

Virtualization tools at various levels of system abstraction have been developed for more than thirty years. However, only relatively recently has server and desktop hardware become powerful enough for operating system virtualization to be taken seriously. Over the years, many companies and enthusiasts have developed tools for virtualizing operating systems, but not all of them are still actively maintained or in a state suitable for effective use. Today, the leading vendors of virtualization tools are VMware, Microsoft, SWsoft (together with its Parallels company), XenSource, Virtual Iron, and InnoTek. Besides these vendors' products, there are also projects such as QEMU and Bochs, as well as virtualization tools built into operating systems by their developers (for example, Solaris Containers), which are not widely used and serve a narrow circle of specialists.

Companies that have achieved some success in the server virtualization market distribute some of their products for free, relying for revenue not on the platforms themselves but on the management tools without which large-scale use of virtual machines is difficult. Commercial desktop virtualization platforms designed for IT professionals and software companies are also significantly more powerful than their free counterparts.

However, if server virtualization is applied on a small scale, in the SMB (Small and Medium Business) sector, free platforms may well fill a niche in a company's production environment and provide significant cost savings.

When to Use Free Platforms

If your organization does not need mass deployment of virtual servers, constant monitoring of physical server performance under changing load, or high availability, you can run its internal servers in virtual machines on free platforms. As the number of virtual servers grows and they are consolidated more densely onto physical hosts, powerful tools for managing and maintaining the virtual infrastructure become necessary. If you need storage area networks (SANs), backup and disaster recovery tools, or live migration of running virtual machines to other hardware, free platforms may not be enough. Note, however, that free platforms are constantly updated and gain new features, which widens the range of their uses.

Another important consideration is technical support. Free virtualization platforms are either developed within the open-source community, where many enthusiasts take part in development and support, or released by a platform vendor. In the first case, users are expected to participate actively in the product's development and report bugs, and there is no guarantee that your problems will be solved; in the second case, technical support is usually not provided at all. Therefore, the staff deploying free platforms must be highly qualified.

Free desktop virtualization platforms are most useful for isolating user environments, decoupling them from specific hardware, learning about operating systems, and safely testing software. They are hardly suitable for large-scale software development or testing in software companies, since they lack the necessary functionality. For home use, however, free virtualization products are quite adequate, and there are even examples of virtual machines based on free desktop virtualization systems being used in production environments.

Free server virtualization platforms

Almost any organization with a server infrastructure needs both standard network services (DNS, DHCP, Active Directory) and several internal servers (applications, databases, corporate portals) that are not heavily loaded and are spread across different physical servers. These servers can be consolidated as virtual machines on a single physical host. This simplifies migrating servers from one hardware platform to another, reduces equipment costs, simplifies backup, and makes the servers easier to manage. Depending on the operating systems running the network services and your requirements for the virtualization system, you can choose a suitable free product for a corporate environment. When choosing a server virtualization platform, consider performance (which depends both on the virtualization technique and on the quality of the vendor's implementation of the platform's components), ease of deployment, scalability of the virtual infrastructure, and the availability of additional management, maintenance, and monitoring tools.
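Deciding how many hosts the consolidated servers need is essentially a bin-packing problem. Below is a minimal Python sketch, assuming RAM is the limiting resource (as noted earlier, virtualization is usually RAM-bound) and using the first-fit decreasing heuristic, which is simple but not guaranteed to find the optimal placement. The server names, RAM figures, and the 2 GB hypervisor reserve are hypothetical.

```python
def consolidate(servers, host_ram_gb, hypervisor_reserve_gb=2.0):
    """Place servers onto as few identical hosts as possible (first-fit decreasing).

    servers: dict mapping server name -> RAM it needs, in GB.
    Returns a list of hosts, each a list of server names.
    """
    capacity = host_ram_gb - hypervisor_reserve_gb  # RAM left for guests on each host
    hosts = []  # each entry: [free RAM in GB, list of server names]
    for name, ram in sorted(servers.items(), key=lambda item: item[1], reverse=True):
        if ram > capacity:
            raise ValueError(f"{name} needs {ram} GB, more than a single host offers")
        for host in hosts:
            if host[0] >= ram:  # first host with enough free RAM
                host[0] -= ram
                host[1].append(name)
                break
        else:  # no existing host fits: add a new one
            hosts.append([capacity - ram, [name]])
    return [names for _, names in hosts]


internal_servers = {"dns": 1, "dhcp": 0.5, "ad": 2, "db": 6, "portal": 3, "app": 4}
print(consolidate(internal_servers, host_ram_gb=16))
# [['db', 'app', 'portal', 'dns'], ['ad', 'dhcp']]
```

Sorting largest-first places the hardest-to-fit servers while hosts are still empty, which usually wastes less RAM than placing servers in arbitrary order.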

OpenVZ

Unfortunately, Microsoft recently announced that the virtualization built into Windows Server 2008 will not be available until mid-2008, so VMware still has plenty of time to capture an even larger share of the virtualization market.

Another advantage of Virtual Server is the ability to manage the virtualization server through Windows Management Instrumentation (WMI) and its tight integration with other Microsoft products and services. Virtual Server-based virtual machines can be managed through both thin and thick clients.

Of course, Virtual Server can host an internal infrastructure of virtual servers not only in the SMB sector but also in large organizations. It is not yet clear what the virtualization integrated into Windows Server 2008 will look like, but Microsoft is likely to become a strong competitor to VMware.

VMware Server

VMware is currently the undisputed leader in both server and desktop virtualization platforms. In server virtualization, VMware offers two fundamentally different products: the free VMware Server and the commercial VMware ESX Server. The first targets small and medium businesses, while the second is part of a solution for building a virtual infrastructure in a large organization. Although VMware Server is currently at version 1.0.3, VMware has been developing it for a long time: it was previously called VMware GSX Server. Since becoming free in 2006, the product has become immensely popular, not only as a server virtualization tool but also as a desktop virtualization platform for IT professionals and software companies.

VMware Server has all the capabilities needed to maintain a company's virtual infrastructure in the SMB sector. Both Windows and Linux can serve as host platforms, which allows virtualization to be used in a heterogeneous enterprise environment. The list of supported guest operating systems is very extensive, and the product is easy enough for a wide range of users. VMware Server supports 32-bit and 64-bit host and guest operating systems and allows remote management of virtual machines and of the virtualization server itself. It also supports Intel VT, provides APIs that let third-party applications interact with virtual machines, and can run as a service that starts with the host system. A virtual machine on VMware Server can have up to 4 virtual network interfaces and 3.6 GB of RAM and can be managed by multiple users. As the virtual server infrastructure matures, you may need additional management tools, which VMware VirtualCenter provides.

In convenience and ease of use, VMware Server is the undisputed leader, and its performance is on par with commercial platforms (especially on Linux hosts). Its drawbacks are the lack of live migration and of backup tools, although these are usually offered only by commercial platforms. At present, VMware Server is the best choice for quickly deploying an organization's internal servers, not least because ready-made virtual server templates are available in abundance from various sources (for example, the Virtual Appliance Marketplace).
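When planning VMs for VMware Server, it helps to check each planned machine against the per-VM limits quoted above. A small Python sketch follows; the `PlannedVM` type and `check_vm` function are hypothetical, and the limits are taken from this review (3.6 GB treated as 3600 MB), so verify them against the documentation for your exact version.

```python
from dataclasses import dataclass

# Per-VM limits of VMware Server as given in this review.
MAX_VIRTUAL_NICS = 4
MAX_RAM_MB = 3600  # 3.6 GB


@dataclass
class PlannedVM:
    name: str
    ram_mb: int
    nics: int


def check_vm(vm):
    """Return a list of limit violations; an empty list means the VM fits."""
    problems = []
    if vm.ram_mb > MAX_RAM_MB:
        problems.append(f"{vm.name}: {vm.ram_mb} MB of RAM exceeds {MAX_RAM_MB} MB")
    if vm.nics > MAX_VIRTUAL_NICS:
        problems.append(f"{vm.name}: {vm.nics} virtual NICs exceed the limit of {MAX_VIRTUAL_NICS}")
    return problems


for vm in [PlannedVM("dns", 512, 1), PlannedVM("db", 4096, 2)]:
    print(vm.name, check_vm(vm) or "OK")
```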

Results

Summing up this review of free server virtualization platforms, each of them currently occupies its own niche in the SMB sector, where virtual machines can significantly increase the efficiency of the IT infrastructure, make it more flexible, and reduce hardware costs. Above all, free platforms let you evaluate virtualization in practice rather than on paper and experience the benefits of this technology firsthand. In conclusion, here is a summary table of the characteristics of free virtualization platforms to help you choose the right server platform for your purposes. After all, free virtualization is the path toward further investment in virtualization projects based on commercial systems.

| Platform, developer | Host OS | Officially supported guest OS | Multiple virtual processors (Virtual SMP) | Virtualization technique | Typical use | Performance |
|---|---|---|---|---|---|---|
| OpenVZ (open-source community project supported by SWsoft) | Linux | Various Linux distributions | Yes | Operating system-level virtualization | Isolation of virtual servers (including for hosting services) | No loss |
| Virtual Iron 3.7 (Virtual Iron Software, Inc.) | Not required | Windows, Red Hat, SUSE | Yes (up to 8) | | Server virtualization in a production environment | Close to native |
| Virtual Server 2005 R2 SP1 (Microsoft) | Windows | Windows, Linux (Red Hat and SUSE) | No | Native virtualization, hardware virtualization | Virtualization of internal servers in a corporate environment | Close to native (with Virtual Machine Additions installed) |
| VMware Server (VMware) | Windows, Linux | DOS, Windows, Linux, FreeBSD, NetWare, Solaris | Yes | Native virtualization, hardware virtualization | Small business server consolidation, development/testing | Close to native |
| Xen Express and Xen (XenSource, supported by Intel and AMD) | NetBSD, Linux, Solaris | Linux, NetBSD, FreeBSD, OpenBSD, Solaris, Windows, Plan 9 | Yes | Paravirtualization, hardware virtualization | Developers, testers, IT professionals, small business server consolidation | Close to native (some loss under heavy network and disk I/O) |


Virtual environment concept

A newer direction in virtualization that uses aggregation technology to give a holistic view of the entire network infrastructure.

Types of virtualization

Virtualization is a general term covering the abstraction of resources for many aspects of computing. The types of virtualization are listed below.

Software virtualization

Dynamic translation

With dynamic (binary) translation, problematic guest OS instructions are intercepted by the hypervisor and replaced with safe ones, after which control is returned to the guest OS.
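A toy Python model of the idea: before a block of guest code runs, the translator scans it and rewrites problematic (privileged) instructions into calls to the hypervisor, while safe instructions pass through untouched. Translators typically also cache translated blocks so each block is rewritten only once. The instruction names and the `hv_emulate_*` routines are hypothetical; real binary translators operate on x86 machine code, not text.

```python
# Hypothetical set of instructions a guest must not execute directly.
PRIVILEGED = {"cli", "sti", "write_cr3"}


def translate_block(block):
    """Rewrite a block of guest instructions before it is executed.

    Safe instructions are kept unchanged; privileged ones are replaced with a
    call into the hypervisor, which emulates their effect safely.
    """
    translated = []
    for insn in block:
        op = insn.split()[0]
        if op in PRIVILEGED:
            translated.append(f"call hv_emulate_{op}")  # hypothetical hypervisor routine
        else:
            translated.append(insn)
    return translated


class TranslationCache:
    """Cache translated blocks by guest address so each block is translated once."""

    def __init__(self):
        self._cache = {}
        self.misses = 0

    def get(self, address, block):
        if address not in self._cache:
            self.misses += 1
            self._cache[address] = translate_block(block)
        return self._cache[address]


guest_block = ["mov eax, 1", "cli", "add eax, 2", "sti"]
print(translate_block(guest_block))
```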

Paravirtualization

Paravirtualization is a virtualization technique in which guest operating systems are prepared to run in a virtualized environment by slightly modifying their kernels. Instead of directly using resources such as the memory page table, the operating system interacts with the hypervisor, which provides it with a guest API.

Paravirtualization achieves higher performance than dynamic translation.

Paravirtualization is applicable only if the guest operating systems are open source and their license permits modification, or if the hypervisor and the guest OS are developed by the same vendor with paravirtualization of the guest in mind. (If a hypervisor can itself run on top of a lower-level hypervisor, the hypervisor itself can also be paravirtualized.)
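The Python sketch below illustrates the difference: the modified guest kernel never writes the page table itself, but asks the hypervisor to do it through a call (a hypercall), and the hypervisor validates the request. It is loosely inspired by the hypercall interface of paravirtualized Xen guests, but the class and method names are hypothetical and the model ignores everything except page mappings.

```python
class Hypervisor:
    """Toy hypervisor that owns the real page tables and validates guest requests."""

    def __init__(self):
        self.page_tables = {}  # (vm_id, virtual page) -> physical frame
        self.owner = {}        # physical frame -> id of the VM that owns it

    def allocate_frames(self, vm_id, frames):
        for frame in frames:
            self.owner[frame] = vm_id

    def hypercall_map_page(self, vm_id, vpage, frame):
        # The check prevents one guest from mapping another guest's memory.
        if self.owner.get(frame) != vm_id:
            raise PermissionError(f"VM {vm_id} does not own frame {frame}")
        self.page_tables[(vm_id, vpage)] = frame


class ParavirtualizedKernel:
    """Guest kernel modified to call the hypervisor instead of writing page tables."""

    def __init__(self, vm_id, hypervisor):
        self.vm_id = vm_id
        self.hv = hypervisor

    def map_page(self, vpage, frame):
        self.hv.hypercall_map_page(self.vm_id, vpage, frame)


hv = Hypervisor()
hv.allocate_frames(1, [10, 11])
hv.allocate_frames(2, [20])
guest1 = ParavirtualizedKernel(1, hv)
guest1.map_page(0, 10)  # allowed: frame 10 belongs to VM 1
```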

The term first appeared in the Denali project.

Built-in virtualization

Advantages:

- Sharing of resources between both operating systems (directories, printers, etc.).

- A convenient interface for application windows from different systems (overlapping application windows, window minimization that works the same way as in the host system).

- When fine-tuned for the hardware platform, performance differs little from that of the native OS.

- Fast switching between systems (less than 1 second).

- A simple procedure for updating the guest OS.

- Two-way virtualization (applications from one system run on the other, and vice versa).

Implementations:

Hardware virtualization

Advantages:

- Simplifies the development of virtualization platforms by providing hardware-level management interfaces and support for virtualized guests, which reduces the complexity and development time of virtualization systems.

- Can improve the performance of virtualization platforms: guest systems are managed directly by a thin software layer, the hypervisor, which increases performance.

- Improves security: several independent virtualization platforms can run and be switched between at the hardware level. Each virtual machine works independently in its own space of hardware resources, completely isolated from the others. This eliminates the performance overhead of maintaining a host platform and improves security.

- The guest system is not tied to the architecture of the host platform or to the implementation of the virtualization platform. Hardware virtualization makes it possible to run 64-bit guests on 32-bit host systems (with 32-bit host virtualization environments).
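On Linux x86 systems you can check whether the processor offers this hardware virtualization support: Intel VT-x shows up as the `vmx` CPU flag and AMD-V as `svm` in `/proc/cpuinfo`. A minimal Python sketch follows; the function name is hypothetical, and the flag only reports what the CPU supports, so the feature may still be disabled in the BIOS/firmware.

```python
from typing import Optional


def hardware_virtualization(cpuinfo_text: str) -> Optional[str]:
    """Return 'Intel VT-x', 'AMD-V', or None based on the 'flags' lines of /proc/cpuinfo."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:  # present on Linux only
            print(hardware_virtualization(f.read()) or "no hardware virtualization flags found")
    except FileNotFoundError:
        print("/proc/cpuinfo not available (not a Linux system)")
```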

Application examples:

- Test labs and training: virtual machines are a convenient place to test applications that change operating system settings, such as installers. Because virtual machines are easy to deploy, they are often used for training on new products and technologies.

- Distribution of pre-installed software: many software developers create ready-made virtual machine images with their products pre-installed and provide them free of charge or commercially. Such services are offered by VMware VMTN and Parallels PTN.

Server virtualization

- Placement of several logical servers on one physical server (consolidation).

- Combining several physical servers into one logical server to solve a specific problem. Examples: Oracle Real Application Clusters, grid technology, high-performance clusters.

- SVISTA

- twoOStwo

- Red Hat Enterprise Virtualization for Servers

- PowerVM

In addition, server virtualization simplifies the recovery of failed systems on any available computer, regardless of its specific configuration.

Workstation virtualization

Resource virtualization

- Resource partitioning. Resource virtualization can be thought of as dividing a single physical server into several parts, each of which appears to its owner as a separate server. This is not virtual machine technology; it is implemented at the OS kernel level.

On systems with a type 2 hypervisor, both operating systems (guest and host) consume physical resources and require separate licenses. Virtual servers running at the OS kernel level lose almost no performance, which makes it possible to run hundreds of virtual servers on one physical server without additional licenses.

Disk space or network bandwidth can likewise be partitioned into a number of smaller, more easily used resources of the same type.

An example of resource partitioning is Project Crossbow, which allows you to create several virtual network interfaces on top of one physical interface.

- Aggregation, distribution, or pooling of many resources into larger ones. For example, symmetric multiprocessor systems combine multiple processors; RAID and disk managers combine many disks into one large logical disk; RAID and network equipment use multiple channels bundled together to appear as a single broadband channel. At the meta level, computer clusters do all of the above. This category sometimes also includes network file systems abstracted from the data stores on which they are built, for example VMware VMFS, Solaris/OpenSolaris ZFS, and NetApp WAFL.
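As an example of aggregation, RAID 0 (striping) presents several disks as one logical disk by spreading consecutive chunks across them. A Python sketch of the address mapping, assuming a plain round-robin layout with a fixed stripe unit; real RAID controllers and volume managers may use different layouts and metadata.

```python
def raid0_locate(logical_block, num_disks, stripe_blocks):
    """Map a logical block of a RAID 0 volume to (disk index, block on that disk)."""
    if num_disks < 1 or stripe_blocks < 1:
        raise ValueError("need at least one disk and a positive stripe size")
    chunk, offset = divmod(logical_block, stripe_blocks)  # which stripe unit, and where inside it
    disk = chunk % num_disks                              # stripe units rotate across the disks
    row = chunk // num_disks                              # complete stripes before this unit
    return disk, row * stripe_blocks + offset


# Three disks, stripe unit of 4 blocks: logical block 13 lands on disk 0, block 5.
print(raid0_locate(13, num_disks=3, stripe_blocks=4))  # (0, 5)
```

Because consecutive stripe units sit on different disks, a large sequential read is served by all disks in parallel, which is where the aggregated bandwidth comes from.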

Application virtualization

Advantages:

- isolation of application execution: absence of incompatibilities and conflicts;

- a pristine state every time: the registry is not cluttered and no configuration files are left behind (important for servers);

- lower resource costs compared to emulating the entire OS.


Wikimedia Foundation, 2010.


Several approaches are used to virtualize operating systems; by type of implementation, they fall into software and hardware methods.

Let's consider each of these types of virtualization separately. Let's start with software methods.

In dynamic translation, the hypervisor intercepts instructions from the guest operating system, modifies them, and returns control to the guest OS. The guest operating system effectively becomes one of the applications of the host operating system from which it is launched, while behaving as though it were running on a real physical platform.

Paravirtualization is a virtualization technology in which guest operating systems are prepared for execution in a virtualized environment by slightly modifying their kernels. The operating system interacts with the hypervisor, which provides it with a guest API, so that different virtual machines can work with the hardware without conflicting with each other. Paravirtualization achieves higher performance than dynamic translation. Its main disadvantage is that it is applicable only if the guest operating systems are open source and their license permits modification, or if the hypervisor and the guest operating system are developed by the same vendor with paravirtualization of the guest in mind (if a hypervisor can run on top of a lower-level hypervisor, the hypervisor itself can also be paravirtualized). Its advantages include not needing a full-fledged operating system as the host: a special system, the hypervisor, is enough. As a result, virtual environments use hardware resources more efficiently, since they work with the hardware almost directly, with little or no mediation by a host operating system.

Figure 1. Paravirtualization diagram

Full virtualization uses unmodified instances of guest operating systems. To support these guests, a common emulation layer runs on top of the host operating system. This technology is used, for example, in VMware Workstation, Parallels Desktop, MS Virtual PC, and Virtual Iron. Its advantages include relative ease of implementation; the solution is also quite reliable and versatile, and all control functions are handled by the host operating system. Its disadvantages are a high additional load on hardware resources and limited flexibility in using the hardware.

Figure 2. Full virtualization diagram

Embedded virtualization is a newer method that relies on hardware-assisted virtualization and allows users to combine any version of an OS with various operating environments. In essence, embedded virtualization is full virtualization implemented at the hardware level. This approach was implemented in the BlueStacks Multi-OS project.

Figure 3. Operating system virtualization diagram

The most common form of virtualization today is operating system virtualization, in which several operating systems run on the same hardware. Its main advantage is highly efficient use of hardware resources. The principle of operation is shown schematically in Figure 3.

Application virtualization turns an application that would normally have to be installed into the operating system into a standalone application that needs no installation. While the virtualized application is being installed, the virtualizer determines which operating system components it needs and emulates them. The result is a specialized environment for that specific application that fully isolates its operation. To create such an application, the virtualized software is placed in a special folder. When the virtual application is launched, both the software and the folder serving as its working environment are started. This creates a barrier between the application and the operating system, which eliminates conflicts between them. Application virtualization is provided by products such as Citrix XenApp, SoftGrid, and VMware ThinApp.
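The isolation described here can be pictured as a private layer placed over the system: the application reads system settings through the layer, but everything it writes stays in the layer, so the host system is never modified. Below is a minimal Python sketch using `collections.ChainMap`, whose writes always go to its first mapping. The setting keys are hypothetical stand-ins for registry entries and configuration files; real products work at the level of file-system and registry calls, not like this code.

```python
from collections import ChainMap

# Hypothetical host settings standing in for registry entries and config files.
host_settings = {"Software/Shared/Version": "1.0", "User/Theme": "light"}


def virtual_environment(app_layer):
    """Give an application a view where reads fall through to the host settings,
    but all writes and deletions affect only the application's private layer."""
    return ChainMap(app_layer, host_settings)


legacy_app_layer = {}
view = virtual_environment(legacy_app_layer)
view["Software/Shared/Version"] = "0.9"  # the legacy app needs an older value

print(view["Software/Shared/Version"])           # 0.9 (what the app sees)
print(host_settings["Software/Shared/Version"])  # 1.0 (the host is unchanged)
print(view["User/Theme"])                        # light (read through from the host)
```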

Classic software virtualization requires a host operating system on top of which the virtualization platform runs. This platform emulates the hardware components and manages resources on behalf of the guest system.

These methods are quite difficult to implement. Their main disadvantage is a significant performance loss caused by the resources the host system consumes.

Security is also noticeably weaker, because whoever gains control of the host operating system automatically gains control of the guest systems.

Unlike software methods, with the help of virtualization hardware it is possible to obtain isolated guest systems that are directly controlled by the hypervisor.

In practice, hardware virtualization does not differ fundamentally from the software approach: it is essentially the same virtualization process, backed by hardware support.

Let us also look at the main types of virtualization applied to the various components of an IT infrastructure.

Resource virtualization means dividing one physical server into several parts, each of which appears to the user as a separate server. This method is implemented at the level of the operating system kernel. Its main advantage is that virtual servers running at the kernel level are practically as fast as the physical server itself, which makes it possible to run hundreds of virtual servers on one physical machine.

One example of resource partitioning is the OpenSolaris Network Virtualization and Resource Control project, which lets you create several virtual network interfaces on top of a single physical one.
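To make "several virtual interfaces on one physical one" concrete, here is a minimal Python sketch of the core step: the physical interface receives every frame and hands it to the virtual interface that owns the destination MAC address. The class, interface names, and addresses are hypothetical, broadcast and multicast traffic is ignored, and this does not reflect the OpenSolaris project's actual code or API.

```python
from collections import defaultdict
from typing import Optional


class PhysicalNic:
    """Hypothetical model of one physical NIC shared by several virtual NICs."""

    def __init__(self) -> None:
        self._owner: dict[str, str] = {}  # MAC address -> VNIC name
        self.queues: dict[str, list[bytes]] = defaultdict(list)  # per-VNIC receive queues

    def add_vnic(self, name: str, mac: str) -> None:
        # Each virtual interface gets its own MAC address on the shared link.
        self._owner[mac.lower()] = name

    def receive(self, dst_mac: str, payload: bytes) -> Optional[str]:
        """Queue a frame on the VNIC owning dst_mac; drop it (return None) otherwise."""
        name = self._owner.get(dst_mac.lower())
        if name is not None:
            self.queues[name].append(payload)
        return name


nic = PhysicalNic()
nic.add_vnic("vnic0", "02:00:00:00:00:01")
nic.add_vnic("vnic1", "02:00:00:00:00:02")
nic.receive("02:00:00:00:00:02", b"hello")   # delivered to vnic1
nic.receive("02:00:00:00:00:99", b"lost")    # unknown MAC, dropped
```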

This type of virtualization also covers merging, distributing, and pooling resources. For example, symmetric multiprocessing (SMP) systems combine several processors, and RAID arrays and disk managers combine many disks into one large logical disk. This subtype often also includes file systems that are abstracted from the storage they are built on (VMware VMFS, Solaris/OpenSolaris ZFS, NetApp WAFL).
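Combining disks into one logical disk boils down to an address translation. One common scheme is striping, as in RAID 0; the Python sketch below shows it under that assumption. Redundancy (mirroring, parity) is not modeled, and a disk manager may instead simply concatenate disks; the function name is hypothetical.

```python
def stripe_location(logical_block: int, n_disks: int, stripe_blocks: int) -> tuple[int, int]:
    """Map a logical block of a RAID 0 style striped volume to (disk, block on that disk).

    Consecutive runs of `stripe_blocks` logical blocks go to disk 0, 1, ..., n_disks - 1
    in turn, then wrap around to disk 0 one stripe further down.
    """
    if logical_block < 0 or n_disks < 1 or stripe_blocks < 1:
        raise ValueError("invalid geometry or block number")
    stripe_index, offset = divmod(logical_block, stripe_blocks)
    disk = stripe_index % n_disks        # which disk holds this stripe
    row = stripe_index // n_disks        # full stripes preceding it on that disk
    return disk, row * stripe_blocks + offset


# Three disks, four blocks per stripe: logical blocks 0-3 are on disk 0, 4-7 on disk 1,
# 8-11 on disk 2, then 12-15 are back on disk 0 (its blocks 4-7).
print(stripe_location(13, n_disks=3, stripe_blocks=4))   # (0, 5)
```

The user sees one disk with `n_disks` times the capacity of a member disk; the mapping spreads consecutive data across all members so they can be read in parallel.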


Users are hearing more and more about "virtualization". It is considered cool and modern, but far from every user clearly understands what virtualization is, either in general or in its specific forms. Let's try to shed some light on the subject, focusing on server virtualization systems. Today these technologies are cutting-edge because they offer many advantages in both security and administration.

What is virtualization?

Let's start with the simplest thing: a definition of the term itself. Note right away that the Internet offers manuals on this topic, such as the PDF reference book "Server Virtualization for Dummies", but an unprepared reader may run into a large number of incomprehensible definitions there. We will therefore try to explain the essence of the matter in plain terms.

When discussing server virtualization technology, let's begin with the basic concept. What is virtualization? Simple logic suggests that the term describes creating an emulator (a likeness) of some physical or software component: a virtual model of something that does not physically exist. There are, however, some nuances.

Main types of virtualization and technologies used

Virtualization covers three main areas:

- presentation;

- applications;

- servers.

The simplest example is the use of so-called terminal servers, which provide users with their own computing resources. The user's program actually runs on the server, and the user sees only the result. This approach lowers the system requirements for the user's terminal, which may be outdated and unable to handle the required computations on its own.

Such technologies are also widely used for applications, for example to virtualize a 1C server. The program runs on a single isolated server, and a large number of remote users access it. The software is updated from a single source, and the whole system benefits from a high level of security.

Finally, server virtualization means creating an interactive computing environment that fully reproduces the configuration of real, physical ("hardware") machines. What does this mean? In short, on one computer you can create one or more additional computers that run in real time as if they physically existed (server virtualization systems are discussed in more detail below).

It does not matter which operating system is installed on each such virtual machine; by and large, this has no effect on the host OS or on the other virtual machines. This is similar to computers with different operating systems interacting on a local network, except that virtual machines need not be connected to each other.

Equipment selection

One obvious and indisputable advantage of virtual servers is lower hardware cost when building a fully functional hardware and software infrastructure. Suppose two programs each need 128 MB of RAM to work properly but cannot be installed on the same physical server. What can you do? You can buy two separate servers with 128 MB each and install one program on each, or you can buy one server with 256 MB of RAM, create two virtual servers on it, and install one application in each.

In the second case, RAM is used more efficiently, and the cost is significantly lower than buying two independent machines. But the benefits do not stop there.
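The same reasoning extends to more than two programs: consolidation is a packing problem, fitting virtual servers' memory needs onto as few hosts as possible. Below is a minimal Python sketch using first-fit decreasing, a standard bin-packing heuristic that is not guaranteed to be optimal. It assumes RAM is the only constraint and ignores hypervisor overhead; it is an illustration, not what any particular product does.

```python
def first_fit_decreasing(vm_ram_mb: dict[str, int], host_ram_mb: int) -> list[list[str]]:
    """Assign VMs to identical hosts, largest first, each to the first host with room.

    Only RAM is considered; hypervisor and guest OS overhead are ignored.
    """
    hosts: list[list[str]] = []   # VM names placed on each host
    free: list[int] = []          # remaining RAM on each host
    for name, need in sorted(vm_ram_mb.items(), key=lambda kv: kv[1], reverse=True):
        if need > host_ram_mb:
            raise ValueError(f"{name} needs {need} MB, more than a whole host has")
        for i, room in enumerate(free):
            if need <= room:
                hosts[i].append(name)
                free[i] -= need
                break
        else:  # no existing host had room: add another one
            hosts.append([name])
            free.append(host_ram_mb - need)
    return hosts


# Two programs needing 128 MB each share one host only if it has at least 256 MB.
print(first_fit_decreasing({"app_a": 128, "app_b": 128}, host_ram_mb=256))  # [['app_a', 'app_b']]
print(first_fit_decreasing({"app_a": 128, "app_b": 128}, host_ram_mb=128))  # [['app_a'], ['app_b']]
```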

Security Benefits

A server infrastructure usually consists of several machines, each performing specific tasks. For security reasons, system administrators install Active Directory domain controllers and Internet gateways on different servers rather than on the same one.

In an external attack, the gateway is always the first target. If a domain controller is installed on the same server, the Active Directory databases are very likely to be damaged, and in a targeted attack the attackers can take over everything. Restoring data from a backup is also a rather troublesome business, even though it takes relatively little time.

Looking at the issue from the other side, server virtualization lets you get around such installation restrictions and quickly restore the desired configuration, because the backup is stored with the virtual machine itself. Admittedly, some consider server virtualization with Windows Server (Hyper-V) less reliable in this respect.

Licensing also remains a rather contentious issue. For example, a Windows Server 2008 Standard license allows only one virtual machine to be run, Enterprise allows four, and Datacenter allows an unlimited number.
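The edition limits quoted above can be turned into a quick lookup of which editions cover a planned number of virtual machines with a single license. This Python sketch uses only those three figures; it is a deliberate simplification that ignores per-processor terms, client access licenses, and later licensing changes, so the actual license terms should always be checked.

```python
from typing import Optional

# Virtual machines one Windows Server 2008 license allows, as quoted above
# (None means unlimited). A simplification: real terms have more conditions.
VM_LIMITS: dict[str, Optional[int]] = {
    "Standard": 1,
    "Enterprise": 4,
    "Datacenter": None,
}


def editions_covering(vm_count: int) -> list[str]:
    """Editions whose single license covers running vm_count virtual machines."""
    return [
        edition
        for edition, limit in VM_LIMITS.items()
        if limit is None or vm_count <= limit
    ]


print(editions_covering(3))   # ['Enterprise', 'Datacenter']
```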

Administration Issues

Beyond security and cost savings, this approach (even when servers are virtualized with Windows Server) benefits above all the system administrators who maintain these machines or local networks.

Creating system backups is a frequent task. A conventional backup usually requires third-party software, and reading from optical media or even over the network is slower than the local disk subsystem. A virtual server, by contrast, can be cloned in a couple of clicks, and the working system can then be quickly deployed even on "clean" hardware, where it will run without problems.

In VMware vSphere, server virtualization lets you create and save so-called snapshots of a virtual machine: images of its state at a particular point in time. A machine's snapshots can be arranged in a tree structure, which makes restoring a virtual machine much easier. You can choose any restore point, rolling the state back and then forward again, something ordinary Windows installations can only dream of.
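The tree structure is what makes rolling back and then forward possible: each snapshot remembers its parent, and reverting to an older snapshot and then taking a new one starts a new branch instead of erasing the old one. The Python sketch below models only that structure. The class names are hypothetical and this is not the vSphere API; real snapshots store disk and memory deltas, not a small dictionary.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Snapshot:
    """One saved VM state; parent/children links form the snapshot tree."""
    name: str
    state: dict
    parent: Optional[Snapshot] = field(default=None, repr=False, compare=False)
    children: list[Snapshot] = field(default_factory=list, repr=False, compare=False)


class VirtualMachine:
    """Hypothetical VM model, not the vSphere API."""

    def __init__(self, state: dict) -> None:
        self.state = dict(state)
        self.current: Optional[Snapshot] = None  # snapshot the running state derives from

    def take_snapshot(self, name: str) -> Snapshot:
        # The new snapshot becomes a child of the one the VM is currently based on,
        # so taking a snapshot after a revert starts a new branch.
        snap = Snapshot(name, dict(self.state), parent=self.current)
        if self.current is not None:
            self.current.children.append(snap)
        self.current = snap
        return snap

    def revert_to(self, snap: Snapshot) -> None:
        # Any node may be chosen: an ancestor (roll back) or a node on
        # another branch (roll "forward"). Unsaved changes are discarded.
        self.state = dict(snap.state)
        self.current = snap


vm = VirtualMachine({"app": "1.0"})
base = vm.take_snapshot("clean install")
vm.state["app"] = "2.0"
upgraded = vm.take_snapshot("after upgrade")
vm.revert_to(base)                     # roll back
vm.state["app"] = "1.1"
patched = vm.take_snapshot("patched")  # second branch under "clean install"
vm.revert_to(upgraded)                 # roll forward to the other branch
print(vm.state)                        # {'app': '2.0'}
```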

Server virtualization programs

There is a huge number of applications for creating virtual machines. In the simplest case, you can virtualize a server with the native tools of Windows (Hyper-V is a built-in component).

However, this technology also has some drawbacks, so many people prefer software packages such as VMware, VirtualBox, QEMU, or even Microsoft Virtual PC. Although these applications have different names, the principles of working with them are much the same, apart from details and some nuances. Some of them can also virtualize Linux servers, but we will not consider these systems in detail, since most of our users still use Windows.

Server virtualization on Windows: the simplest solution

Starting with Windows 8 (and with Windows Server 2008 on the server side), Windows has included a built-in component called Hyper-V, which lets you create virtual machines with the system's own tools, without third-party software.

As in any other application of this kind, you can model the future computer by specifying the hard disk size, the amount of RAM, the presence of optical drives, and the characteristics of the graphics or sound chip; in short, everything found in the hardware of a conventional server.

Note, however, that the component itself must be enabled first: Hyper-V server virtualization is impossible until this feature is turned on in Windows.

In some cases, you may also need to enable hardware virtualization support (Intel VT-x or AMD-V) in the BIOS.
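On Windows, the `systeminfo` command reports in its "Hyper-V Requirements" section whether virtualization is enabled in firmware. The Python sketch below parses that text. It assumes English-language output laid out like the sample, which is modeled on typical output rather than copied from a specific system; on localized Windows the labels differ, and when a hypervisor is already running the line is not shown, so the function returns `None` in those cases.

```python
from typing import Optional


def firmware_virtualization_enabled(systeminfo_text: str) -> Optional[bool]:
    """Read the 'Virtualization Enabled In Firmware' line from systeminfo output.

    Returns True or False, or None if the line is absent (for example when a
    hypervisor is already running, or on a non-English Windows installation).
    """
    label = "Virtualization Enabled In Firmware:"
    for line in systeminfo_text.splitlines():
        if label in line:
            value = line.split(label, 1)[1].strip()
            return value.lower() == "yes"
    return None


# On Windows the text could be obtained with:
#   subprocess.run(["systeminfo"], capture_output=True, text=True).stdout
SAMPLE = """\
Hyper-V Requirements:      VM Monitor Mode Extensions: Yes
                           Virtualization Enabled In Firmware: No
                           Second Level Address Translation: Yes
                           Data Execution Prevention Available: Yes
"""
print(firmware_virtualization_enabled(SAMPLE))   # False
```

A `False` result here is the case where the BIOS setting mentioned above has to be switched on.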

Use of third party software products

Nevertheless, despite the built-in tools for virtualizing Windows servers, many experts consider this technology somewhat inefficient and even overly complicated. It is much easier to use a ready-made product that selects settings automatically and offers a virtual machine with greater capabilities and flexibility in management, configuration, and use.

We are talking about products such as Oracle VirtualBox, VMware Workstation, VMware vSphere, and others. For example, a VMware virtualization server can host several virtual computers that run separately, independently of one another. Such systems can be used for training, software testing, and so on.

It is worth noting separately that when testing software in a virtual machine, you can even run virus-infected programs: their effects will normally show only in the guest system and will not affect the host OS.

As for creating a computer inside the virtualization program, both VMware vSphere and Hyper-V use a wizard. Compared with the Windows tools, however, the VMware process looks somewhat simpler, since the program can offer something like templates or automatically calculate the necessary parameters for the future computer.

The main disadvantages of virtual servers

However, for all the advantages server virtualization gives system administrators and end users, such programs also have some significant drawbacks.

First, you cannot get more than the hardware has. A virtual machine uses the resources of the physical server (computer), and not all of them but only a strictly limited share. For virtual machines to work properly, the host's hardware configuration must therefore be powerful enough. On the other hand, buying one powerful server is still much cheaper than buying several less powerful ones.

Second, although several servers can be clustered so that, if one of them fails, its workload "moves" to another, Hyper-V does not provide this on its own: it requires a separately configured failover cluster. This is a clear minus in terms of fault tolerance.
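The "move to another server" idea amounts to restarting the failed host's virtual machines wherever capacity remains. The following Python sketch shows one simple placement rule (send each VM to the surviving host with the most free RAM, sometimes called worst fit). The function, host names, and sizes are hypothetical; RAM is the only resource considered, and real cluster software also handles shared storage, health checks, and restart priorities.

```python
def fail_over(
    placement: dict[str, list[str]],
    vm_ram: dict[str, int],
    host_ram: dict[str, int],
    failed: str,
) -> dict[str, list[str]]:
    """Restart the failed host's VMs on the surviving hosts.

    Each VM goes to the survivor with the most free RAM at that moment;
    RAM is the only resource considered.
    """
    survivors = {h: list(vms) for h, vms in placement.items() if h != failed}
    free = {h: host_ram[h] - sum(vm_ram[v] for v in vms) for h, vms in survivors.items()}
    for vm in placement.get(failed, []):
        target = max(free, key=free.get, default=None)
        if target is None or free[target] < vm_ram[vm]:
            raise RuntimeError(f"no surviving host has room for {vm}")
        survivors[target].append(vm)
        free[target] -= vm_ram[vm]
    return survivors


placement = {"h1": ["a", "b"], "h2": ["c"], "h3": []}
vm_ram = {"a": 2, "b": 2, "c": 1}      # GB, illustrative
host_ram = {"h1": 4, "h2": 4, "h3": 4}
print(fail_over(placement, vm_ram, host_ram, failed="h1"))
# {'h2': ['c', 'b'], 'h3': ['a']}
```

Failover only works if the surviving hosts keep enough spare capacity, which is one more reason the hardware has to be sized generously.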

Third, moving resource-intensive systems such as DBMSs or Microsoft Exchange Server (including its Mailbox Server role) into a virtual environment is clearly debatable: a noticeable slowdown is likely.

Fourth, a correctly working infrastructure of this kind cannot consist exclusively of virtual components. In particular, this applies to domain controllers: at least one of them should run on physical hardware and be available on the network from the start.

Fifth and finally, server virtualization carries another danger: a failure of the physical host or of the host operating system automatically shuts down every virtual machine that depends on it. This is the so-called single point of failure.
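The single point of failure can be quantified with the standard rule for components in series: if a virtual machine needs the host hardware, the host OS, and its own guest OS all to be up, and failures are independent, its availability is the product of theirs. The Python sketch below applies that rule; the 99% figures are purely illustrative assumptions, not measurements.

```python
import math


def availability_in_series(*components: float) -> float:
    """Availability of a service that needs every listed component to be up.

    Assumes failures are independent, so the probabilities multiply.
    """
    return math.prod(components)


# Illustrative figures only: a VM needs the host hardware, the host OS,
# and its own guest OS, each assumed to be up 99% of the time.
vm = availability_in_series(0.99, 0.99, 0.99)
print(f"{vm:.4f}")   # 0.9703
```

Every VM on the same host shares the first two factors, so a single host failure takes all of them down at once; no VM can be more available than its host.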

Summary

Nevertheless, despite these disadvantages, the advantages of such technologies clearly outweigh them. As for why server virtualization is needed, the main reasons are:

- less physical ("iron") hardware;

- lower heat output and energy consumption;

- lower costs, including equipment purchases, electricity bills, and licenses;

- simpler maintenance and administration;

- the ability to "migrate" operating systems and the servers themselves.

In fact, the advantages of these technologies go well beyond this list. Although some of the drawbacks may seem serious, in most cases they can be avoided by organizing the entire infrastructure properly and putting the necessary controls in place to keep it running smoothly.

Finally, for many readers the choice of software and the practical implementation of virtualization remain open questions. Here it is better to turn to specialists, since this article has only offered a general introduction to server virtualization and to whether implementing it makes sense.